
Architectures

1 - Logging architecture

Architecture overview

Logging follows a fixed routine. The system generates logs, which are collected and then forwarded to Insight. Insight stores the logs in the Audit Store, where the log records are used in areas such as forensics, alerts, reports, and dashboards. This section explains the logging architecture.

The ESA v10.1.0 supports only protectors with PEP server version 1.2.2+42 or later.

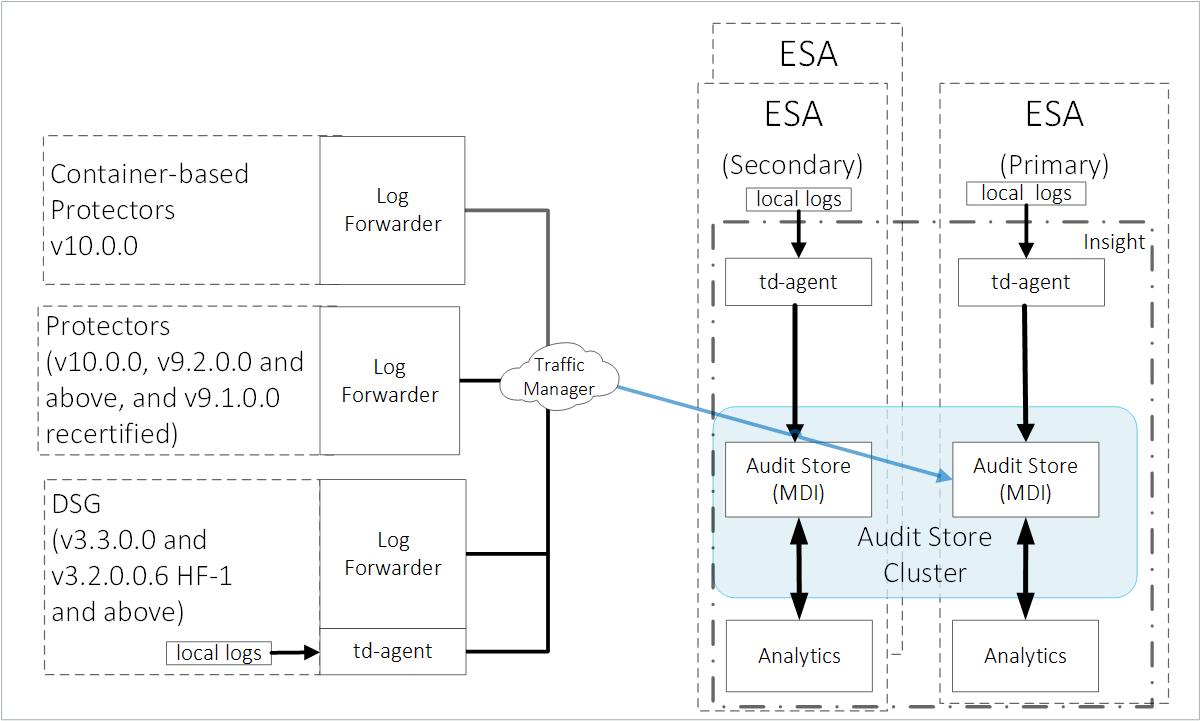

ESA:

The ESA has the td-agent service installed for receiving logs and sending them to Insight. Insight stores the logs in the Audit Store that is installed on the ESA. From here, the logs are analyzed by Insight and used in areas such as forensics, alerts, reports, dashboards, and visualizations. Additionally, logs are collected from the log files generated by the Hub Controller and Membersource services and sent to Insight. By default, all Audit Store nodes have all node roles, that is, Master-eligible, Data, and Ingest. A minimum of three ESAs is required to create a dependable Audit Store cluster that is protected from system crashes. The architecture diagram shows three ESAs.
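One way to see why three nodes is a sensible minimum is the majority rule that OpenSearch-style clusters apply when electing a master. The sketch below is illustrative only. It assumes the Audit Store cluster needs a strict majority of its master-eligible nodes to stay operational, which this section does not state explicitly, and the function names are hypothetical.

```python
def majority(master_eligible_nodes: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return master_eligible_nodes // 2 + 1


def tolerated_failures(master_eligible_nodes: int) -> int:
    """Nodes that can fail while a majority is still reachable (assumed rule)."""
    return master_eligible_nodes - majority(master_eligible_nodes)


if __name__ == "__main__":
    for nodes in (1, 2, 3):
        print(nodes, "node(s): majority =", majority(nodes),
              ", tolerated failures =", tolerated_failures(nodes))
```

Under this assumption, one or two nodes cannot survive the loss of any node, while three nodes can lose one and still keep a majority.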

Protectors:

The logging system is configured on the protectors to send logs to Insight on the ESA using the Log Forwarder.

DSG:

The DSG has the td-agent service installed. The td-agent forwards the appliance logs to Insight on the ESA. The Log Forwarder service forwards the data security operations-related logs, namely protect, unprotect, and reprotect, and the PEP server logs to Insight on the ESA.

Important: The gateway logs are not forwarded to Insight.

Container-based protectors

The container-based protectors are the Immutable Java Application Protector Container and the REST container. The Immutable Java Application Protector Container represents a new form factor for the Java Application Protector. The container is intended to be deployed in a Kubernetes environment.

The REST container represents a new form factor that is being developed for the Application Protector REST. The REST container is deployed on Kubernetes, residing on any of the Cloud setups.

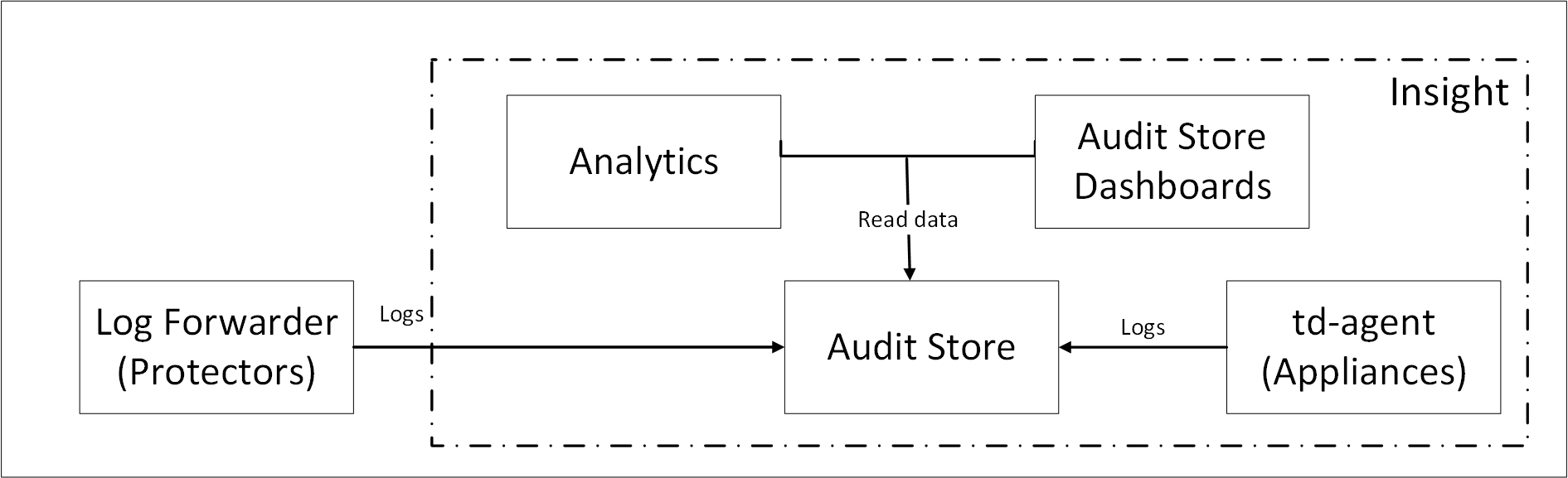

Components of Insight

The logging architecture comprises the solution for collecting logs and forwarding them to Insight. The various components of Insight are installed on an appliance or an ESA.

A brief overview of the Insight components is provided in the following figure.

Understanding Analytics

Analytics is a component that is configured when setting up the ESA. After it is installed, tools such as the scheduler, reports, rollover tasks, and signature verification tasks are available. These tools are used to maintain the Insight indexes.

Understanding the Audit Store Dashboards

The logs stored in the Audit Store hold valuable data. To make this information easy to use, Insight provides tools such as dashboards and visualizations. These tools are used to view and analyze the data in the Audit Store. The ESA logs are displayed on the Discover screen of the Audit Store Dashboards.

Understanding the Audit Store

The Audit Store is the database of the logging ecosystem. The main task of the Audit Store is to receive all the logs, store them, and provide the information when log-related data is requested. It is very versatile and processes data fast.

The Audit Store is a component that is installed on the ESA during the installation. The Audit Store is scalable, so additional nodes can be added to the Audit Store cluster.

Understanding the td-agent

The td-agent forms an integral part of the Insight ecosystem. It is responsible for sending logs from the appliance to Insight. It is the td-agent service that is configured to send and receive logs. The service is installed, by default, on the ESA and DSG.

Based on the installation, the following configurations are performed for the td-agent:

- Insight on the local system: In this case, the td-agent is configured to collect the logs and send them to Insight on the local system.

- Insight on a remote system: In this case, Insight is not installed on the local system, such as the DSG, but is installed on the ESA. The td-agent is configured to forward logs securely to Insight on the ESA.

Understanding the Log Forwarder

The Log Forwarder is responsible for forwarding data security operation logs to Insight in the ESA. In cases when the ESA is unreachable, the Log Forwarder handles the logs until the ESA is available.

For Linux-based protectors, such as the Oracle Database Protector for Linux, if the connection to the ESA is lost, then the Log Forwarder starts collecting the logs in the memory cache. If the ESA is still unreachable after the cache is full, then the Log Forwarder continues collecting the logs and stores them on disk. When the connection to the ESA is restored, the collected logs are forwarded to Insight. The default memory cache for collecting logs is 256 MB. If the filesystem for Linux protectors is not EXT4 or XFS, then the logs are not saved to disk after the cache is full.

For information about updating the cache limit, refer here.

The following table provides information about how the Log Forwarder handles logs in different situations.

| If… | then the Log Forwarder… |
|---|---|
| Connection to ESA is lost | Starts collecting logs in the in-memory cache based on the cache limit defined. |
| Connection to ESA is lost and the cache is full | For Linux-based protectors, the Log Forwarder continues to collect the logs and stores them on disk. If the disk is full, then all the cache files are emptied and the Log Forwarder continues to run. For Windows-based protectors, the Log Forwarder starts discarding the logs. |
| Connection to ESA is restored | Forwards logs to Insight on the ESA. |
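The buffering behavior in the table can be modeled with a short simulation. This is a simplified sketch, not the Log Forwarder implementation: the class name, its attributes, and the byte-counting rule are hypothetical, and the disk-full case is left out.

```python
from collections import deque


class BufferingForwarder:
    """Toy model of the Log Forwarder buffering rules described above."""

    def __init__(self, memory_limit_bytes, disk_supported=True):
        self.memory_limit_bytes = memory_limit_bytes  # the documented default is 256 MB
        self.disk_supported = disk_supported  # True models a Linux protector on EXT4 or XFS
        self.connected = True
        self.memory = deque()
        self.memory_bytes = 0
        self.disk = deque()
        self.dropped = 0
        self.sent = []

    def submit(self, record):
        """Accept one log record and route it according to the connection state."""
        if self.connected:
            self.sent.append(record)
            return
        size = len(record.encode("utf-8"))
        if self.memory_bytes + size <= self.memory_limit_bytes:
            # Connection lost: collect in the in-memory cache first.
            self.memory.append(record)
            self.memory_bytes += size
        elif self.disk_supported:
            # Cache full: keep collecting and store on disk.
            self.disk.append(record)
        else:
            # Cache full and no disk buffering: the record is discarded.
            self.dropped += 1

    def reconnect(self):
        """Connection restored: forward buffered records, oldest first."""
        self.connected = True
        self.sent.extend(self.memory)
        self.sent.extend(self.disk)
        self.memory.clear()
        self.disk.clear()
        self.memory_bytes = 0


if __name__ == "__main__":
    forwarder = BufferingForwarder(memory_limit_bytes=10)
    forwarder.connected = False
    for record in ["aaaaa", "bbbbb", "ccccc"]:
        forwarder.submit(record)
    print(list(forwarder.memory), list(forwarder.disk))
    forwarder.reconnect()
    print(forwarder.sent)
```

With a 10-byte limit, the first two 5-byte records fit in memory and the third spills to disk. Setting `disk_supported=False` models the case where records are discarded once the cache is full.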

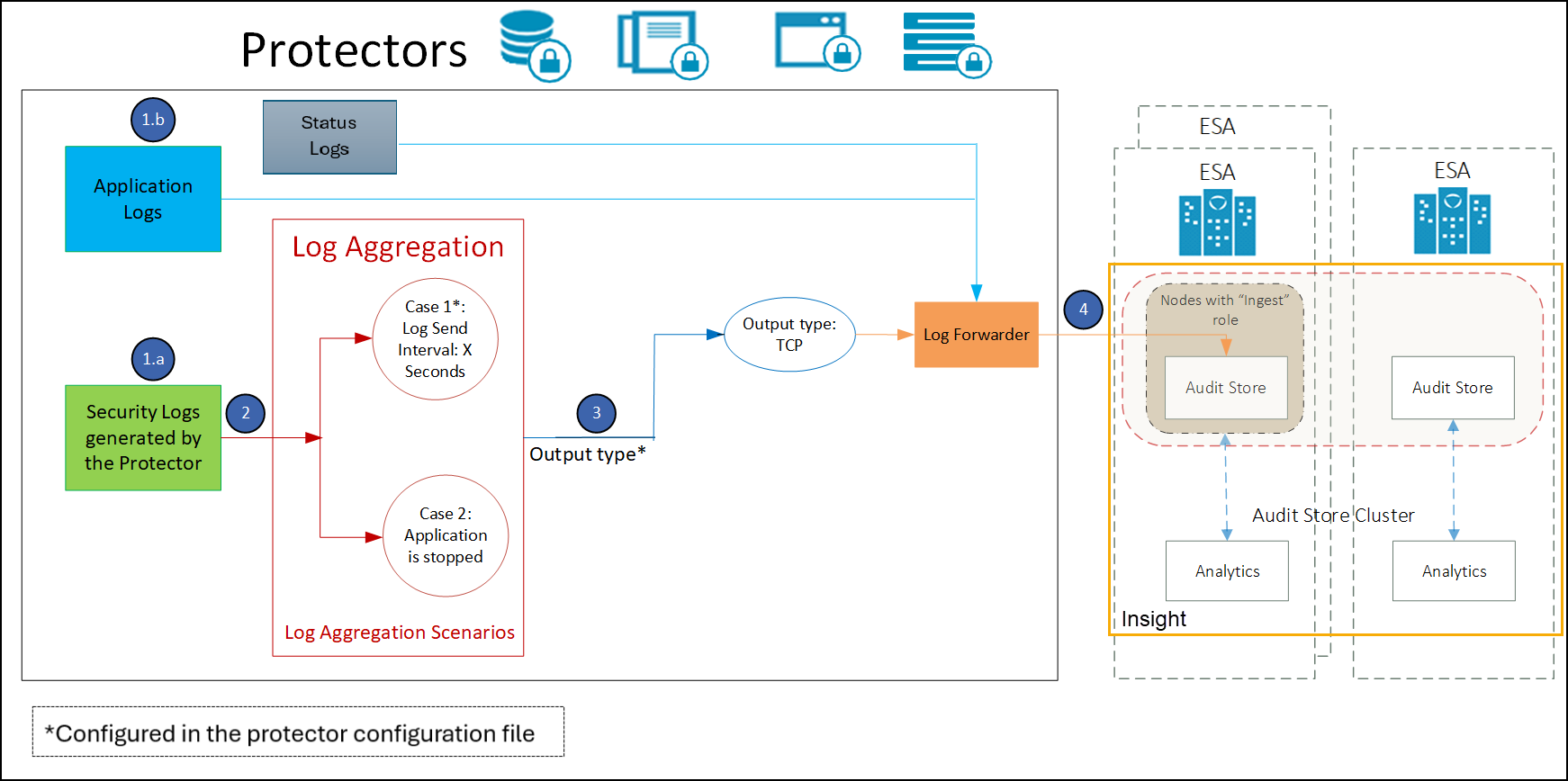

Understanding the log aggregation

The architecture, the configurations, and the workflow provided here describe the log aggregation feature.

Log aggregation happens within the protector.

- The protector flushes all security audits once every second, by default. The number of security logs generated every second varies with the number of users, data elements, and operations involved. The operations include protect, unprotect, and reprotect.

- Fluent Bit, by default, sends one batch every 10 seconds. Each batch takes all the pending security and application logs.

The following diagram describes the architecture and the workflow of the log aggregation.

- The security logs generated by the protectors are aggregated in the protectors.

- The application logs are not aggregated and they are sent directly to the Log Forwarder.

- The security logs are aggregated and flushed at specific time intervals.

- The aggregated security logs from the Log Forwarder are forwarded to Insight.

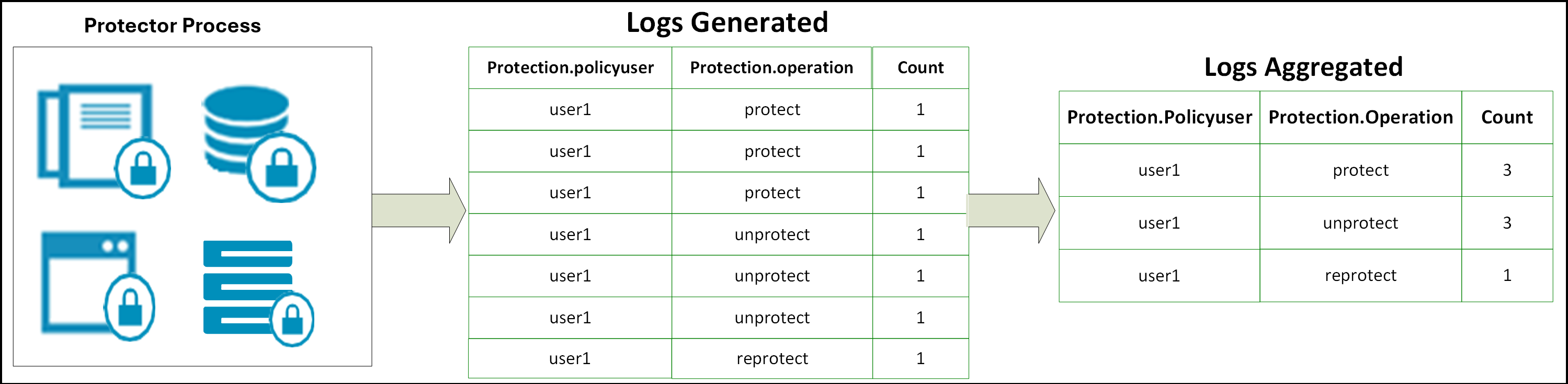

The following diagram illustrates how similar logs are aggregated.

Similar security logs are aggregated after the log send interval or when an application is stopped.

For example, if 30 similar protect operations are performed simultaneously, then a single log will be generated with a count of 30.
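The counting idea can be sketched in a few lines. This is an illustration under stated assumptions: the source does not define which fields make two logs "similar", so the `(user, data_element, operation)` key and the output field names here are hypothetical.

```python
from collections import Counter


def aggregate(events):
    """Collapse similar security events into one log record with a count.

    Each event is a (user, data_element, operation) tuple. The choice of
    these three fields as the similarity key is an assumption.
    """
    counts = Counter(events)
    return [
        {"user": user, "data_element": element, "operation": operation, "count": count}
        for (user, element, operation), count in counts.items()
    ]


if __name__ == "__main__":
    events = [("alice", "ssn", "protect")] * 30
    print(aggregate(events))
```

Thirty identical protect events produce a single record with a count of 30, while events that differ in any key field produce separate records.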

2 - Overview of the Protegrity logging architecture

Overview of logging

Protegrity software generates comprehensive logs. The logging infrastructure generates a huge number of log entries that take time to read. The enhanced logging architecture consolidates logs in Insight. Insight stores the logs in the Audit Store and provides tools to make it easier to view and analyze log data.

When a user performs an operation using Protegrity software, or interacts with Protegrity software directly, or indirectly through other software or another interface, a log entry is generated. This entry is stored in the Audit Store with other similar entries. A log entry contains valuable information about the interaction between the Protegrity software and a user or other systems.

A log entry might contain the following information:

- Date and time of the operation.

- User who initiated the operation.

- Operation that was initiated.

- Systems involved in the operation.

- Files that were modified or accessed.
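The fields listed above can be pictured as a simple record. The sketch below is illustrative only: the class and field names are hypothetical and do not reflect the actual Audit Store schema.

```python
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass
class LogEntry:
    """Hypothetical shape of a log entry, based on the fields listed above."""

    timestamp: datetime  # date and time of the operation
    user: str  # user who initiated the operation
    operation: str  # operation that was initiated
    systems: List[str] = field(default_factory=list)  # systems involved
    files: List[str] = field(default_factory=list)  # files modified or accessed

    def to_dict(self):
        """Return a plain dictionary with an ISO 8601 timestamp string."""
        data = asdict(self)
        data["timestamp"] = self.timestamp.isoformat()
        return data


if __name__ == "__main__":
    entry = LogEntry(
        timestamp=datetime(2024, 1, 1, tzinfo=timezone.utc),
        user="alice",
        operation="protect",
        systems=["protector-01"],
    )
    print(entry.to_dict())
```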

As the transactions build up, the volume of logs generated also increases. Every day, many business transactions, inter-process activities, interactivity-based activities, system-level activities, and other transactions take place, resulting in a huge number of log entries. All these logs take a lot of time and space to store and process.

Evolution of logging in Protegrity

Every system had its own advantages and disadvantages. Over time, the logging system evolved to reduce the disadvantages of the existing system and also improve on the existing features. This ensured that a better system was available that provided more information and at the same time reduced the processing and storage overheads.

Logging in legacy products

In legacy Protegrity platforms, that is version 8.0.0.0 and earlier, security events were collected in the form of logs. These events were a list of transactions when the protection, unprotection, and reprotection operations were performed. The logs were delivered as a part of the customer Protegrity security solution. This system allowed tracking of the operations and also provided information for troubleshooting the Protegrity software.

However, this had disadvantages due to the volume and the granularity of the logs generated. When many security operations were performed, the volume of logs kept increasing. This made it difficult for the platform to keep track of everything. When the volume increased beyond a manageable limit, customers had to turn off logging for successful attempts to retrieve protected data in the clear.

The logs collected reported the security operations performed. However, the exact number of operations performed was difficult to record. This inconsistency existed across the various protectors. The SDKs could provide individual counts of the operations performed while database protectors could not provide the exact count of the operations performed.

To solve this issue of obtaining exact counts, a capability called metering was added to the Protegrity products. Metering provided a count of the total number of security events. Even with metering, storage was an issue, because one audit log was generated in PostgreSQL in each ESA. Cross-replication of logs across ESAs was a challenge because there was no way to automatically replicate logs across ESAs.

New logging infrastructure

Protegrity continues to improve on the products and solutions provided. Starting from version 8.1.0.0, a new and robust logging architecture is introduced on the ESA. This new system improves on the way audit logs are created and processed on the protectors. The logs are processed and aggregated according to the event being performed. For example, if 40 protect operations are performed on the protector, then one log with the count 40 is created instead of 40 individual logs, one for each operation. This reduces the number of logs generated and at the same time retains the quality of the information generated.

The audit logs that are created provide a lot of information about the event being performed on the protector. In addition to system events and protection events, the audit log also holds information for troubleshooting the protector and the security events on the protector. This solves the issue of granularity of the logs that existed in earlier systems. An advantage of this architecture is that the logs help track the working of the system. It also allows monitoring the system for any issues, both in the working of the system and from a security perspective.

The new architecture uses software such as Fluent Bit and Fluentd. These allow logs to be transported from the protector to the ESA over a secure, encrypted line. This ensures the safety and security of the information. The new architecture also used Elasticsearch for replicating logs across ESAs. This made the logging system more robust and protected the data from being lost in the case of an ESA failure. Over iterations, Elasticsearch was upgraded with additional security using Open Distro. From version 9.1.0.0, OpenSearch was introduced, which improved the logging architecture further. These software components provided the configuration flexibility needed for a better logging system.

From version 9.2.0.0, Insight is introduced, which allows the logs to be visualized and various reports to be created for monitoring the health and the security of the system. Additionally, from ESA version 9.2.0.0 and protector versions 9.1.0.0 or 9.2.0.0, the new logging system has been improved even further. It is now possible to view how many security operations the Protegrity solution has delivered and which Protegrity protectors are being used in the solution.

The audit logs generated are important for a robust security solution and are stored in Insight on the ESA. Since the volume of logs generated has been reduced in comparison to legacy solutions, the logs are always received by the ESA. Thus, the capability to turn off logging is no longer required and has been deprecated. The new logging architecture offers a wide range of tools and features for managing logs. When the volume of logs is very large, the logs can be archived using Index Lifecycle Management (ILM) to the short-term archive or long-term archive. This frees up the system and resources and at the same time keeps the logs available when required in the future.

For more information about ILM, refer here.

The process for archiving logs can also be automated using the scheduler provided with the ESA. In addition to archiving logs, the processes for auto generating reports, rolling over the index, and performing signature verification can also be automated.

For more information about scheduling tasks, refer here.