The Documentation is a repository for Protegrity product documentation. The documentation is available in the HTML format and can be viewed using your browser. You can also print and download the .pdf files of the required product documentation as per your requirements.

This is the multi-page printable view of this section. Click here to print.

Documentation

- 1: Installation

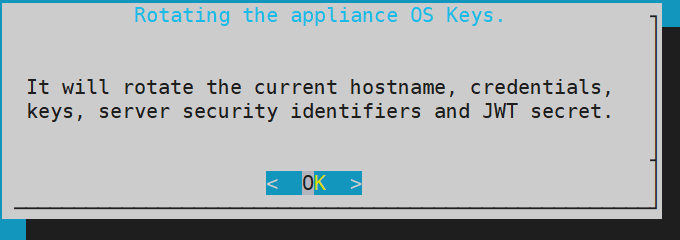

- 1.1: Overview of installation

- 1.2: System Requirements

- 1.3: Partitioning of Disk on ESA

- 1.4: Installing the ESA

- 1.4.1: Installing the ESA On-Premise

- 1.4.2: Installing ESA on Cloud Platforms

- 1.4.3: Installing ESA on VMware

- 1.5: Configuring the ESA

- 1.6: Verifying the ESA installation from the Web UI

- 1.7: Initializing the Policy Information Management (PIM) Module

- 1.8: Configuring the ESA in a Trusted Appliances Cluster (TAC)

- 1.9: Creating an Audit Store Cluster

- 2: Configuration

- 2.1: Sending logs to an external security information and event management (SIEM)

- 2.2: Configuring a Trusted Appliance Cluster (TAC) without Consul Integration

- 2.3: Configuring the IP Address for the Docker Interface

- 2.4: Configuring ESA

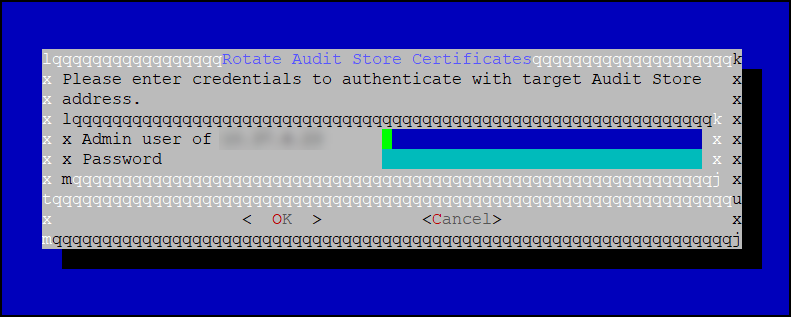

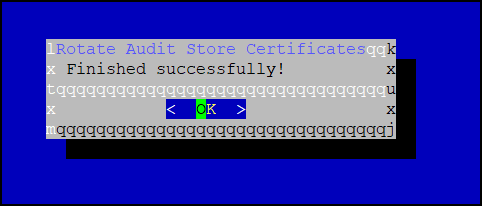

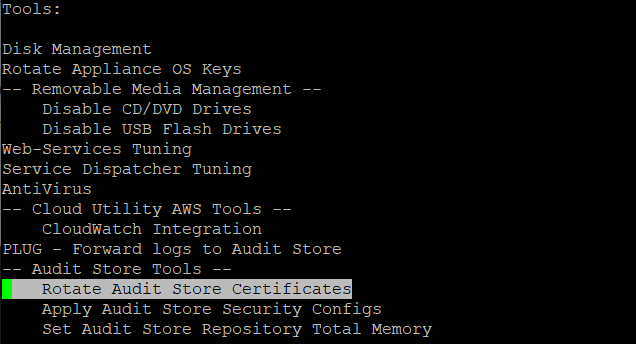

- 2.4.1: Rotating Insight certificates

- 2.4.2: Updating configurations after changing the domain name

- 2.4.3: Updating the IP address of the ESA

- 2.4.4: Updating the host name or domain name of the ESA

- 2.4.5: Updating Insight custom certificates

- 2.4.6: Removing an ESA from the Audit Store cluster

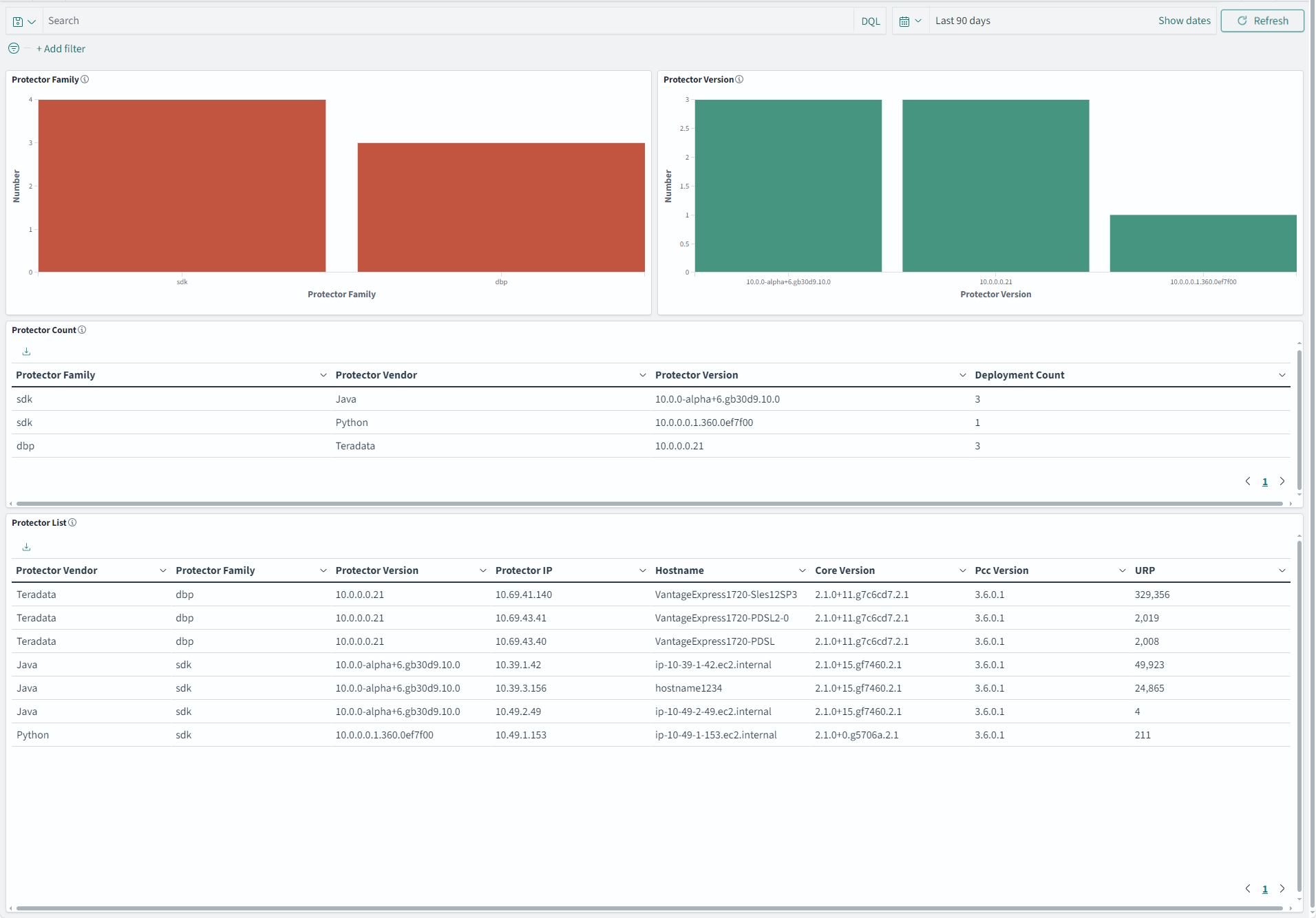

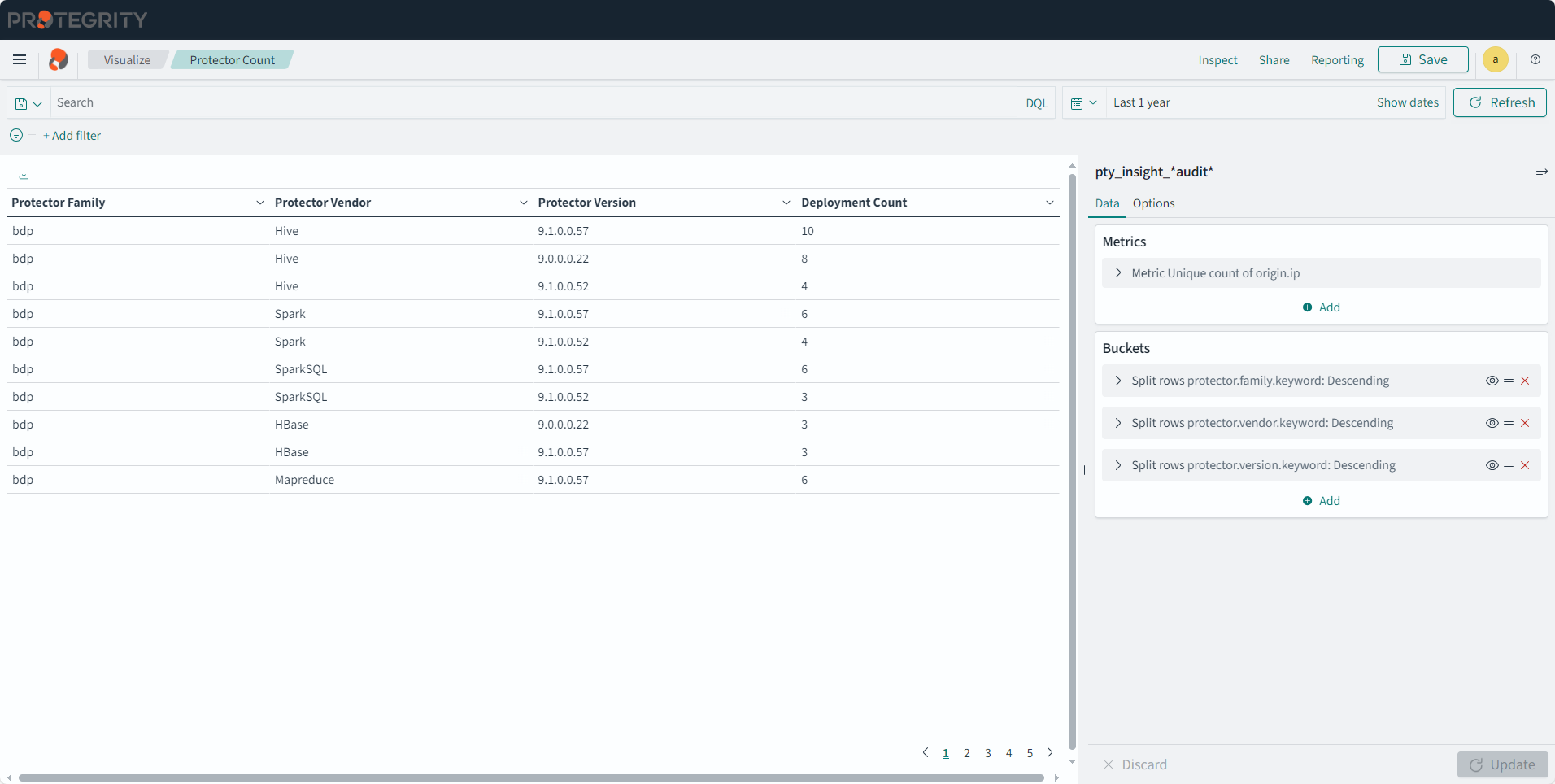

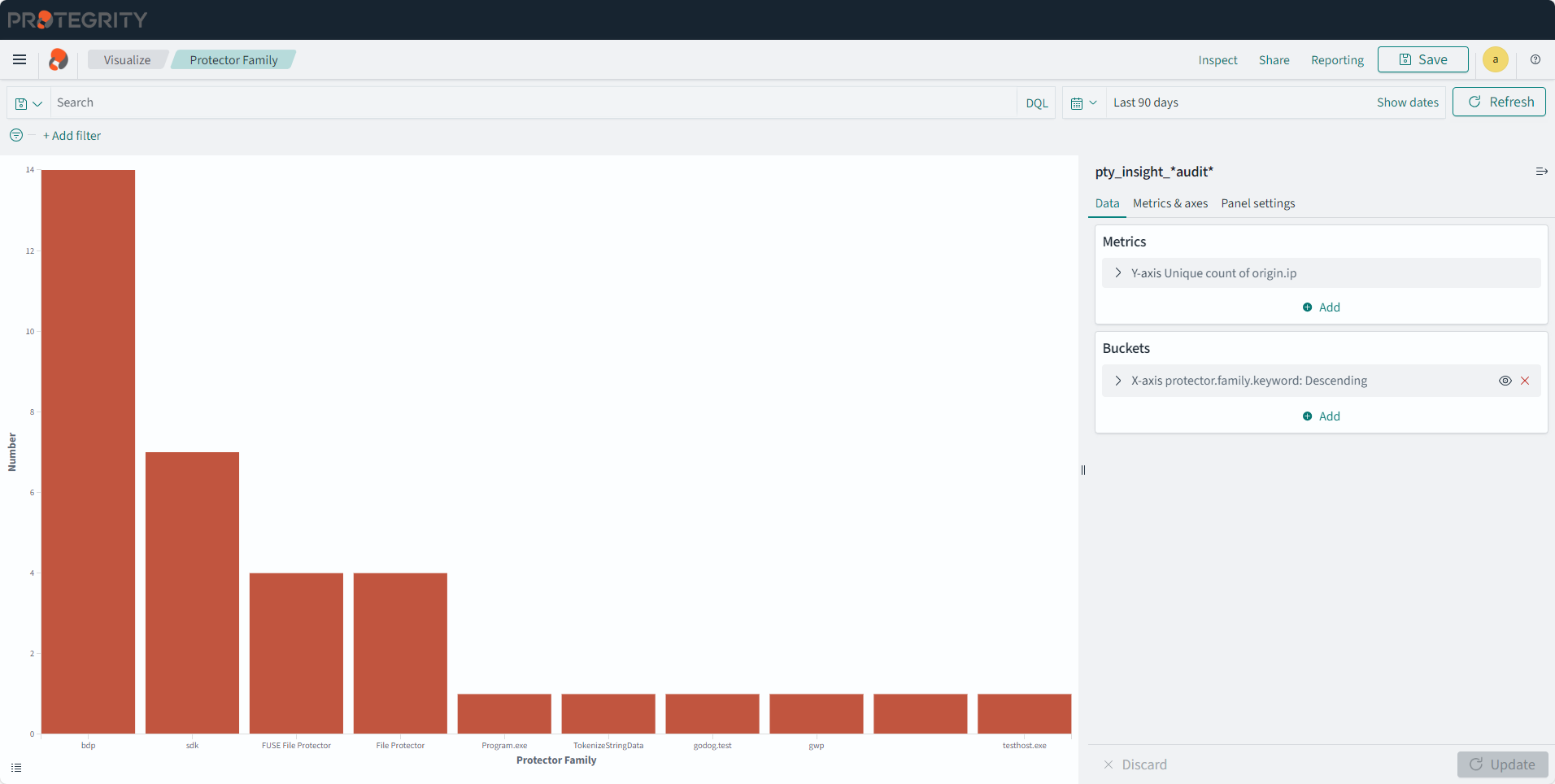

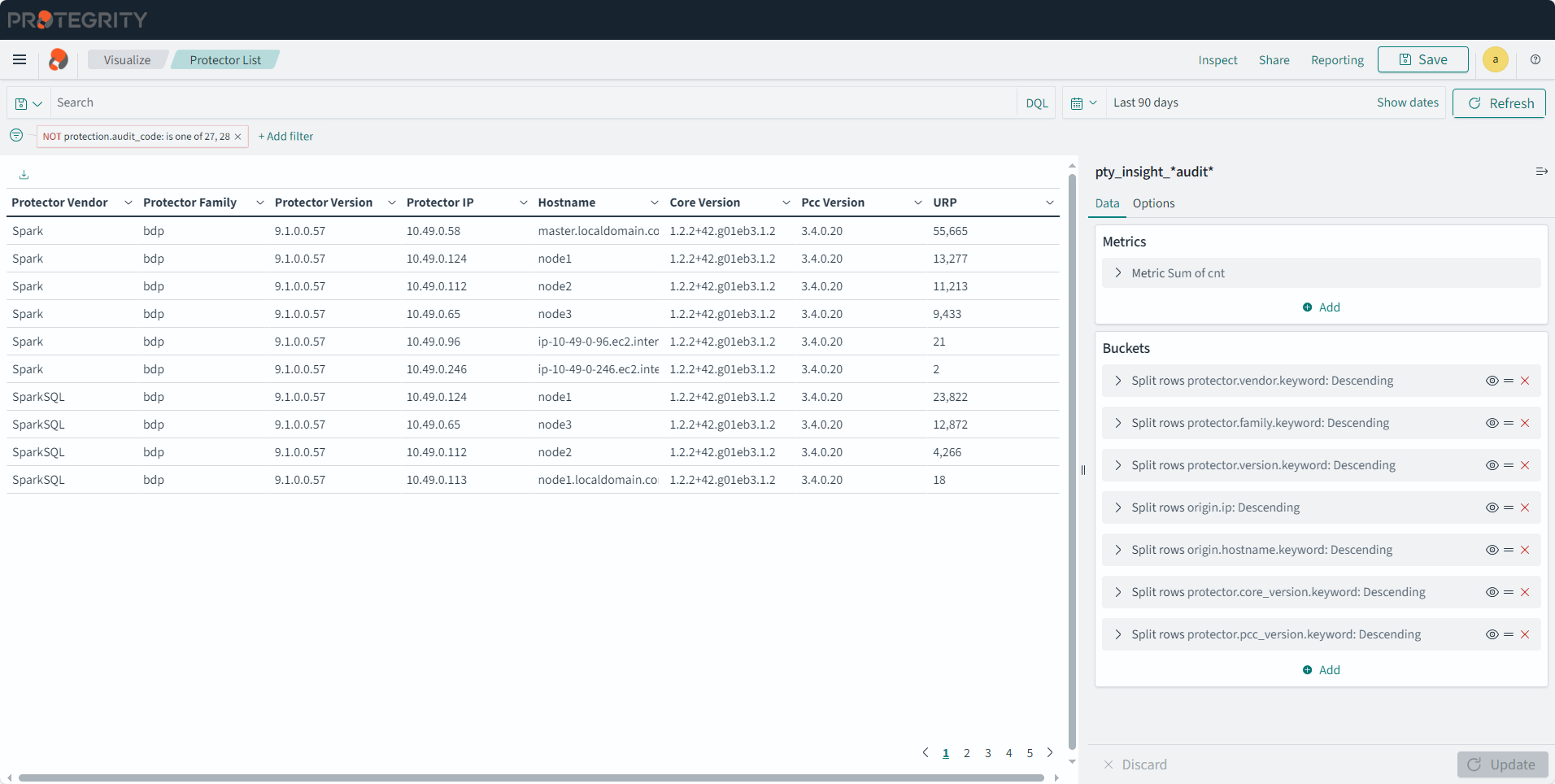

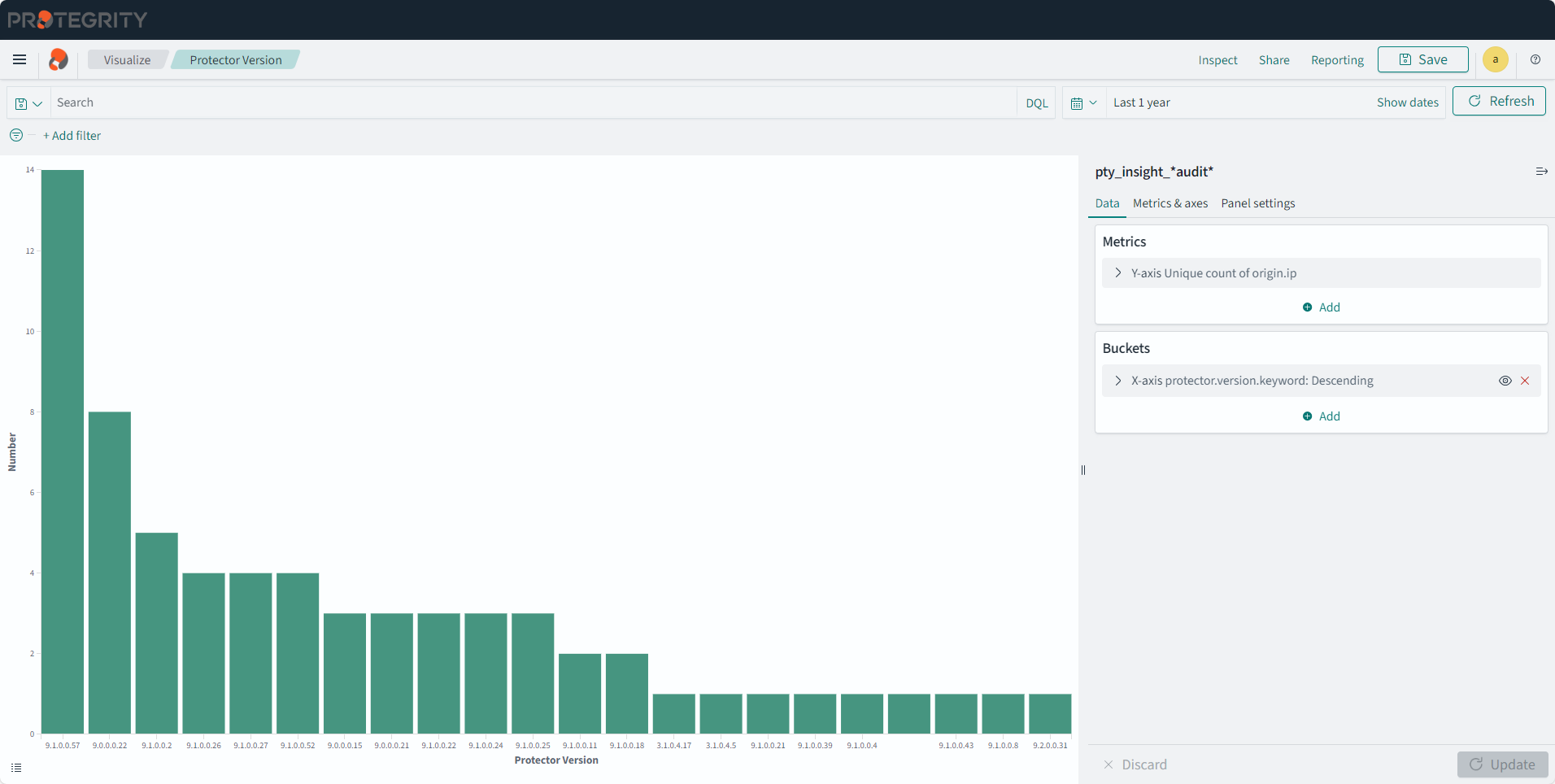

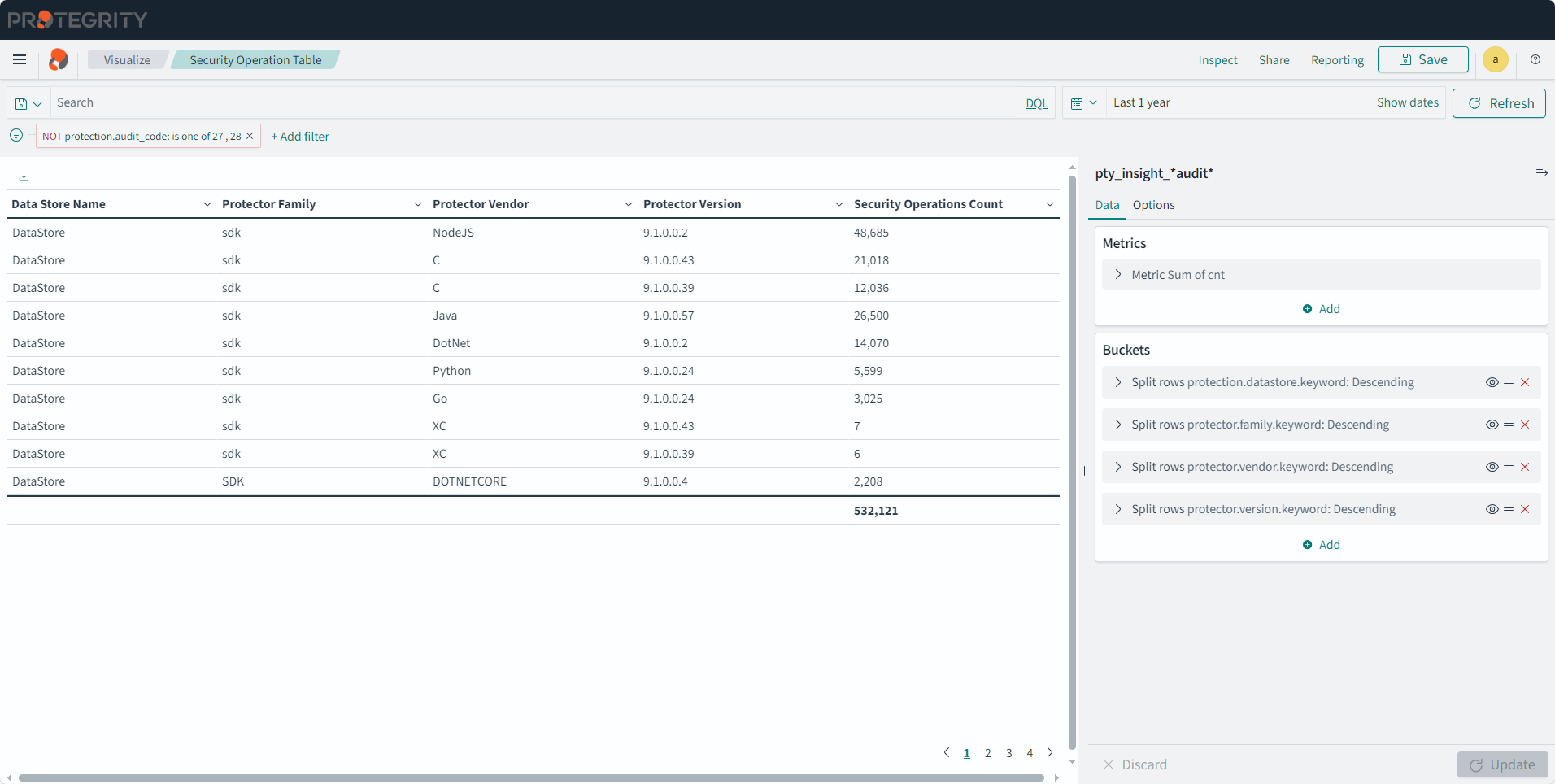

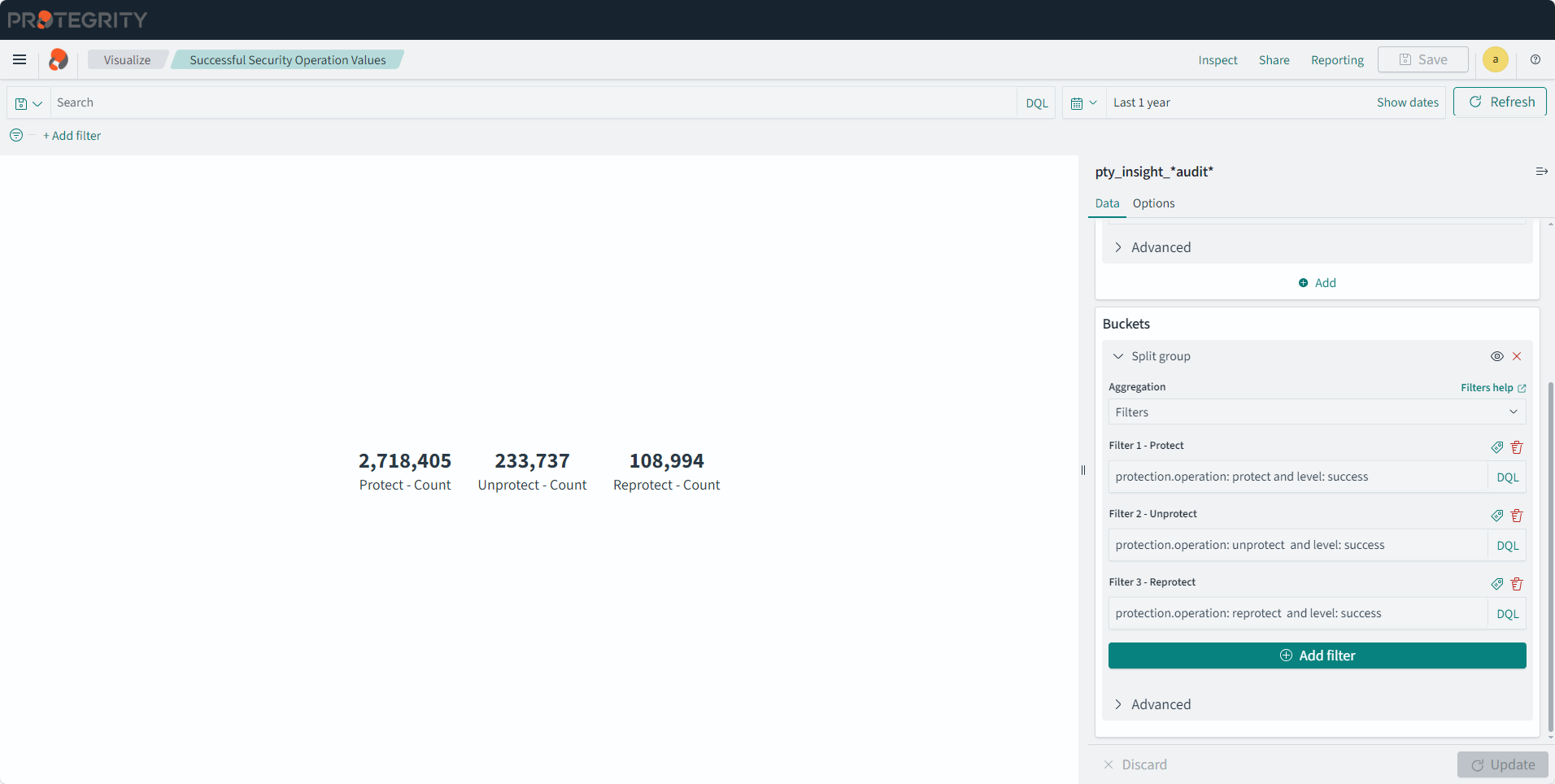

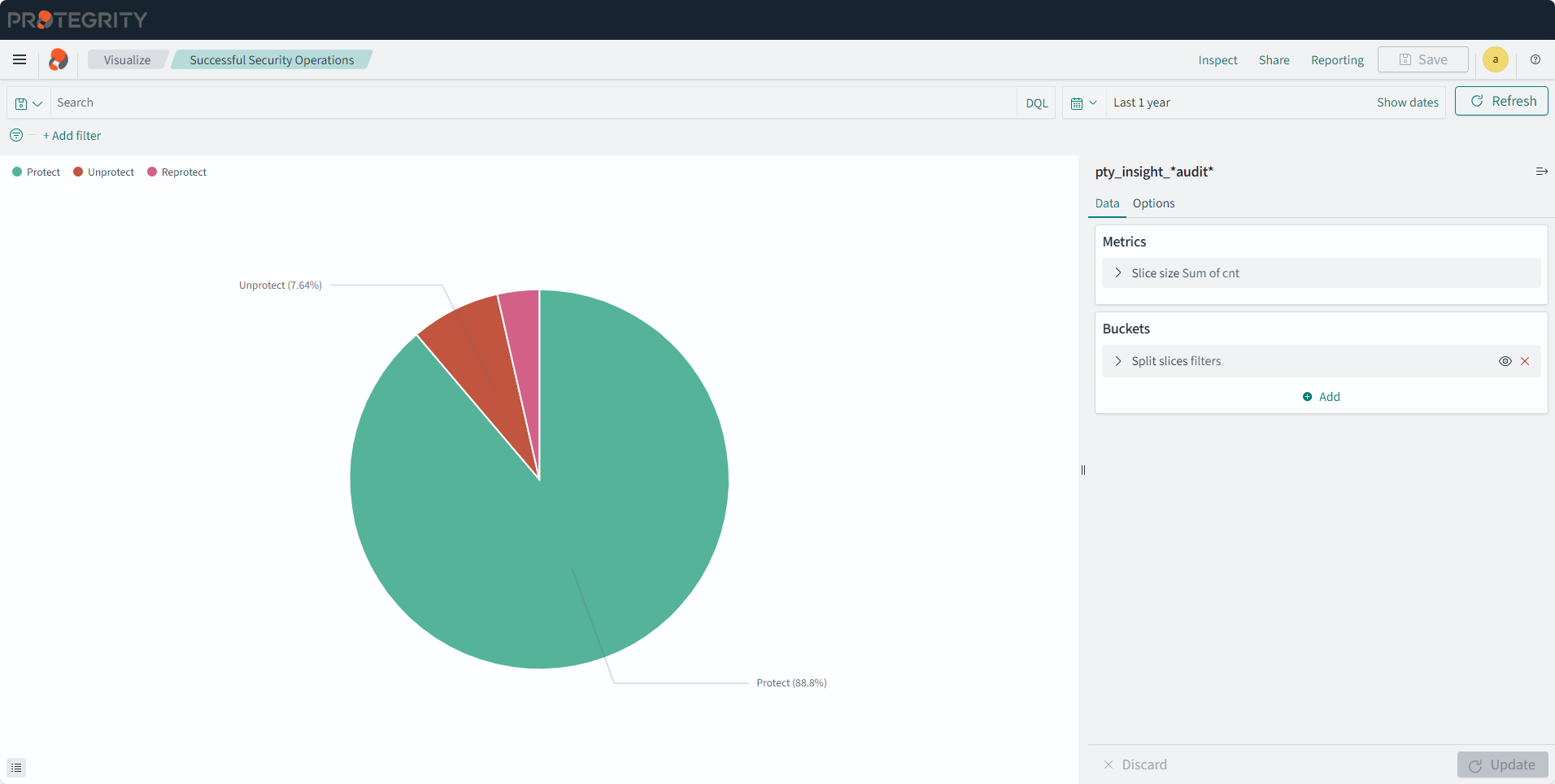

- 2.5: Identifying the protector version

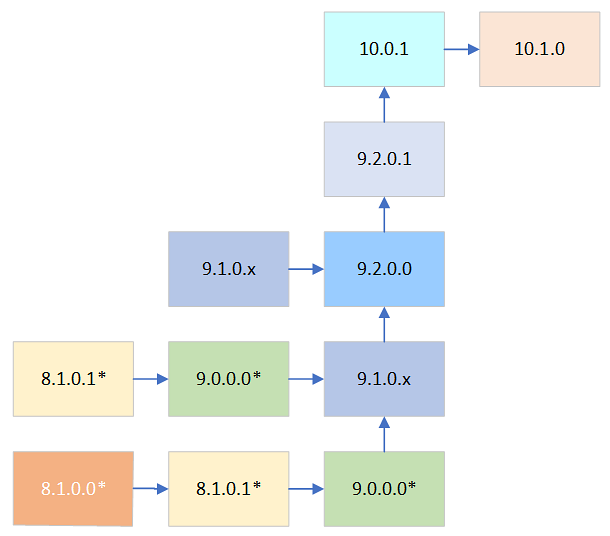

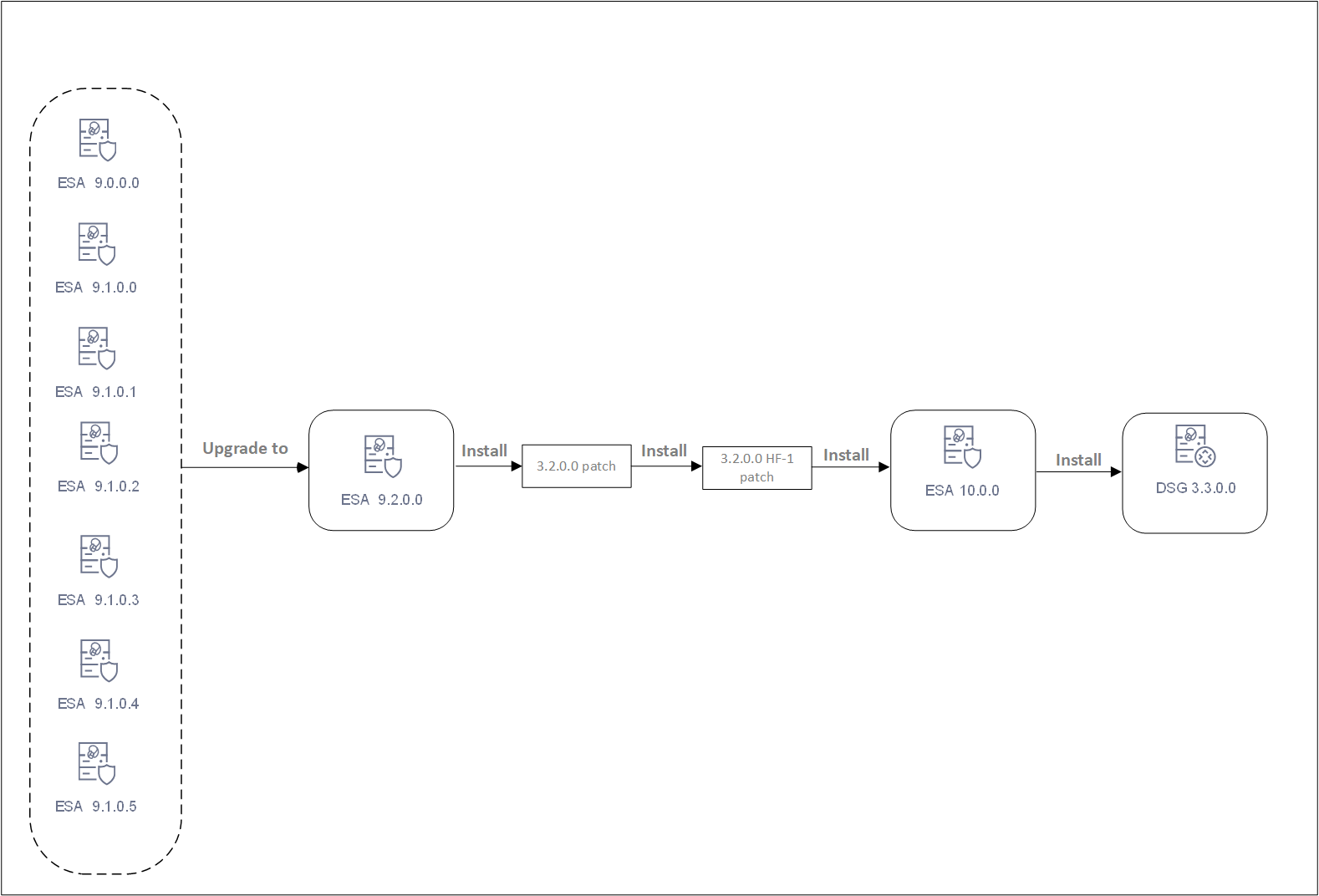

- 3: Upgrading ESA to v10.1.0

- 3.1: System and License Requirements

- 3.2: Upgrade Paths to ESA v10.1.0

- 3.3: Prerequisites

- 3.4: Upgrading from v10.0.1

- 3.5: Post upgrade steps

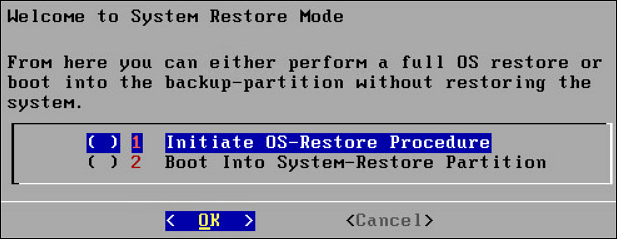

- 3.6: Restoring to the Previous Version of ESA

- 4: Enterprise Security Administrator (ESA)

- 4.1: Protegrity Appliance Overview

- 4.2: Installing ESA

- 4.3: Logging Into ESA

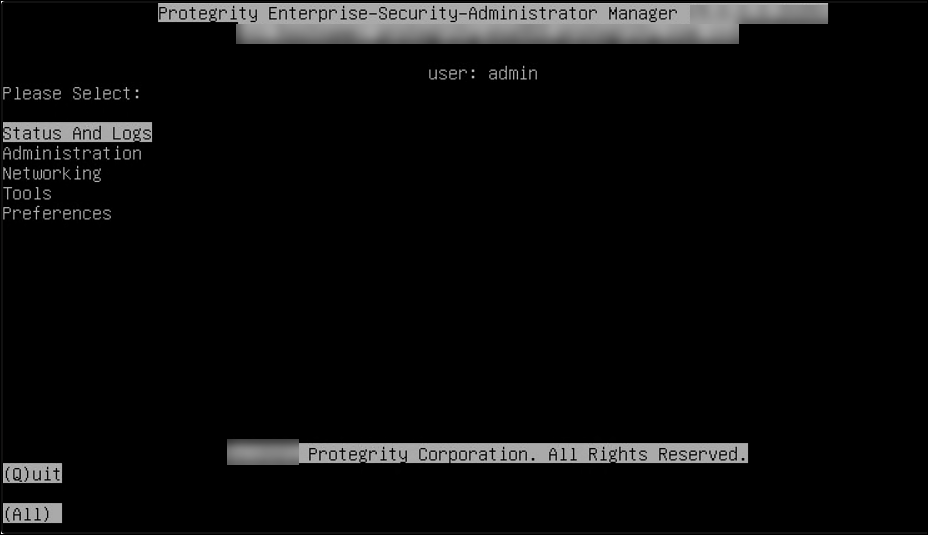

- 4.4: Command-Line Interface (CLI) Manager

- 4.4.1: Accessing the CLI Manager

- 4.4.2: CLI Manager Structure Overview

- 4.4.3: Working with Status and Logs

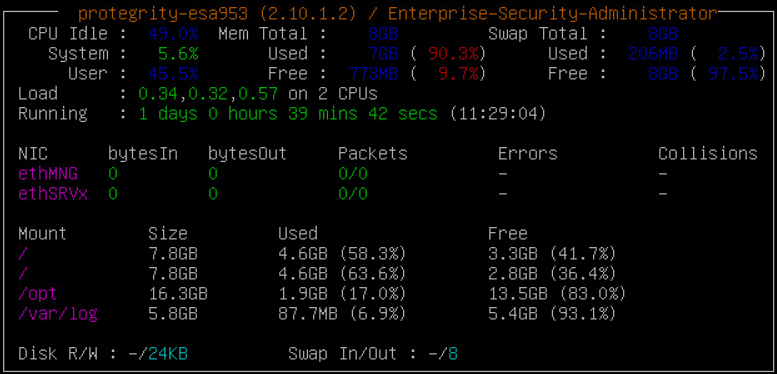

- 4.4.3.1: Monitoring System Statistics

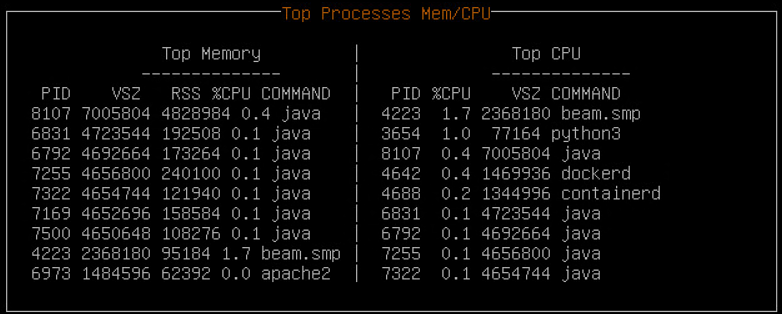

- 4.4.3.2: Viewing the Top Processes

- 4.4.3.3: Working with System Statistics (SYSSTAT)

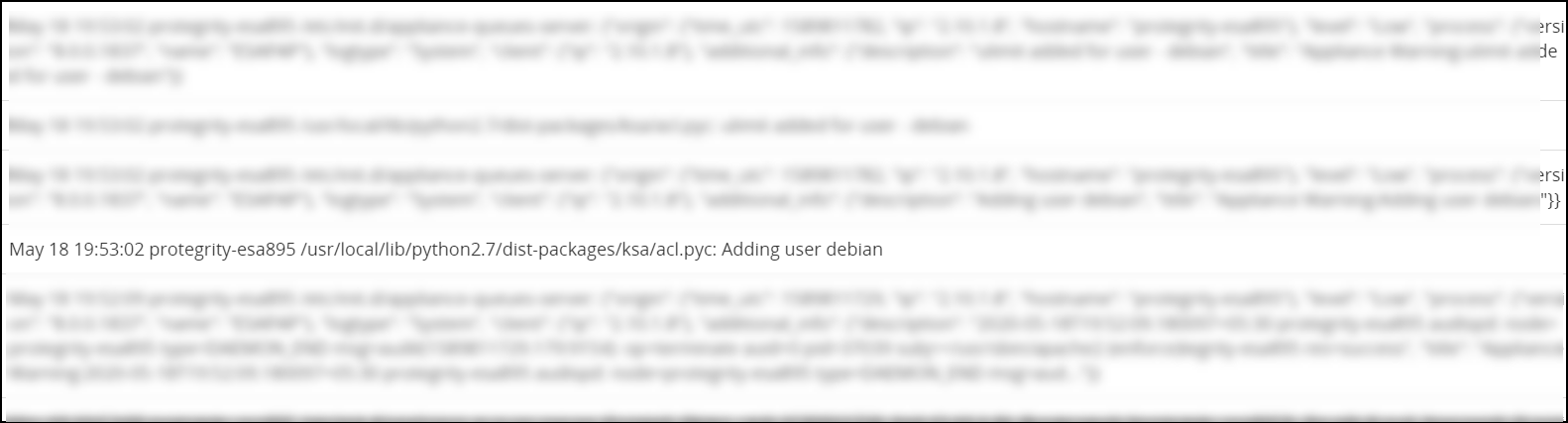

- 4.4.3.4: Auditing Service

- 4.4.3.5: Viewing Appliance Logs

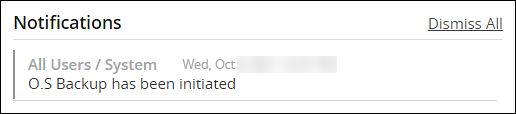

- 4.4.3.6: Viewing User Notifications

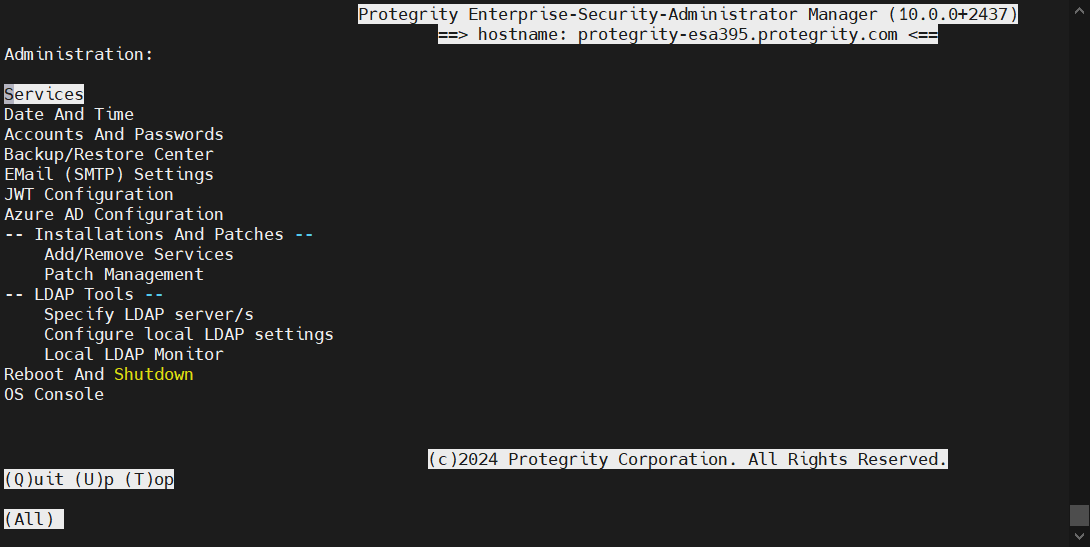

- 4.4.4: Working with Administration

- 4.4.4.1: Working with Services

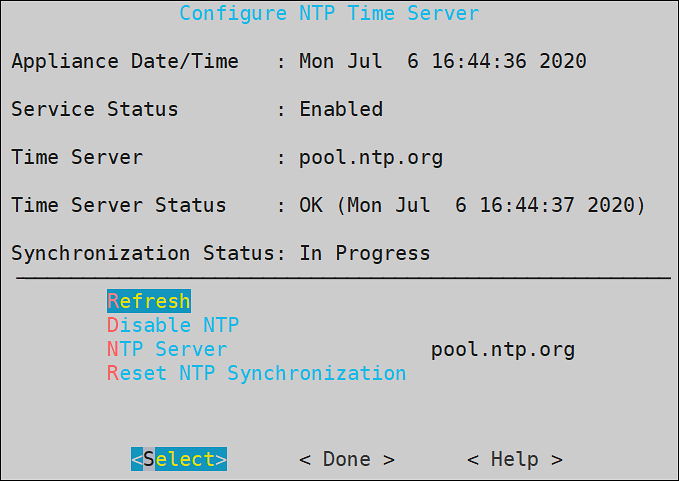

- 4.4.4.2: Setting Date and Time

- 4.4.4.3: Managing Accounts and Passwords

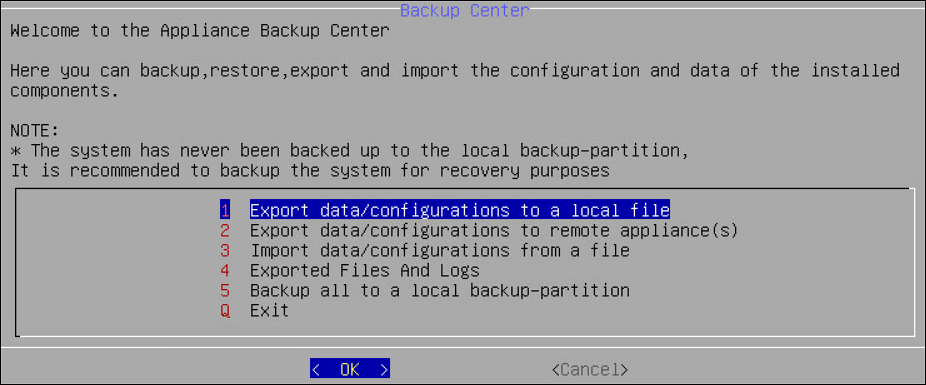

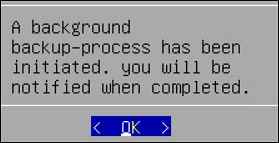

- 4.4.4.4: Working with Backup and Restore

- 4.4.4.5: Setting Up the Email Server

- 4.4.4.6: Working with Azure AD

- 4.4.4.6.1: Configuring Azure AD Settings

- 4.4.4.6.2: Enabling/Disabling Azure AD

- 4.4.4.7: Accessing REST API Resources

- 4.4.4.7.1: Using Basic Authentication

- 4.4.4.7.2: Using Client Certificates

- 4.4.4.7.3: Using JSON Web Token (JWT)

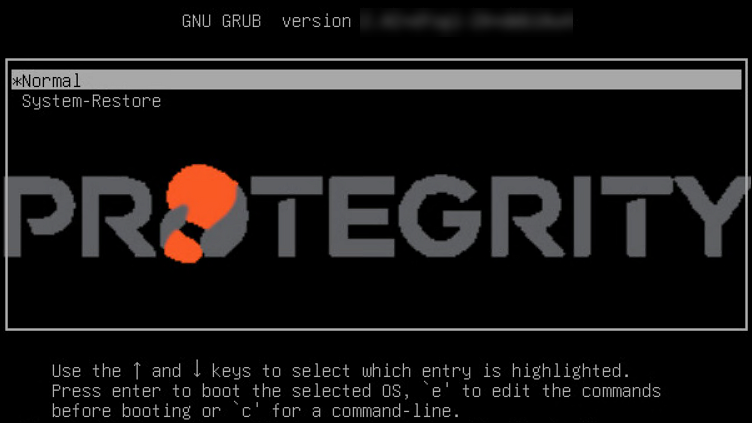

- 4.4.4.8: Securing the GRand Unified Bootloader

- 4.4.4.8.1: Enabling the Credentials for the GRUB Menu

- 4.4.4.8.2: Disabling the GRUB Credentials

- 4.4.4.9: Working with Installations and Patches

- 4.4.4.9.1: Add/Remove Services

- 4.4.4.9.2: Uninstalling Products

- 4.4.4.9.3: Managing Patches

- 4.4.4.10: Managing LDAP

- 4.4.4.10.1: Working with the Protegrity LDAP Server

- 4.4.4.10.2: Changing the Bind User Password

- 4.4.4.10.3: Working with Proxy Authentication

- 4.4.4.10.4: Configuring Local LDAP Settings

- 4.4.4.10.5: Monitoring Local LDAP

- 4.4.4.10.6: Optimizing Local LDAP Settings

- 4.4.4.11: Rebooting and Shutting down

- 4.4.4.12: Accessing the OS Console

- 4.4.5: Working with Networking

- 4.4.5.1: Configuring Network Settings

- 4.4.5.2: Configuring SNMP

- 4.4.5.2.1: Configuring SNMPv3 as a USM Model

- 4.4.5.2.2: Configuring SNMPv3 as a TSM Model

- 4.4.5.3: Working with Bind Services and Addresses

- 4.4.5.3.1: Binding Interface for Management

- 4.4.5.3.2: Binding Interface for Services

- 4.4.5.4: Using Network Troubleshooting Tools

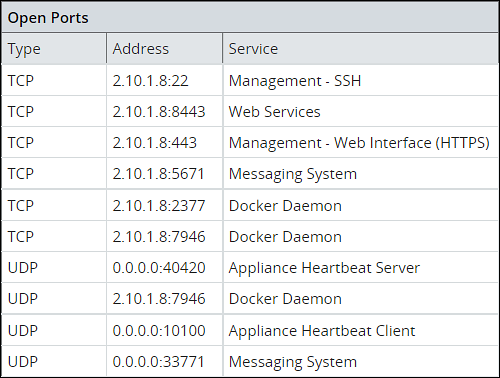

- 4.4.5.5: Managing Firewall Settings

- 4.4.5.6: Using the Management Interface Settings

- 4.4.5.7: Ports Allowlist

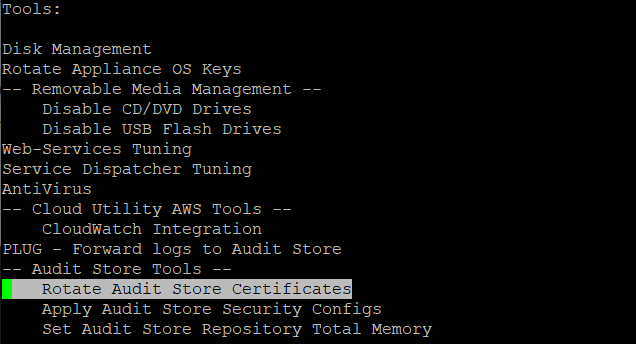

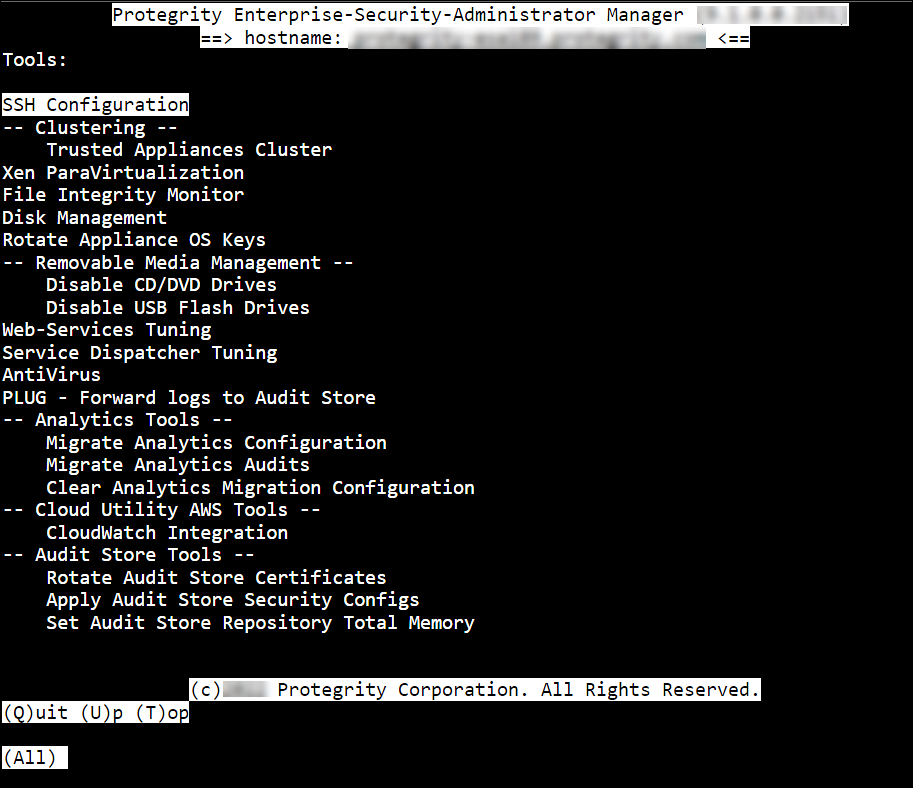

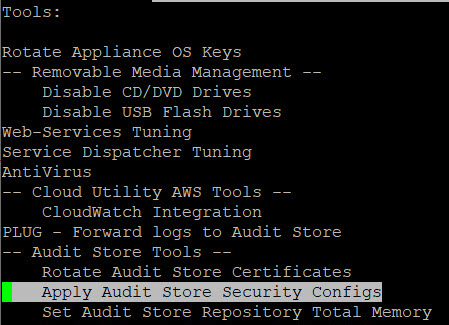

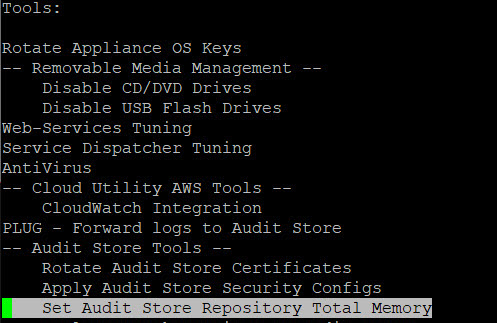

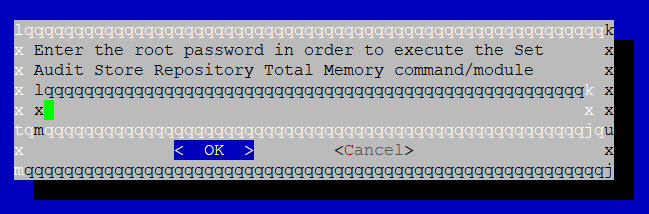

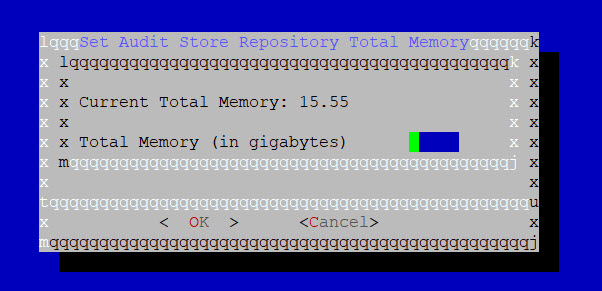

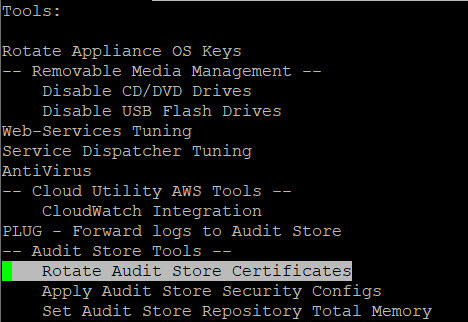

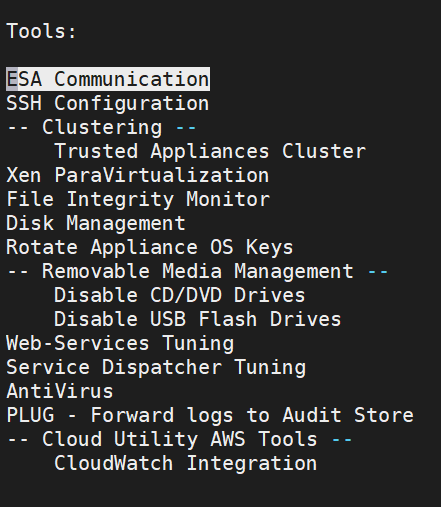

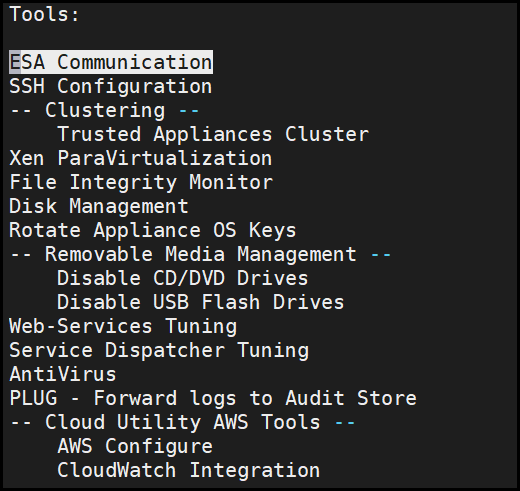

- 4.4.6: Working with Tools

- 4.4.6.1: Configuring the SSH

- 4.4.6.1.1: Specifying SSH Mode

- 4.4.6.1.2: Setting Up Advanced SSH Configuration

- 4.4.6.1.3: Managing SSH Known Hosts

- 4.4.6.1.4: Managing Authorized Keys

- 4.4.6.1.5: Managing Identities

- 4.4.6.1.6: Generating SSH Keys

- 4.4.6.1.7: Configuring the SSH

- 4.4.6.1.8: Customizing the SSH Configurations

- 4.4.6.1.9: Exporting/Importing the SSH Settings

- 4.4.6.1.10: Securing SSH Communication

- 4.4.6.2: Clustering Tool

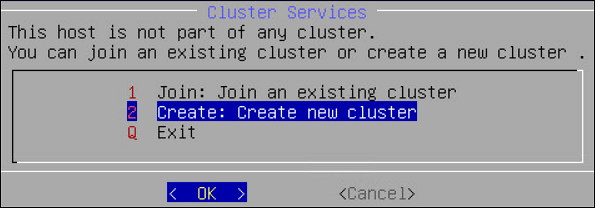

- 4.4.6.2.1: Creating a TAC using the CLI Manager

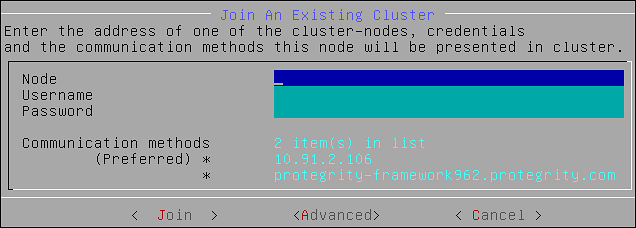

- 4.4.6.2.2: Joining an Existing Cluster using the CLI Manager

- 4.4.6.2.3: Cluster Operations

- 4.4.6.2.4: Managing a site

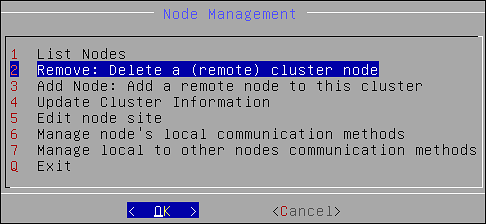

- 4.4.6.2.5: Node Management

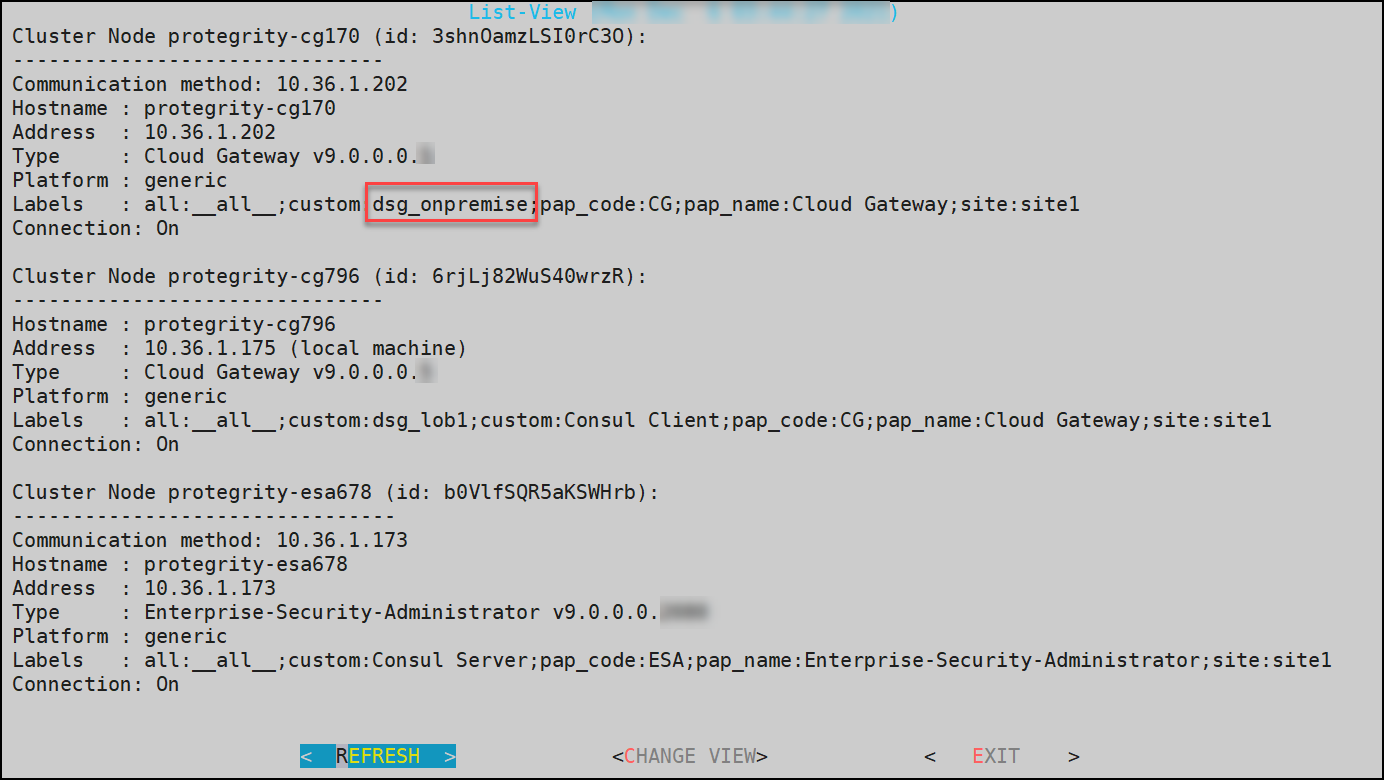

- 4.4.6.2.5.1: Show Cluster Nodes and Status

- 4.4.6.2.5.2: Viewing the Cluster Status using the CLI Manager

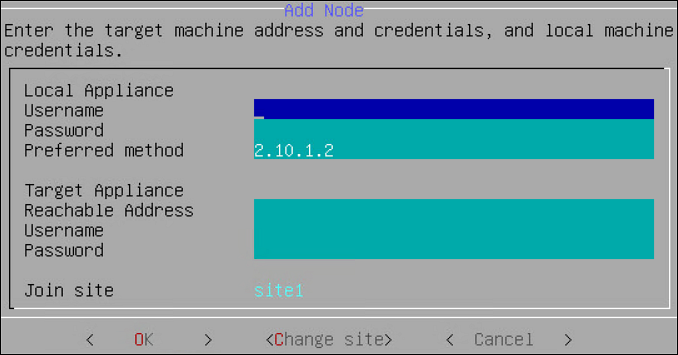

- 4.4.6.2.5.3: Adding a Remote Node to a Cluster

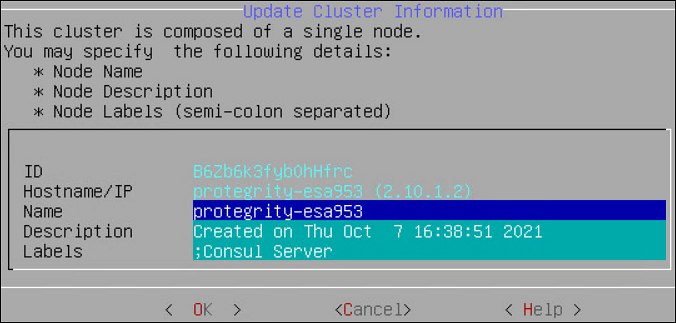

- 4.4.6.2.5.4: Updating Cluster Information using the CLI Manager

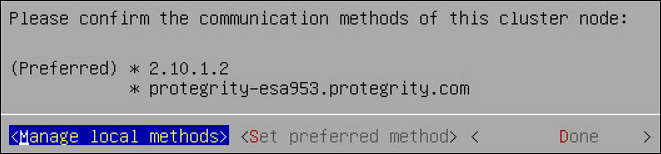

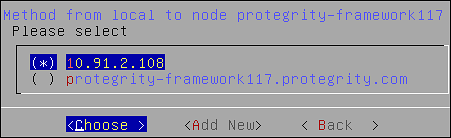

- 4.4.6.2.5.5: Managing Communication Methods for Local Node

- 4.4.6.2.5.6: Managing Local to Remote Node Communication

- 4.4.6.2.5.7: Removing a Node from a Cluster using CLI Manager

- 4.4.6.2.5.8: Uninstalling Cluster Services

- 4.4.6.2.6: Trusted Appliances Cluster

- 4.4.6.2.6.1: Updating Cluster Key

- 4.4.6.2.6.2: Redeploy Local Cluster Configuration to All Nodes

- 4.4.6.2.6.3: Cluster Service Interval

- 4.4.6.2.6.4: Execute Commands as OS Root User

- 4.4.6.3: Working with Xen Paravirtualization Tool

- 4.4.6.4: Working with the File Integrity Monitor Tool

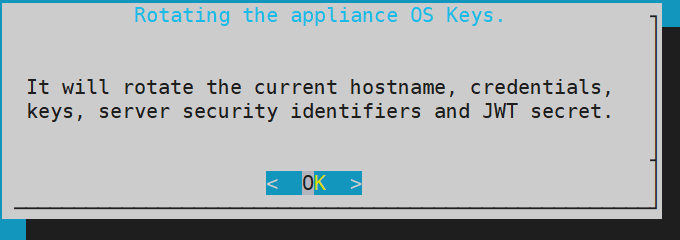

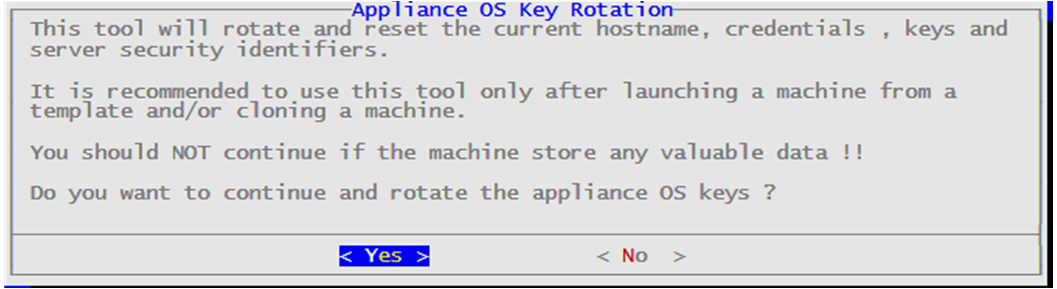

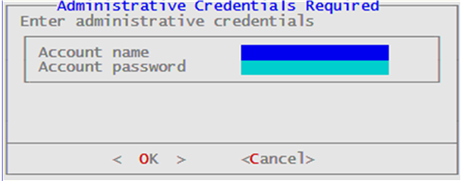

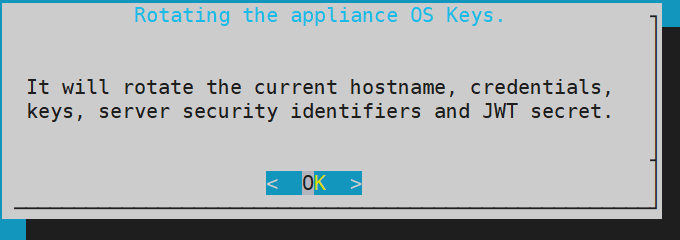

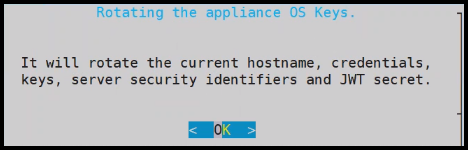

- 4.4.6.5: Rotating Appliance OS Keys

- 4.4.6.6: Managing Removable Drives

- 4.4.6.7: Tuning the Web Services

- 4.4.6.8: Tuning the Service Dispatcher

- 4.4.6.9: Working with Antivirus

- 4.4.7: Working with Preferences

- 4.4.7.1: Viewing System Monitor on OS Console

- 4.4.7.2: Setting Password Requirements for CLI System Tools

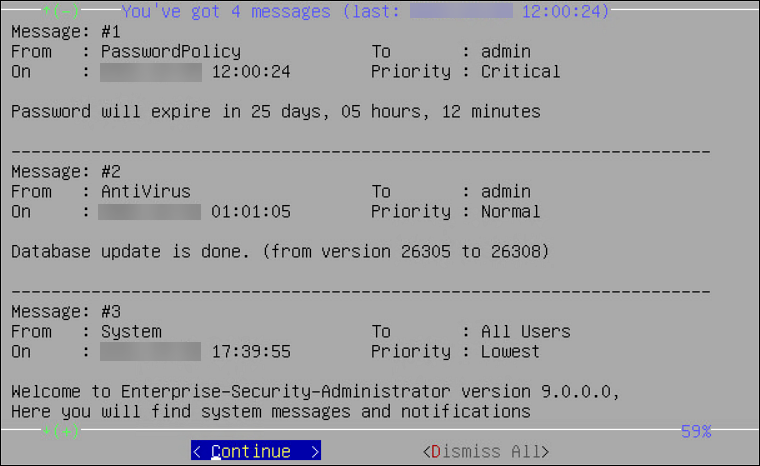

- 4.4.7.3: Viewing user notifications on CLI load

- 4.4.7.4: Minimizing the Timing Differences

- 4.4.7.5: Setting a Uniform Response Time

- 4.4.7.6: Limiting Incorrect root Login

- 4.4.7.7: Enabling Mandatory Access Control

- 4.4.7.8: FIPS Mode

- 4.4.7.9: Basic Authentication for REST APIs

- 4.4.8: Command Line Options

- 4.5: Web User Interface (Web UI) Management

- 4.5.1: Working with the Web UI

- 4.5.2: Description of ESA Web UI

- 4.5.3: Working with System

- 4.5.3.1: Working with Services

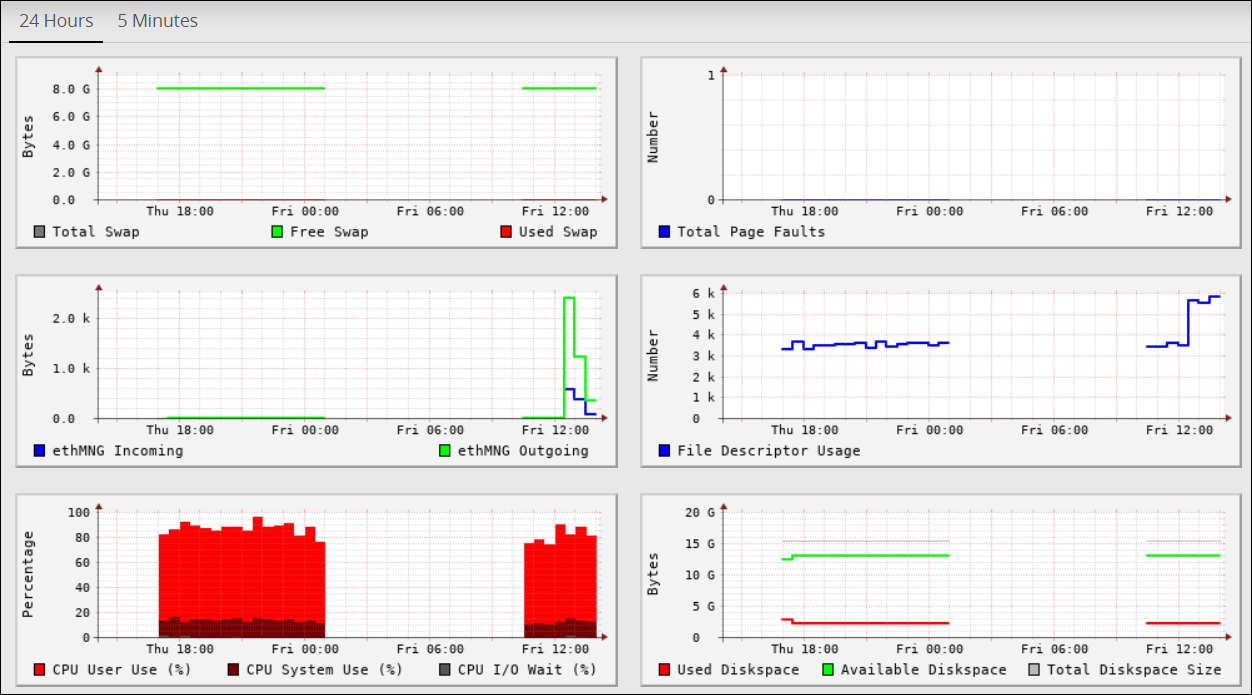

- 4.5.3.2: Viewing information, statistics, and graphs

- 4.5.3.3: Working with Trusted Appliances Cluster

- 4.5.3.4: Working with Backup and restore

- 4.5.3.4.1: Working with OS Full Backup and Restore

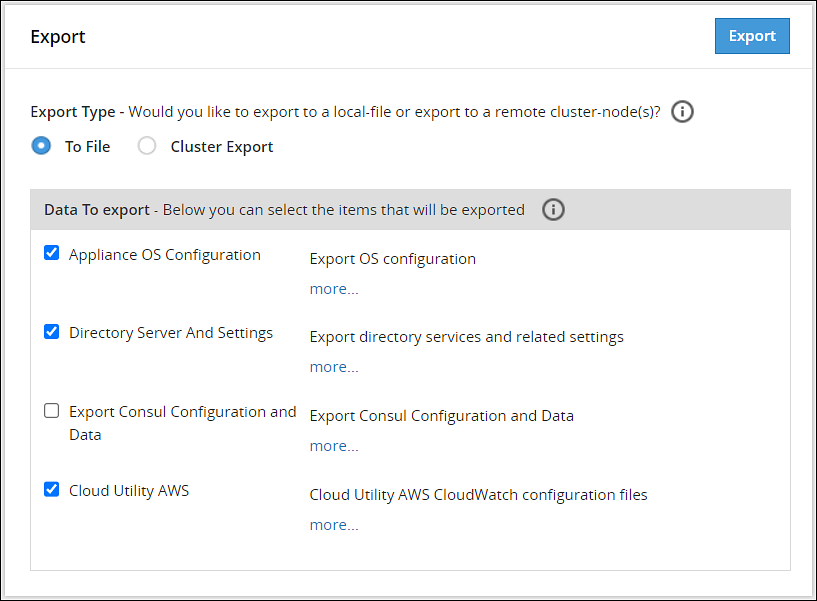

- 4.5.3.4.2: Backing up the data

- 4.5.3.4.3: Backing up custom files

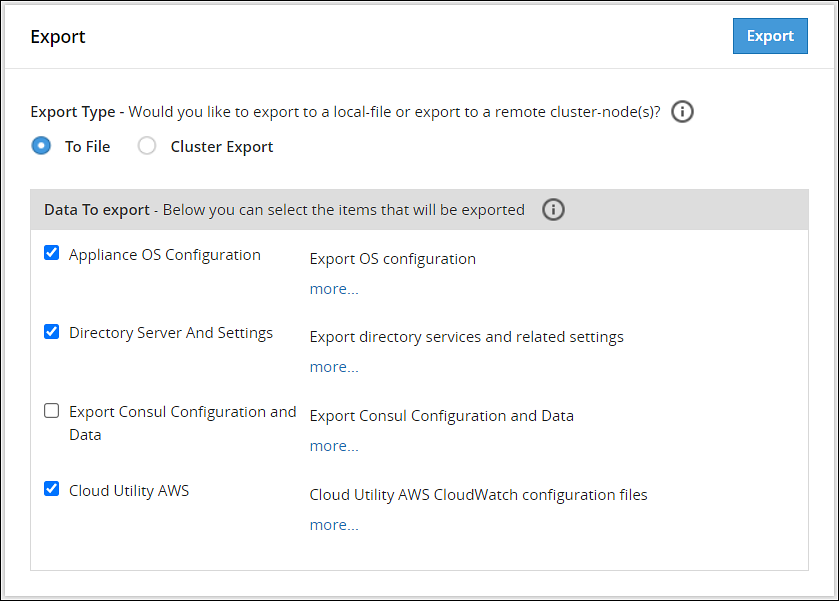

- 4.5.3.4.4: Exporting the custom files

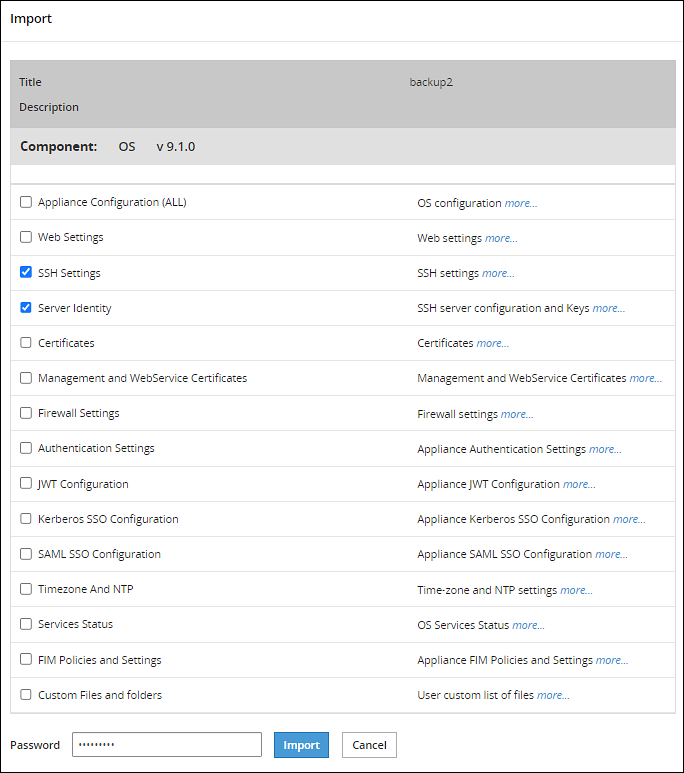

- 4.5.3.4.5: Importing the custom files

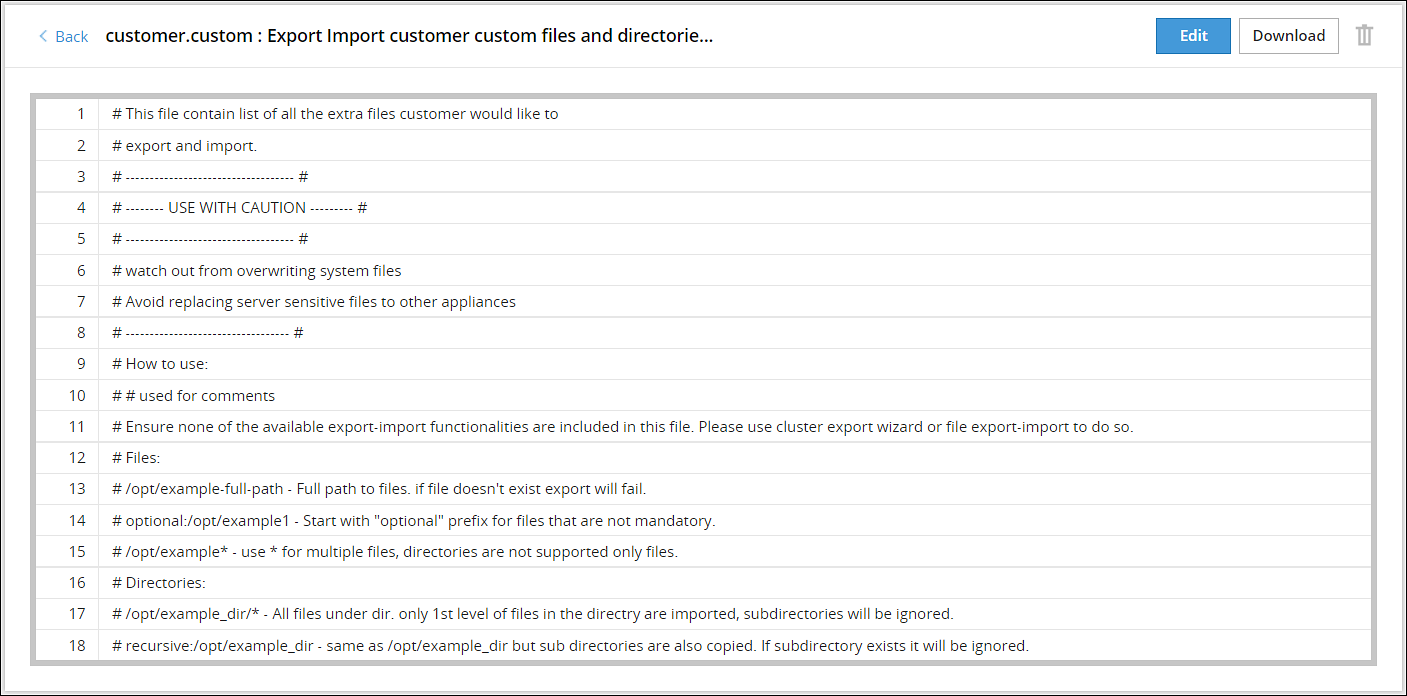

- 4.5.3.4.6: Working with the custom files

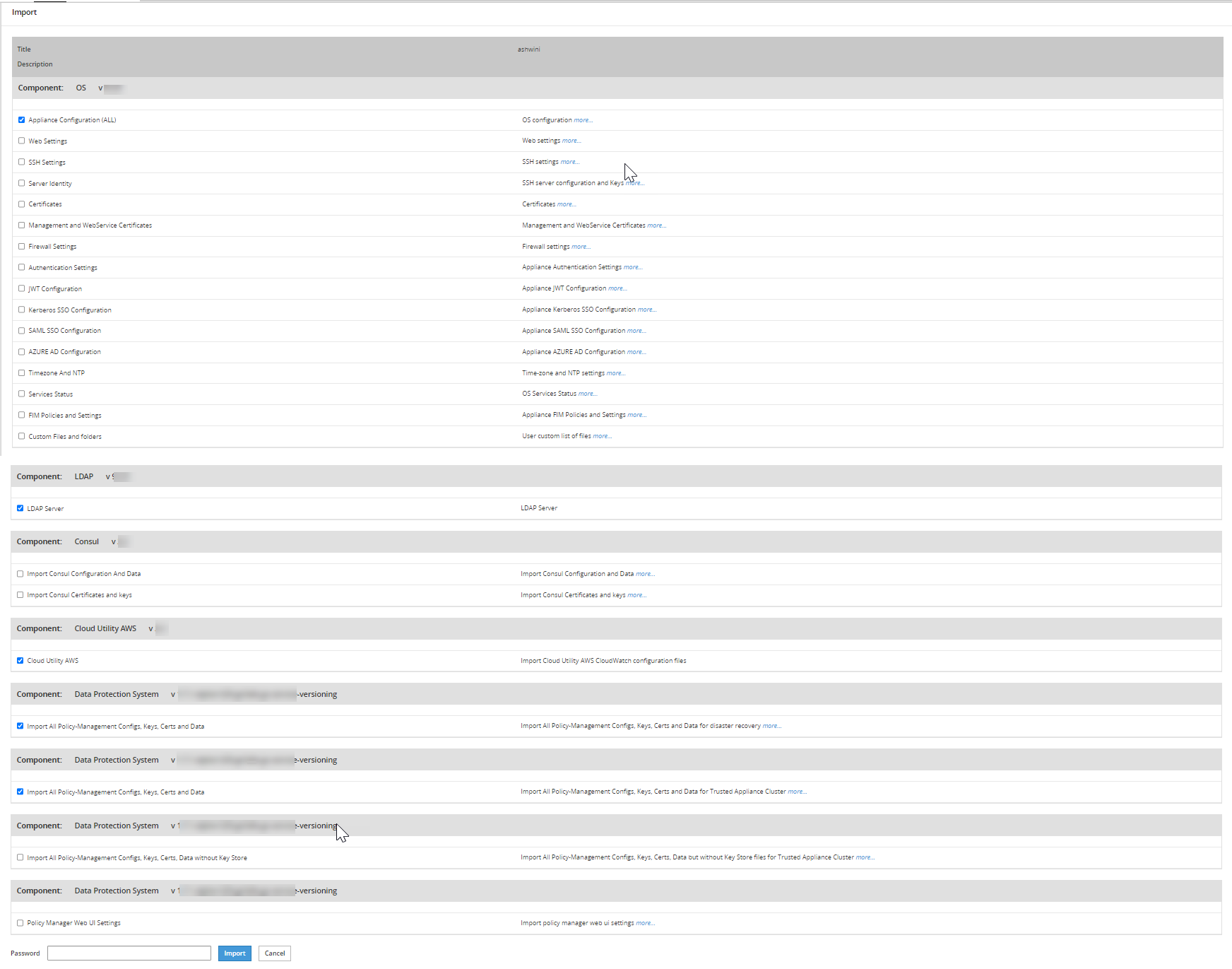

- 4.5.3.4.7: Restoring configurations

- 4.5.3.4.8: Viewing Export/Import logs

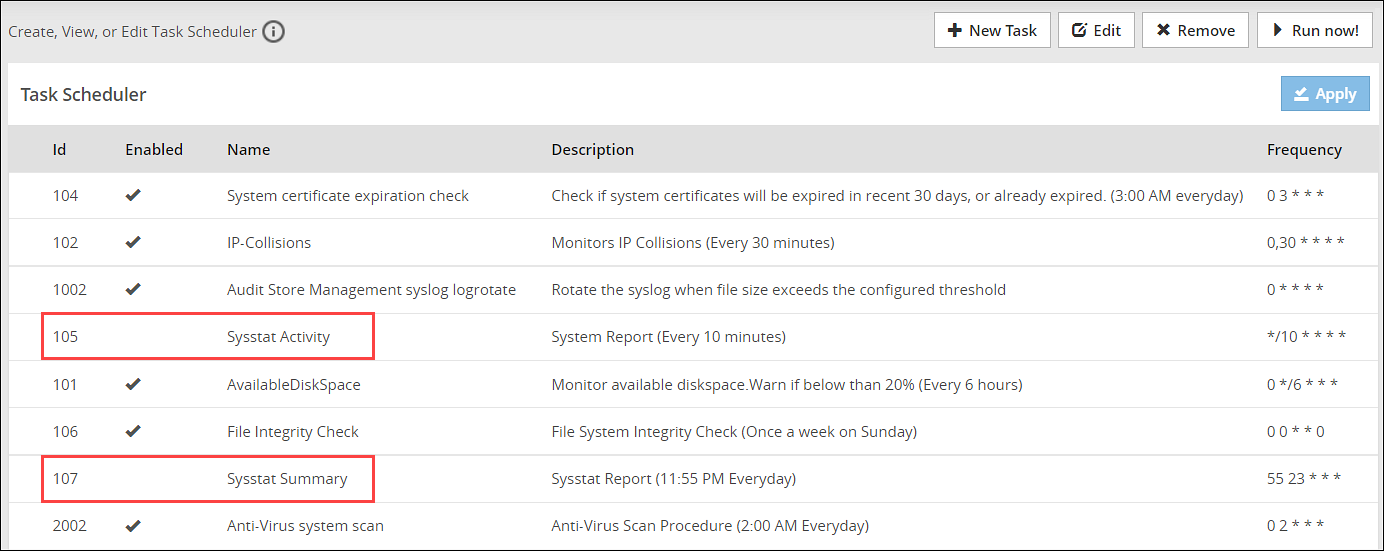

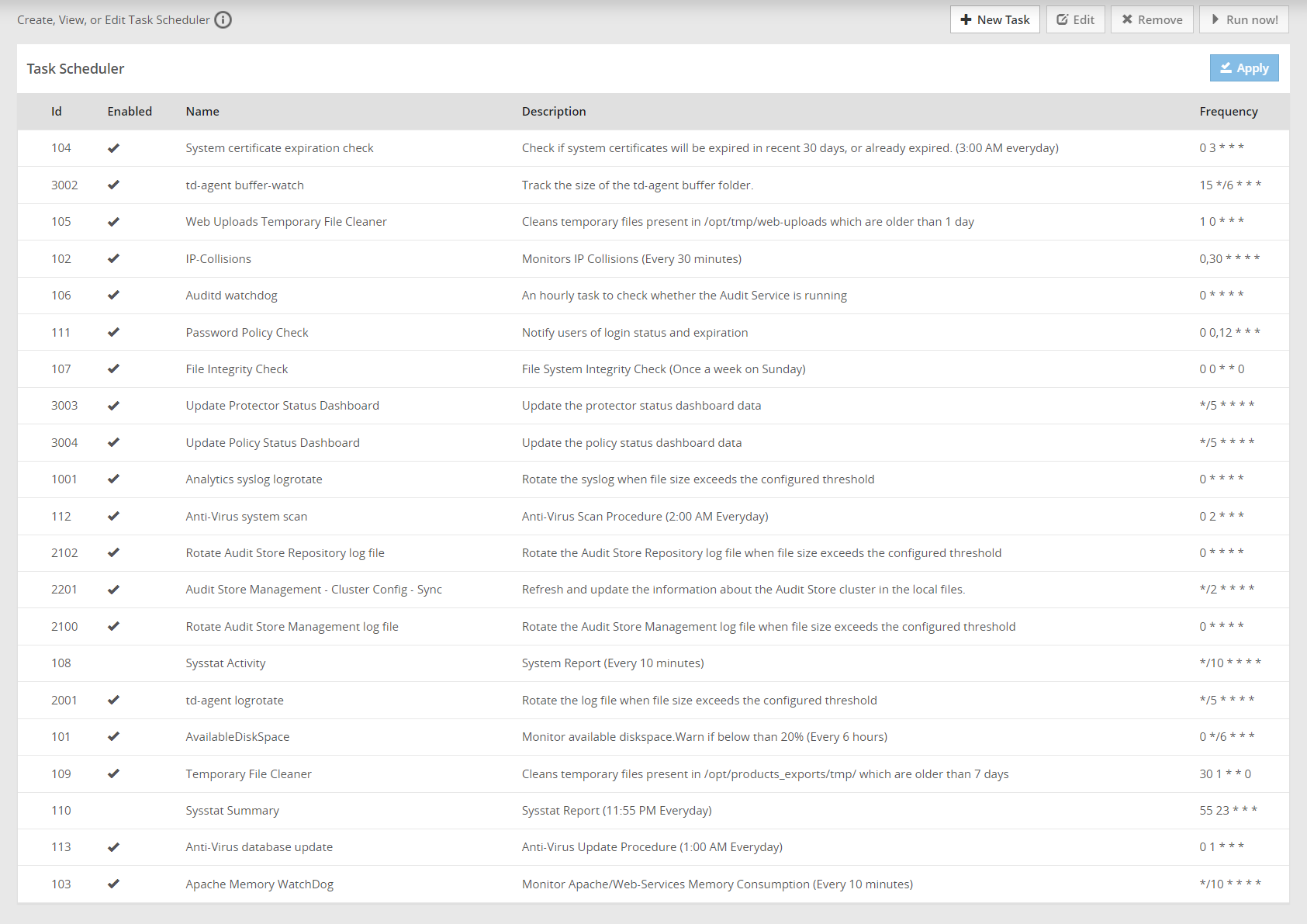

- 4.5.3.5: Scheduling appliance tasks

- 4.5.3.5.1: Viewing the scheduler page

- 4.5.3.5.2: Creating a scheduled task

- 4.5.3.5.3: Scheduling Configuration Export to Cluster Tasks

- 4.5.3.5.4: Deleting a scheduled task

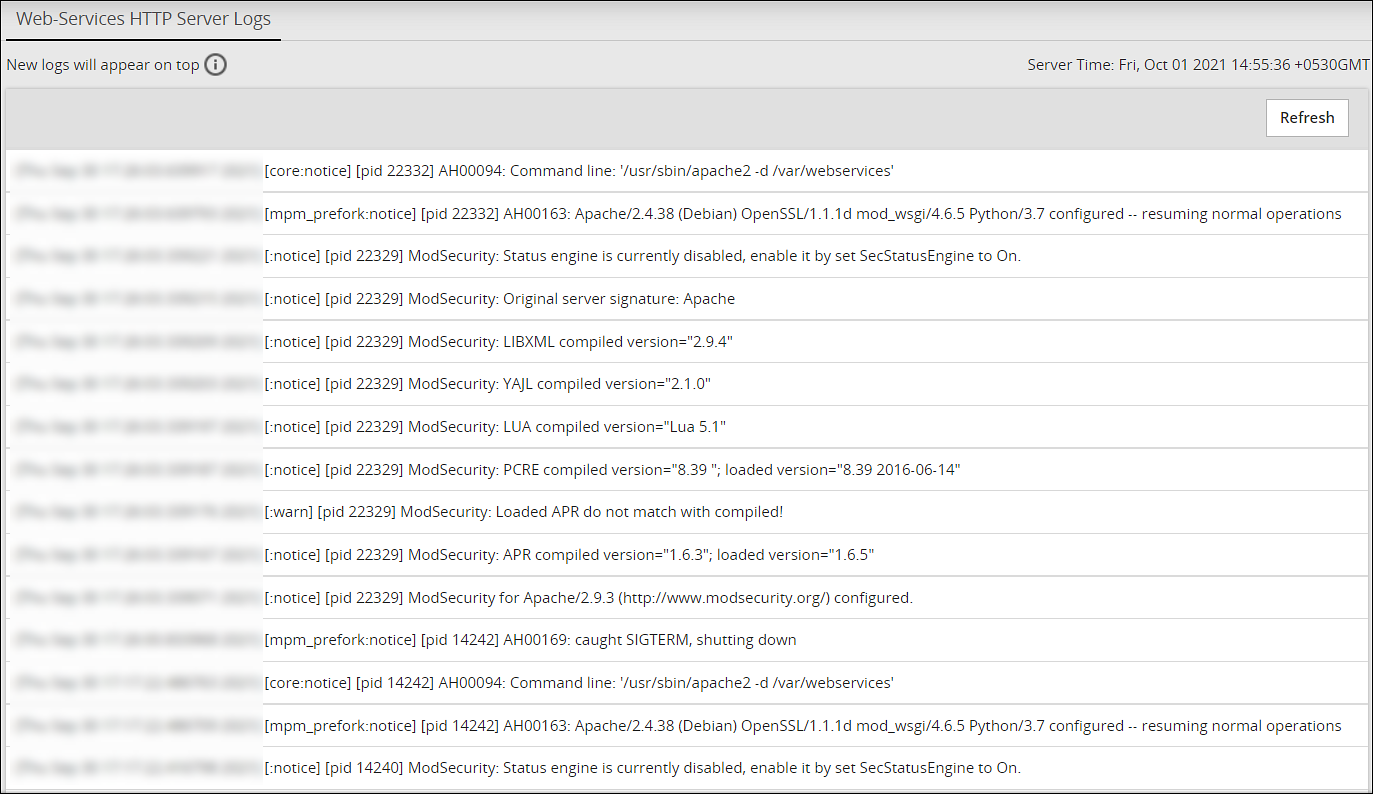

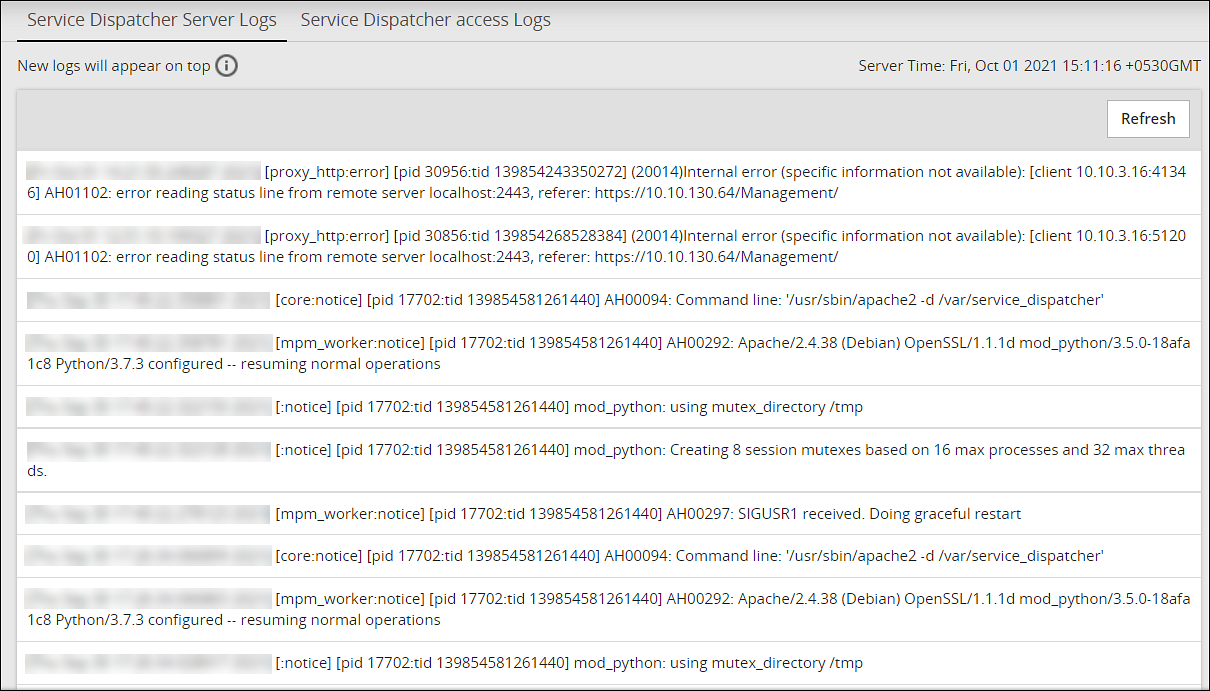

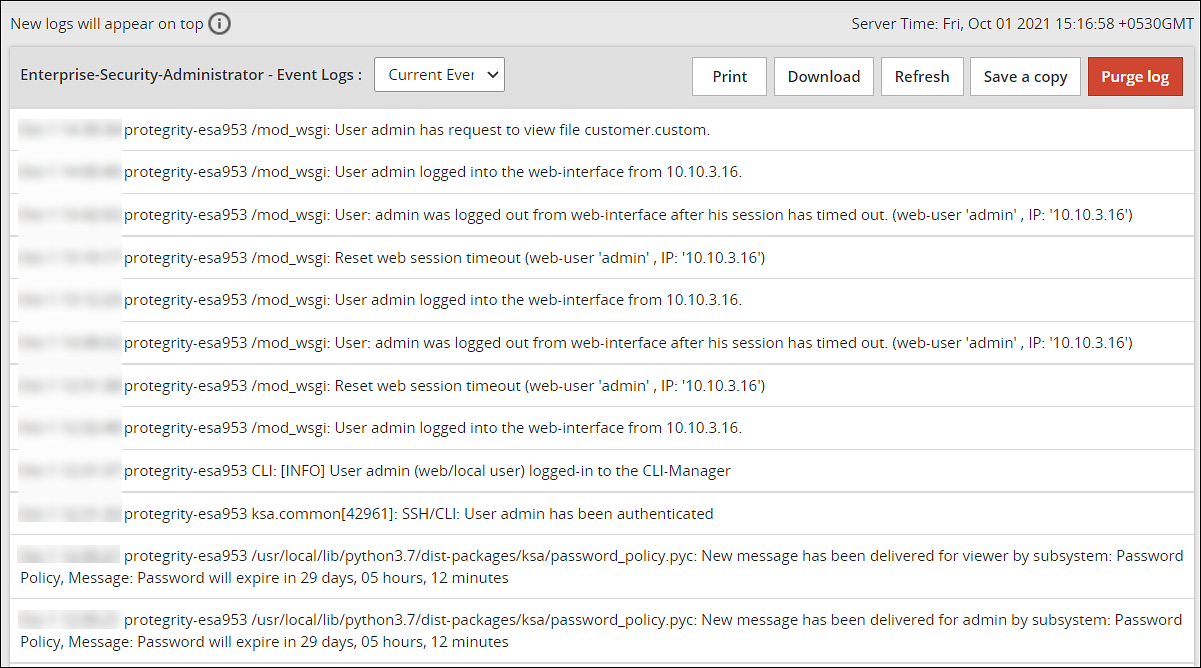

- 4.5.4: Viewing the logs

- 4.5.5: Working with Settings

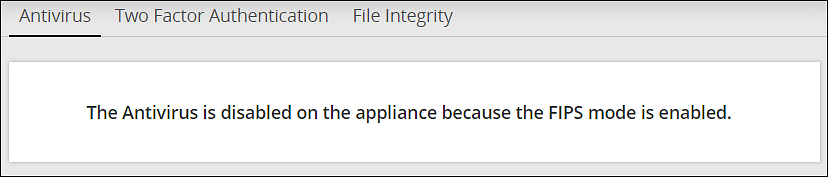

- 4.5.5.1: Working with Antivirus

- 4.5.5.1.1: Customizing Antivirus Scan Options

- 4.5.5.1.2: Scheduling Antivirus Scan

- 4.5.5.1.3: Updating the Antivirus Database

- 4.5.5.1.4: Working with Antivirus Logs

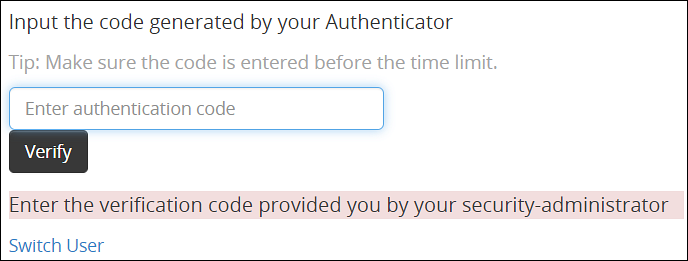

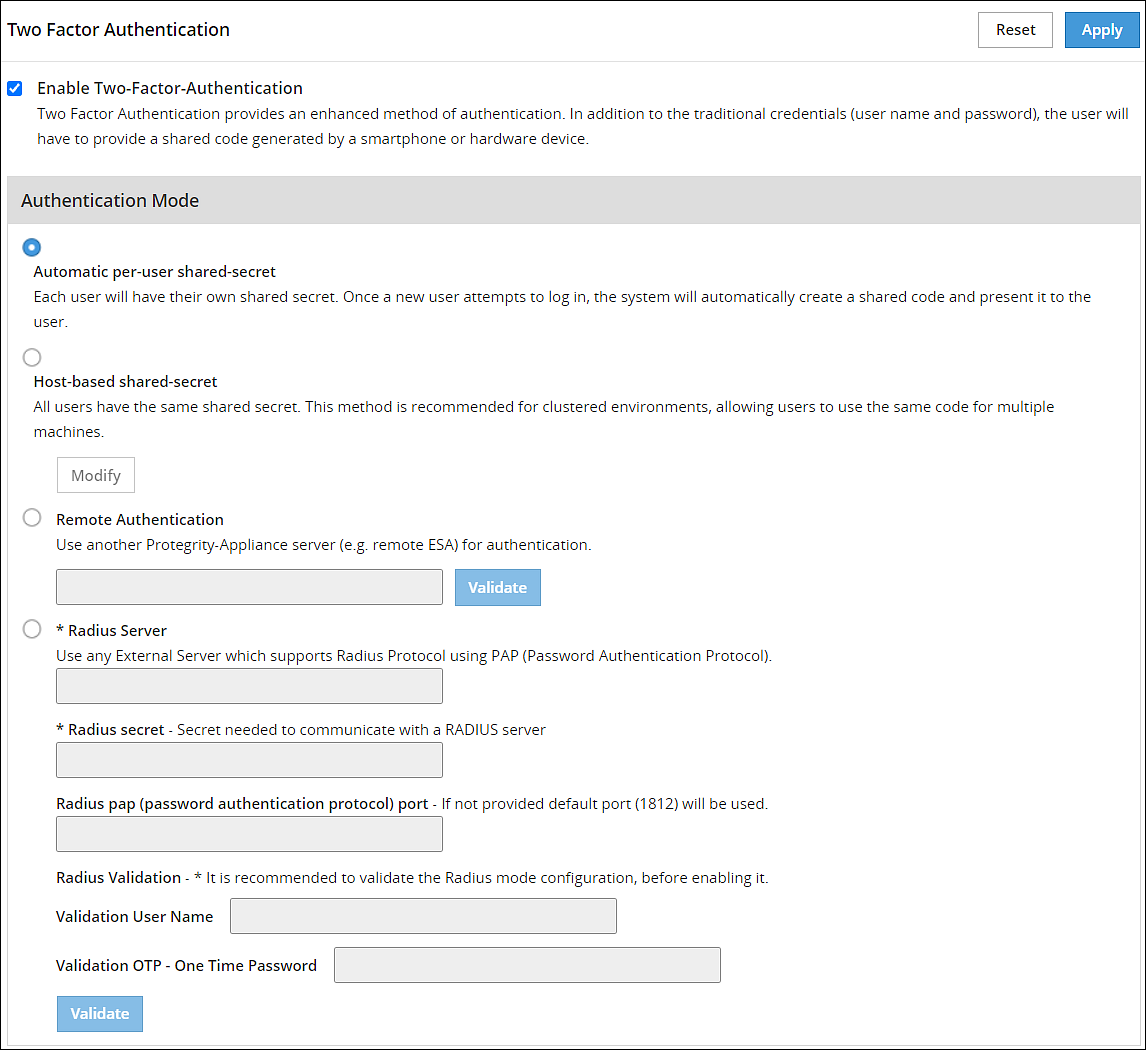

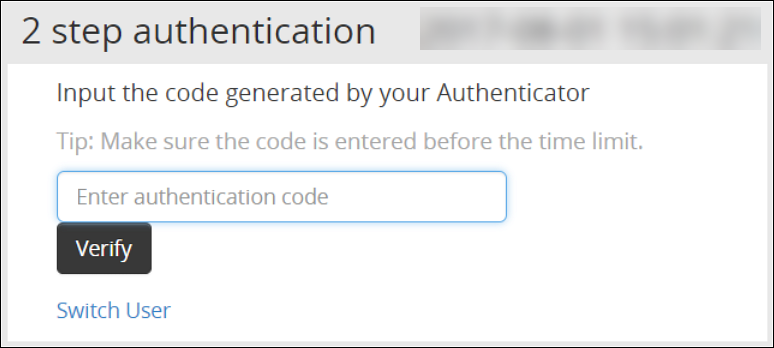

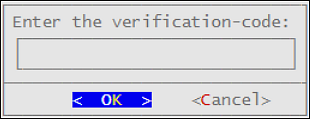

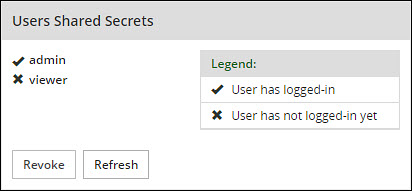

- 4.5.5.2: Configuring Appliance Two Factor Authentication

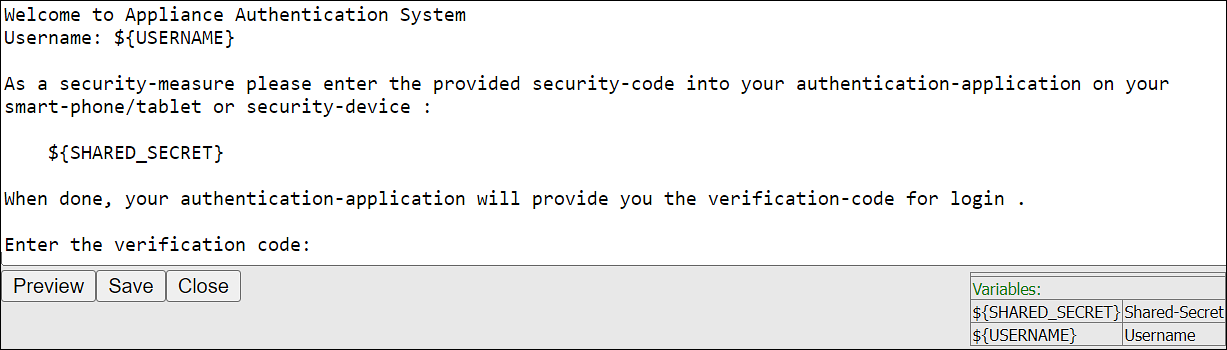

- 4.5.5.2.1: Working with Automatic Per-User Shared-Secret

- 4.5.5.2.2: Working with Host-Based Shared-Secret

- 4.5.5.2.3: Working with Remote Authentication Dial-up Service (RADIUS) Authentication

- 4.5.5.2.4: Working with Shared-Secret Lifecycle

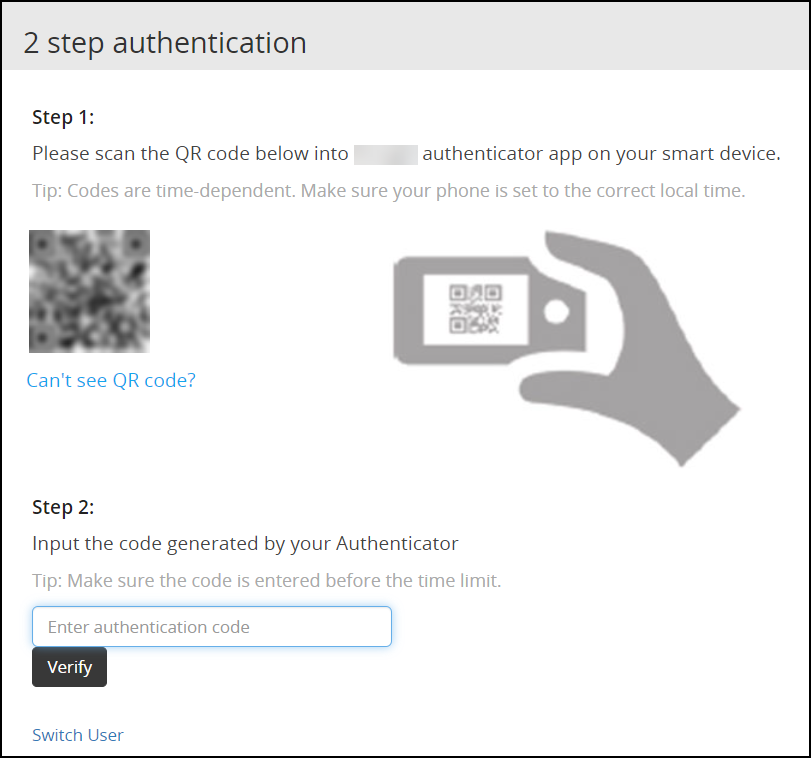

- 4.5.5.2.5: Logging in Using Appliance Two Factor Authentication

- 4.5.5.2.6: Disabling Appliance Two Factor Authentication

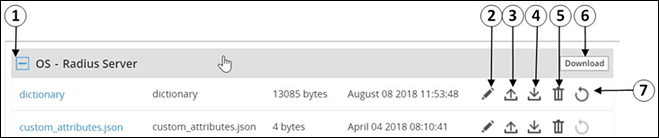

- 4.5.5.3: Working with Configuration Files

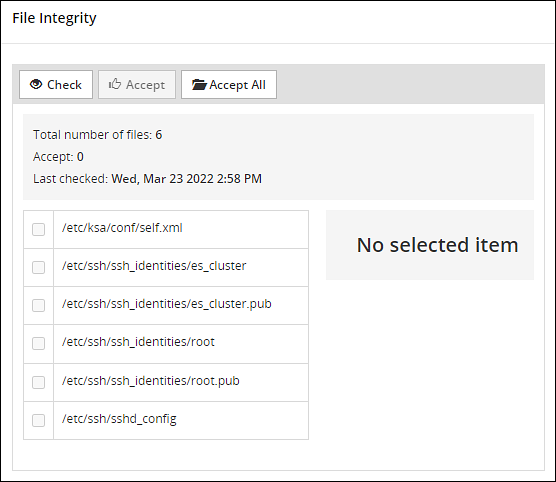

- 4.5.5.4: Working with File Integrity

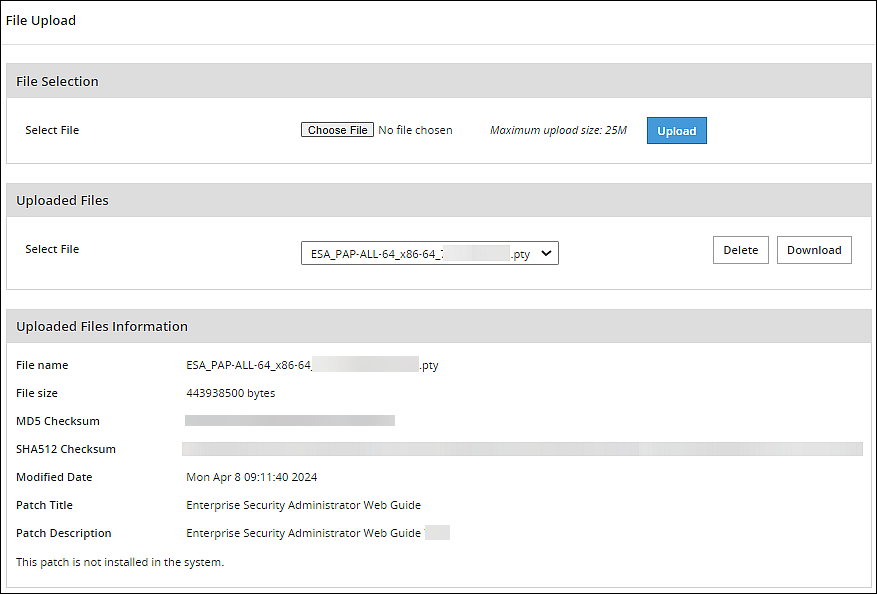

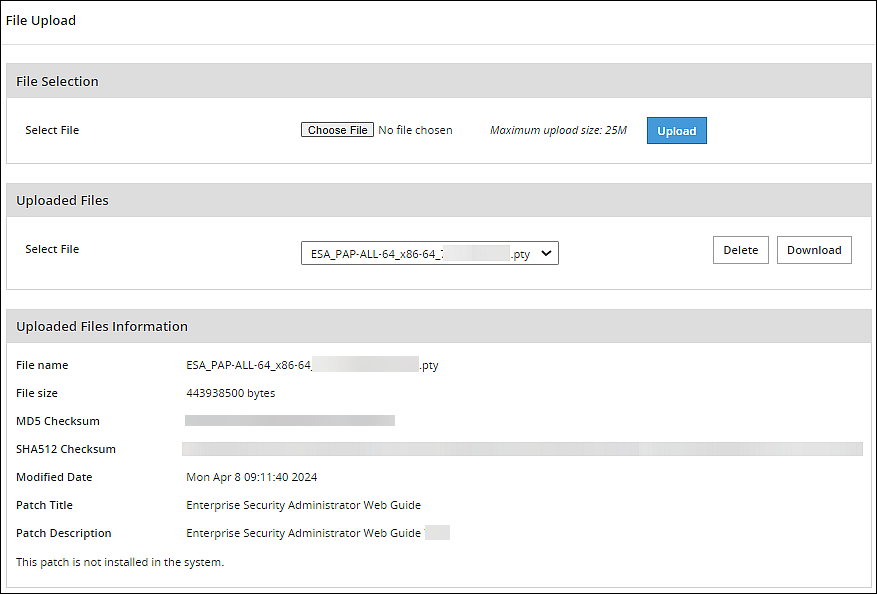

- 4.5.5.5: Managing File Uploads

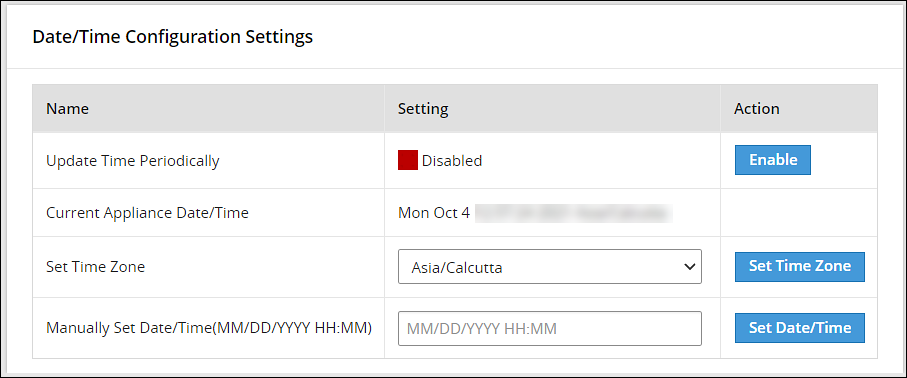

- 4.5.5.6: Configuring Date and Time

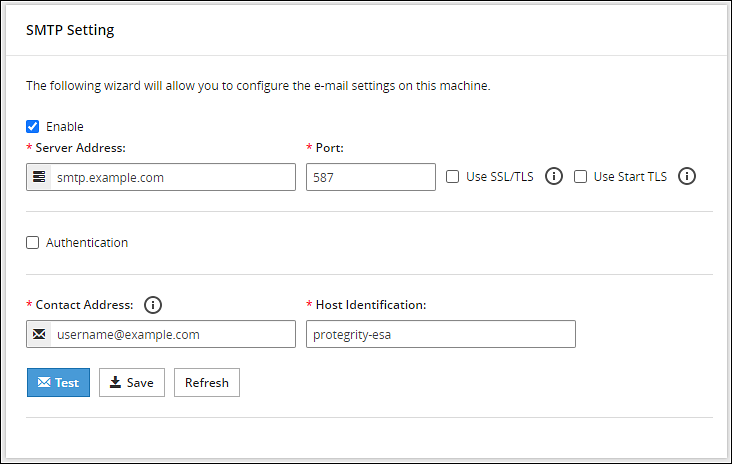

- 4.5.5.7: Configuring Email

- 4.5.5.8: Configuring Network Settings

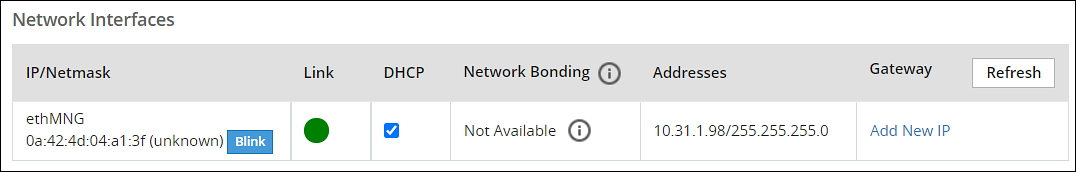

- 4.5.5.8.1: Managing Network Interfaces

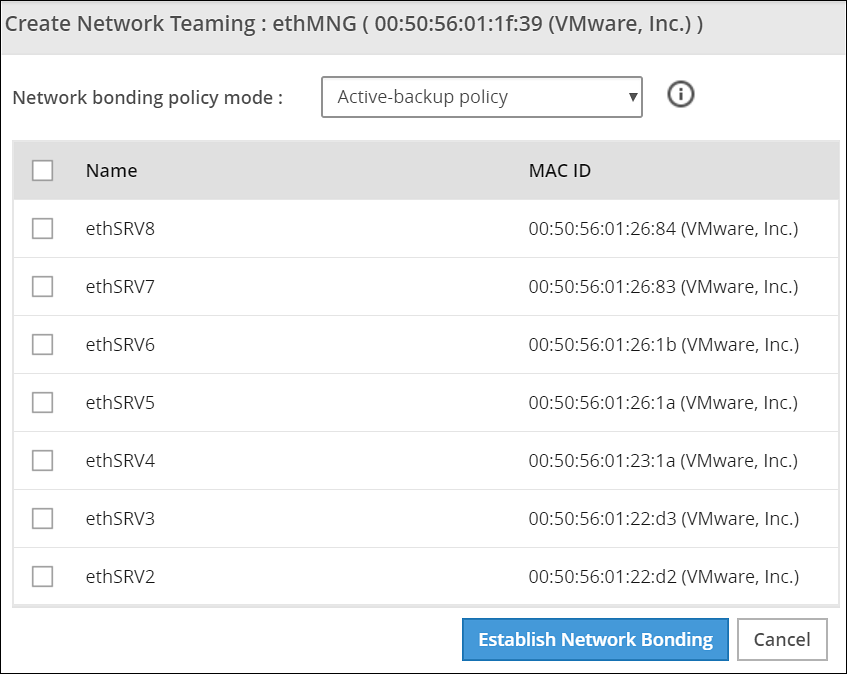

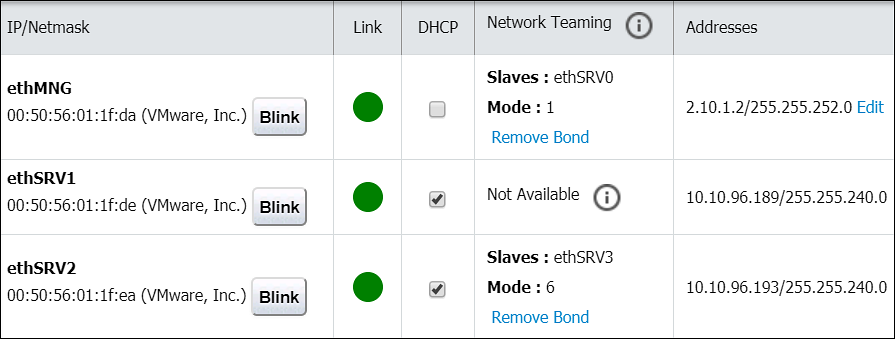

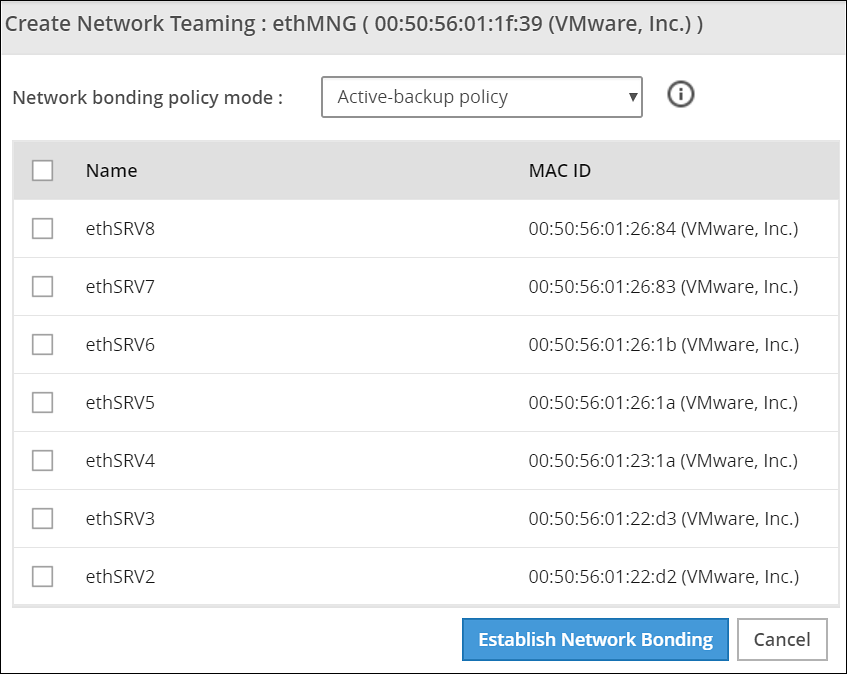

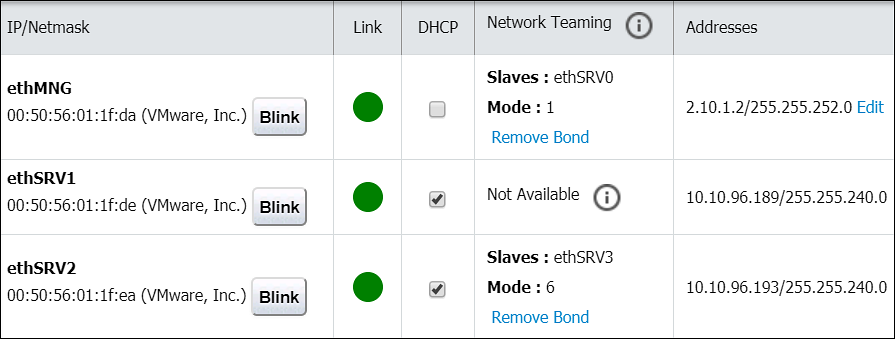

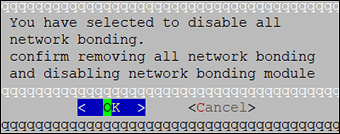

- 4.5.5.8.2: NIC Bonding

- 4.5.5.9: Configuring Web Settings

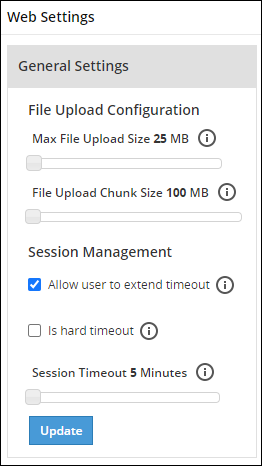

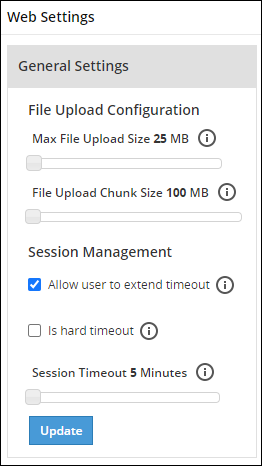

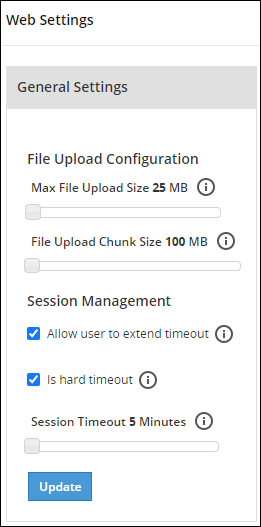

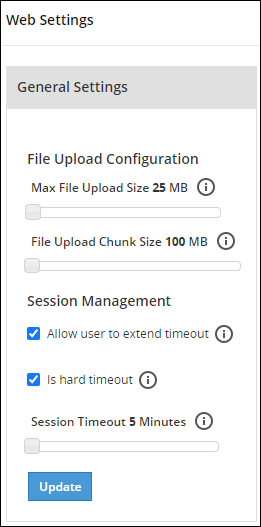

- 4.5.5.9.1: General Settings

- 4.5.5.9.2: Session Management

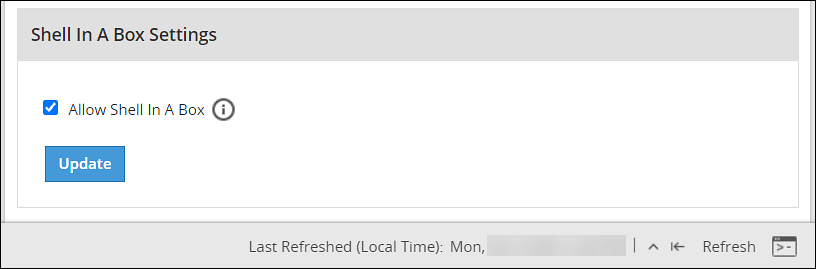

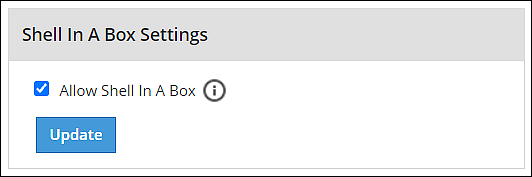

- 4.5.5.9.3: Shell in a box settings

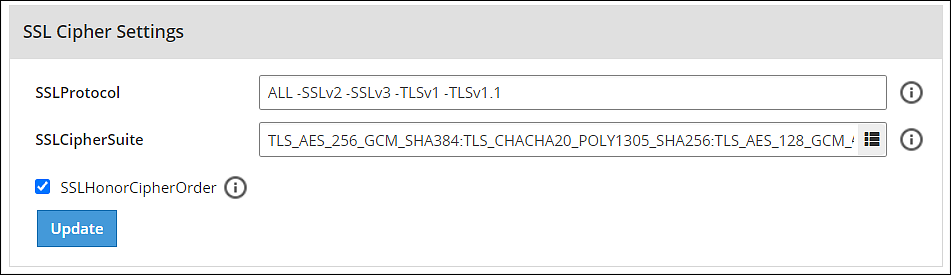

- 4.5.5.9.4: SSL cipher settings

- 4.5.5.9.5: Updating a protocol from the ESA Web UI

- 4.5.5.10: Working with Secure Shell (SSH) Keys

- 4.5.5.10.1: Configuring the authentication type for SSH keys

- 4.5.5.10.2: Configuring inbound communications

- 4.5.5.10.3: Configuring outbound communications

- 4.5.5.10.4: Configuring known hosts

- 4.5.6: Managing Appliance Users

- 4.5.7: Password Policy for all appliance users

- 4.5.7.1: Managing Users

- 4.5.7.1.1: Adding users to internal LDAP

- 4.5.7.1.2: Importing users to internal LDAP

- 4.5.7.1.3: Password policy configuration

- 4.5.7.1.4: Edit users

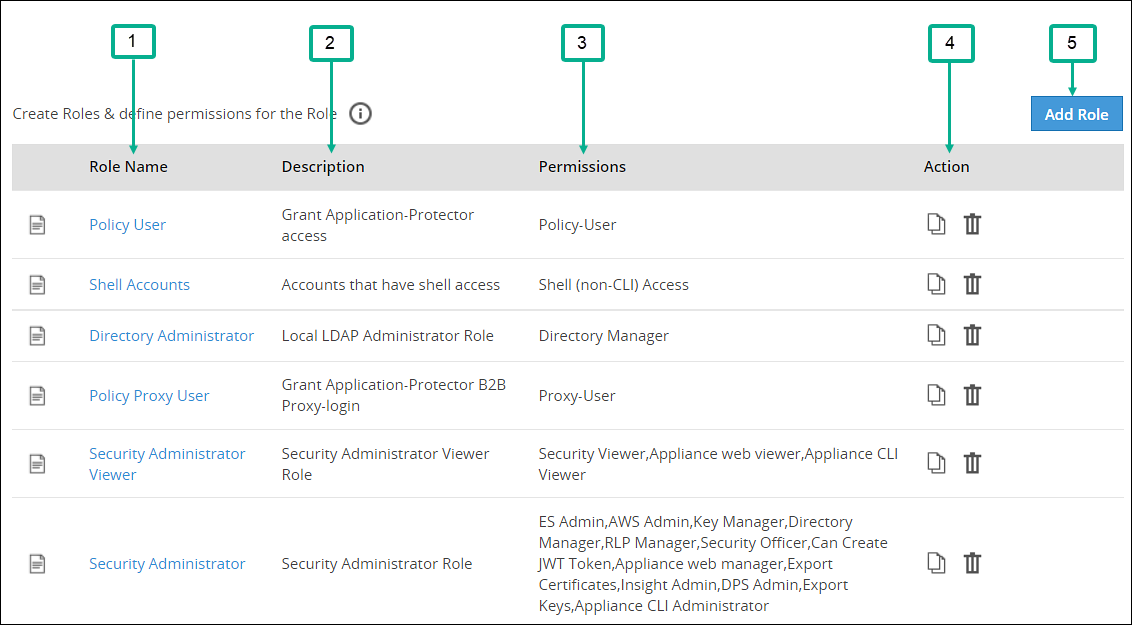

- 4.5.7.2: Managing Roles

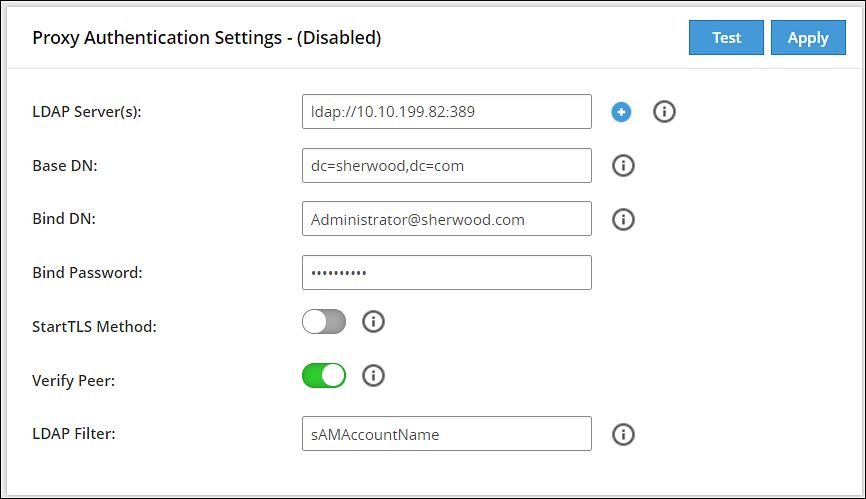

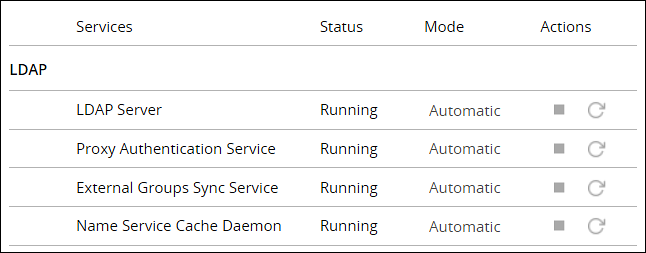

- 4.5.7.3: Configuring the proxy authentication settings

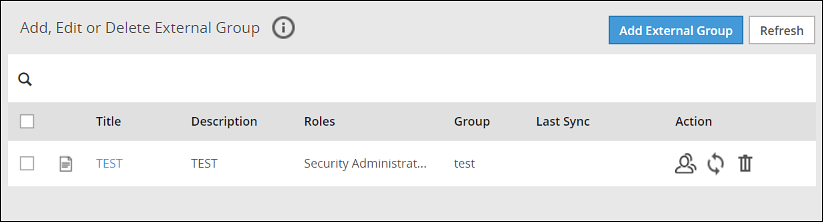

- 4.5.7.4: Working with External Groups

- 4.5.7.5: Configuring the Azure AD Settings

- 4.5.7.5.1: Importing Azure AD Users

- 4.5.7.5.2: Working with External Azure Groups

- 4.6: Trusted Appliances Cluster (TAC)

- 4.6.1: TAC Topology

- 4.6.2: Cluster Configuration Files

- 4.6.3: Deploying Appliances in a Cluster

- 4.6.4: Cluster Security

- 4.6.5: Reinstalling Cluster Services

- 4.6.6: Uninstalling Cluster Services

- 4.6.7: FAQs on TAC

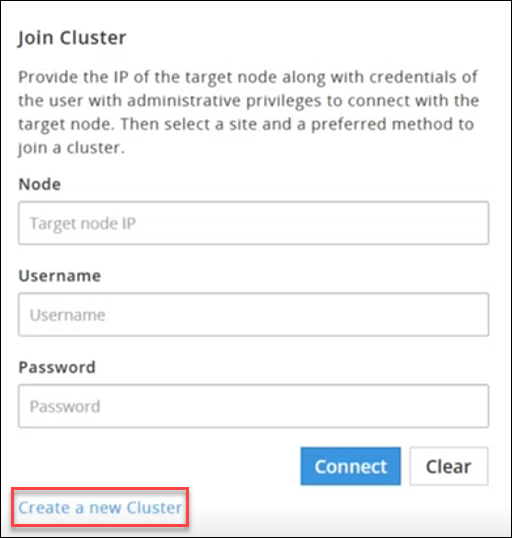

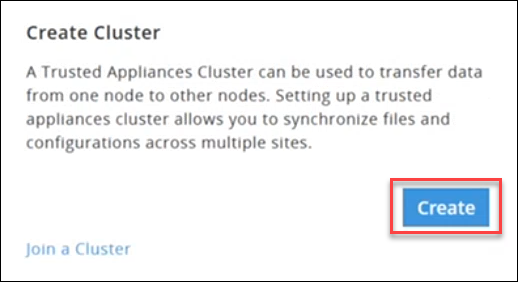

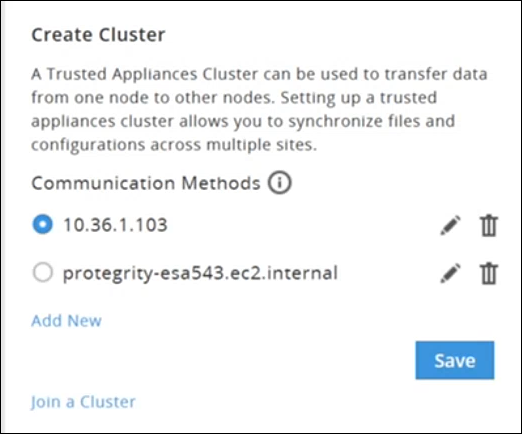

- 4.6.8: Creating a TAC using the Web UI

- 4.6.9: Connection Settings

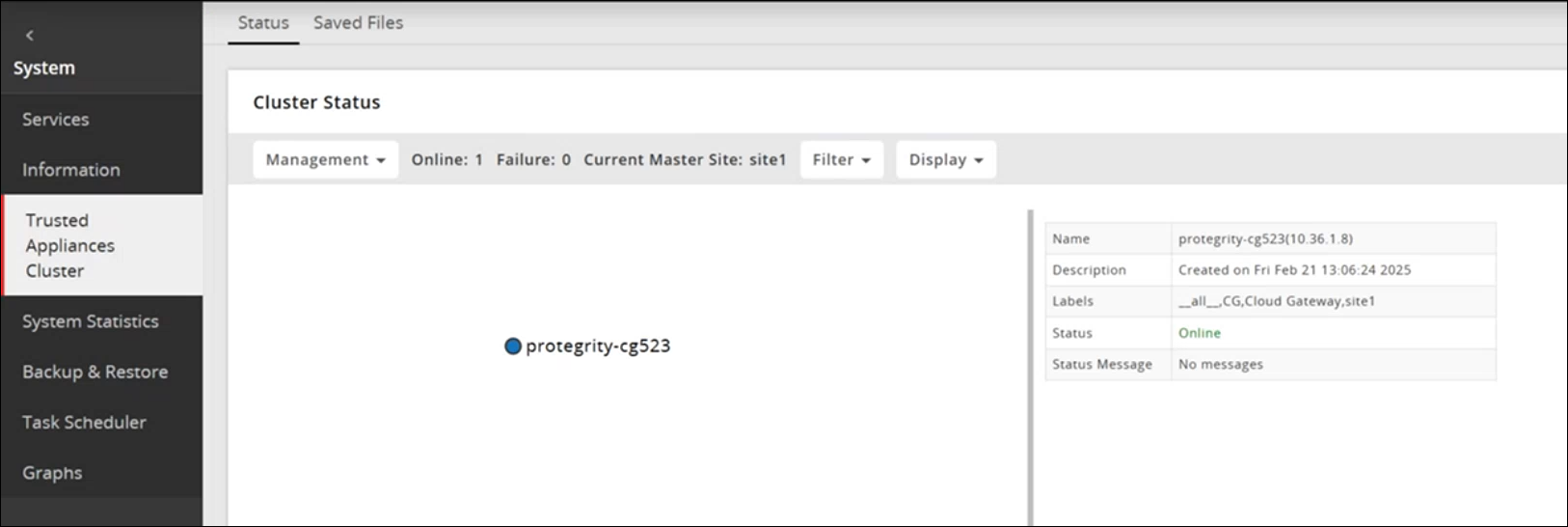

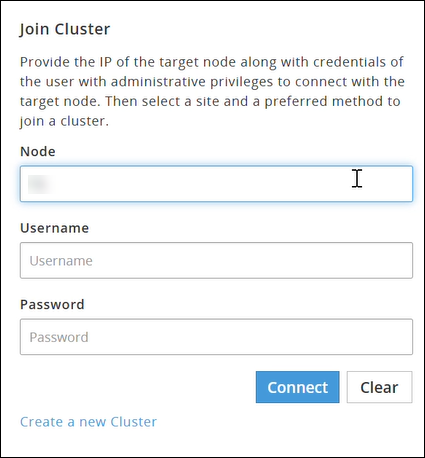

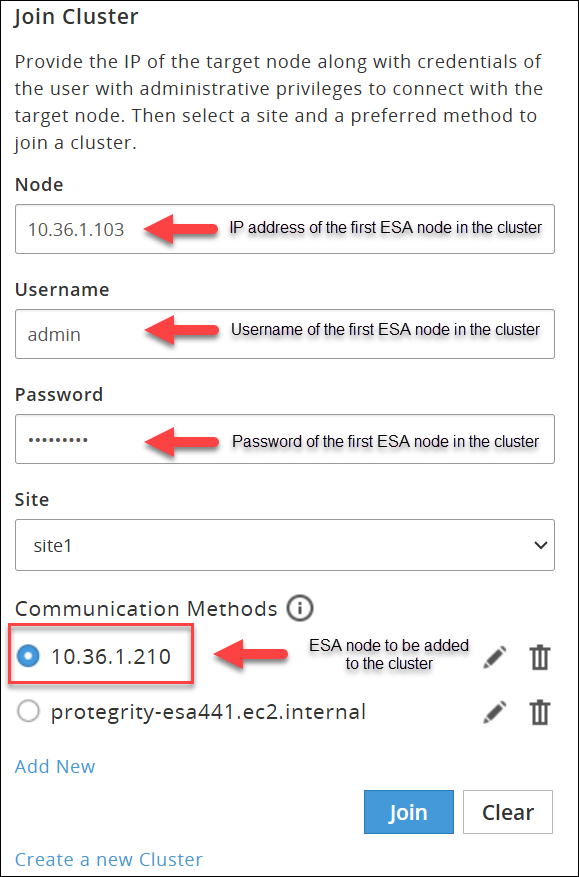

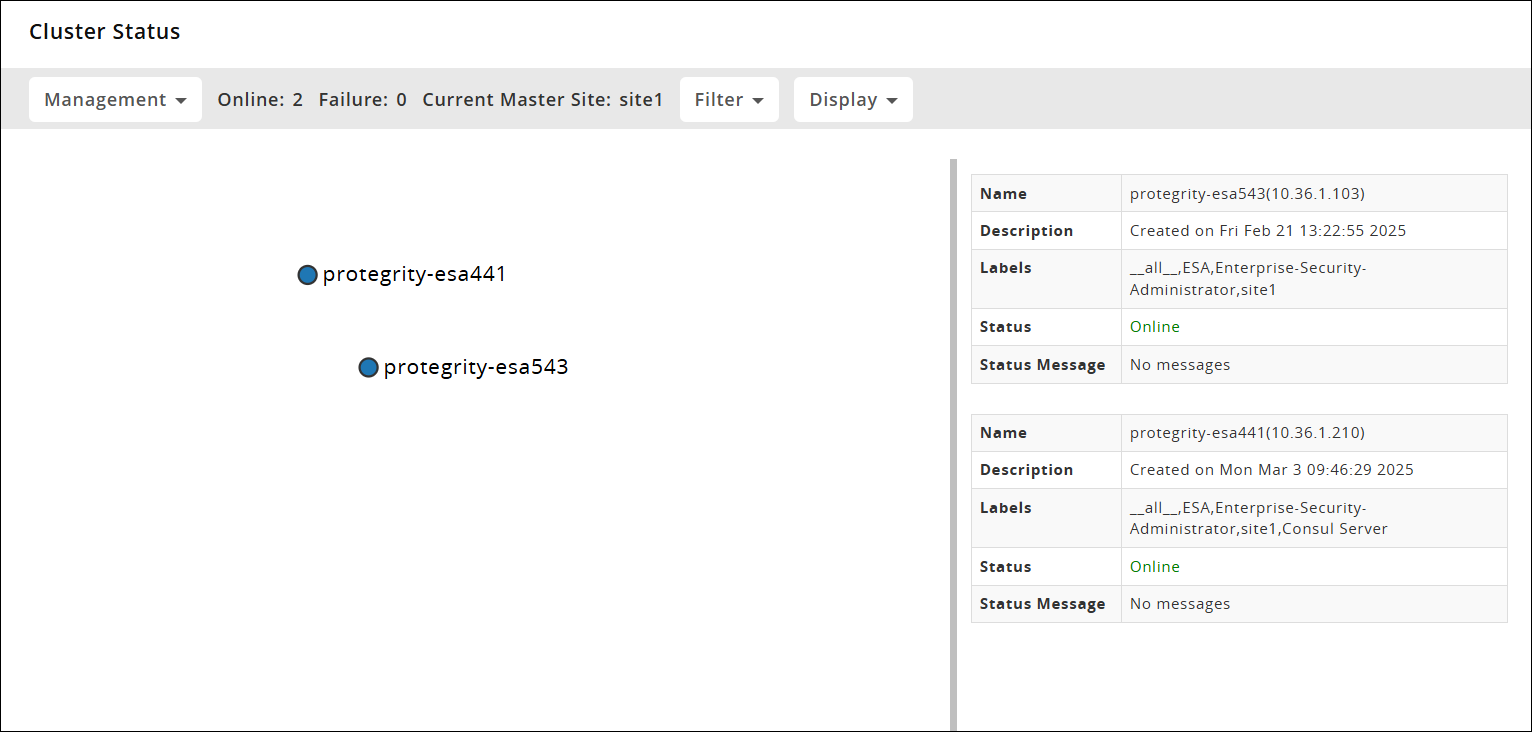

- 4.6.10: Joining an Existing Cluster using the Web UI

- 4.6.11: Managing Communication Methods for Local Node

- 4.6.12: Viewing Cluster Information

- 4.6.13: Removing a Node from the Cluster using the Web UI

- 4.7: Appliance Virtualization

- 4.7.1: Xen Paravirtualization Setup

- 4.7.1.1: Pre-Conversion Tasks

- 4.7.1.2: Paravirtualization Process

- 4.7.2: Xen Server Configuration

- 4.7.3: Installing Xen Tools

- 4.7.4: Xen Source – Xen Community Version

- 4.8: Appliance Hardening

- 4.8.1: Open listening ports

- 4.9: Increasing the Appliance Disk Size

- 4.10: Mandatory Access Control

- 4.10.1: Working with profiles

- 4.10.2: Analyzing events

- 4.10.3: AppArmor permissions

- 4.10.4: Troubleshooting for AppArmor

- 4.11: Accessing Appliances using Single Sign-On (SSO)

- 4.11.1: What is Kerberos

- 4.11.1.1: Implementing Kerberos SSO for Protegrity Appliances

- 4.11.1.1.1: Prerequisites

- 4.11.1.1.2: Setting up Kerberos SSO

- 4.11.1.1.3: Logging to the Appliance

- 4.11.1.1.4: Scenarios for Implementing Kerberos SSO

- 4.11.1.1.5: Viewing Logs

- 4.11.1.1.6: Feature Limitations

- 4.11.1.1.7: Troubleshooting

- 4.11.2: What is SAML

- 4.11.2.1: Setting up SAML SSO

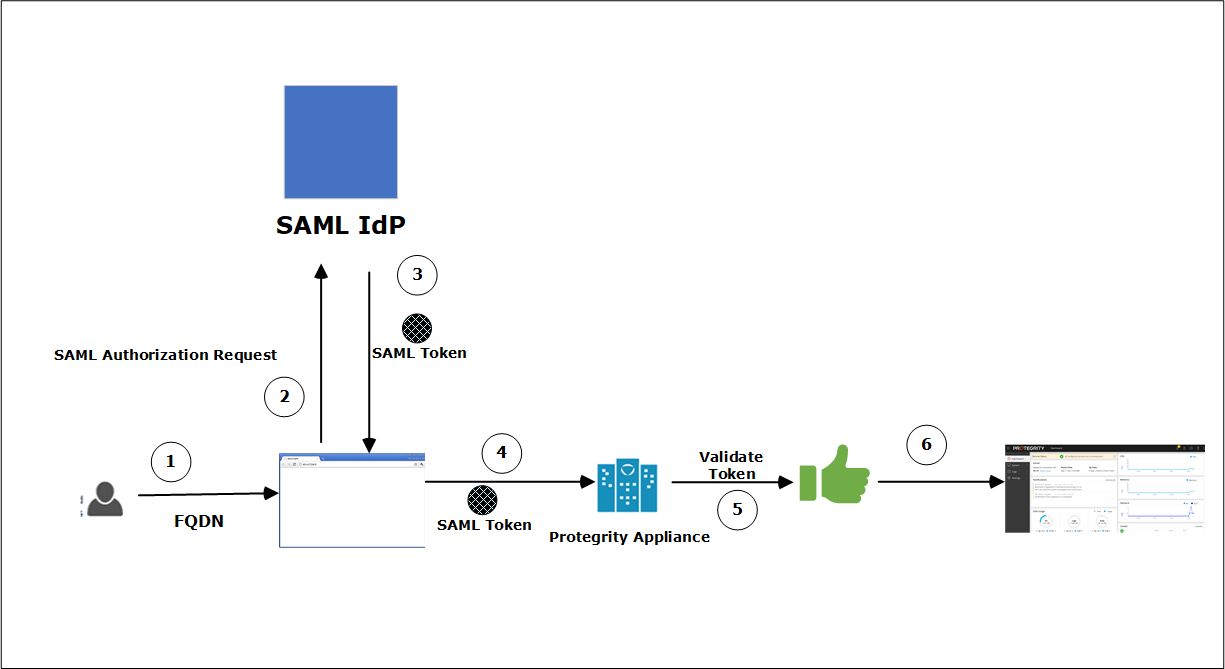

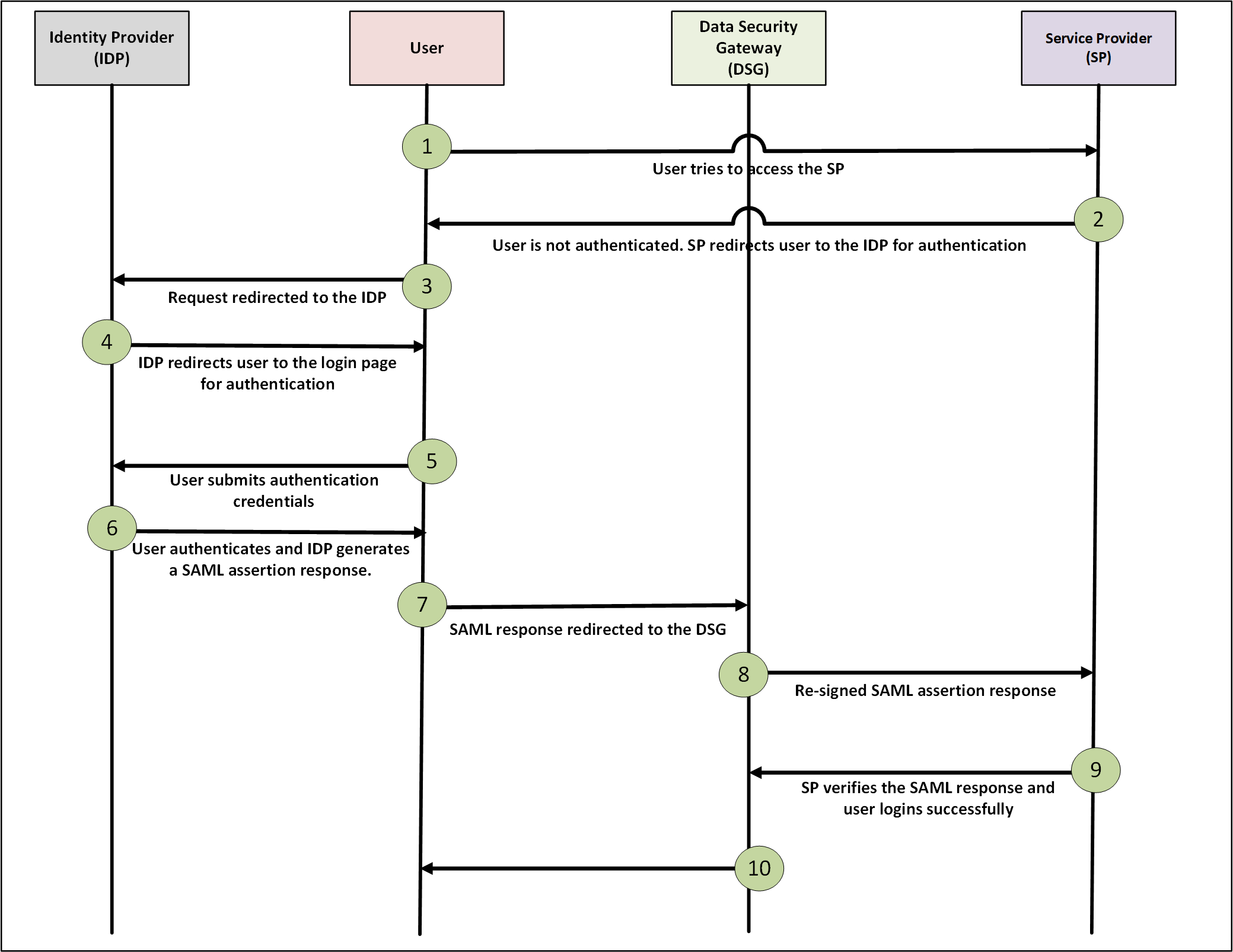

- 4.11.2.1.1: Workflow of SAML SSO on an Appliance

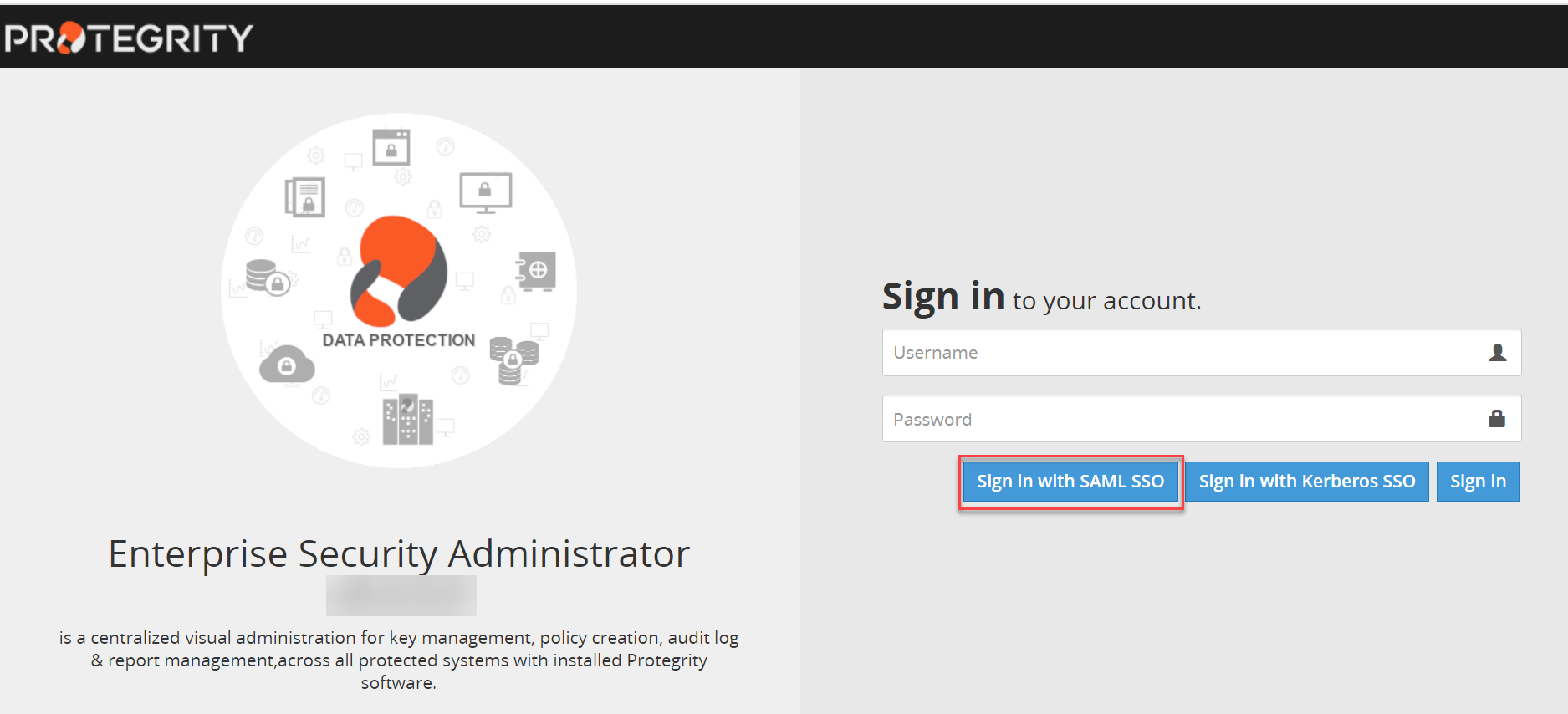

- 4.11.2.1.2: Logging on to the Appliance

- 4.11.2.1.3: Implementing SAML SSO on Azure IdP - An Example

- 4.11.2.1.4: Implementing SSO with a Load Balancer Setup

- 4.11.2.1.5: Viewing Logs

- 4.11.2.1.6: Feature Limitations

- 4.11.2.1.7: Troubleshooting

- 4.12: Sample External Directory Configurations

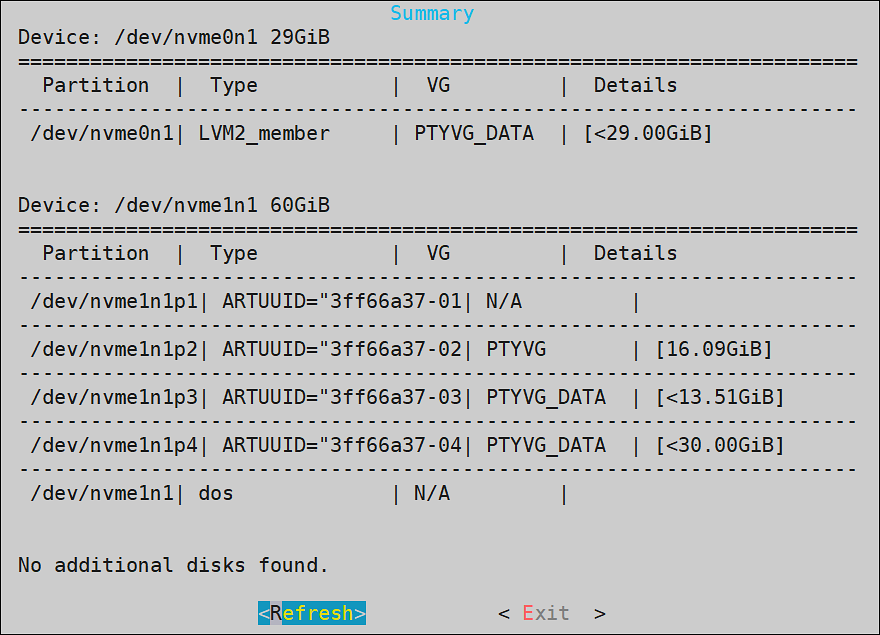

- 4.13: Partitioning of Disk on an Appliance

- 4.13.1: Partitioning the OS in the UEFI Boot Option

- 4.13.2: Partitioning the OS with the BIOS Boot Option

- 4.14: Working with Keys

- 4.15: Working with Certificates

- 4.16: Managing policies

- 4.17: Working with Insight

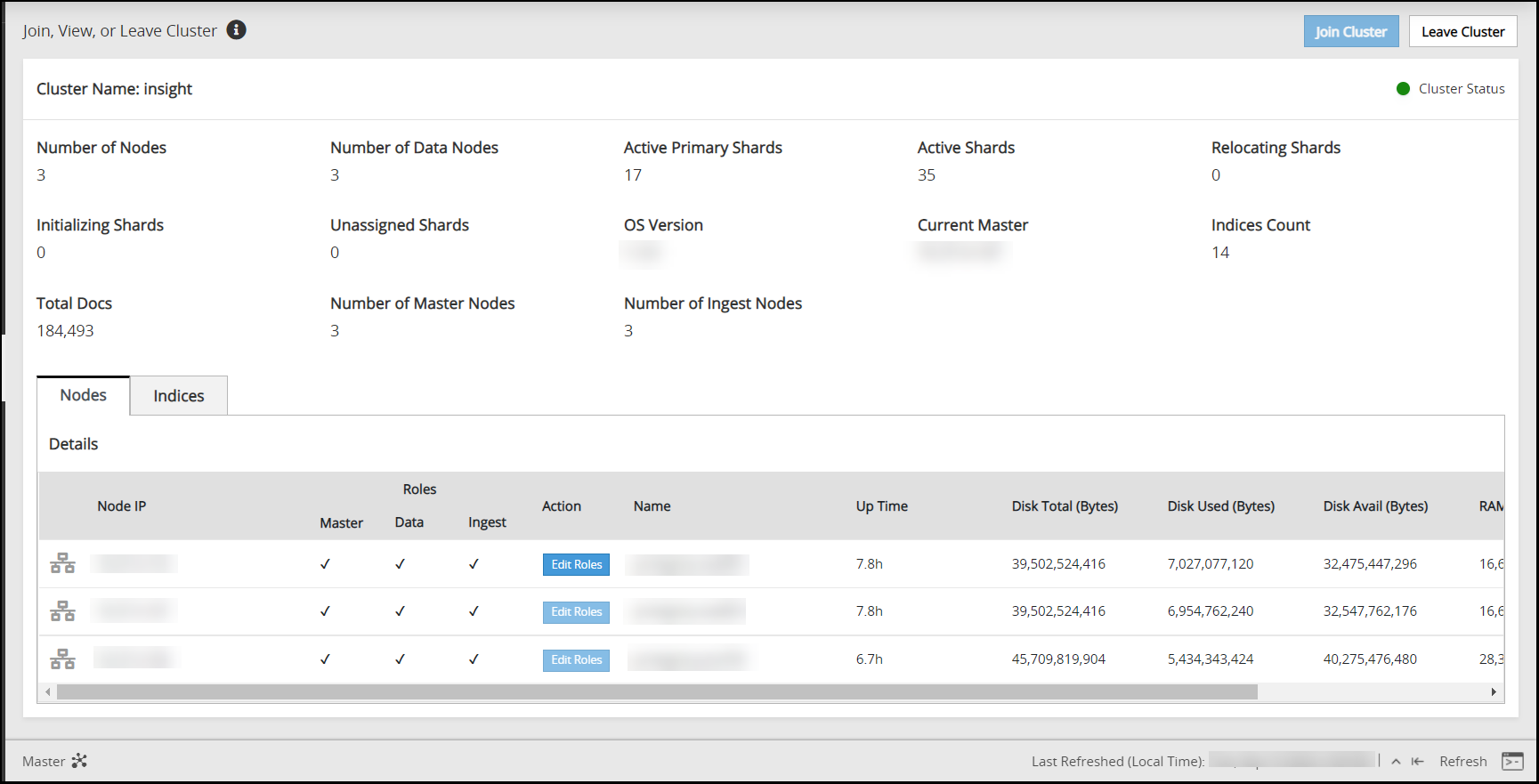

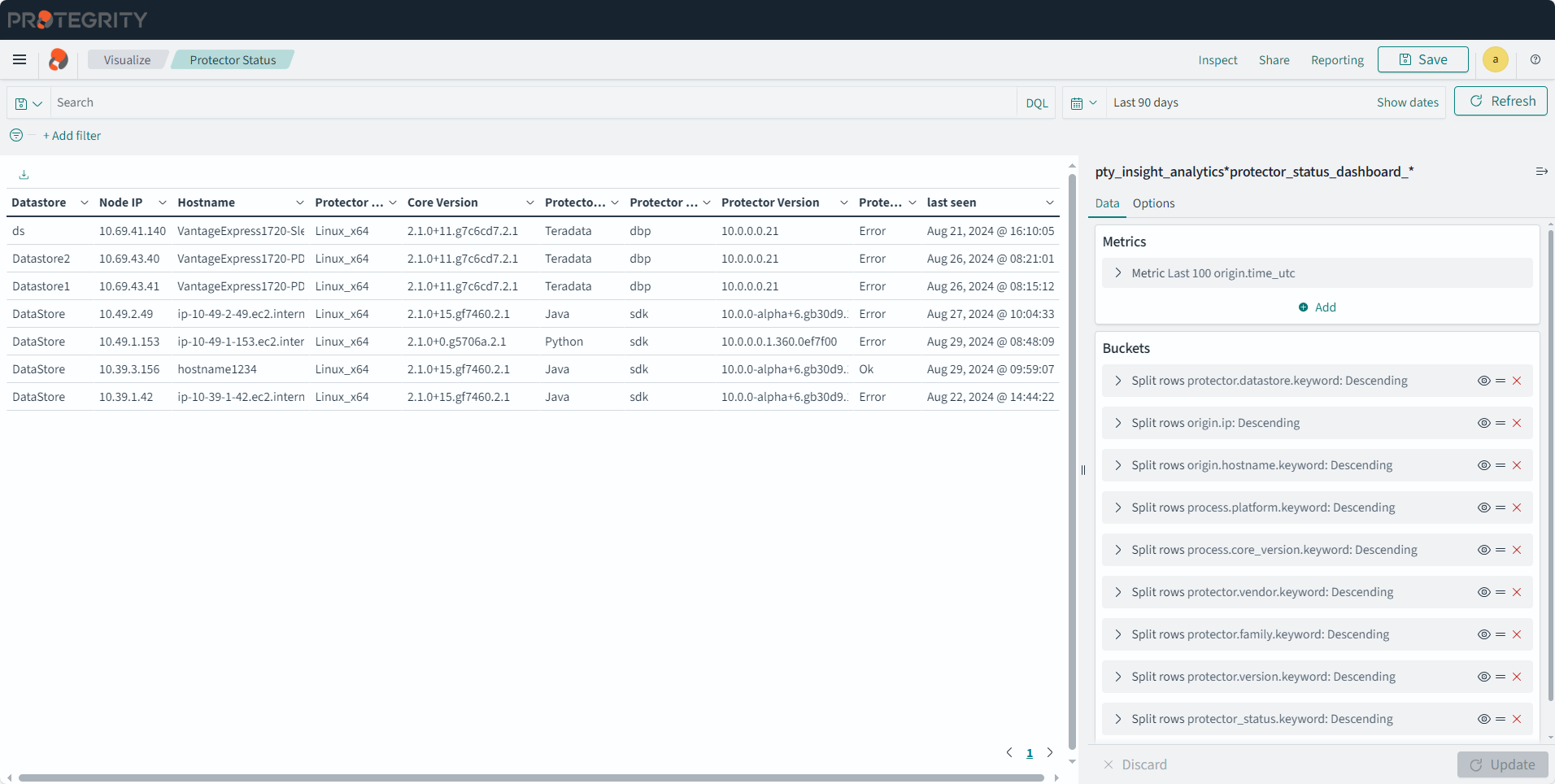

- 4.17.1: Understanding the Audit Store node status

- 4.17.2: Accessing the Insight Dashboards

- 4.17.3: Working with Audit Store nodes

- 4.17.4: Working with Discover

- 4.17.5: Working with Audit Store roles

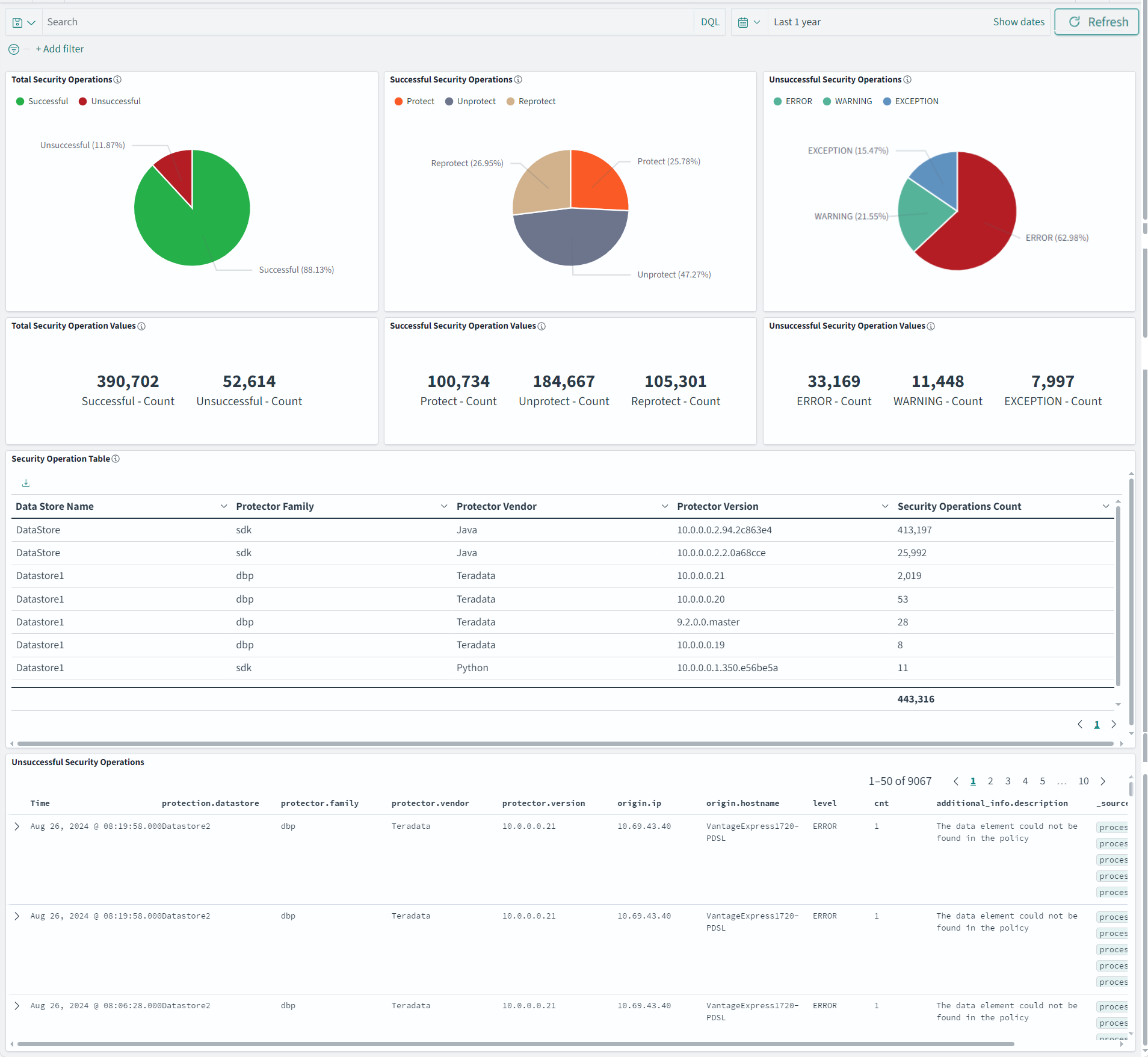

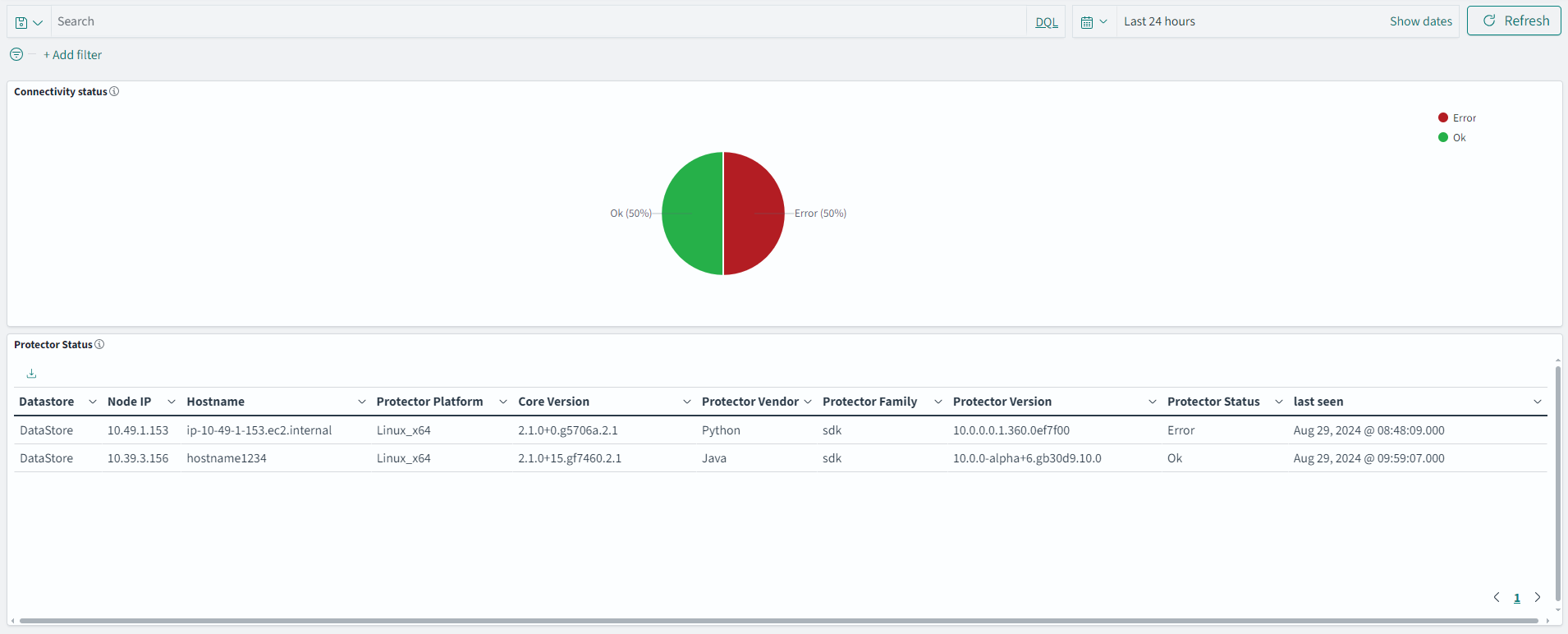

- 4.17.6: Understanding Insight Dashboards

- 4.17.7: Working with Protegrity dashboards

- 4.17.8: Working with Protegrity visualizations

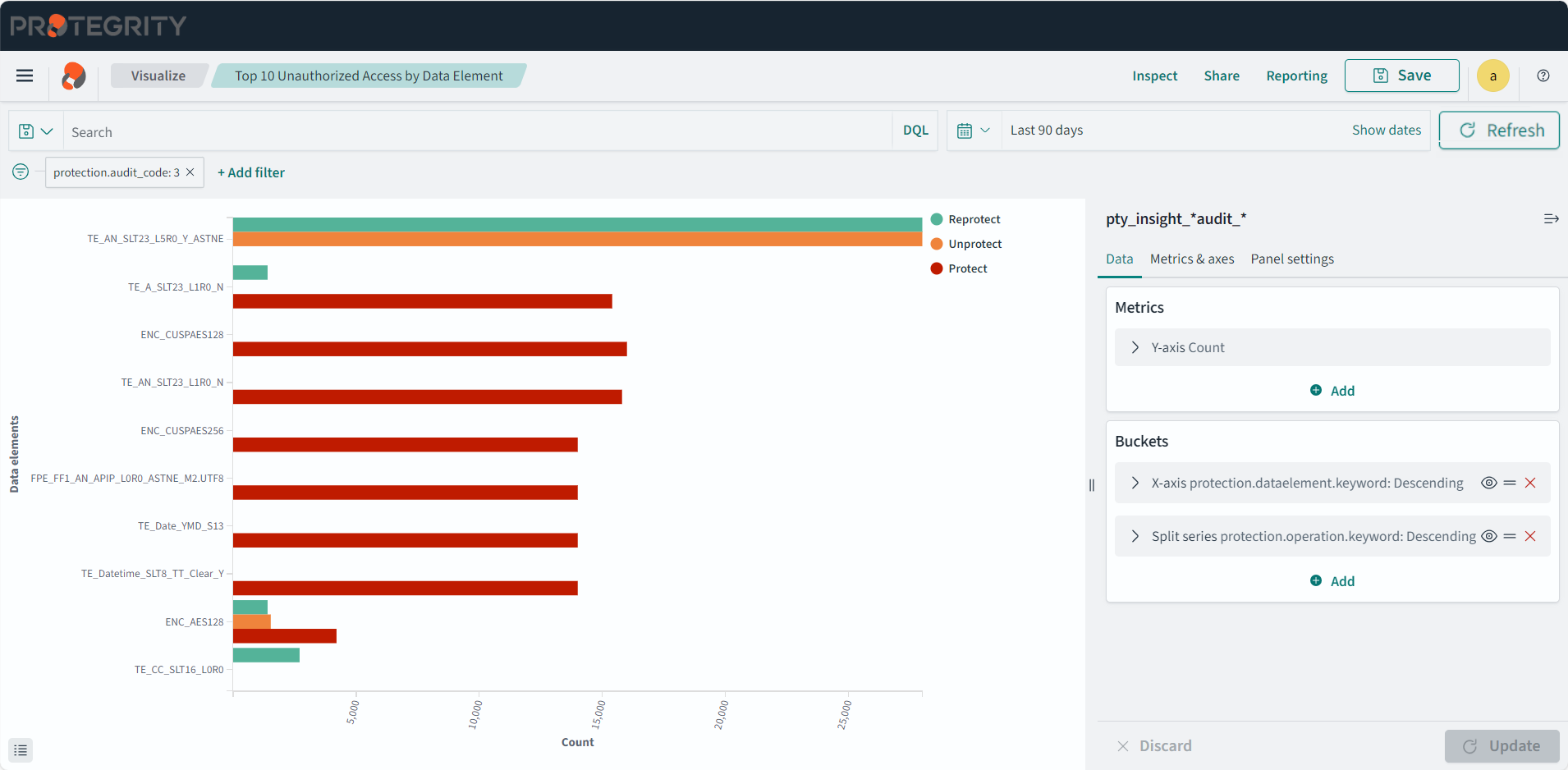

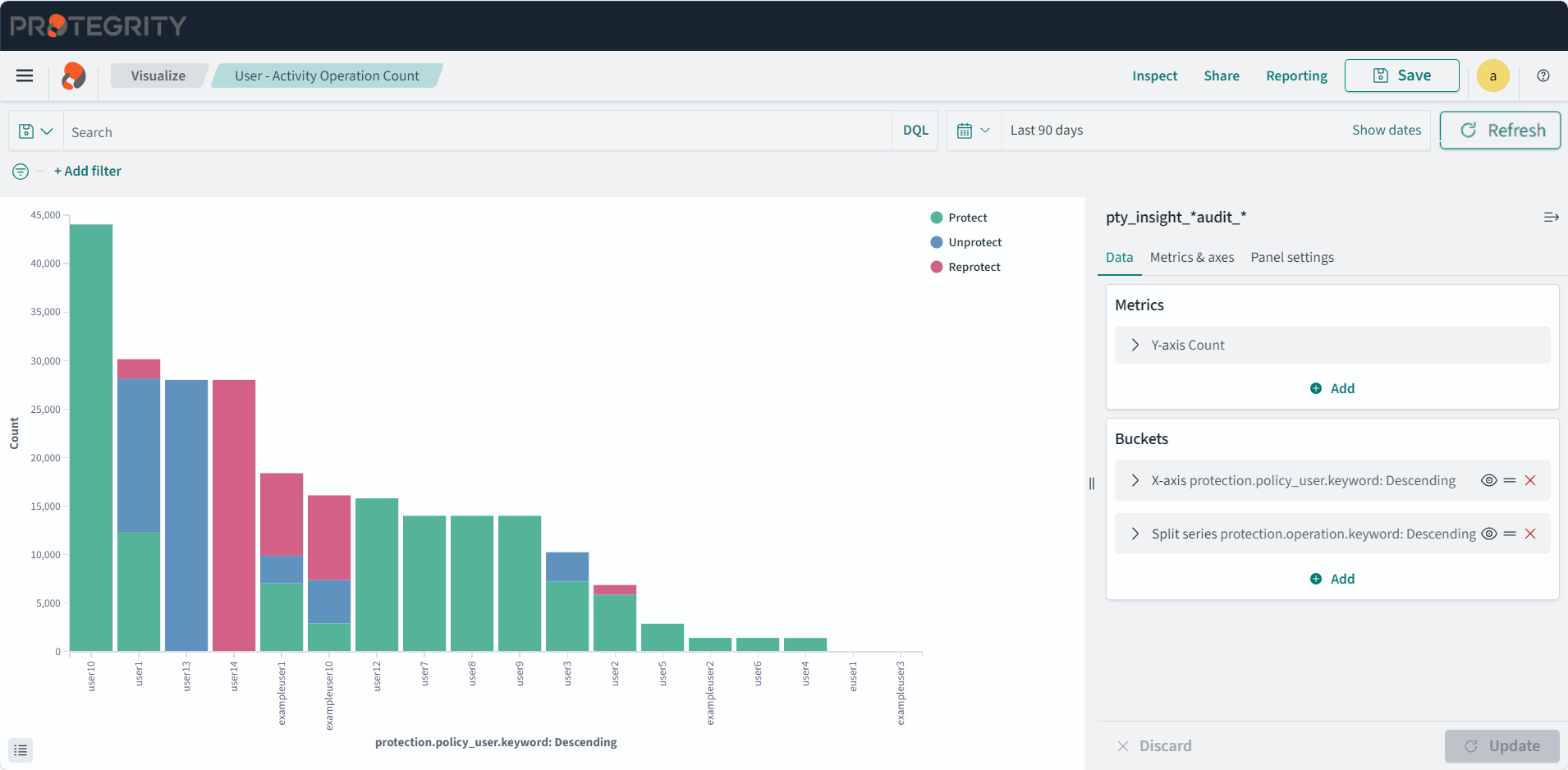

- 4.17.9: Visualization templates

- 4.18: Maintaining Insight

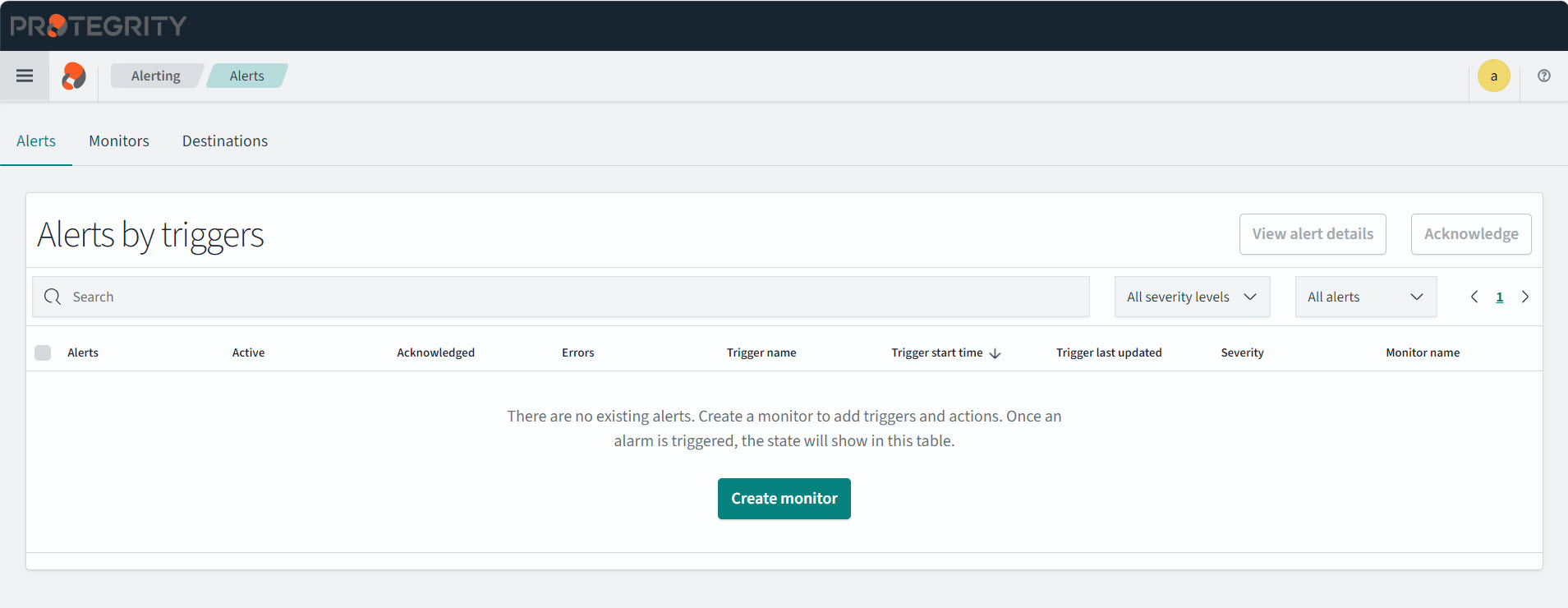

- 4.18.1: Working with alerts

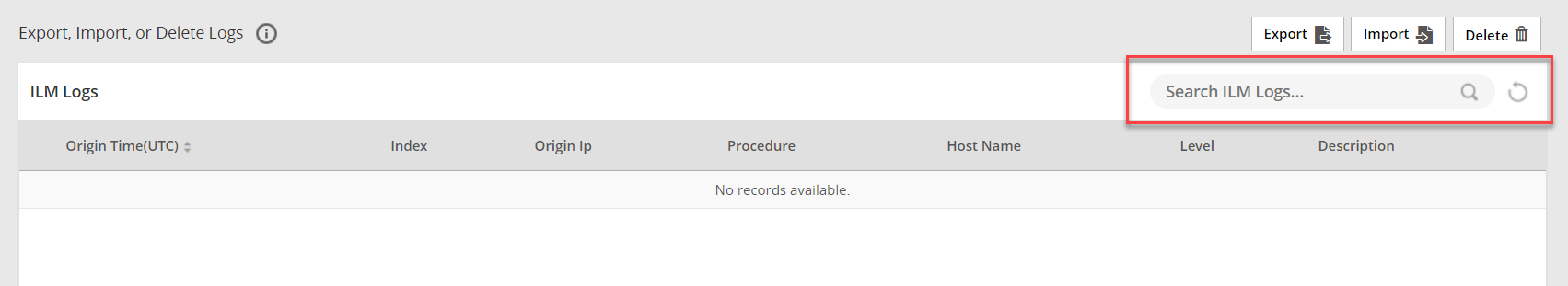

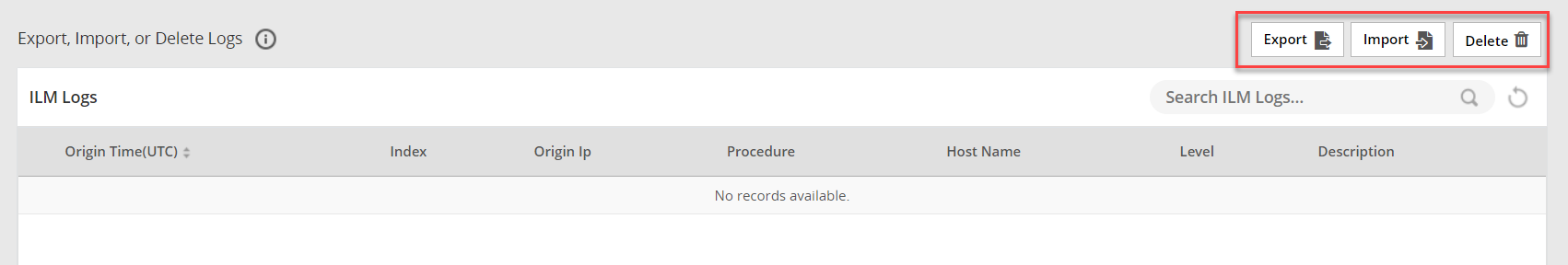

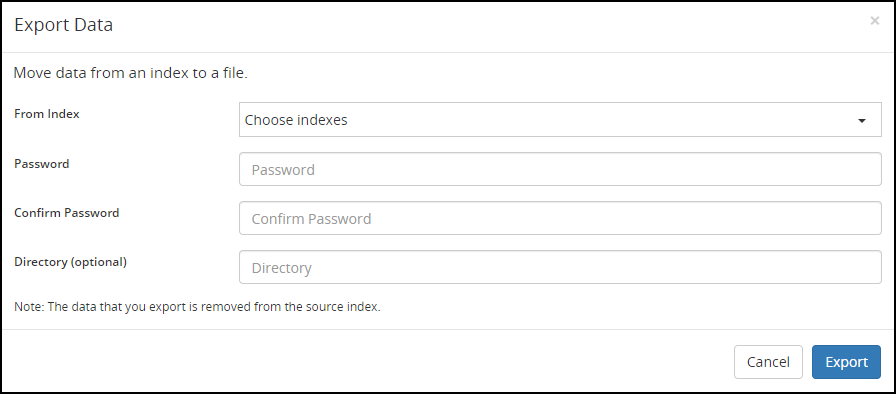

- 4.18.2: Index lifecycle management (ILM)

- 4.18.3: Viewing policy reports

- 4.18.4: Verifying signatures

- 4.18.5: Using the scheduler

- 4.19: Installing Protegrity Appliances on Cloud Platforms

- 4.19.1: Installing ESA on Amazon Web Services (AWS)

- 4.19.1.1: Verifying Prerequisites

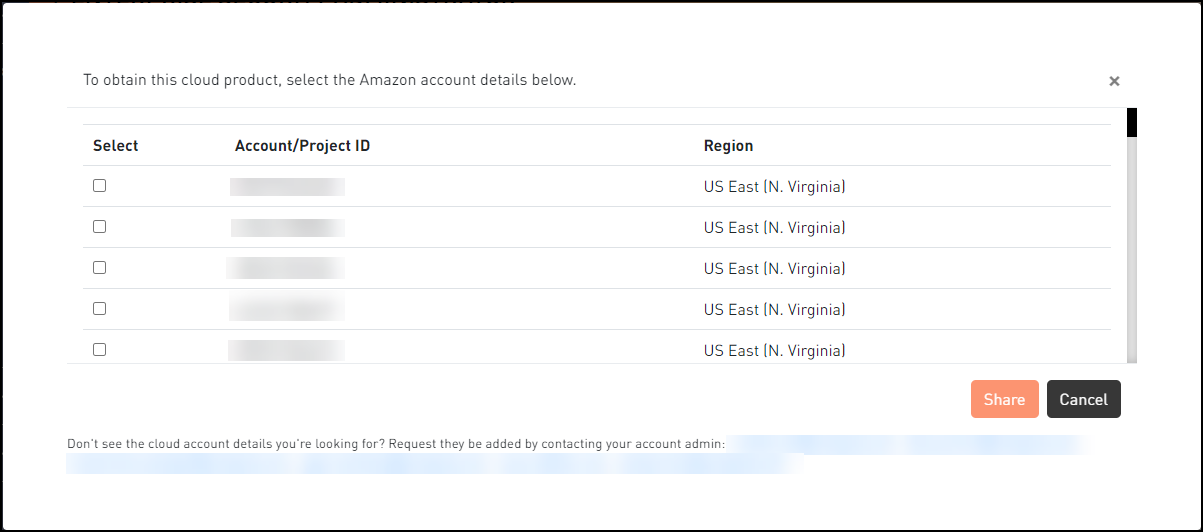

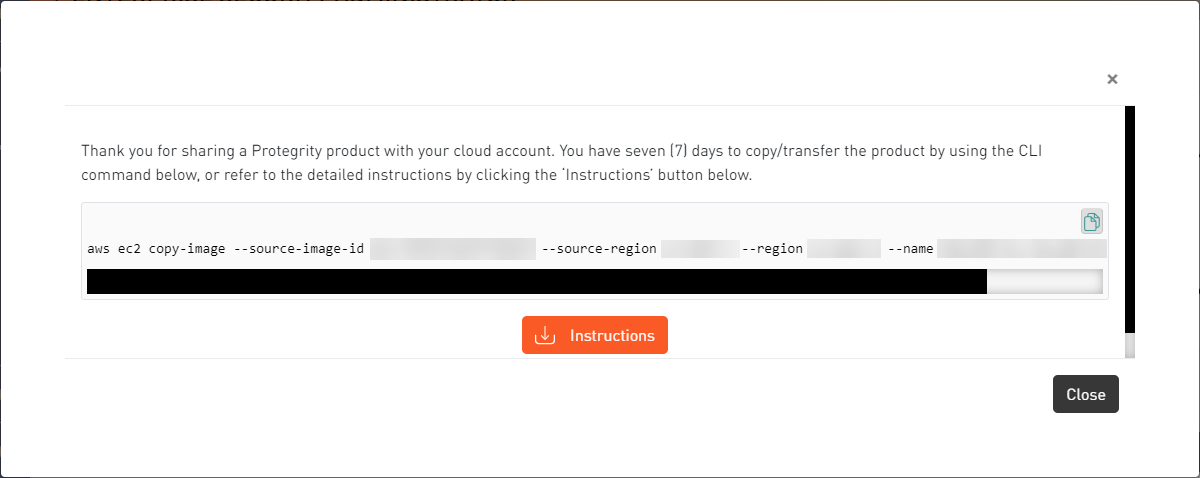

- 4.19.1.2: Obtaining the AMI

- 4.19.1.3: Loading the Protegrity Appliance from an Amazon Machine Image (AMI)

- 4.19.1.3.1: Creating an ESA Instance from the AMI

- 4.19.1.3.2: Configuring the Virtual Private Cloud (VPC)

- 4.19.1.3.3: Adding a Subnet to the Virtual Private Cloud (VPC)

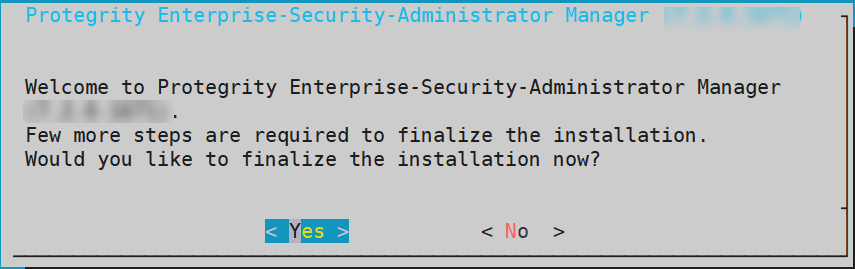

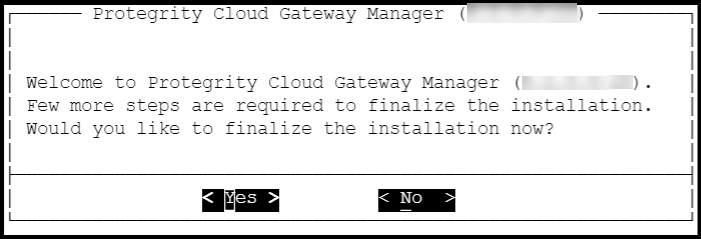

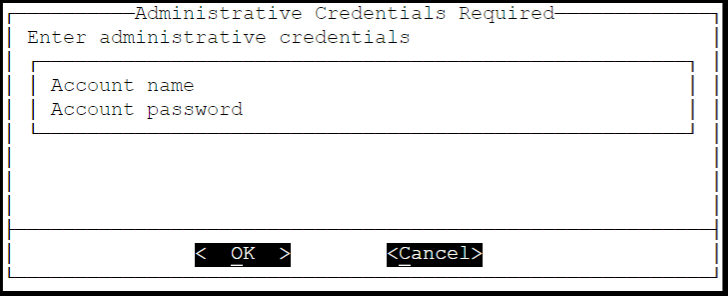

- 4.19.1.3.4: Finalizing the Installation of Protegrity Appliance on the Instance

- 4.19.1.3.4.1: Logging to the AWS Instance using the SSH Client

- 4.19.1.3.4.2: Finalizing an AWS Instance

- 4.19.1.4: Backing up and Restoring Data on AWS

- 4.19.1.5: Increasing Disk Space on the Appliance

- 4.19.1.6: Best Practices for Using Protegrity Appliances on AWS

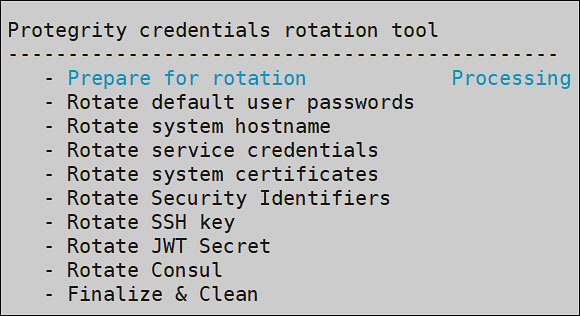

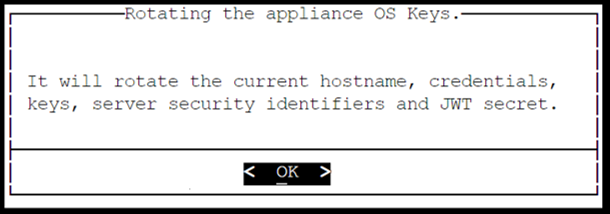

- 4.19.1.7: Running the Appliance-Rotation-Tool

- 4.19.1.8: Working with Cloud-based Applications

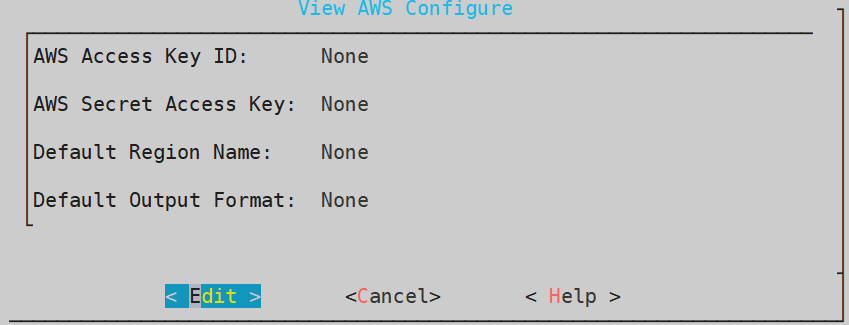

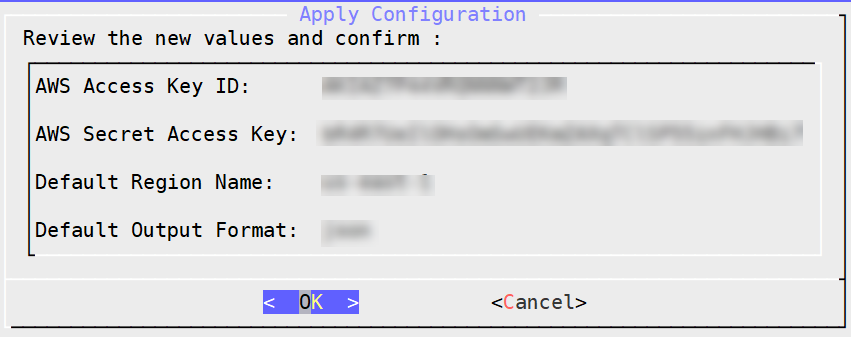

- 4.19.1.8.1: Configuring Access for AWS Resources

- 4.19.1.8.2: Working with CloudWatch Console

- 4.19.1.8.2.1: Integrating CloudWatch with Protegrity Appliance

- 4.19.1.8.2.2: Configuring Custom Logs on AWS CloudWatch Console

- 4.19.1.8.2.3: Toggling the CloudWatch Service

- 4.19.1.8.2.4: Reloading the AWS CloudWatch Integration

- 4.19.1.8.2.5: Viewing Logs on AWS CloudWatch Console

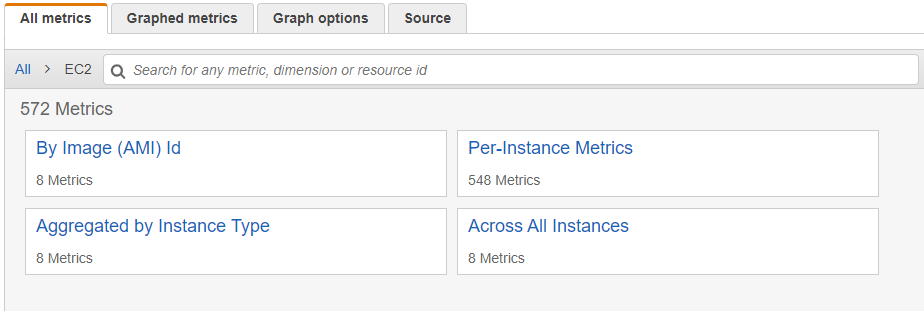

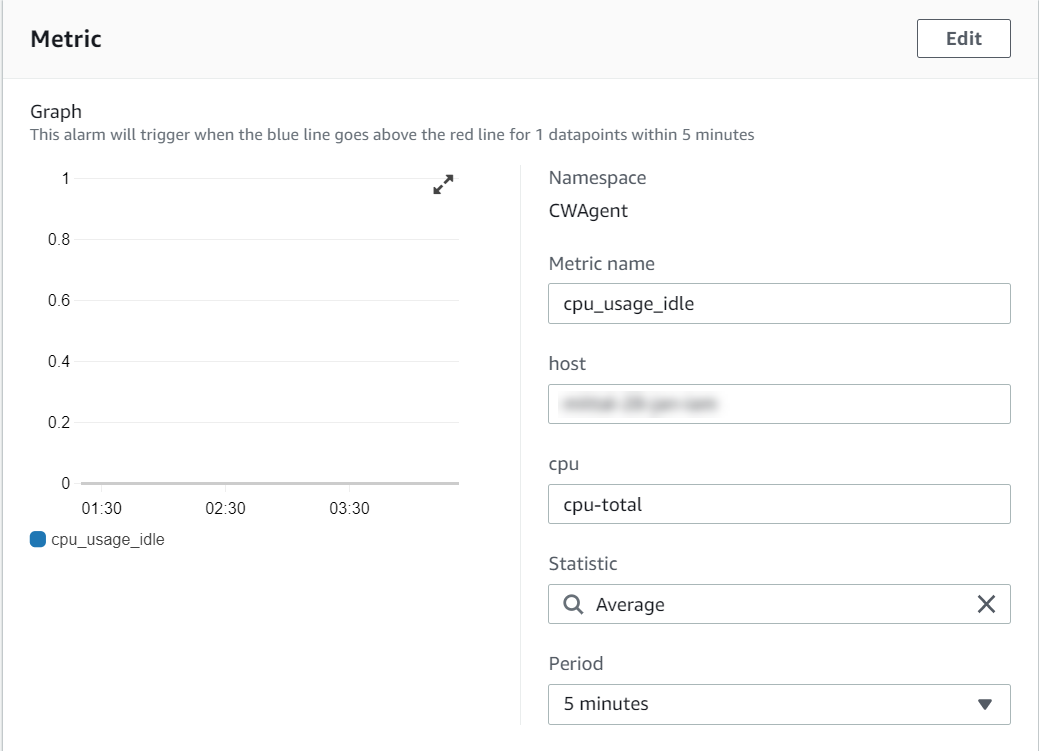

- 4.19.1.8.2.6: Working with AWS CloudWatch Metrics

- 4.19.1.8.2.7: Viewing Metrics on AWS CloudWatch Console

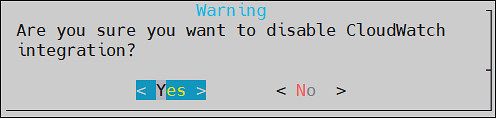

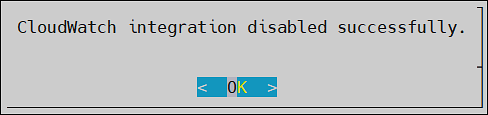

- 4.19.1.8.2.8: Disabling AWS CloudWatch Integration

- 4.19.1.8.3: Working with the AWS Cloud Utility

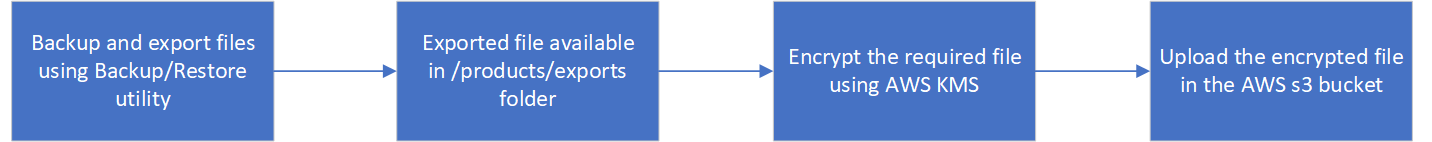

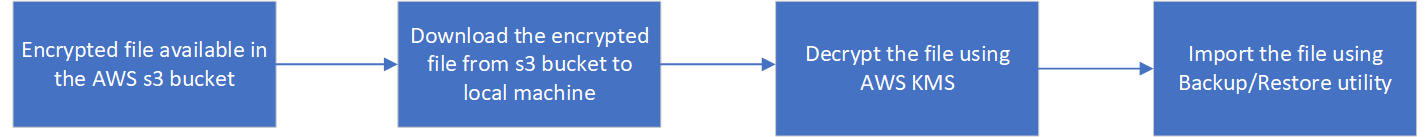

- 4.19.1.8.3.1: Storing Backup Files on the AWS S3 Bucket

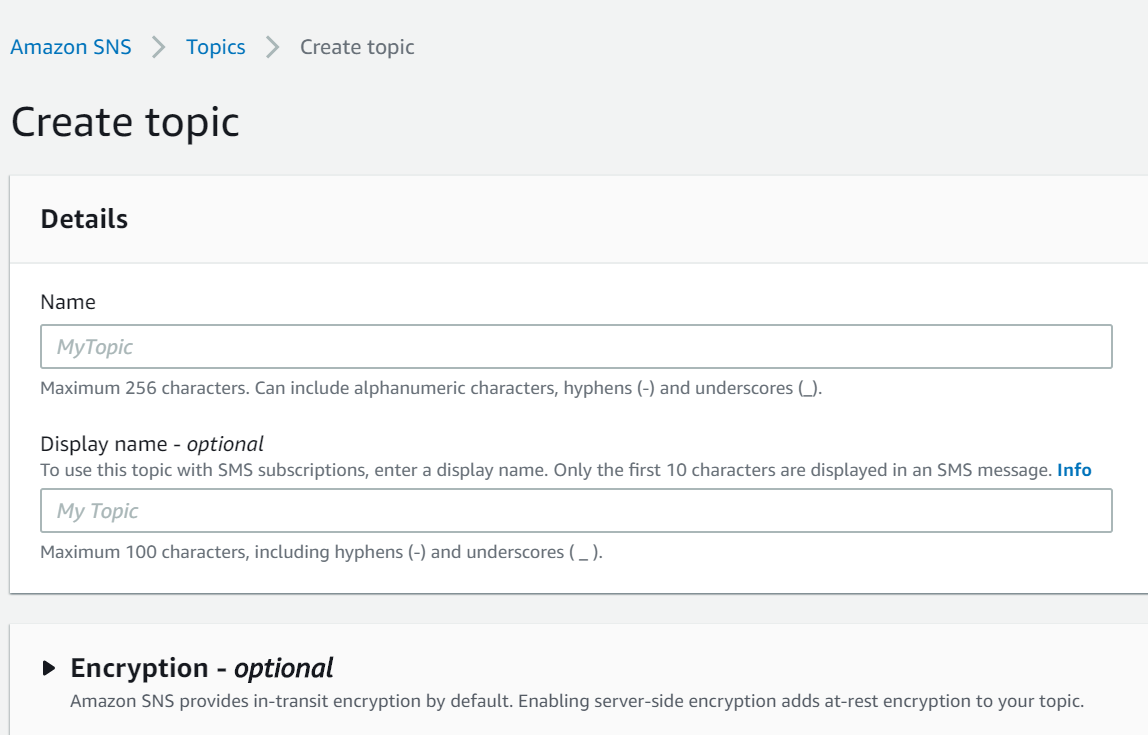

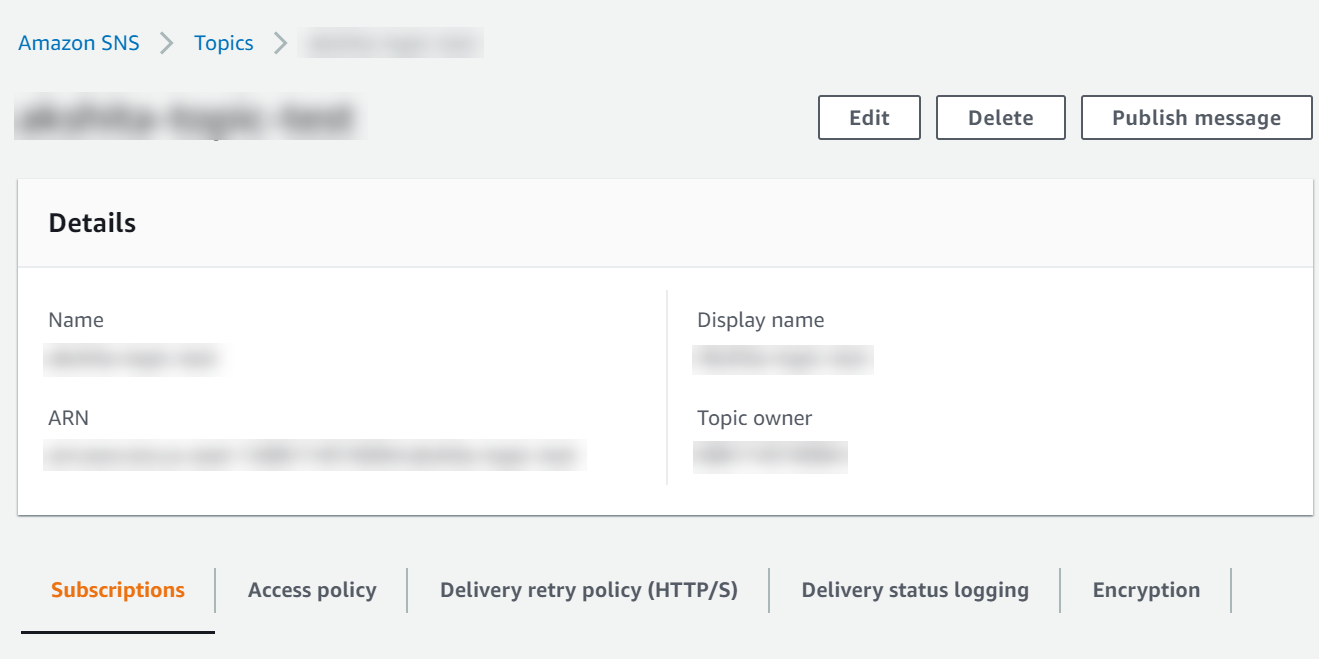

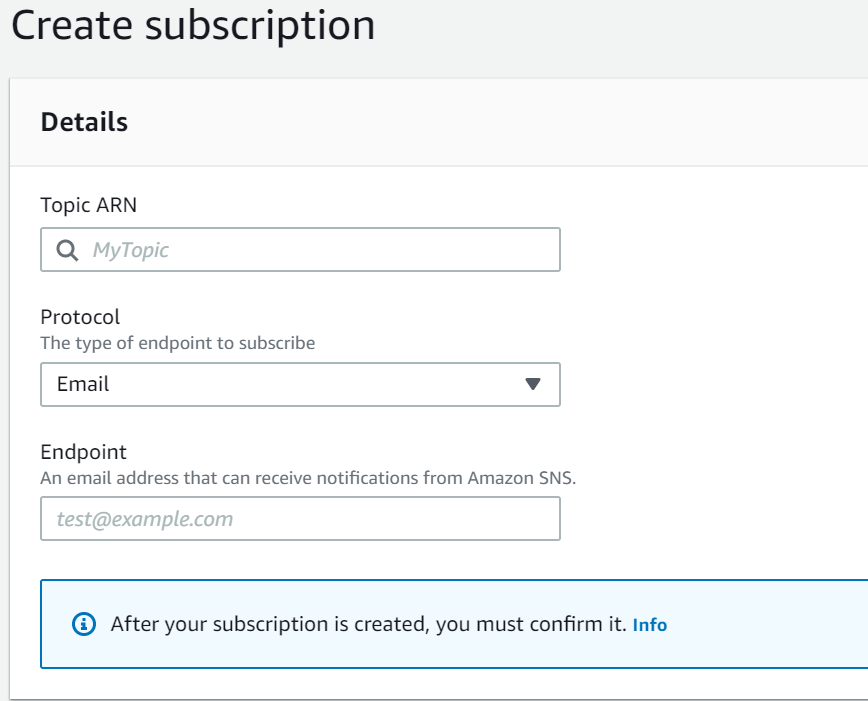

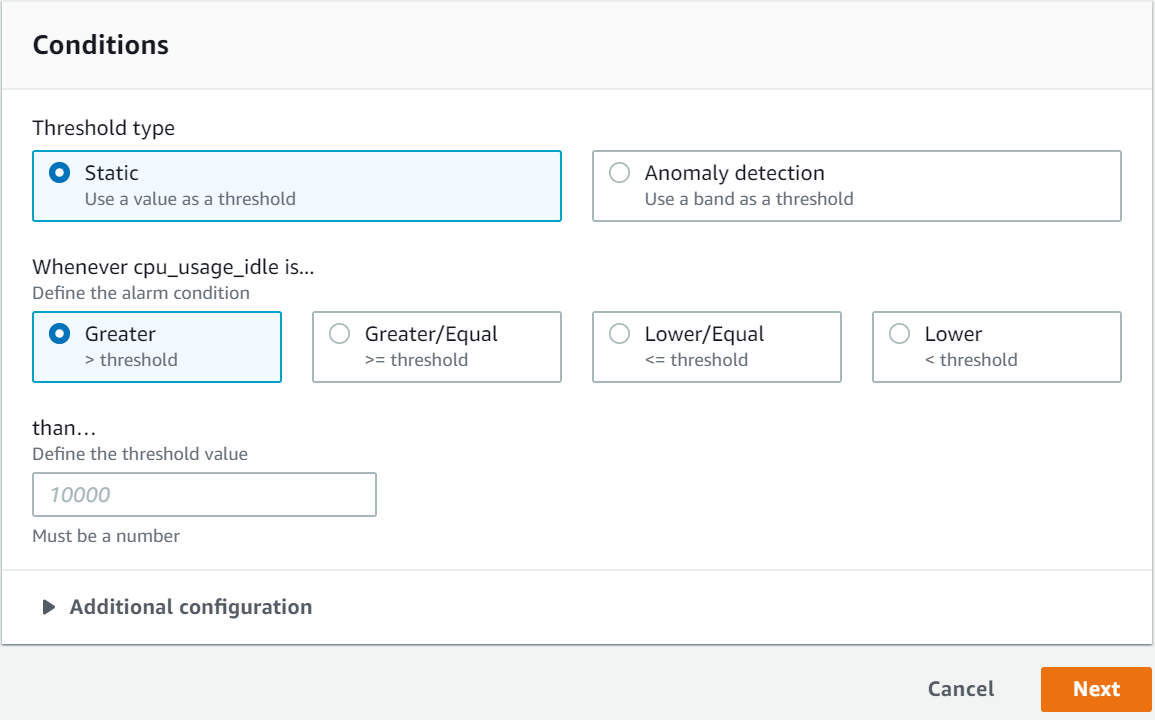

- 4.19.1.8.3.2: Set Metrics Based Alarms Using the AWS Management Console

- 4.19.1.8.4: FAQs for AWS Cloud Utility

- 4.19.1.8.5: Working with AWS Systems Manager

- 4.19.1.8.5.1: Setting up AWS Systems Manager

- 4.19.1.8.5.2: FAQs on AWS Systems Manager

- 4.19.1.8.6: Troubleshooting for the AWS Cloud Utility

- 4.19.2: Installing ESA on Google Cloud Platform (GCP)

- 4.19.2.1: Verifying Prerequisites

- 4.19.2.2: Configuring the Virtual Private Cloud (VPC)

- 4.19.2.3: Obtaining the GCP Image

- 4.19.2.4: Converting the Raw Disk to a GCP Image

- 4.19.2.5: Loading the Protegrity ESA Appliance from a GCP Image

- 4.19.2.5.1: Creating a VM Instance from an Image

- 4.19.2.5.2: Creating a VM Instance from a Disk

- 4.19.2.5.3: Accessing the Appliance

- 4.19.2.6: Finalizing the ESA Installation on the Instance

- 4.19.2.7: Deploying the Instance of the Protegrity Appliance with the Protectors

- 4.19.2.8: Backing up and Restoring Data on GCP

- 4.19.2.9: Increasing Disk Space on the Appliance

- 4.19.3: Installing Protegrity Appliances on Azure

- 4.19.3.1: Verifying Prerequisites

- 4.19.3.2: Azure Cloud Utility

- 4.19.3.3: Setting up Azure Virtual Network

- 4.19.3.4: Creating a Resource Group

- 4.19.3.5: Creating a Storage Account

- 4.19.3.6: Creating a Container

- 4.19.3.7: Obtaining the Azure BLOB

- 4.19.3.8: Creating Image from the Azure BLOB

- 4.19.3.9: Creating a VM from the Image

- 4.19.3.10: Accessing the Appliance

- 4.19.3.11: Finalizing the Installation of Protegrity Appliance on the Instance

- 4.19.3.12: Accelerated Networking

- 4.19.3.13: Backing up and Restoring VMs on Azure

- 4.19.3.14: Deploying the ESA Instance with the Protectors

- 4.20: Architectures

- 5: Data Security Gateway (DSG)

- 5.1: Protegrity Gateway Technology

- 5.2: Protegrity Gateway Product

- 5.3: Technical Architecture

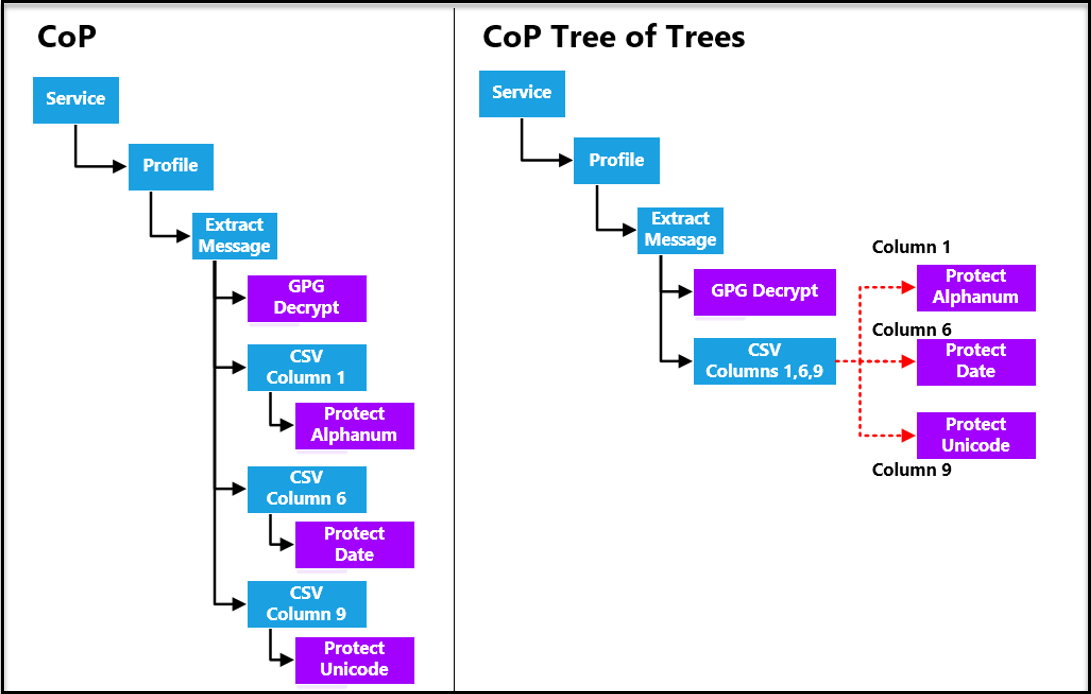

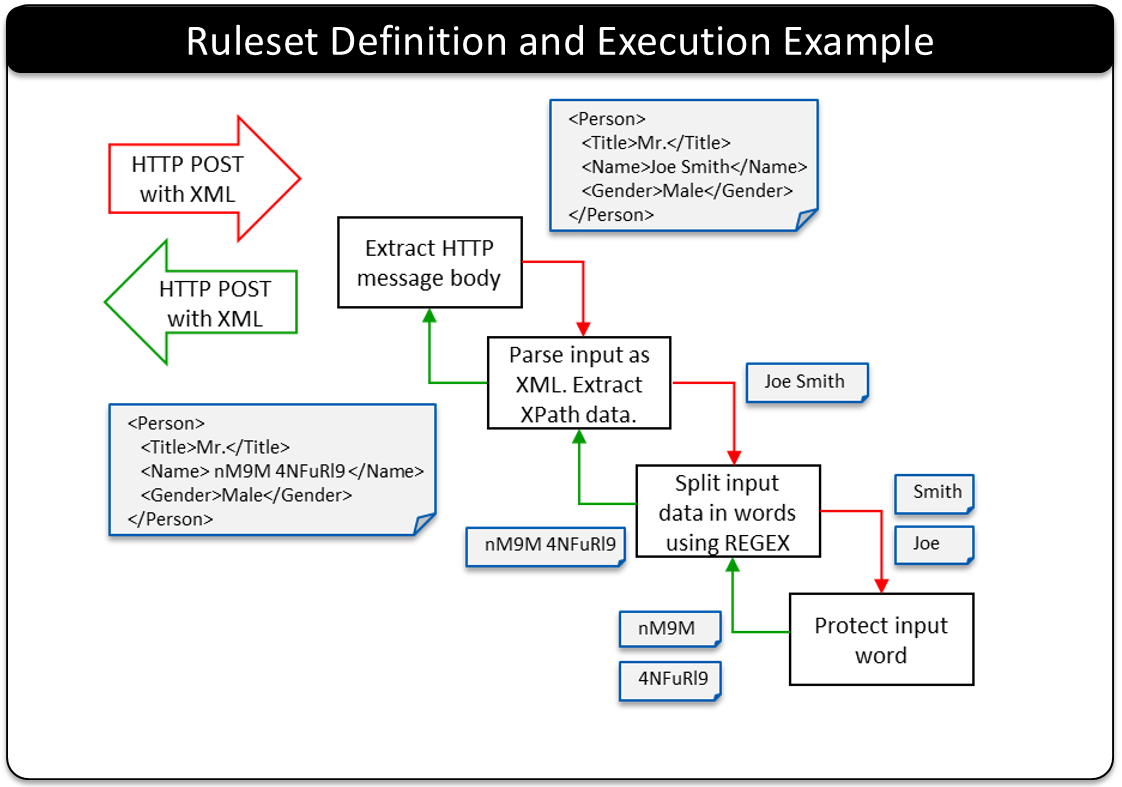

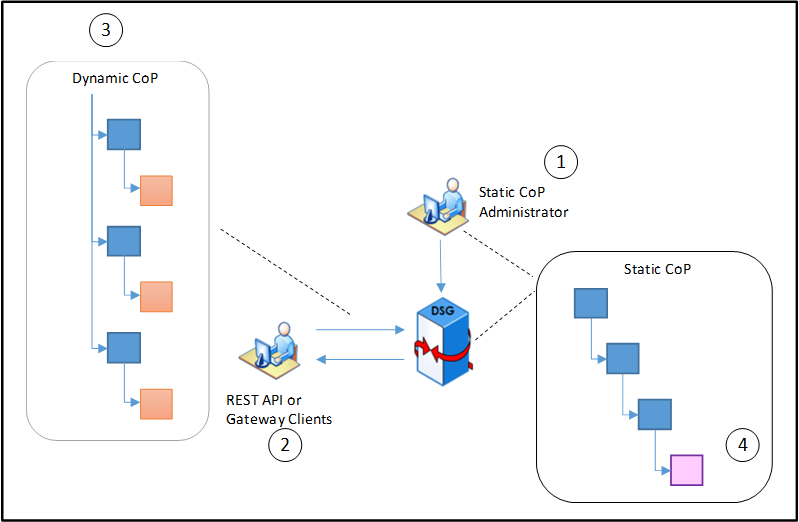

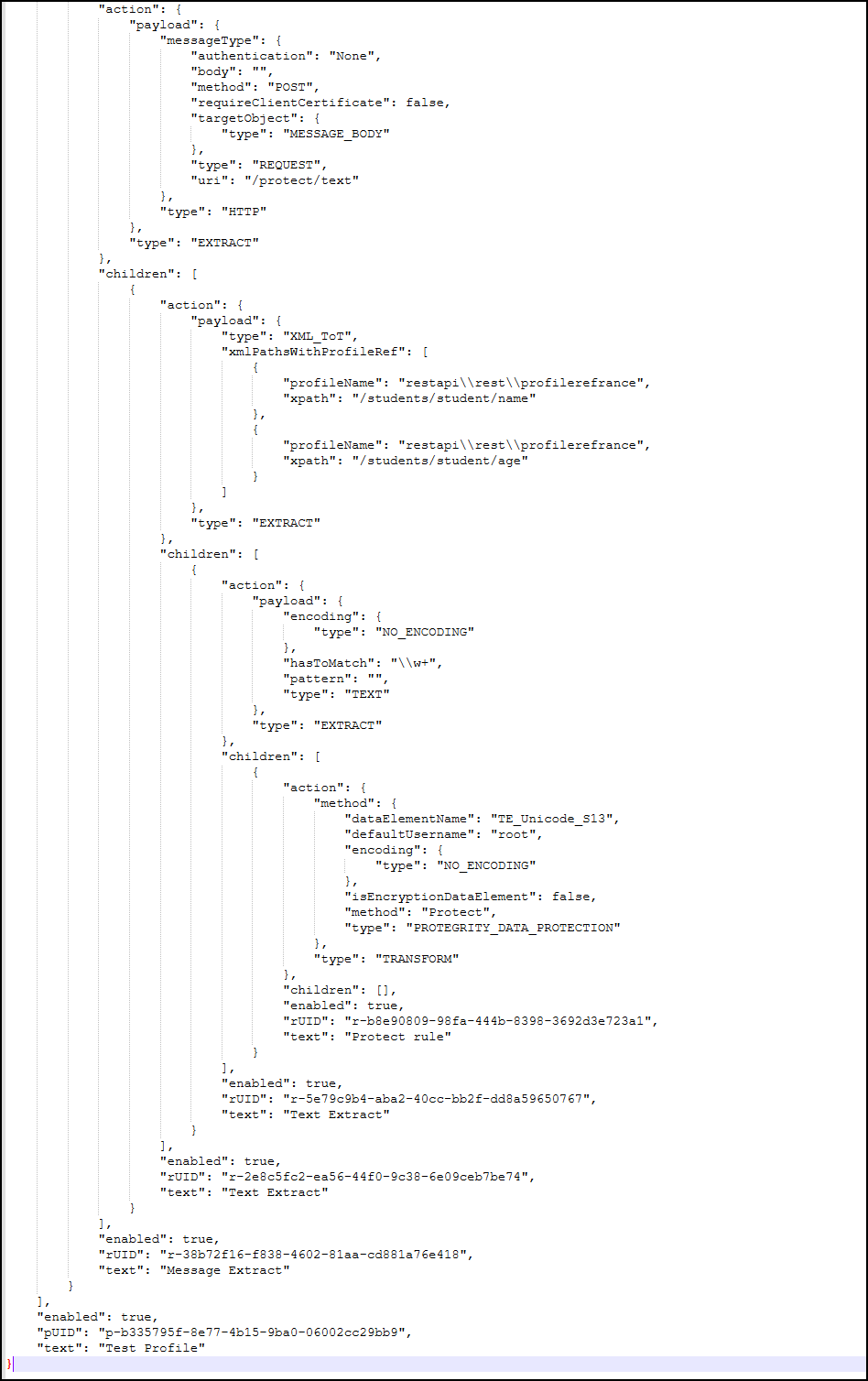

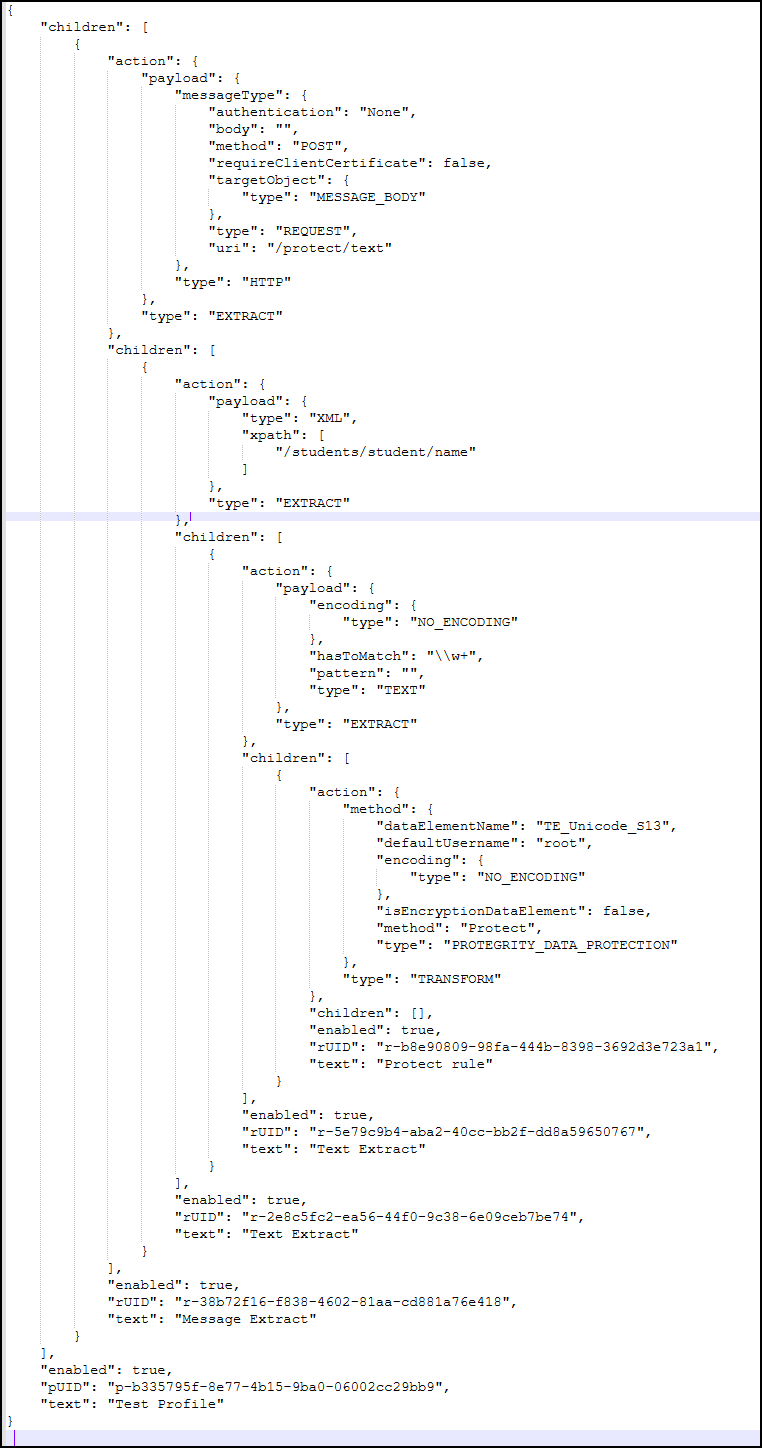

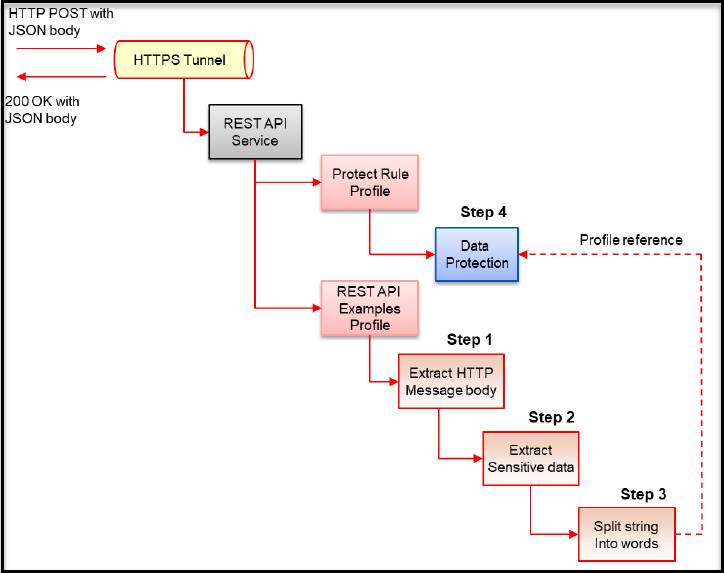

- 5.3.1: Configuration over Programming (CoP) Architecture

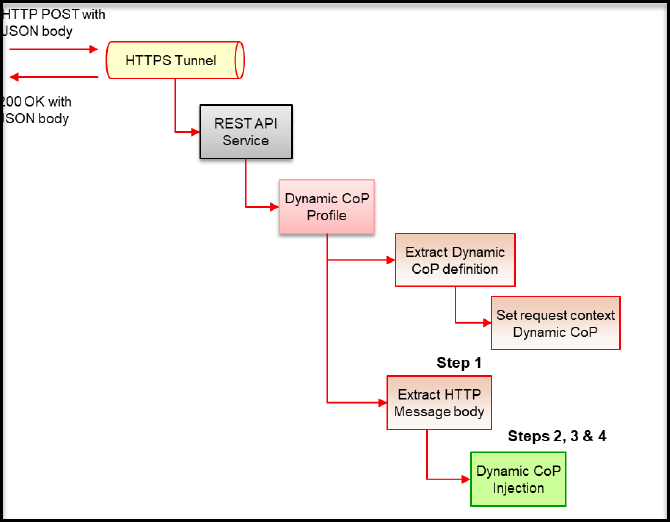

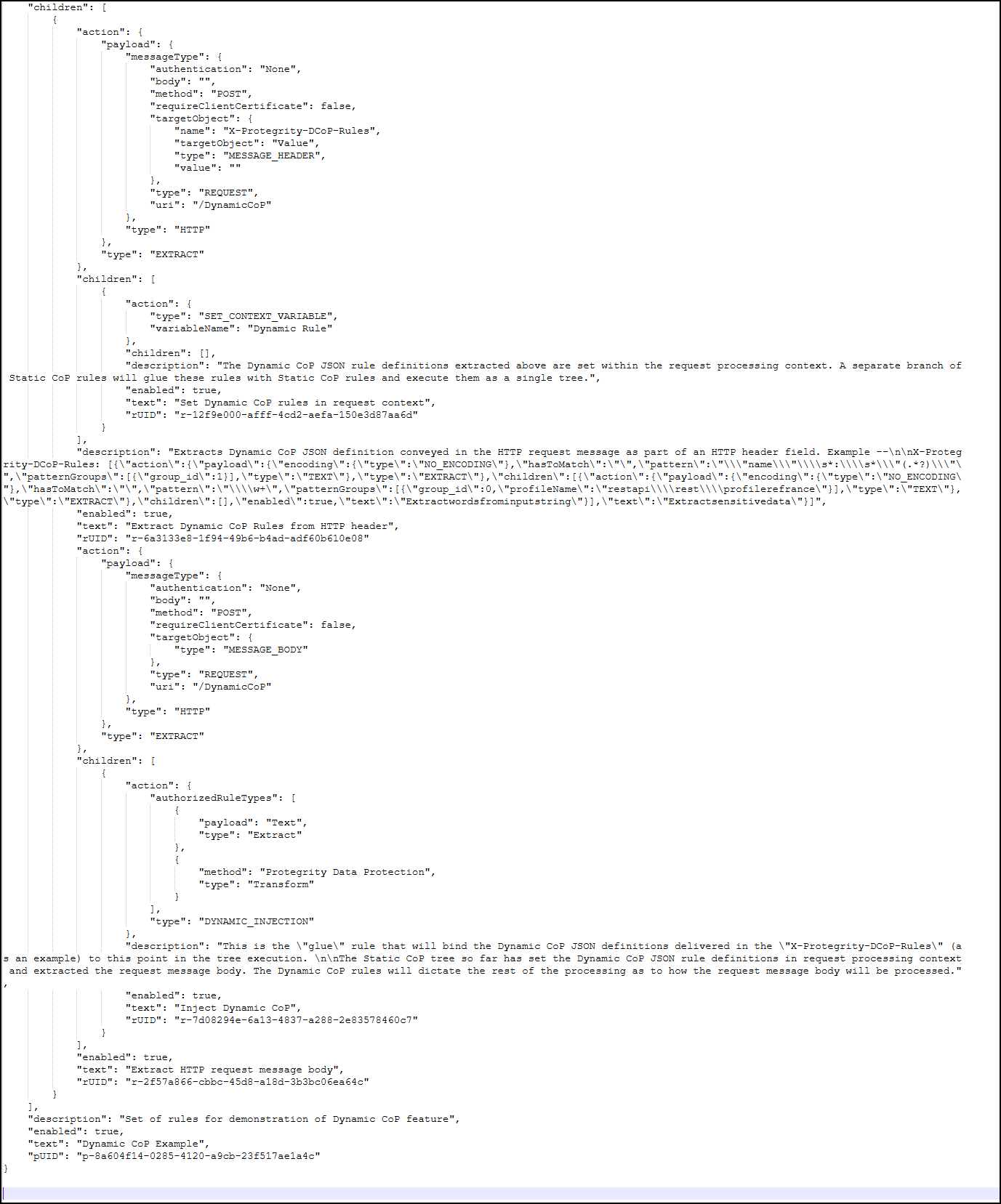

- 5.3.2: Dynamic Configuration over Programming (CoP)

- 5.4: Deployment Scenarios

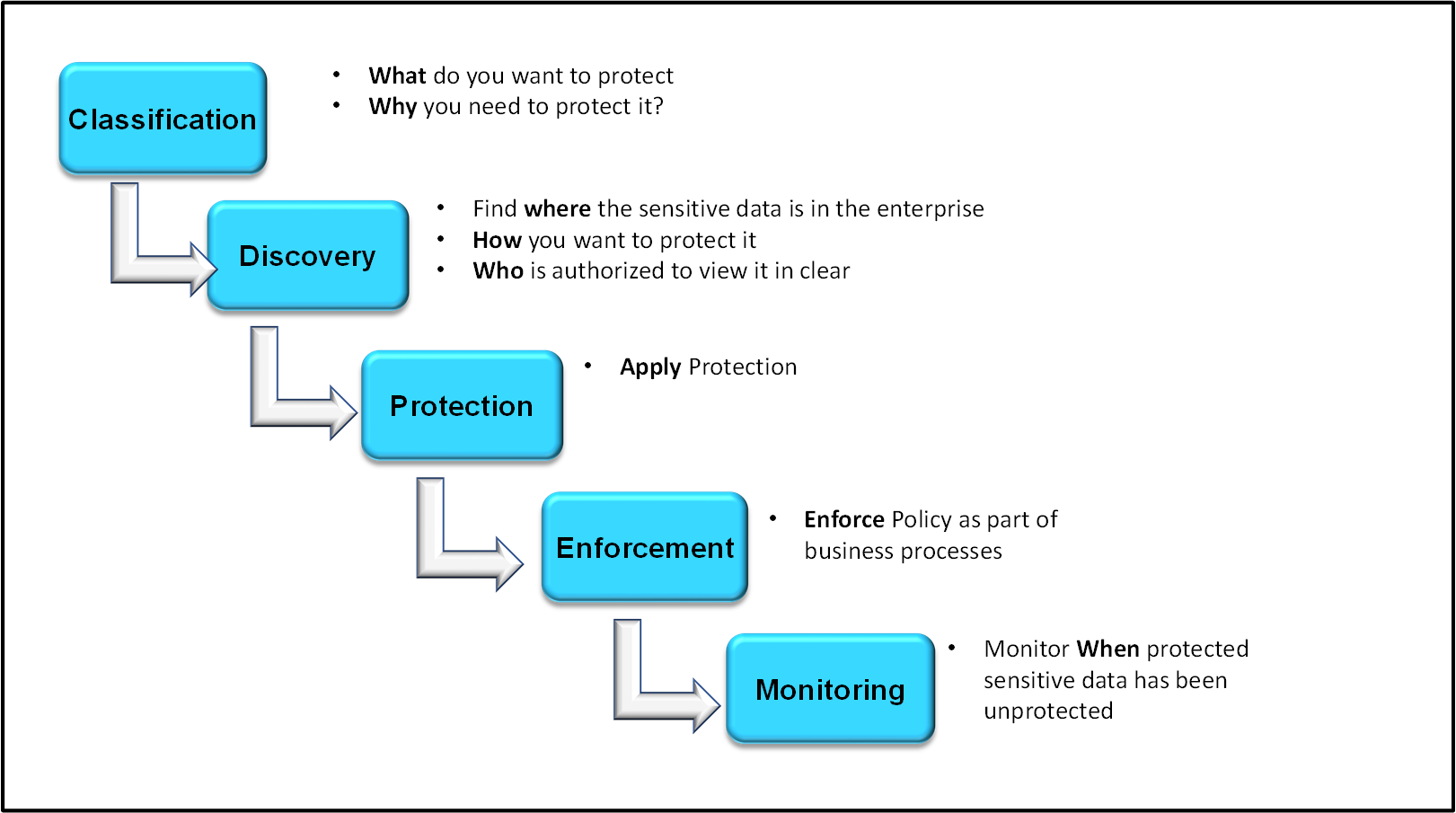

- 5.5: Protegrity Methodology

- 5.6: Planning for Gateway Installation

- 5.6.1: LDAP and SSO Configurations

- 5.6.2: Mapping of Sensitive Data Primitives

- 5.6.3: Network Planning

- 5.6.4: HTTP URL Rewriting

- 5.6.5: Clustering and Load Balancing

- 5.6.6: SSL Certificates

- 5.7: Installing the DSG

- 5.7.1: Installing the DSG On-Premise

- 5.7.2: Installing DSG on Cloud Installation

- 5.7.2.1: Installing DSG on AWS

- 5.7.2.1.1: Creating and Launching a DSG Instance from the AMI

- 5.7.2.1.2: Finalizing the DSG Installation

- 5.7.2.2: Installing DSG on Azure

- 5.7.2.2.1: Creating Image and VM on Azure

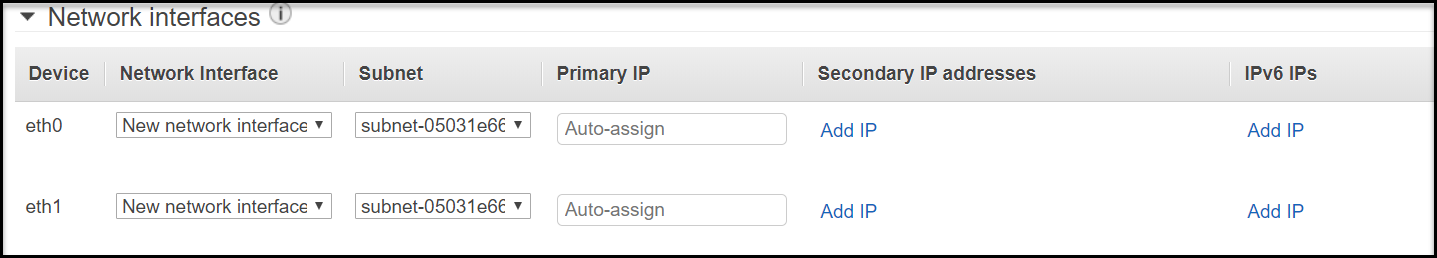

- 5.7.2.2.2: Adding and Configuring the Second Network Interface

- 5.7.2.2.3: Finalizing the DSG Installation

- 5.7.2.2.4: Azure Cloud Utility

- 5.7.2.3: Installing DSG on GCP

- 5.7.2.3.1: Creating a VM Instance from an Image

- 5.7.2.3.2: Finalizing the DSG Installation

- 5.7.3: Updating the Host Details

- 5.7.4: Additional Steps after Changing the Hostname or FQDN

- 5.7.5: Installing DSG on VMware

- 5.8: Enhancements in DSG 3.3.0.0

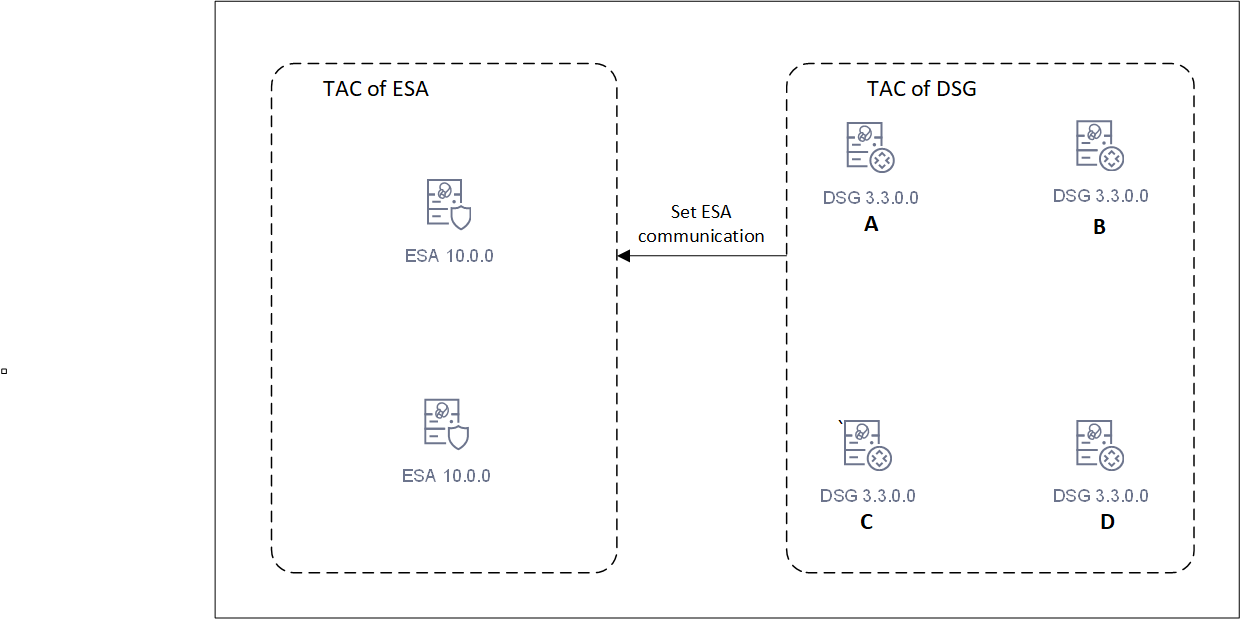

- 5.9: Trusted Appliances Cluster

- 5.10: Upgrading to DSG 3.3.0.0

- 5.10.1: Post Installation/Upgrade Steps

- 5.11: Ascertaining the Host Address in the Server Certificates

- 5.12: Web UI

- 5.12.1: Cluster

- 5.12.1.1: Monitoring

- 5.12.1.2: Log Viewer

- 5.12.2: Ruleset

- 5.12.2.1: Learn Mode

- 5.12.2.1.1: Learn Mode Scheduled Task

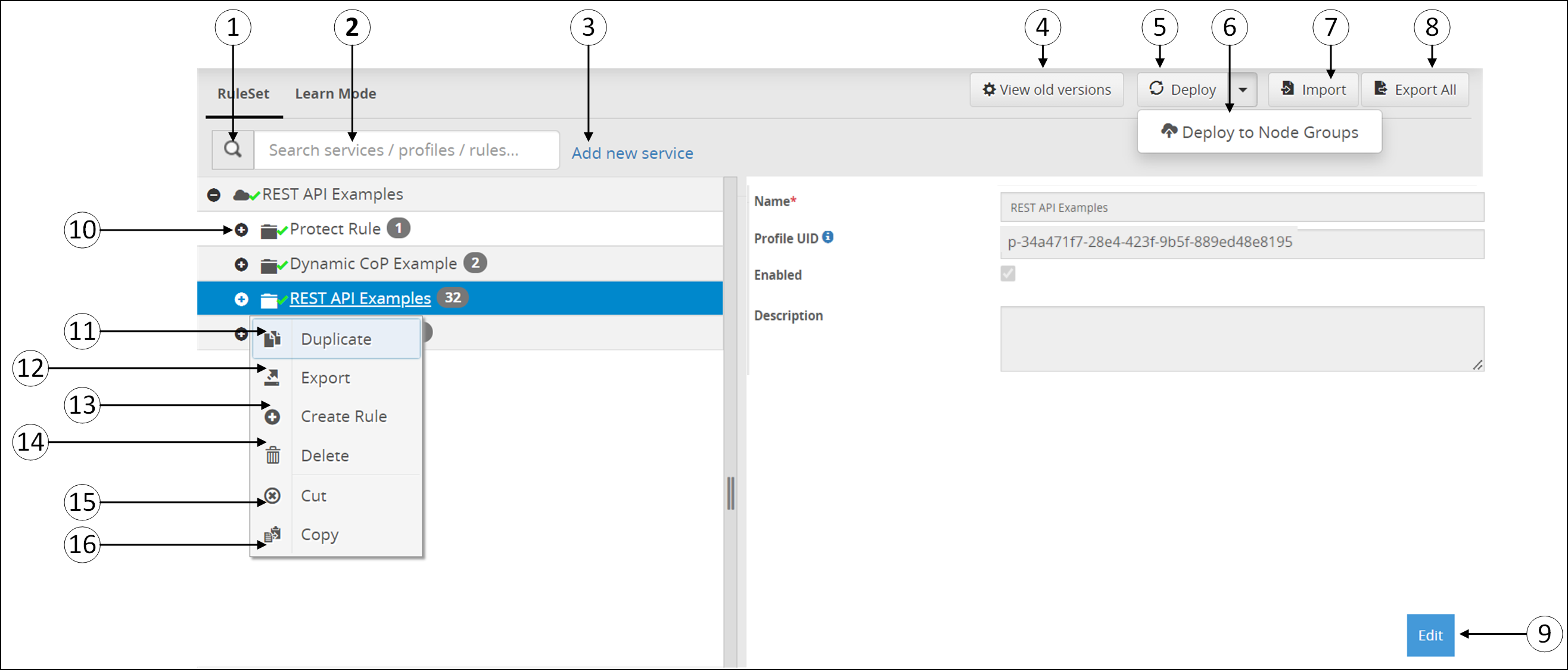

- 5.12.2.2: Ruleset Tab

- 5.12.2.2.1: Ruleset Versioning

- 5.12.3: Transport

- 5.12.3.1: Tunnels

- 5.12.3.1.1: Manage a Tunnel

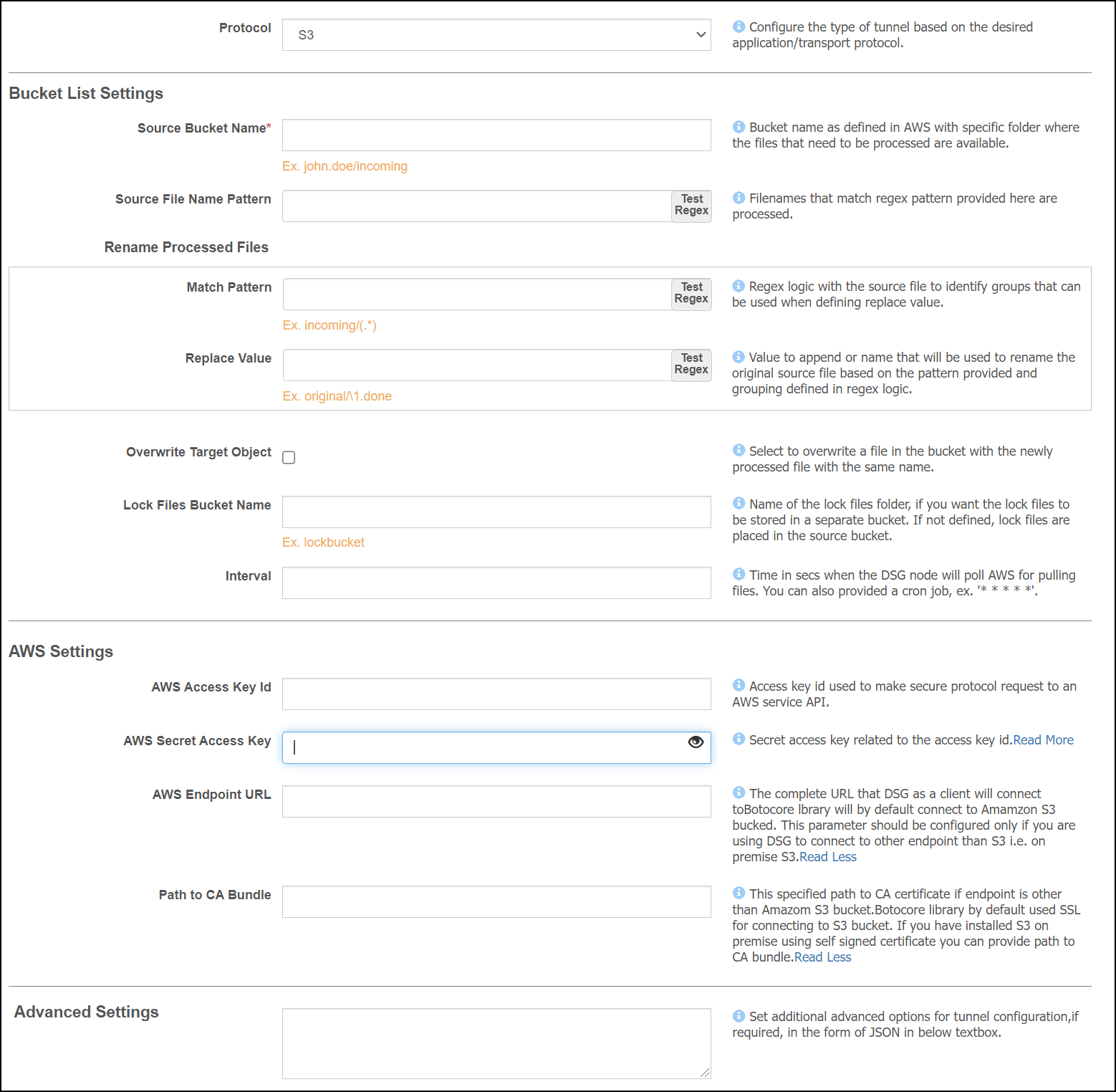

- 5.12.3.1.2: Amazon S3 Tunnel

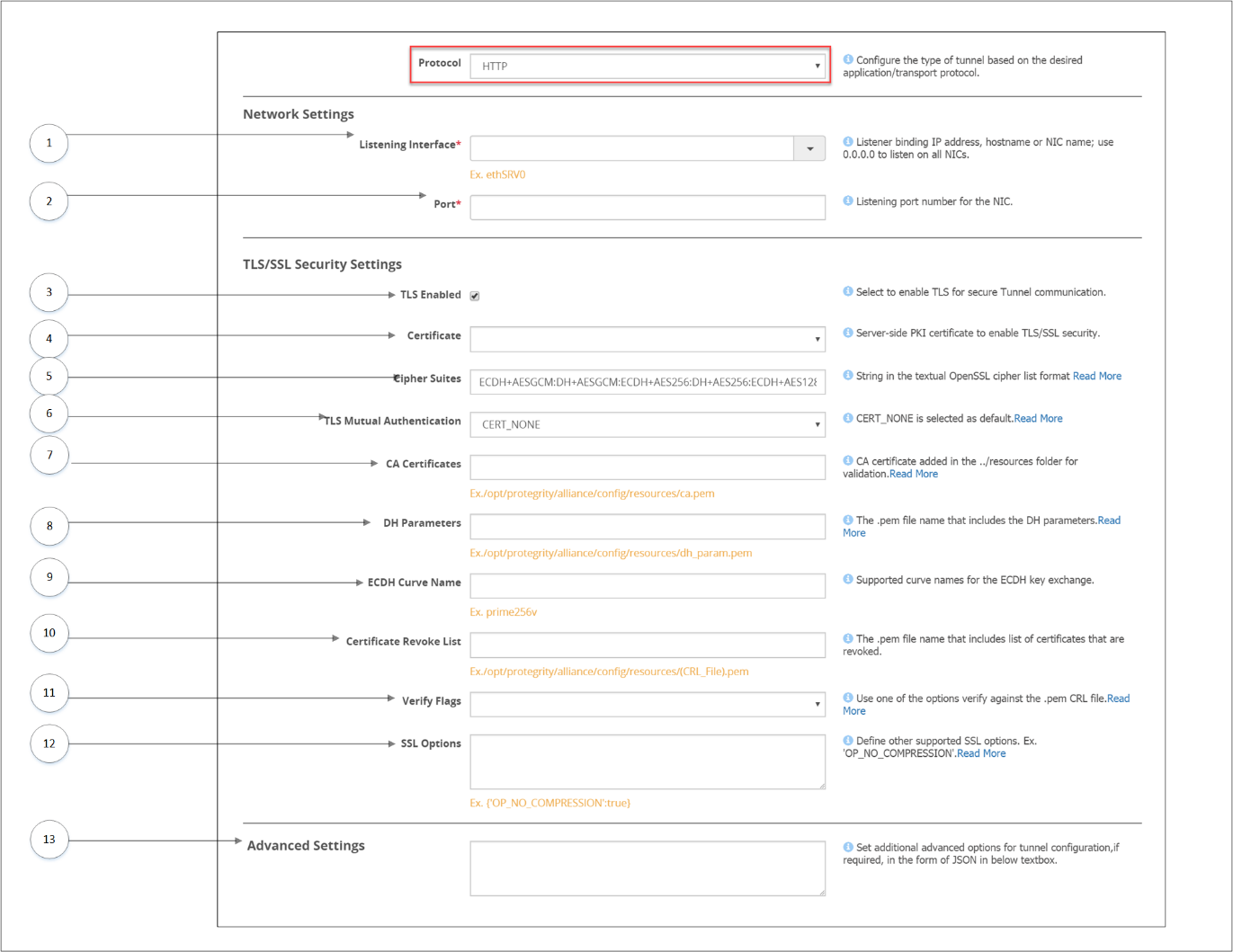

- 5.12.3.1.3: HTTP Tunnel

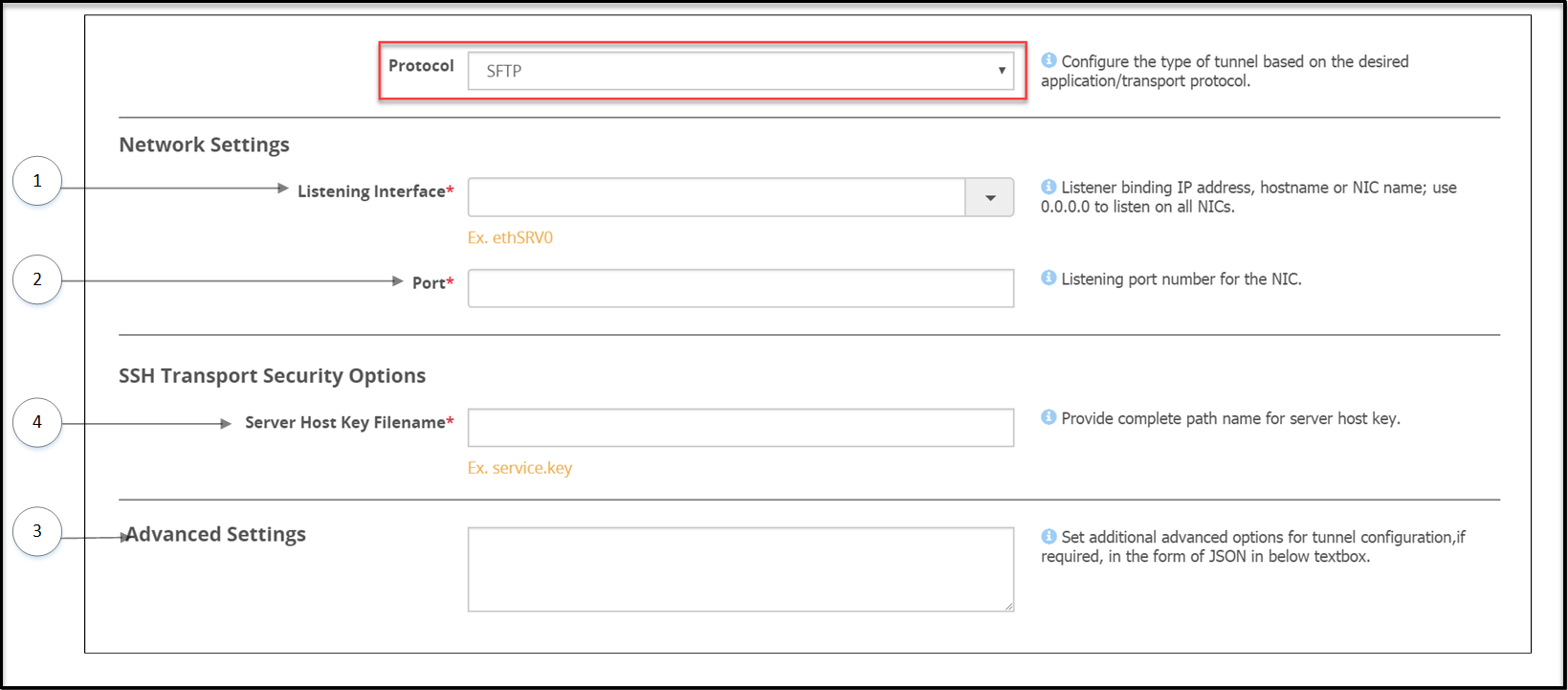

- 5.12.3.1.4: SFTP Tunnel

- 5.12.3.1.5: SMTP Tunnel

- 5.12.3.1.6: NFS/CIFS

- 5.12.3.1.6.1: NFS/CIFS

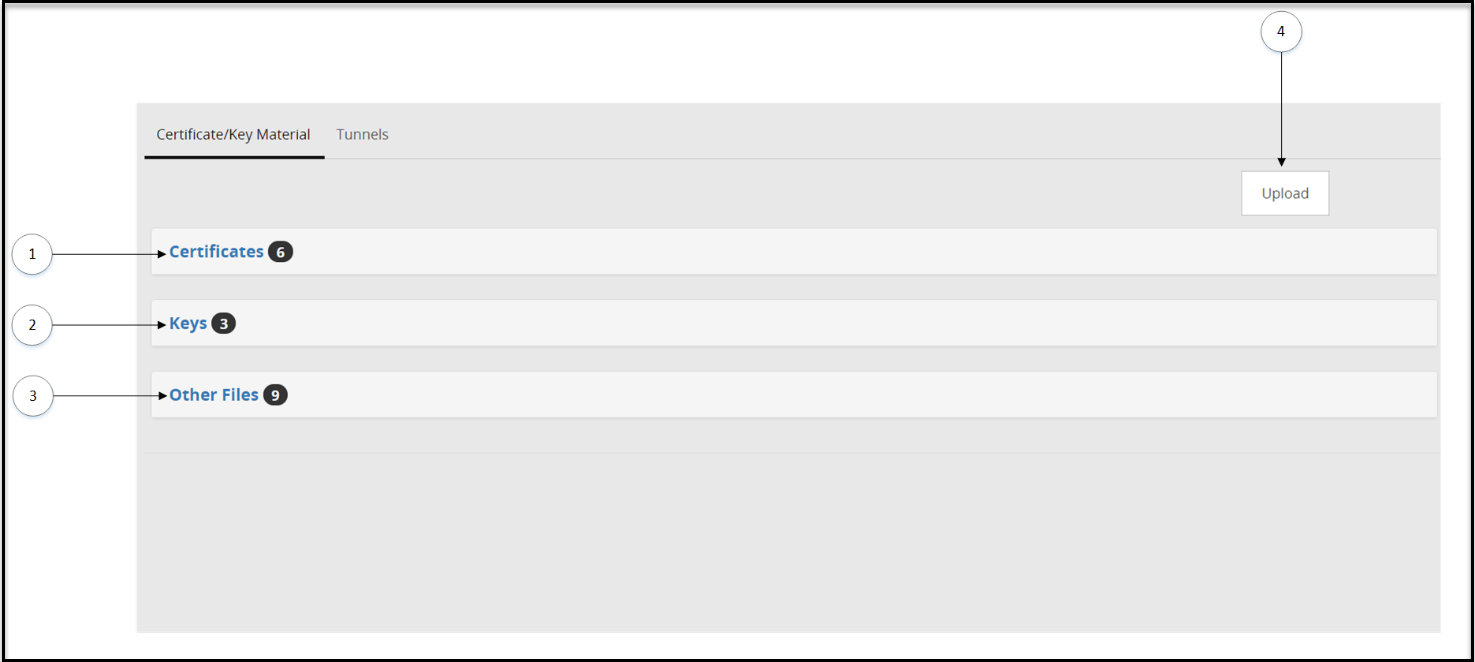

- 5.12.3.2: Certificates/Key Material

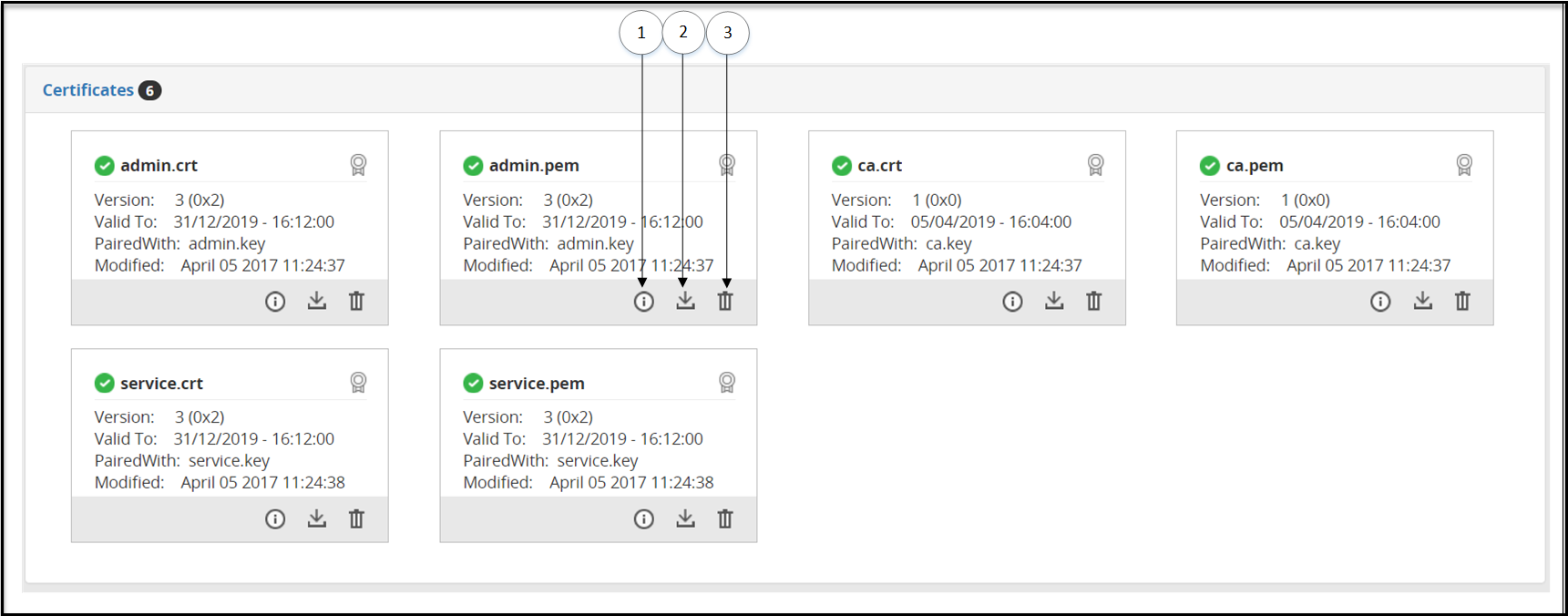

- 5.12.3.2.1: Certificates Tab

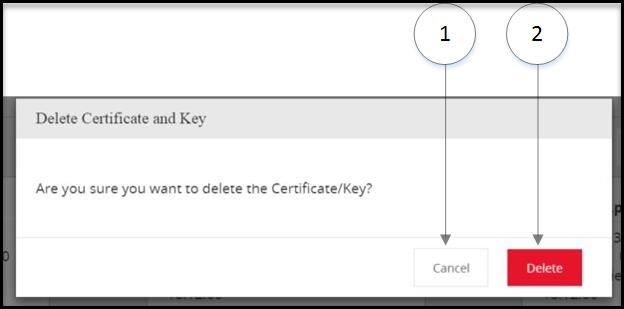

- 5.12.3.2.2: Delete Certificates and Keys

- 5.12.3.2.3: Keys Subtab

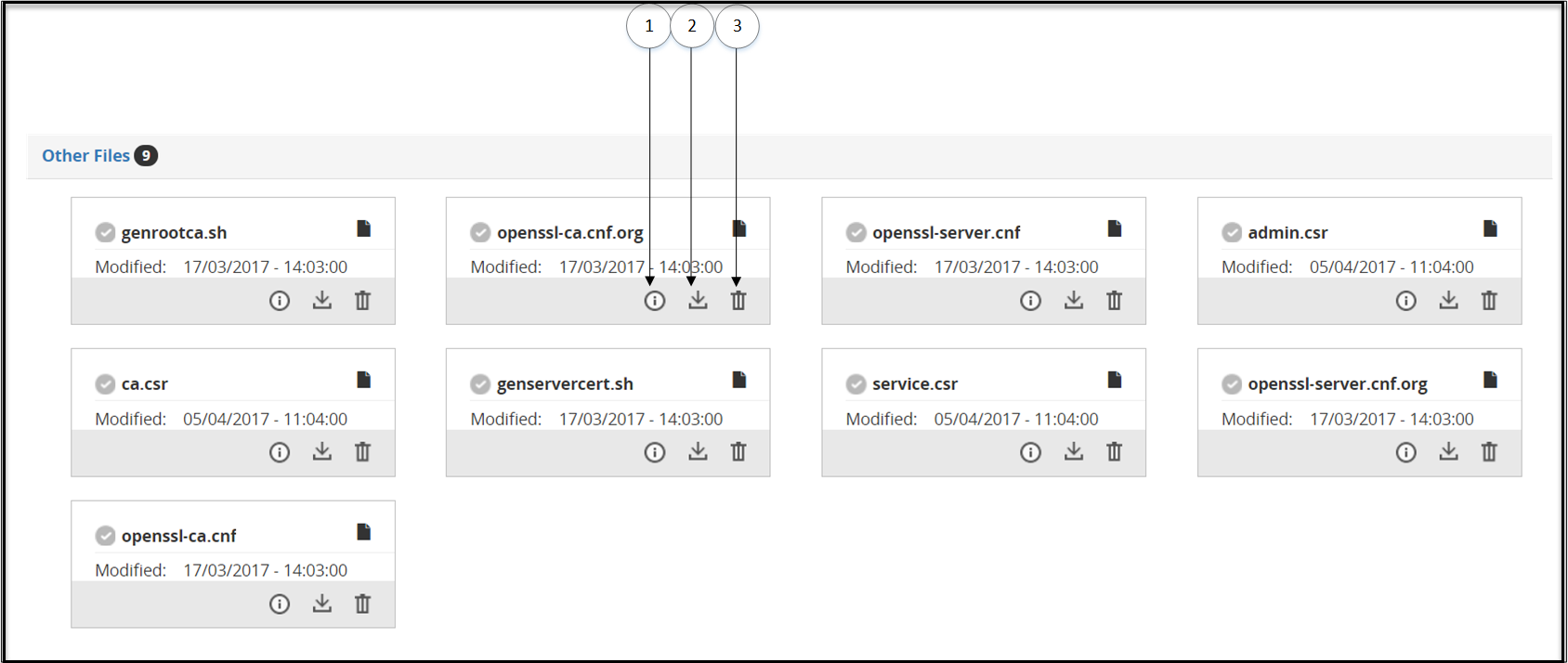

- 5.12.3.2.4: Other Files Subtab

- 5.12.3.2.5: Upload Certificate/Keys

- 5.12.4: Global Settings

- 5.12.4.1: Debug

- 5.12.4.2: Global Protocol Stack

- 5.12.4.3: Web UI

- 5.12.5: Tokenization Portal

- 5.13: Overview of Sub Clustering

- 5.14: Implementation

- 5.15: Transaction Metrics Logging

- 5.16: Error Metrics Logging

- 5.17: Usage Metrics Logging

- 5.18: Ruleset Reference

- 5.18.1: Services

- 5.18.1.1: Amazon S3 gateway

- 5.18.1.2: Mount File System out-of-band Service

- 5.18.1.3: REST API

- 5.18.1.4: Secure Web socket (WSS)

- 5.18.1.5: SFTP Gateway

- 5.18.1.6: SMTP Gateway

- 5.18.2: Profile

- 5.18.3: Actions

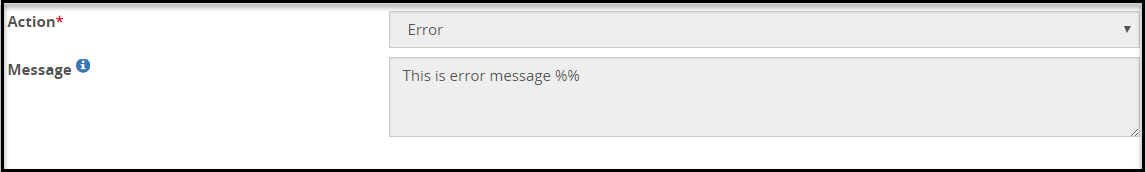

- 5.18.3.1: Error

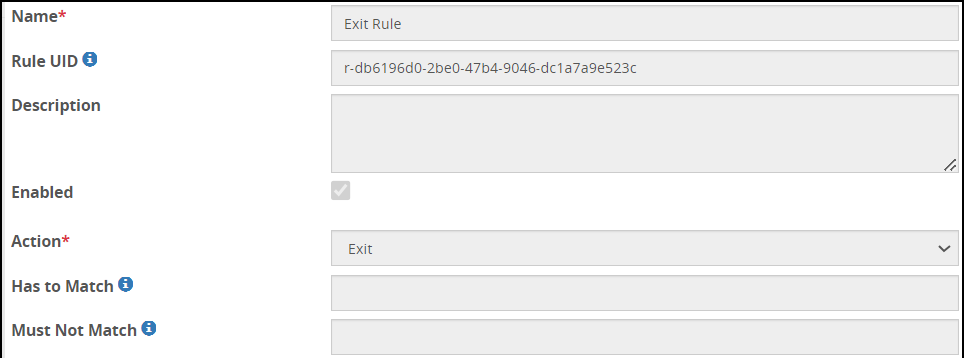

- 5.18.3.2: Exit

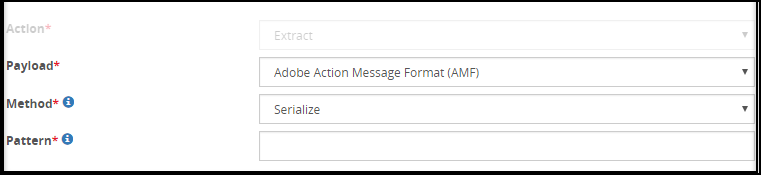

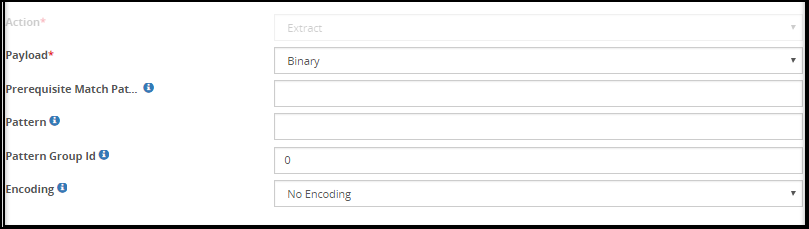

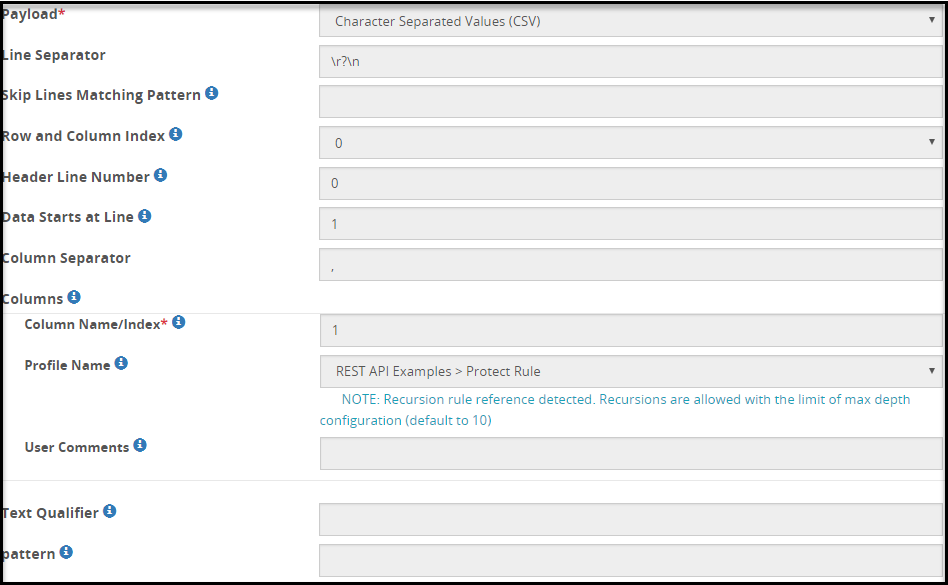

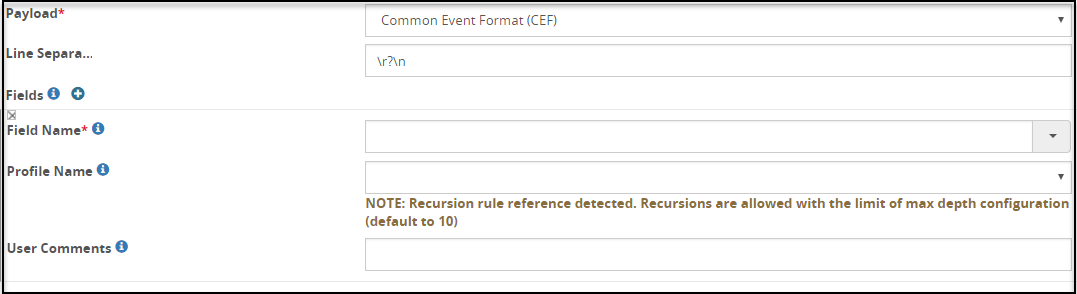

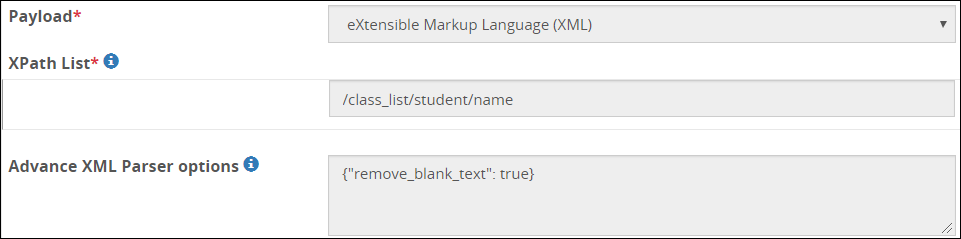

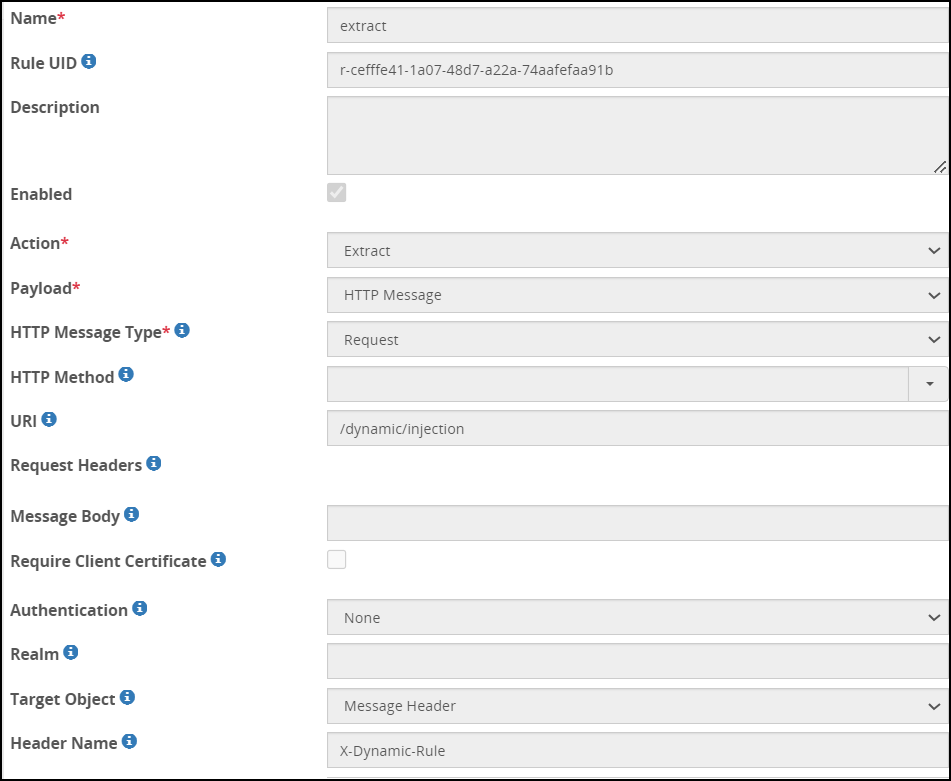

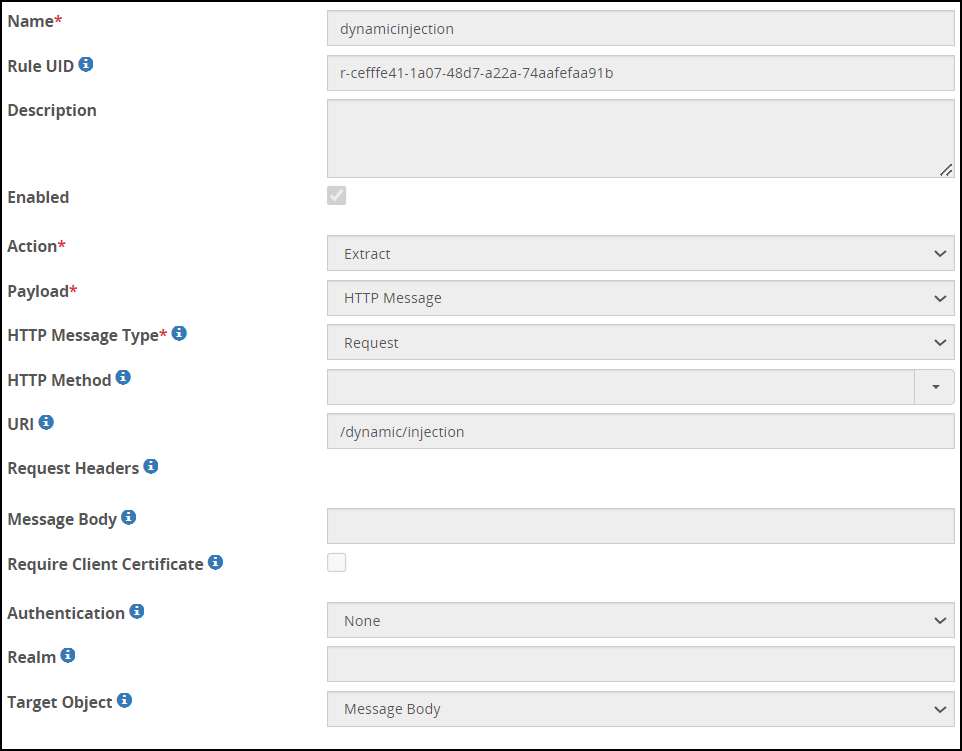

- 5.18.3.3: Extract

- 5.18.3.3.1: Adobe Action Message Format

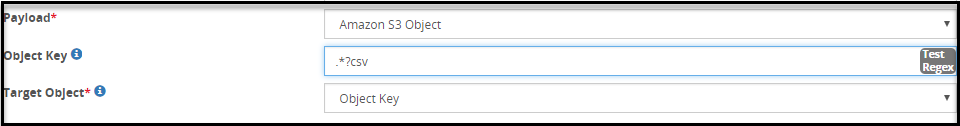

- 5.18.3.3.2: Amazon S3 Object

- 5.18.3.3.3: Binary Payload

- 5.18.3.3.4: CSV Payload

- 5.18.3.3.5: Common Event Format (CEF)

- 5.18.3.3.6: XML Payload

- 5.18.3.3.7: Date Time Format

- 5.18.3.3.8: XML with Tree-of-Trees (ToT)

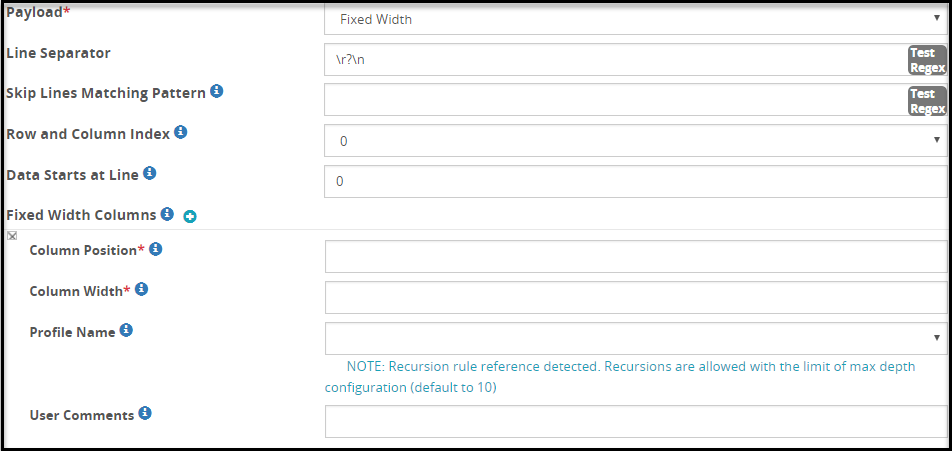

- 5.18.3.3.9: Fixed Width

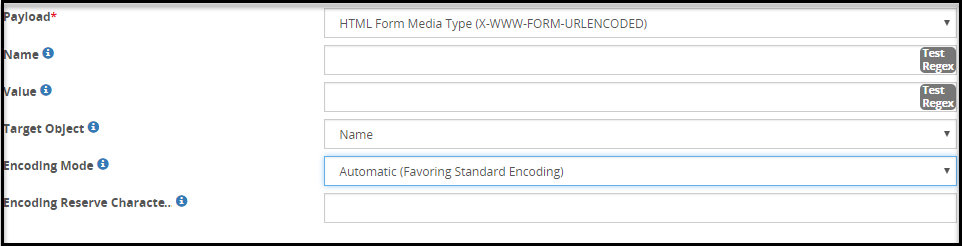

- 5.18.3.3.10: HTML Form Media Payload

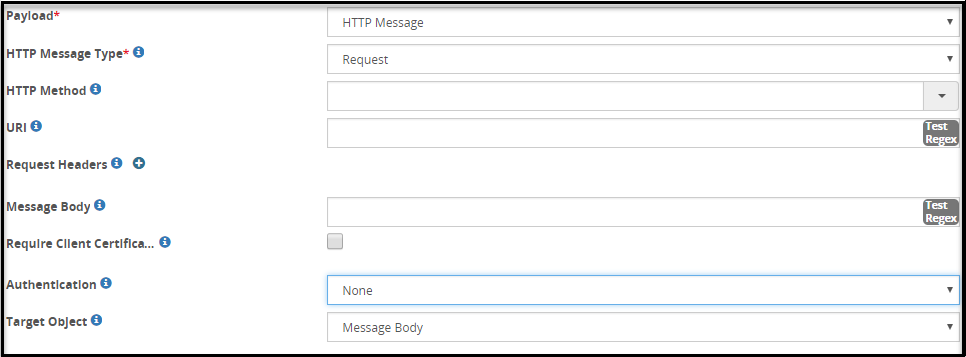

- 5.18.3.3.11: HTTP Message Payload

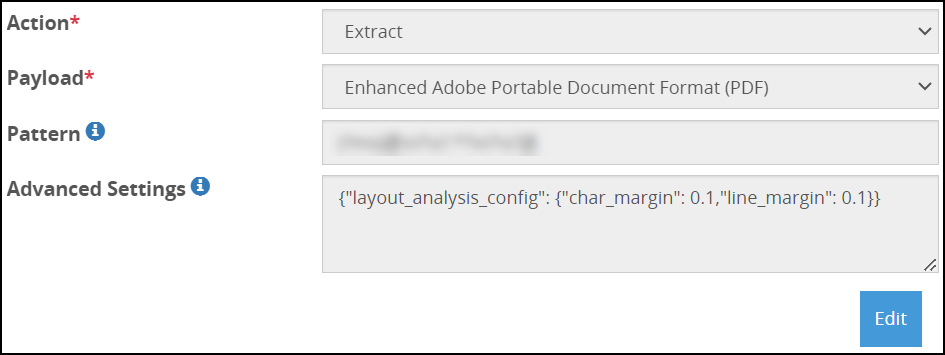

- 5.18.3.3.12: Enhanced Adobe PDF Codec

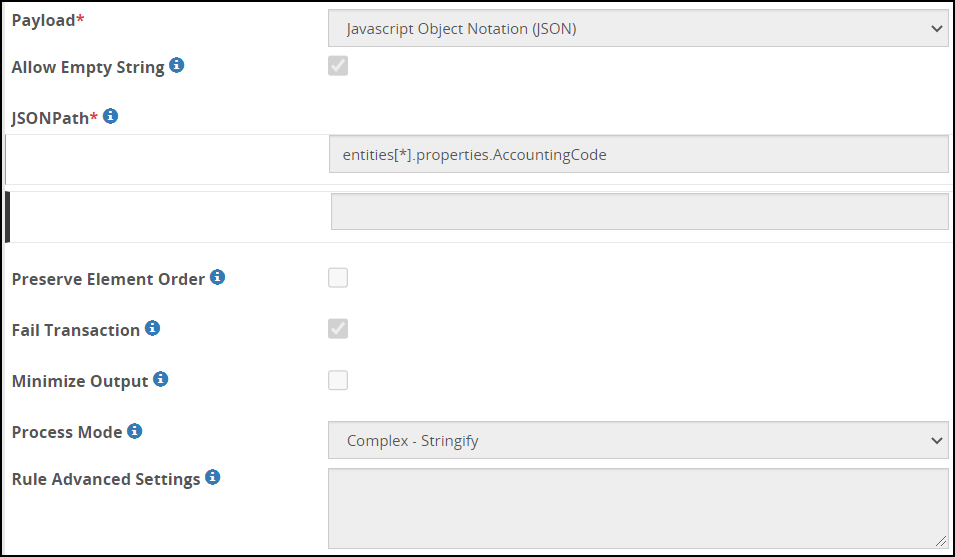

- 5.18.3.3.13: JSON Payload

- 5.18.3.3.14: JSON with Tree-of-Trees (ToT)

- 5.18.3.3.15: Microsoft Office Documents

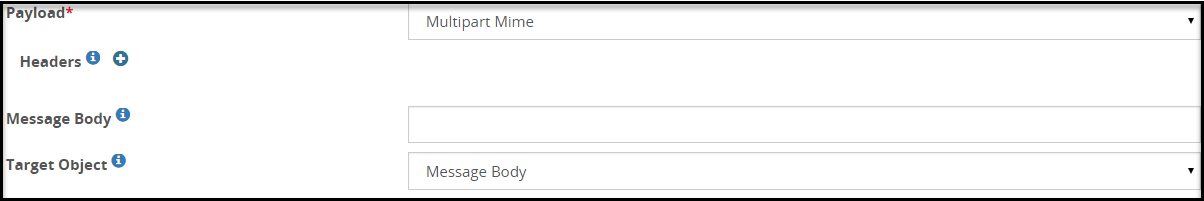

- 5.18.3.3.16: Multipart Mime Payload

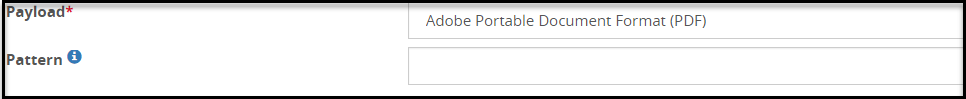

- 5.18.3.3.17: PDF Payload

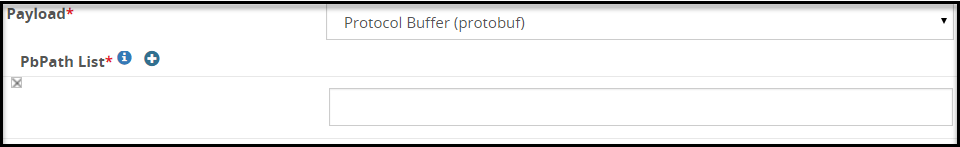

- 5.18.3.3.18: Protocol Buffer Payload

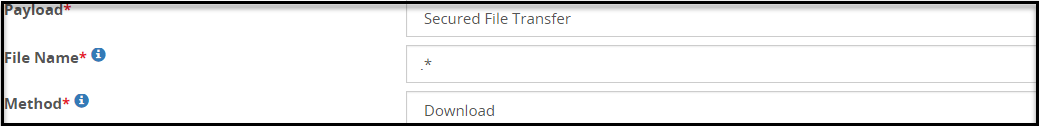

- 5.18.3.3.19: Secure File Transfer Payload

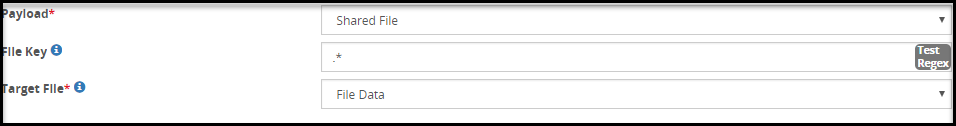

- 5.18.3.3.20: Shared File

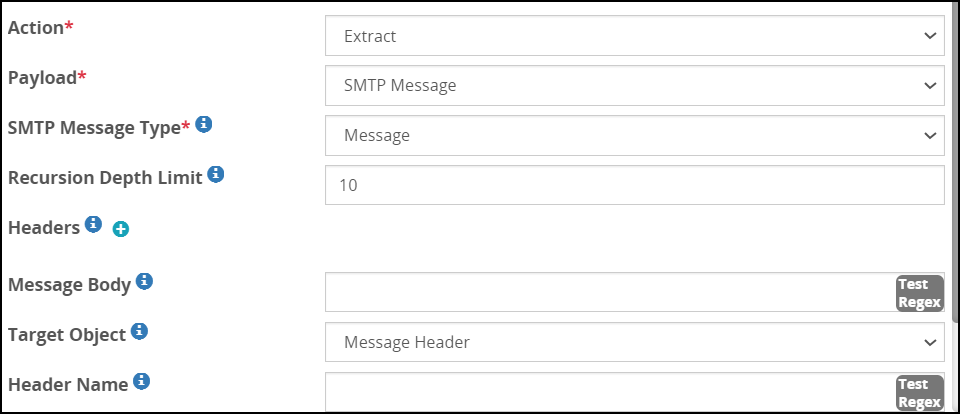

- 5.18.3.3.21: SMTP Message Payload

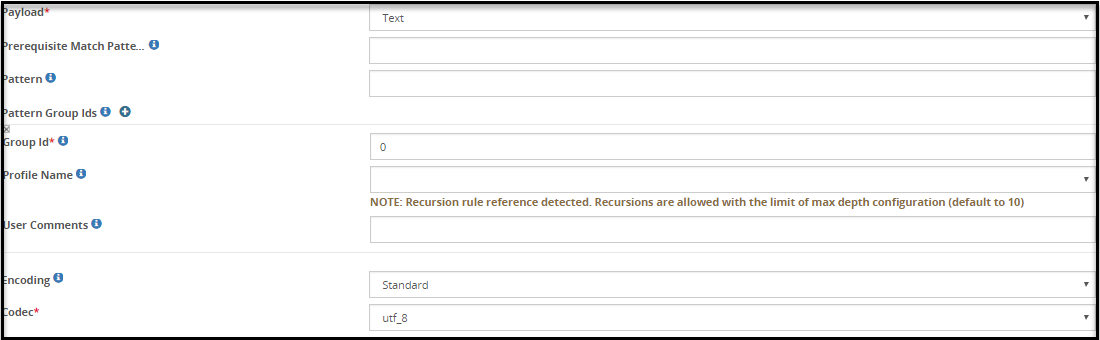

- 5.18.3.3.22: Text Payload

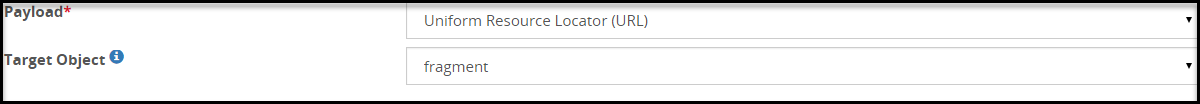

- 5.18.3.3.23: URL Payload

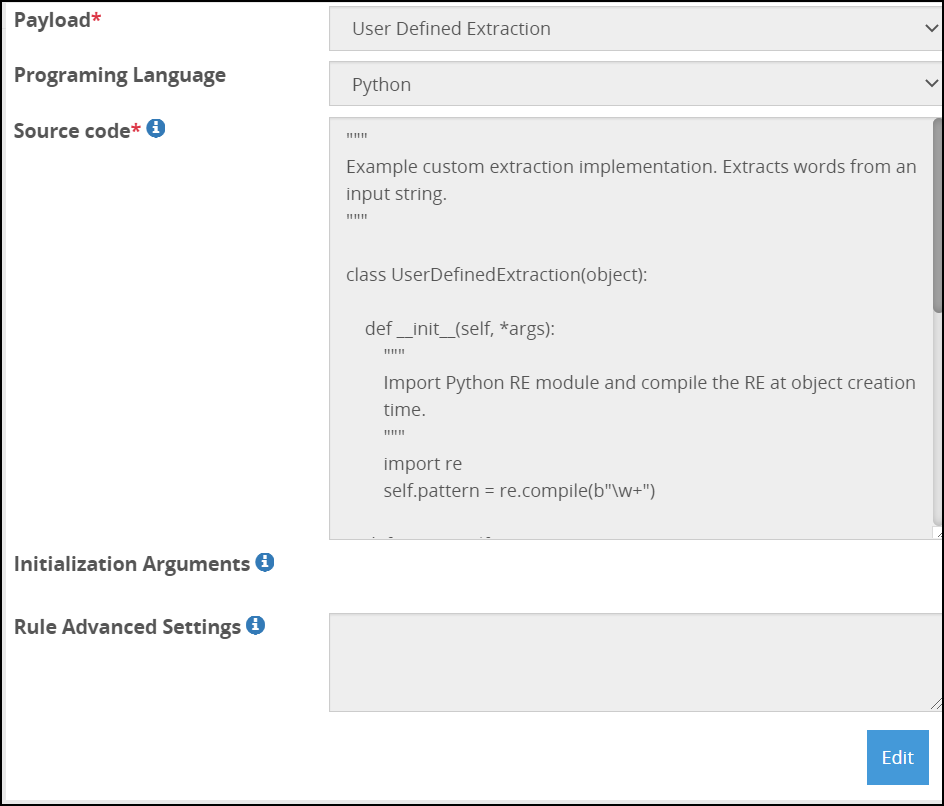

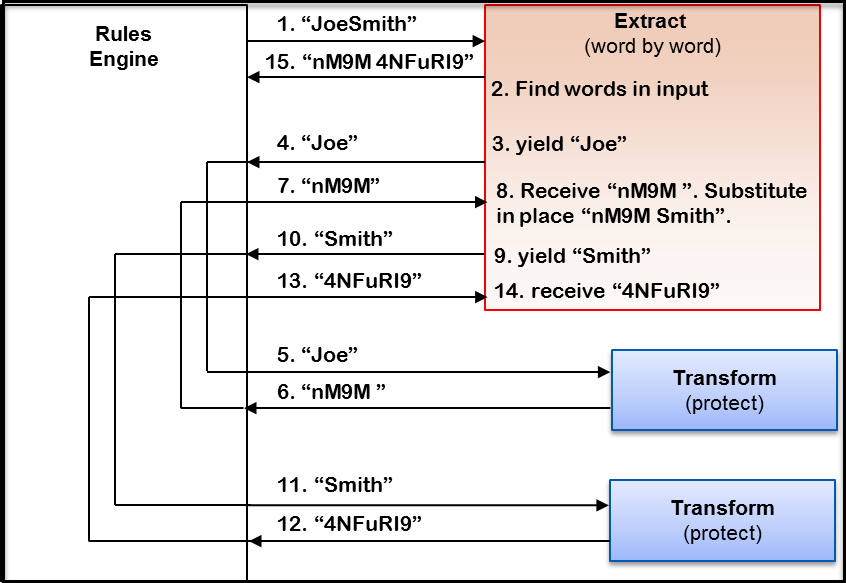

- 5.18.3.3.24: User Defined Extraction Payload

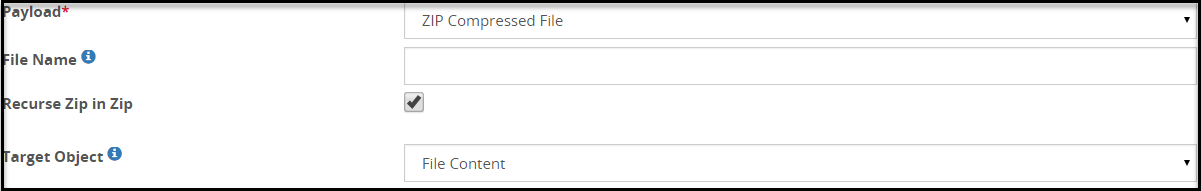

- 5.18.3.3.25: ZIP Compressed File Payload

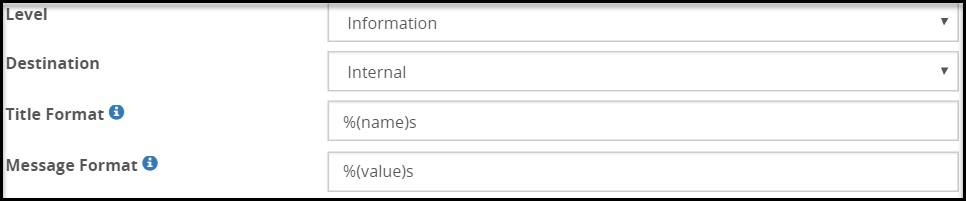

- 5.18.3.4: Log

- 5.18.3.5: Profile Reference

- 5.18.3.6: Set User Identity

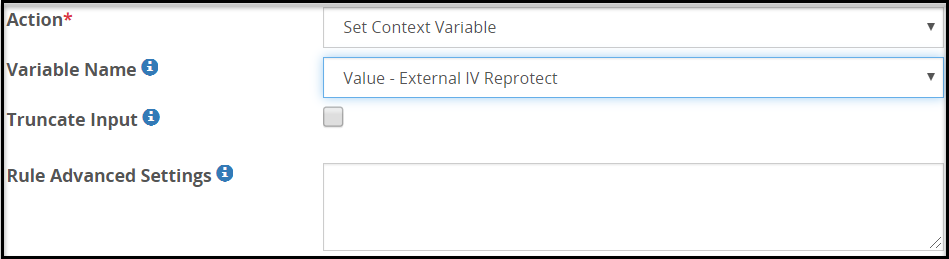

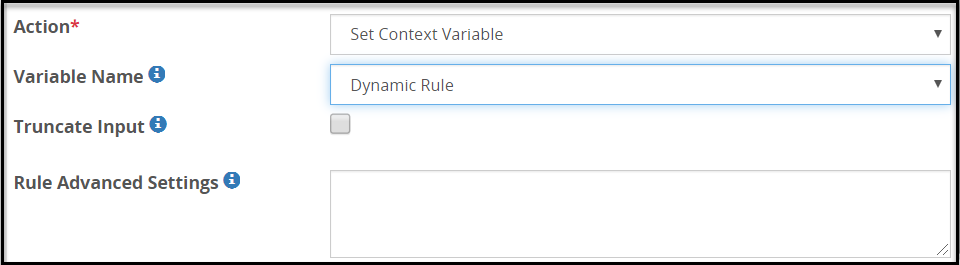

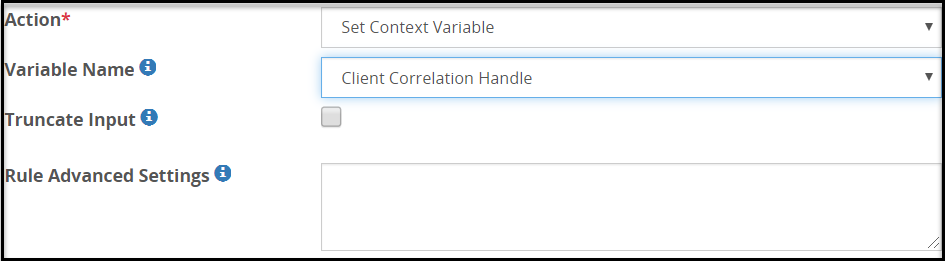

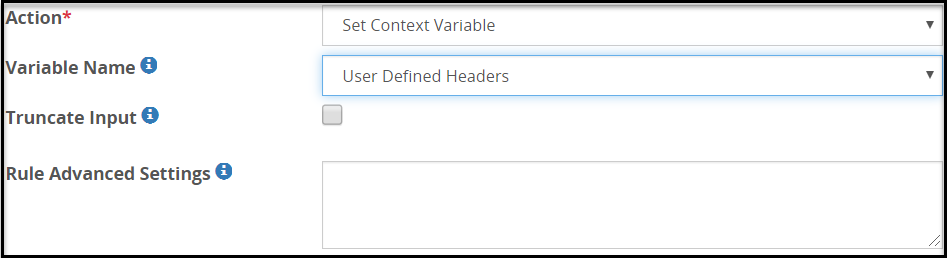

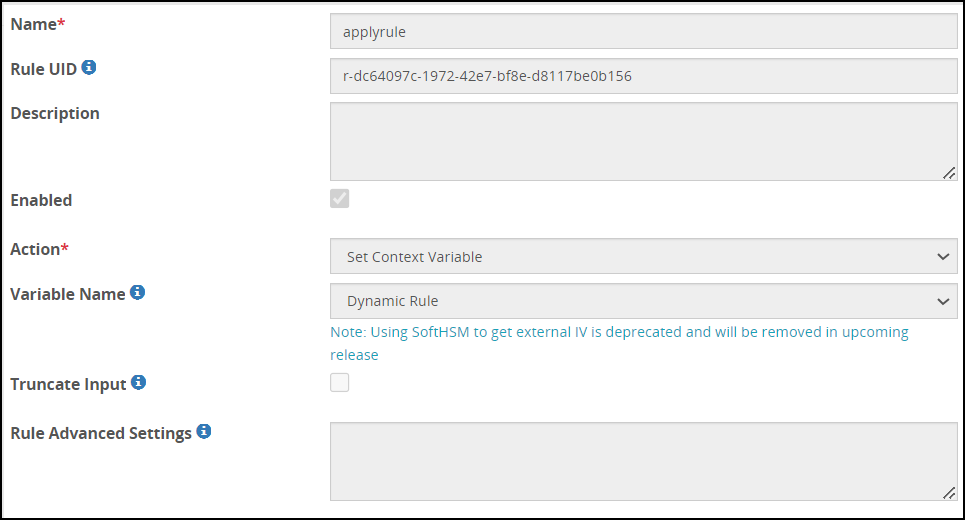

- 5.18.3.7: Set Context Variable

- 5.18.3.8: Transform

- 5.18.3.8.1: GNU Privacy Guard (GPG)

- 5.18.3.8.2: Protegrity Data Protection Method

- 5.18.3.8.3: Regular Expression Replace

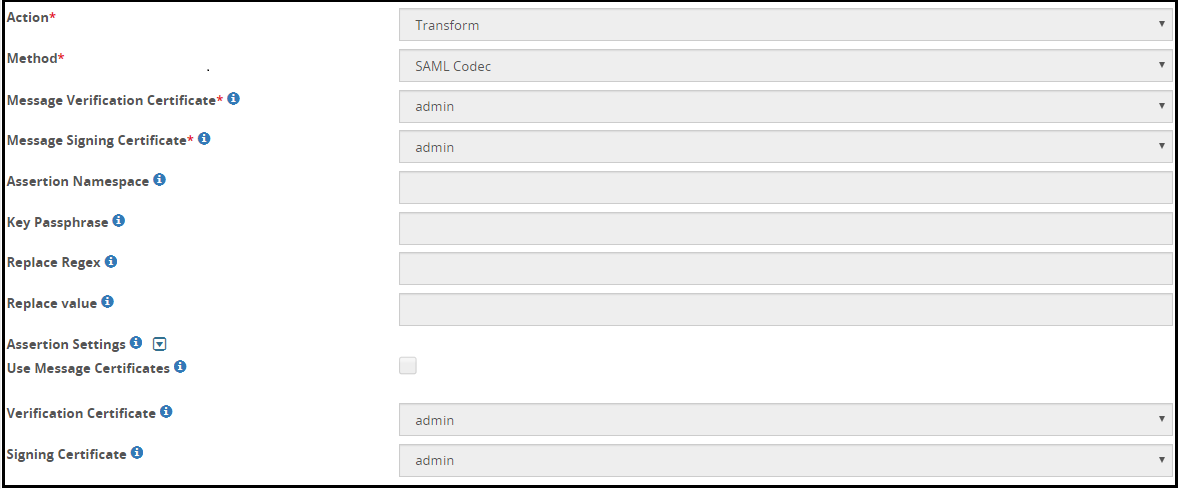

- 5.18.3.8.4: Security Assertion Markup Language (SAML) codec

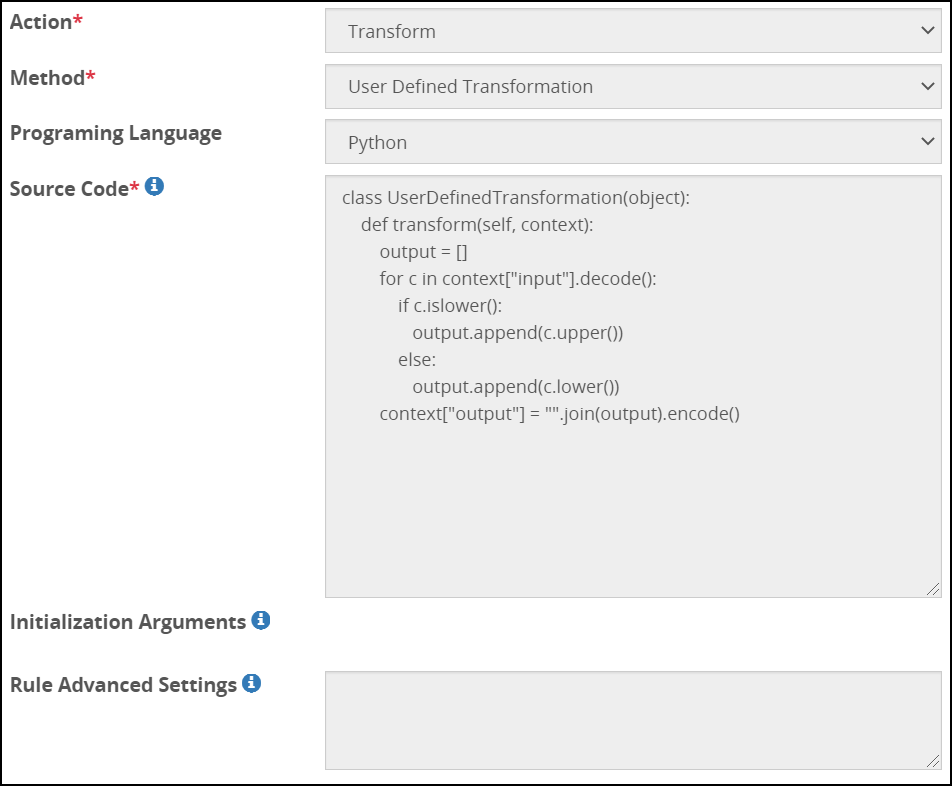

- 5.18.3.8.5: User Defined Transformation

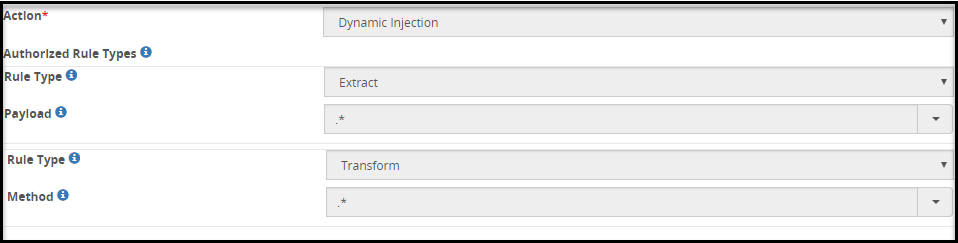

- 5.18.3.9: Dynamic Injection

- 5.19: DSG REST API

- 5.20: Enabling Selective Tunnel Loading on DSG Nodes

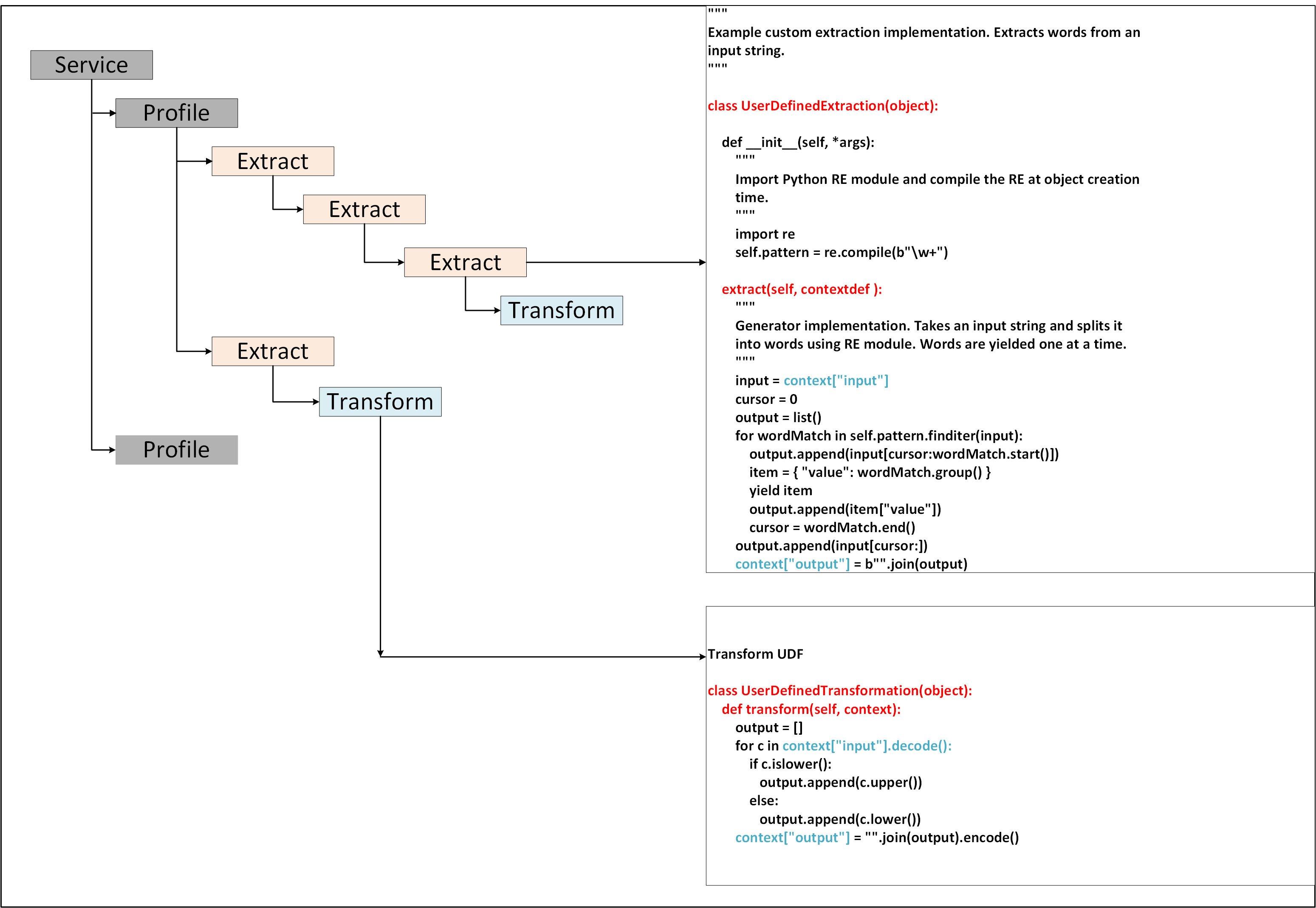

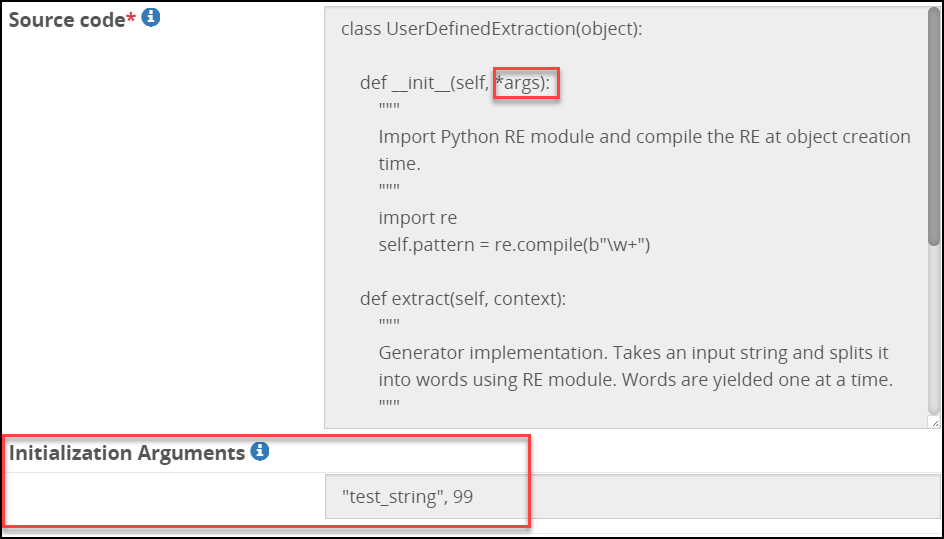

- 5.21: User Defined Functions (UDFs)

- 5.22: API for Exporting the CoP

- 5.23: Best Practices

- 5.24: Known Limitations

- 5.25: Migrate UDFs to Python 3

- 5.26: PEP Server Configuration File

- 5.27: Additional Configurations in gateway.json File

- 5.28: Auditing and Logging

- 5.29: Verifying UDF Rules for Blocked Modules and Methods

- 5.30: Managing PEP Server Configuration File

- 5.31: OpenSSL Curve Names, Algorithms, and Options

- 5.32: Configuring the DSG Cluster

- 5.33: Encoding List

- 5.34: Configuring Default Gateway

- 5.35: Forward Logs to Insight

- 5.36: Extending ESA with the DSG Web UI

- 5.37: Backing up and Restoring up the Appliance OS from the Web UI

- 5.38: Setting up ESA Communication

- 5.39: Deploying Configurations to the Cluster

- 5.40: Restarting a Node

- 5.41: Deploy Configurations to Node Groups

- 5.42: Codebook Reshuffling

- 5.43: Troubleshooting in DSG

- 6: Policy Management

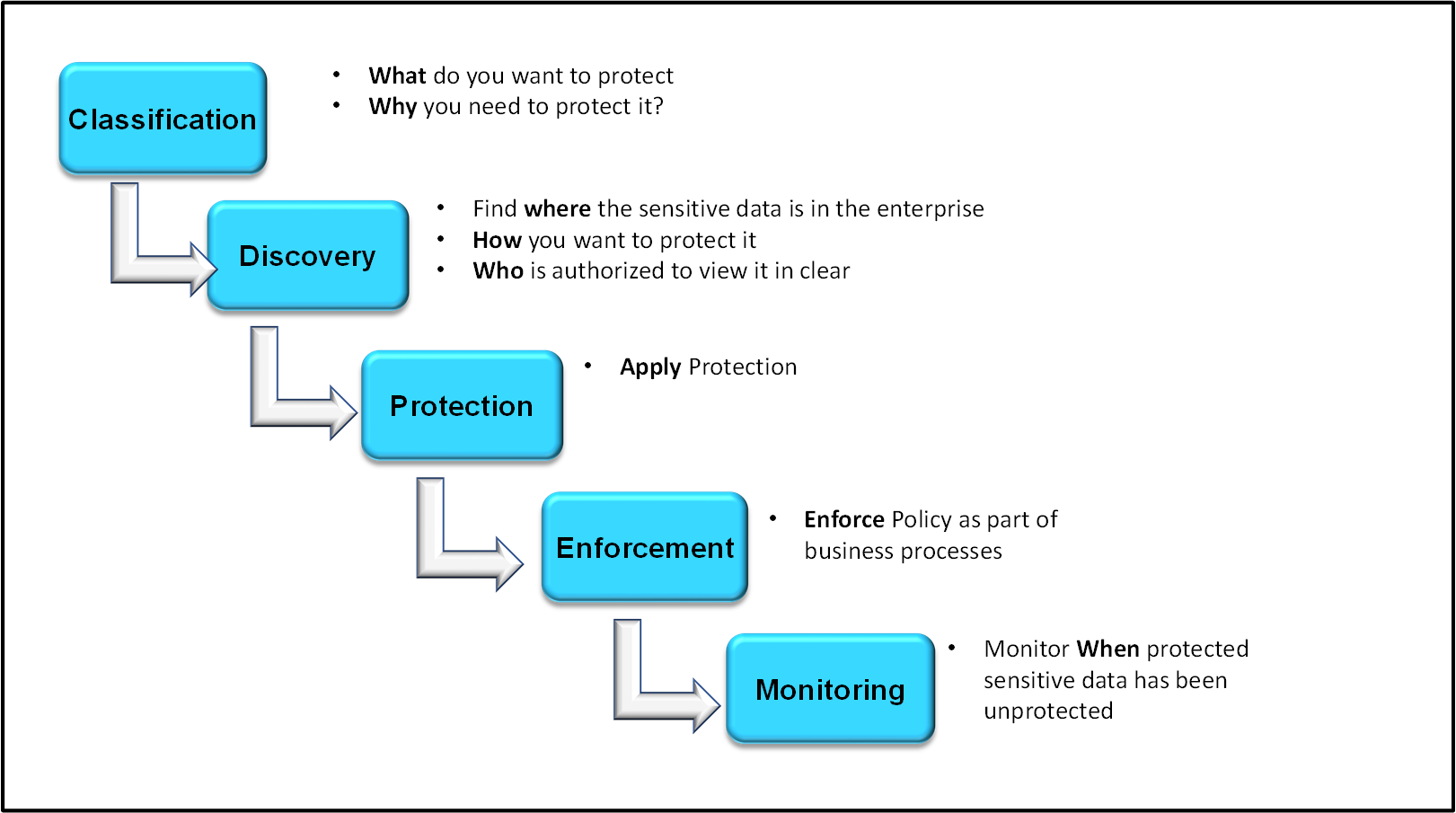

- 6.1: Protegrity Data Security Methodology

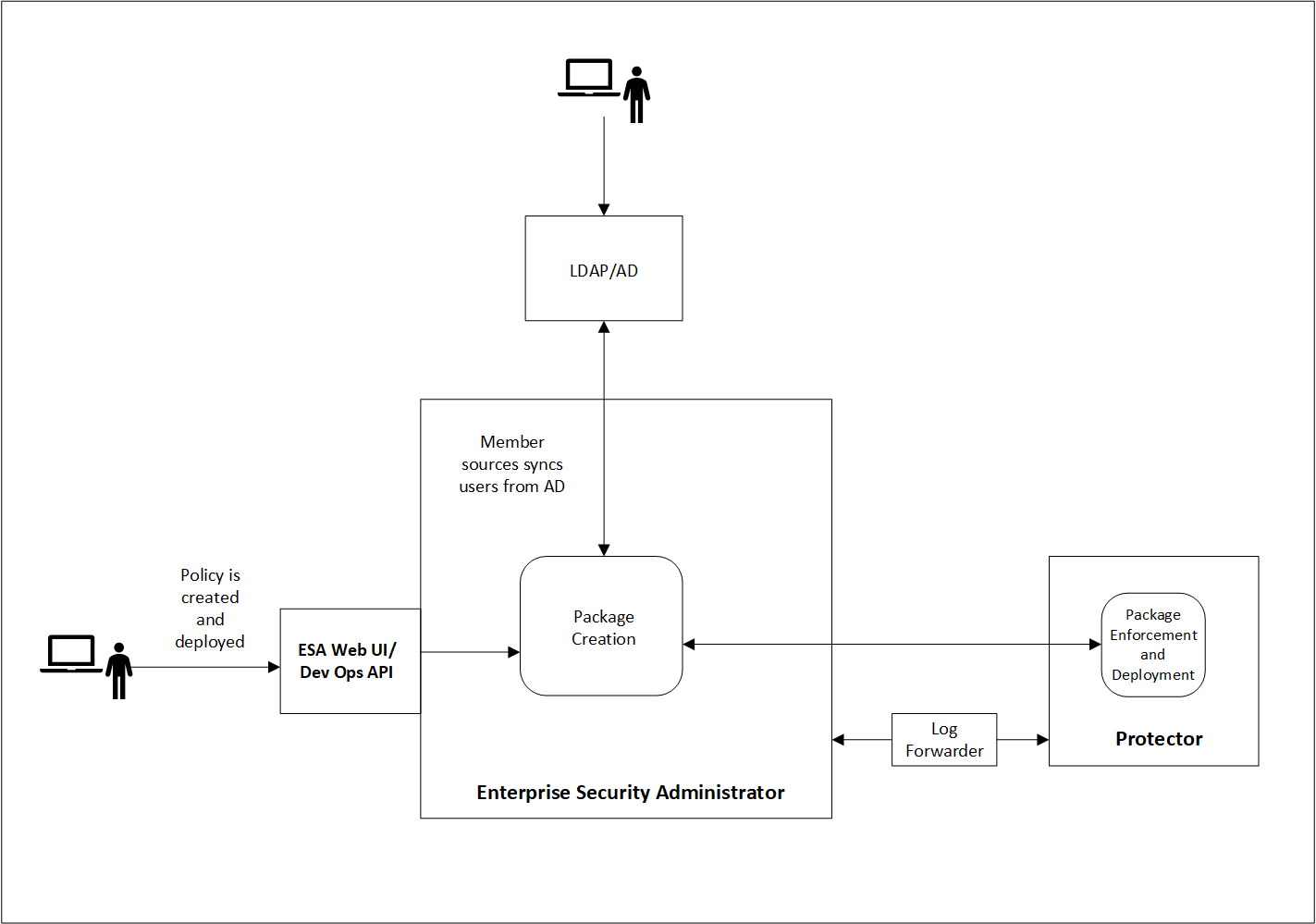

- 6.2: Package Deployment in Protectors

- 6.3: Initializing the Policy Management

- 6.4: Components of a Policy

- 6.4.1: Working With Data Elements

- 6.4.1.1: Example - Creating Token Data Elements

- 6.4.1.2: Example - Creating a FPE Data Element

- 6.4.1.3: Example - Creating Data Elements for Unstructured Data

- 6.4.2: Working With Alphabets

- 6.4.2.1: Creating an Alphabet

- 6.4.3: Working With Masks

- 6.4.3.1: Creating a Mask

- 6.4.3.2: Masking Support

- 6.4.4: Working With Trusted Applications

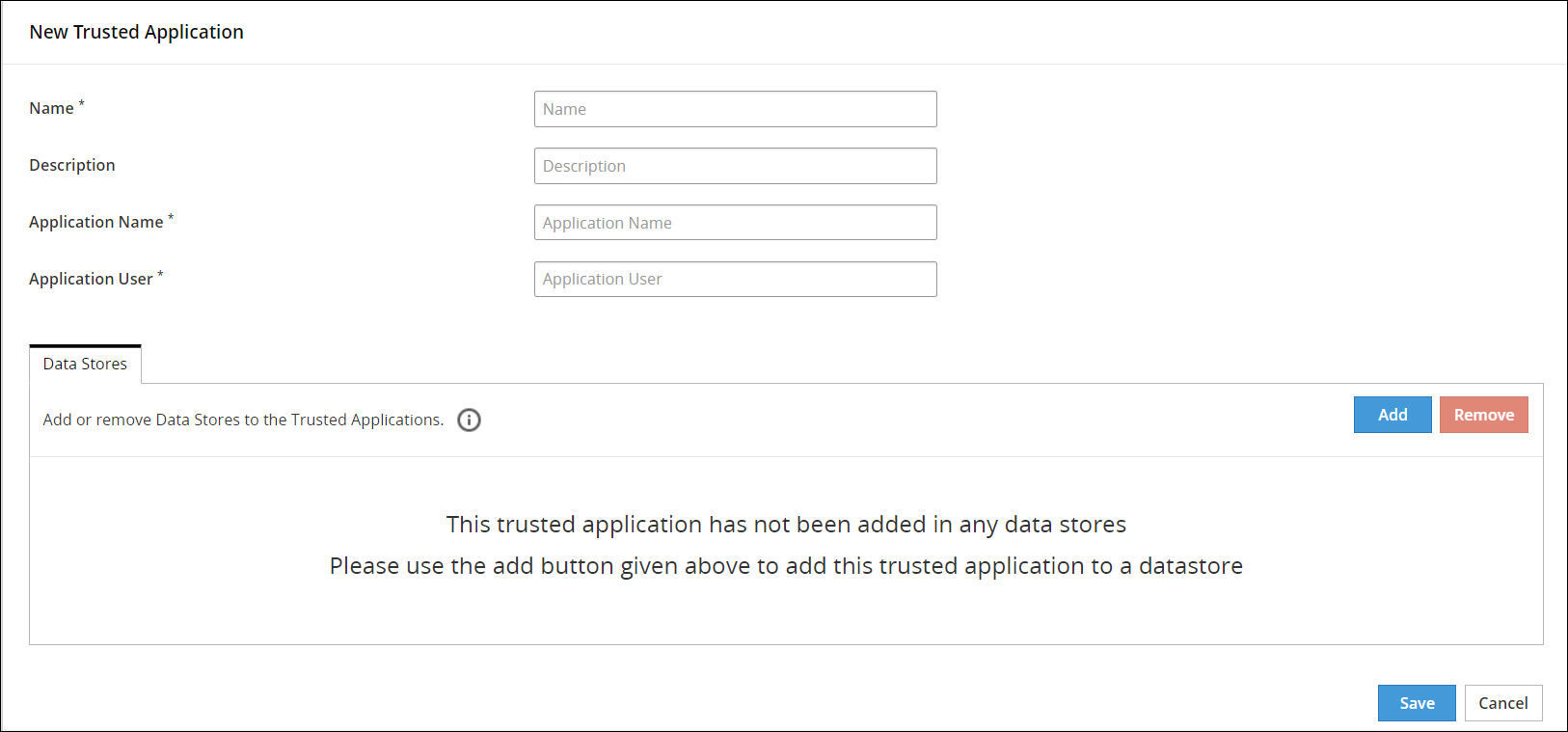

- 6.4.4.1: Creating a Trusted Application

- 6.4.4.2: Linking Data Store to a Trusted Application

- 6.4.4.3: Deploying a Trusted Application

- 6.4.5: Creating a Data Store

- 6.4.5.1: Adding Allowed Servers for the Data Store

- 6.4.5.2: Adding Policies to the Data Store

- 6.4.5.3: Adding Trusted Applications to the Data Store

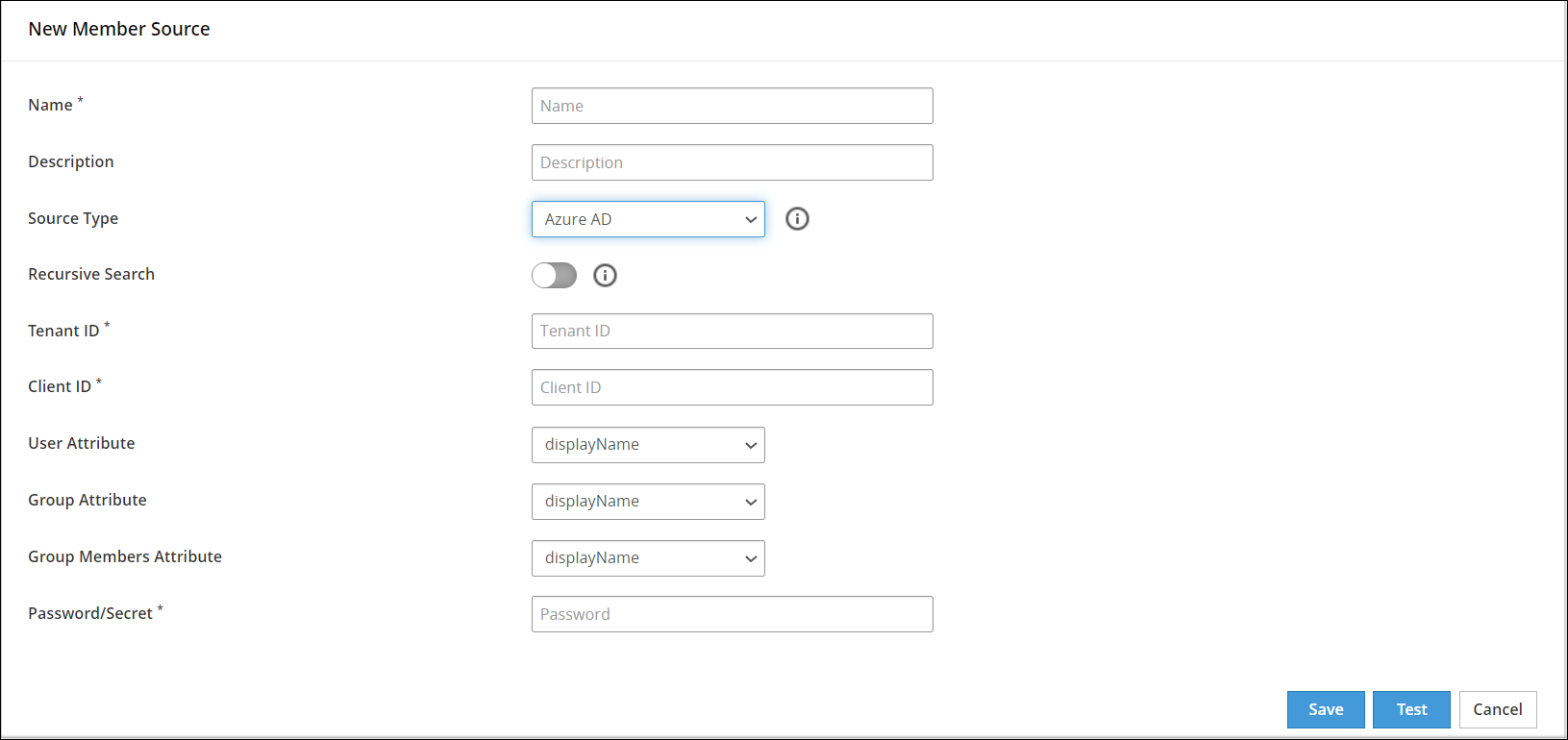

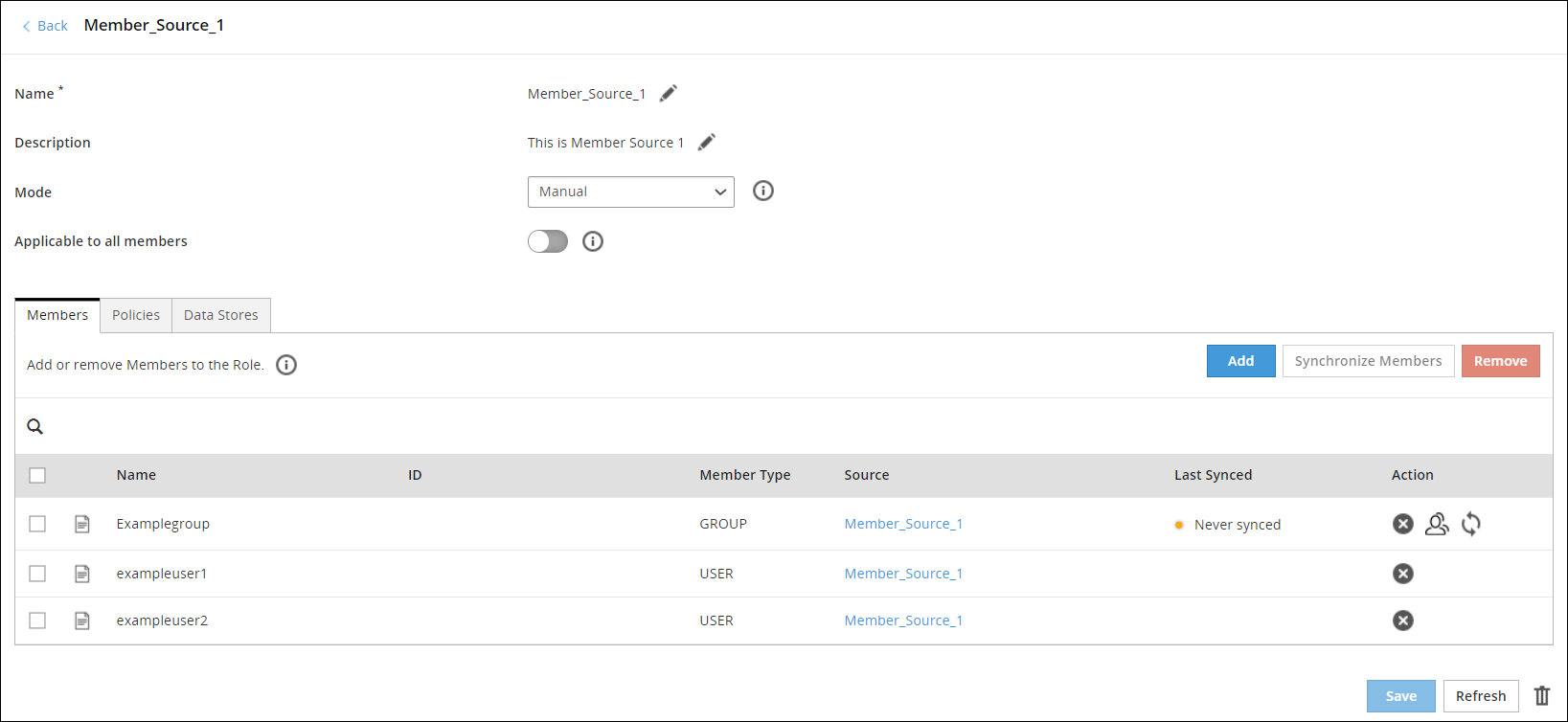

- 6.4.6: Working With Member Sources

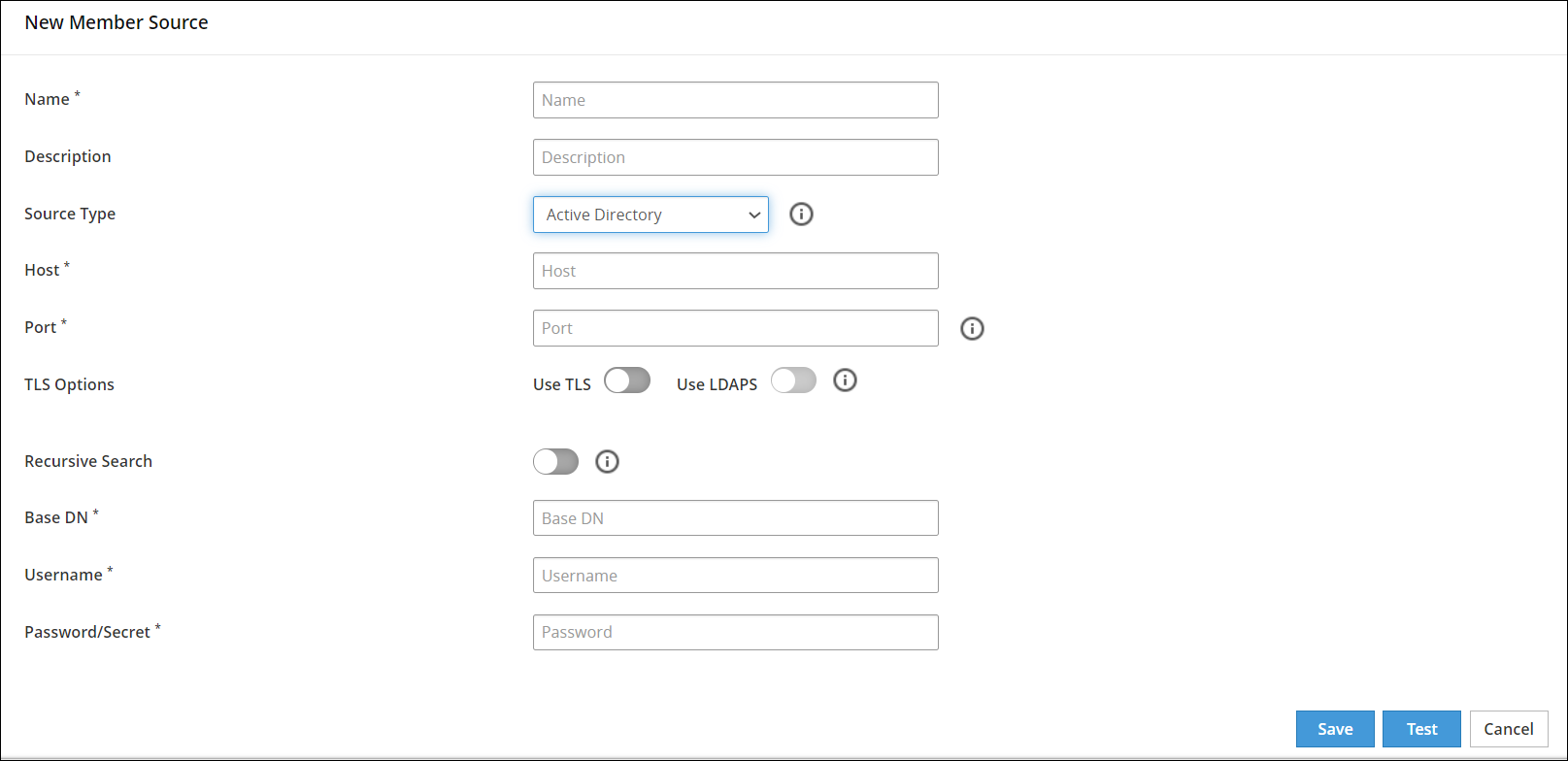

- 6.4.6.1: Configuring Active Directory Member Source

- 6.4.6.2: Configuring File Member Source

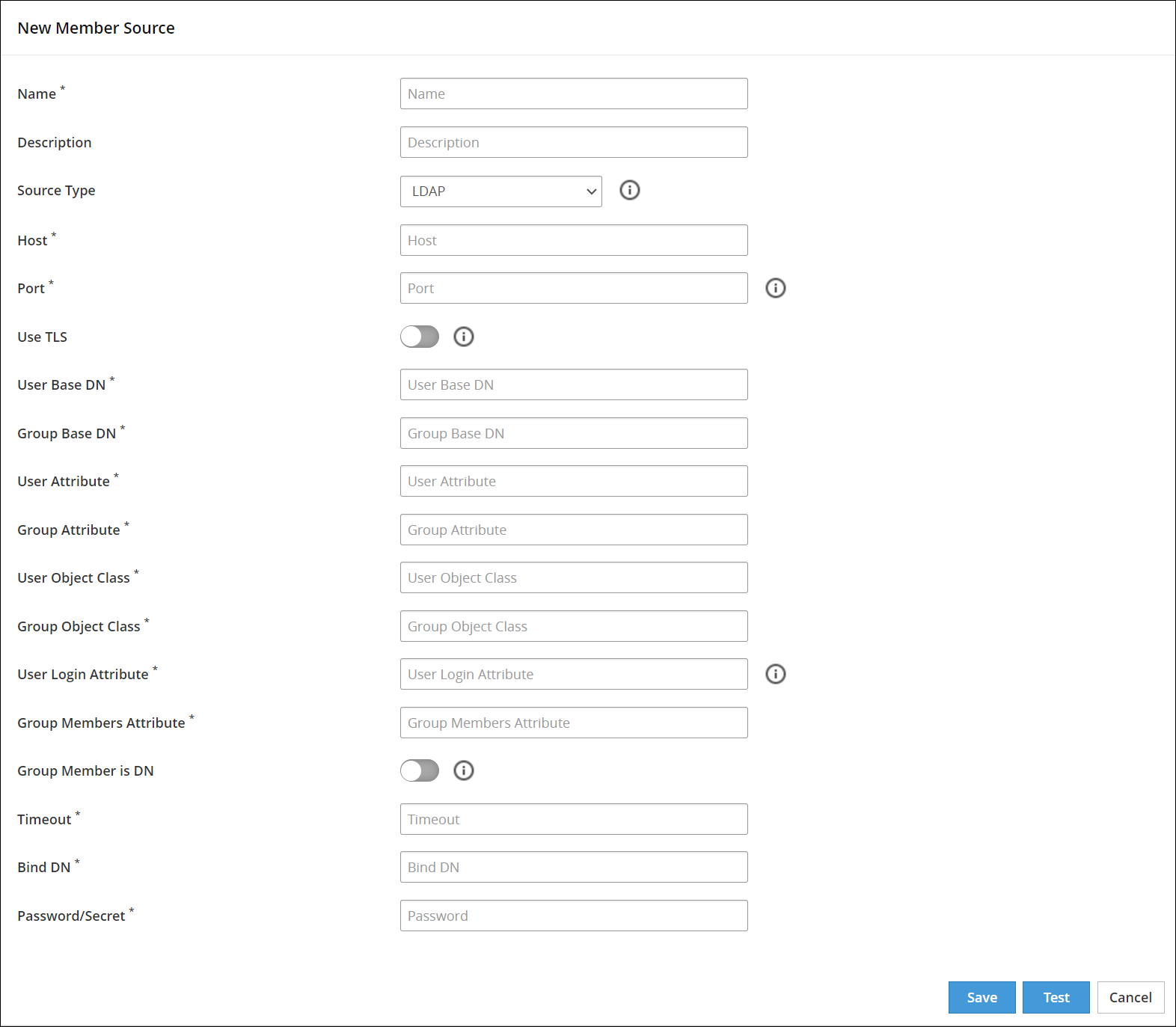

- 6.4.6.3: Configuring LDAP Member Source

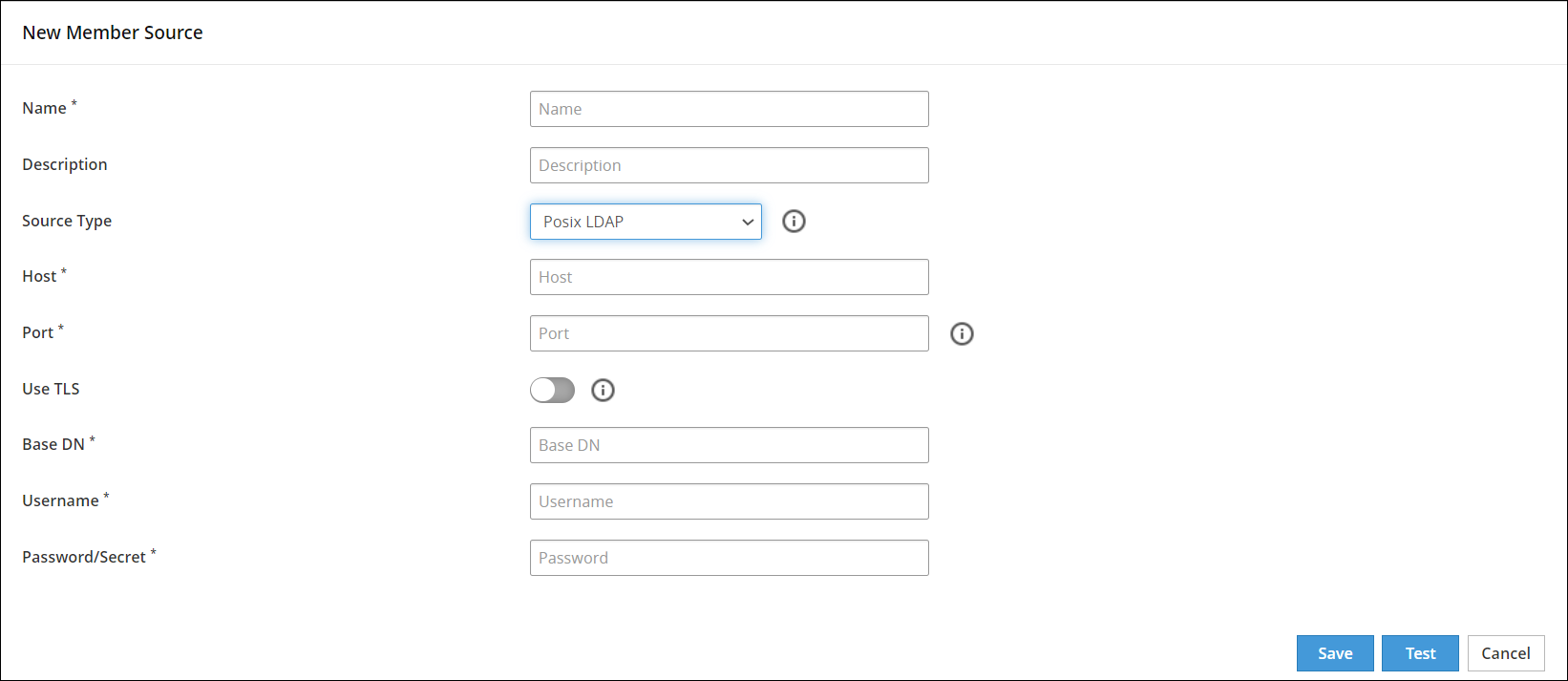

- 6.4.6.4: Configuring POSIX Member Source

- 6.4.6.5: Configuring Azure AD Member Source

- 6.4.6.6: Configuring Database Member Source

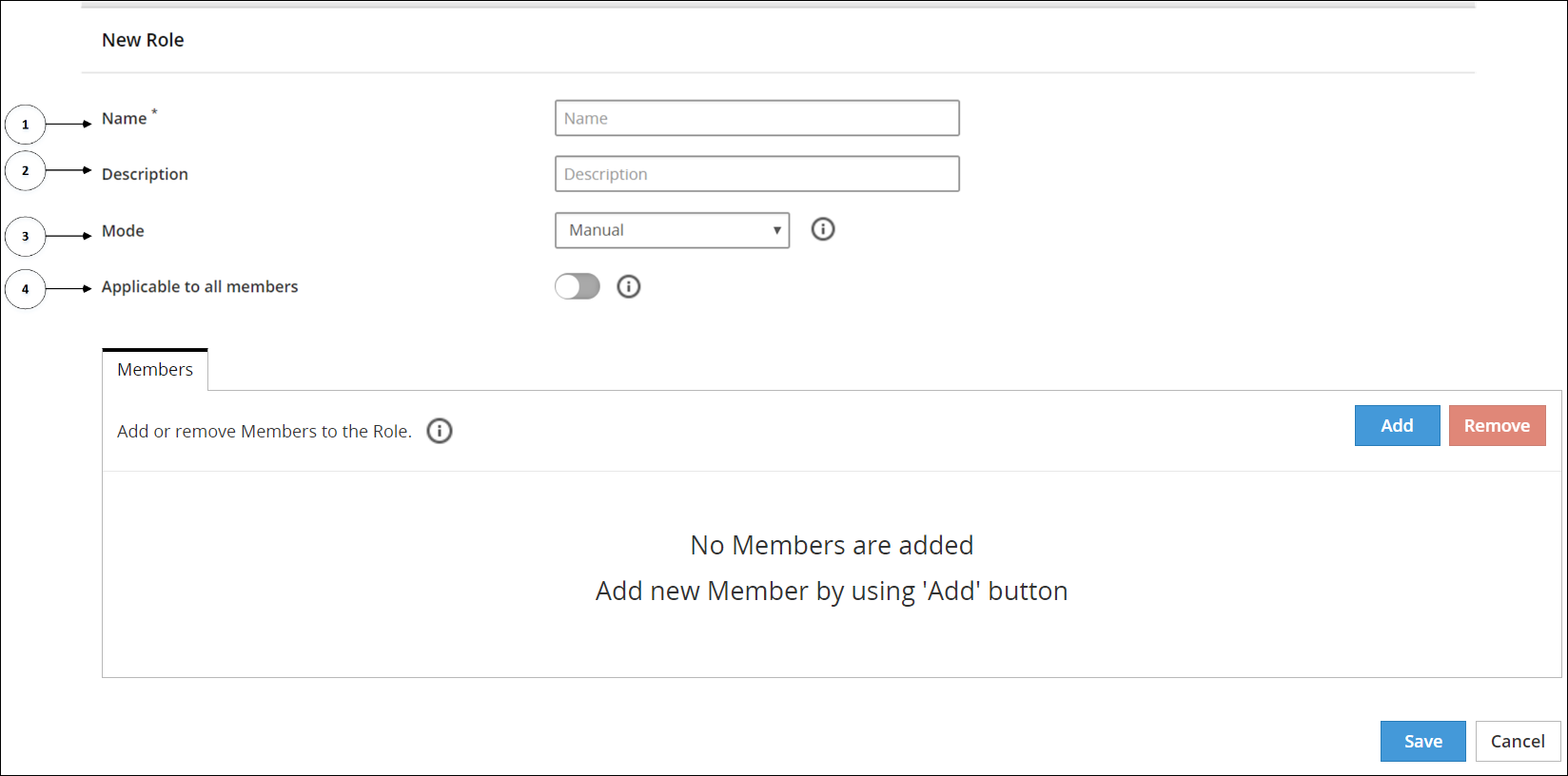

- 6.4.7: Working with Roles

- 6.4.7.1: Creating a Role

- 6.4.7.2: Mode Types for a Role

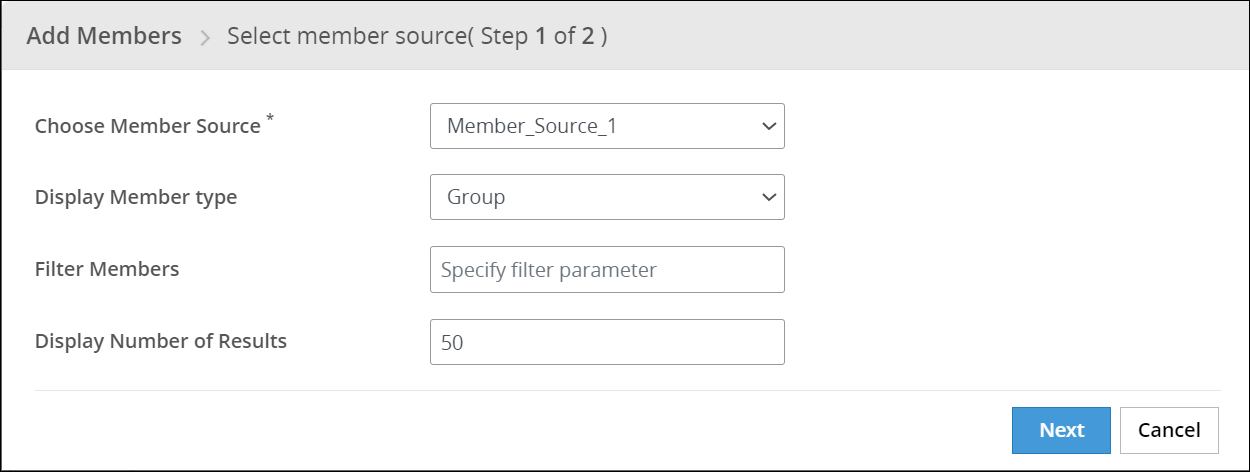

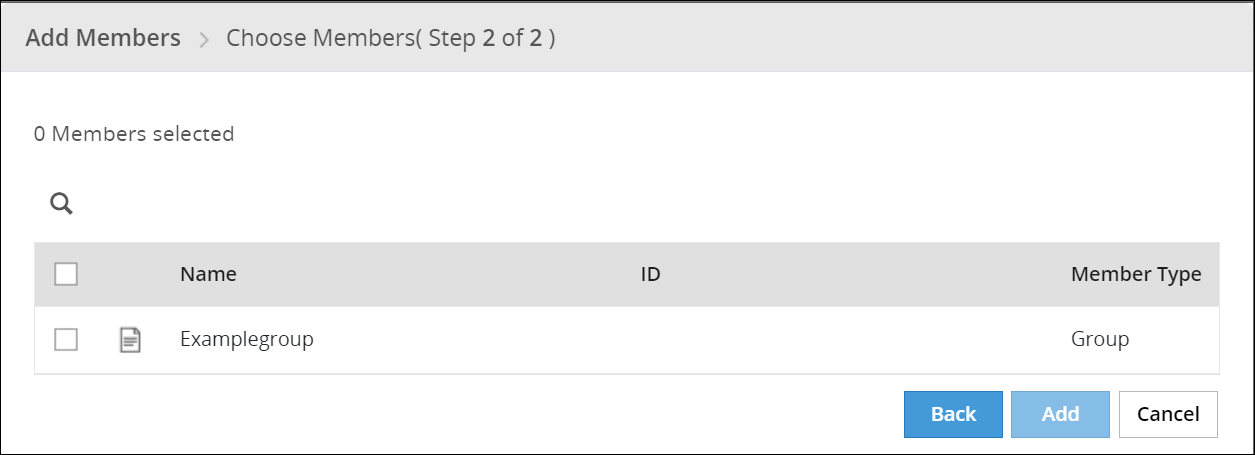

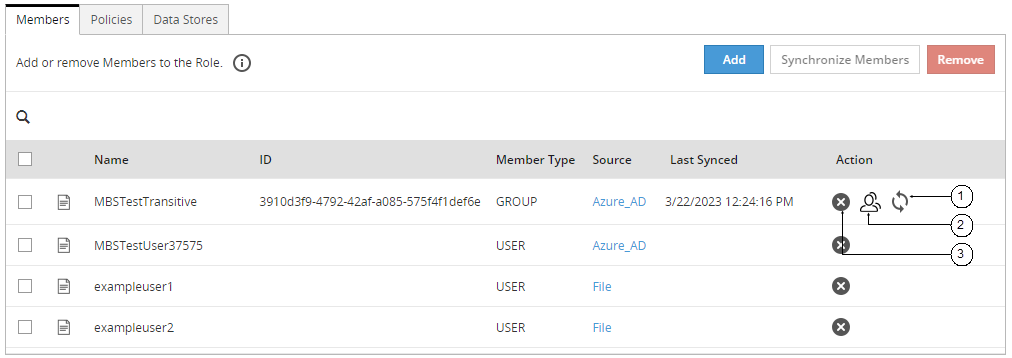

- 6.4.7.3: Adding Members to a Role

- 6.4.7.3.1: Filtering Members from AD and LDAP Member Sources

- 6.4.7.3.2: Filtering Members from Azure AD Member Source

- 6.4.7.4: Synchronizing, Listing, or Removing Members in a Role

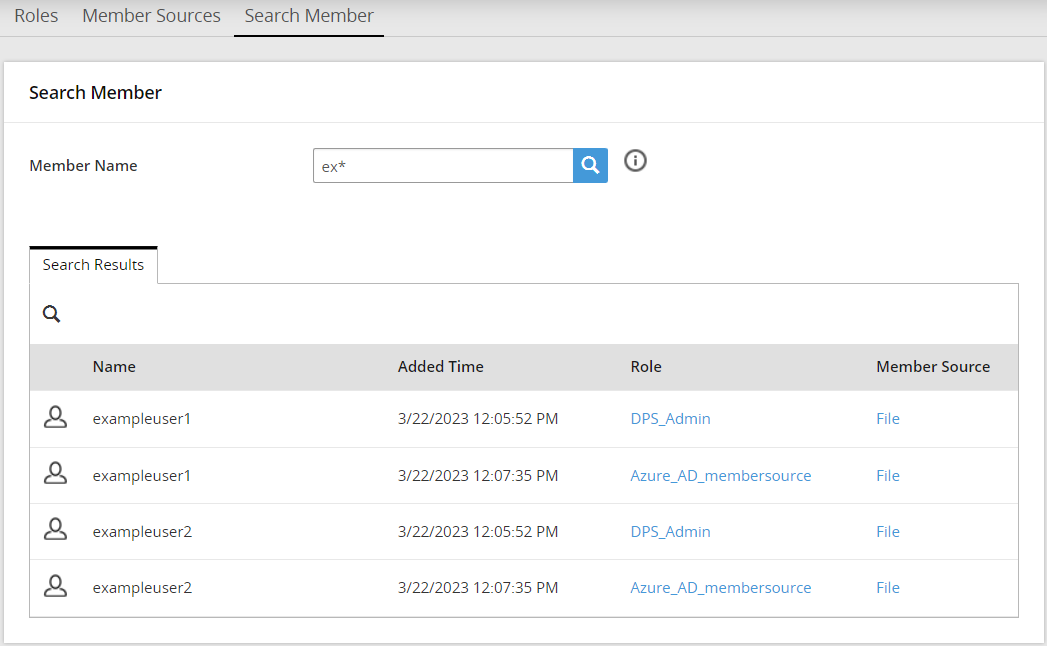

- 6.4.7.5: Searching User

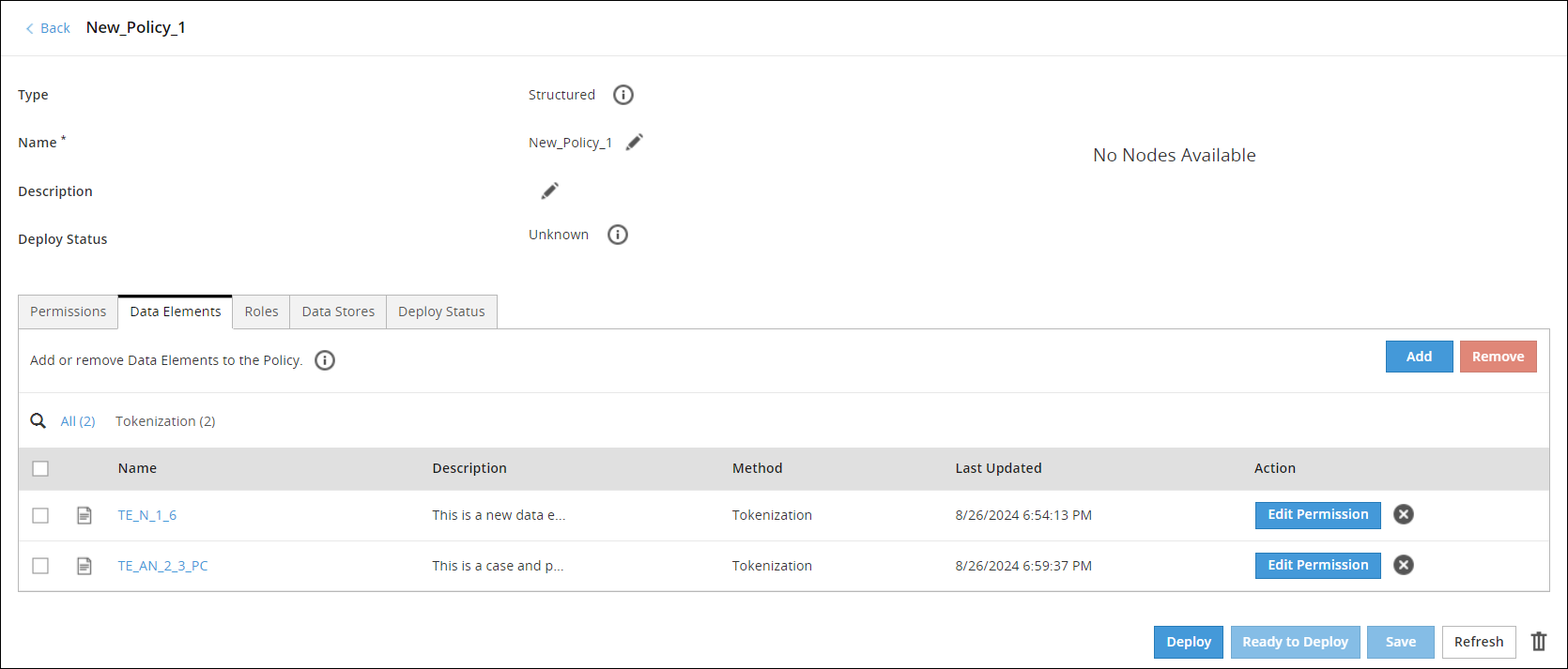

- 6.5: Creating and Deploying Policies

- 6.5.1: Creating Policies

- 6.5.2: Adding Data Elements to Policy

- 6.5.3: Adding Roles to Policy

- 6.5.4: Adding Permissions to Policy

- 6.5.5: Deploying Policies

- 6.5.6: Policy Management using the Policy API

- 6.6: Deploying Data Stores to Protectors

- 6.7: Managing Policy Components

- 6.8: Policy Management Dashboard

- 6.9: Exporting Package for Resilient Protectors

- 6.10: Legacy Features

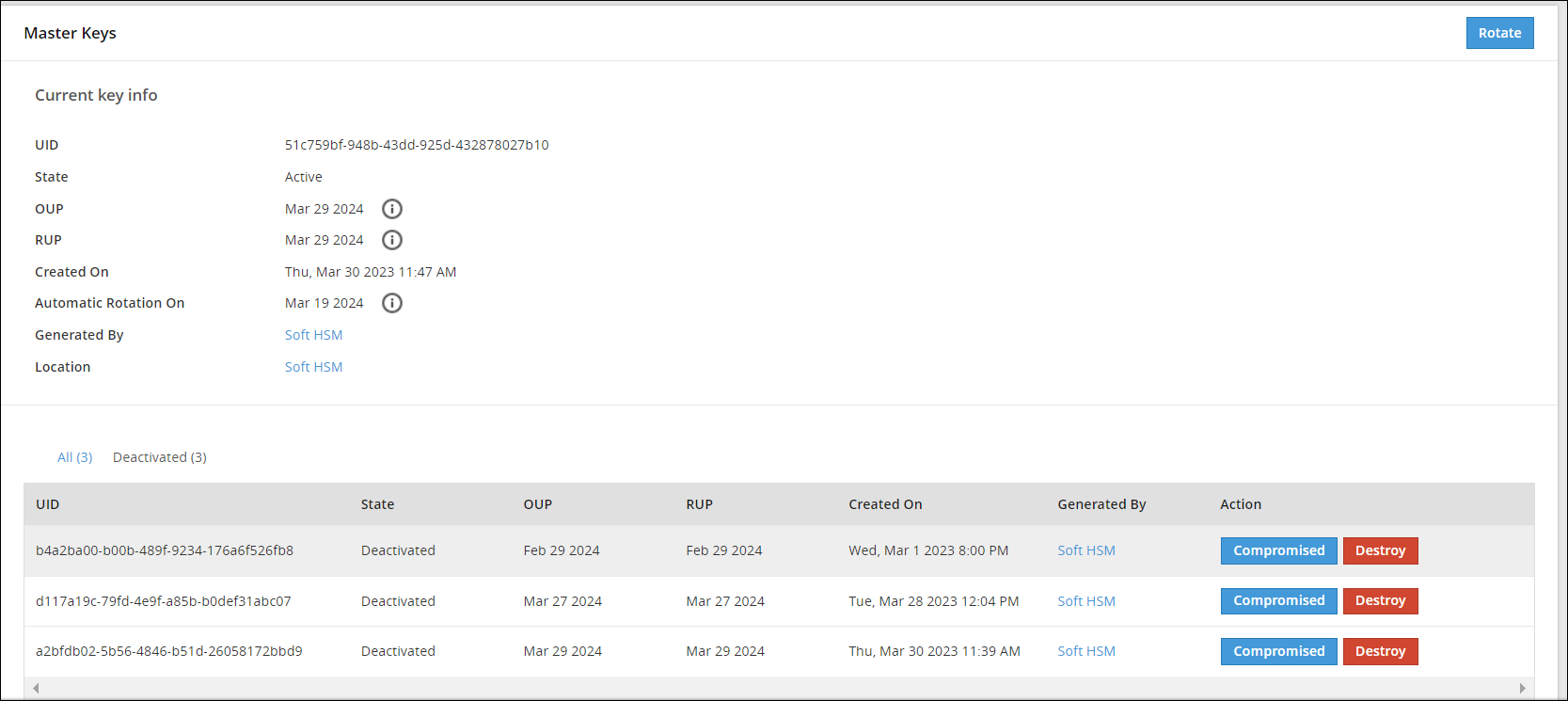

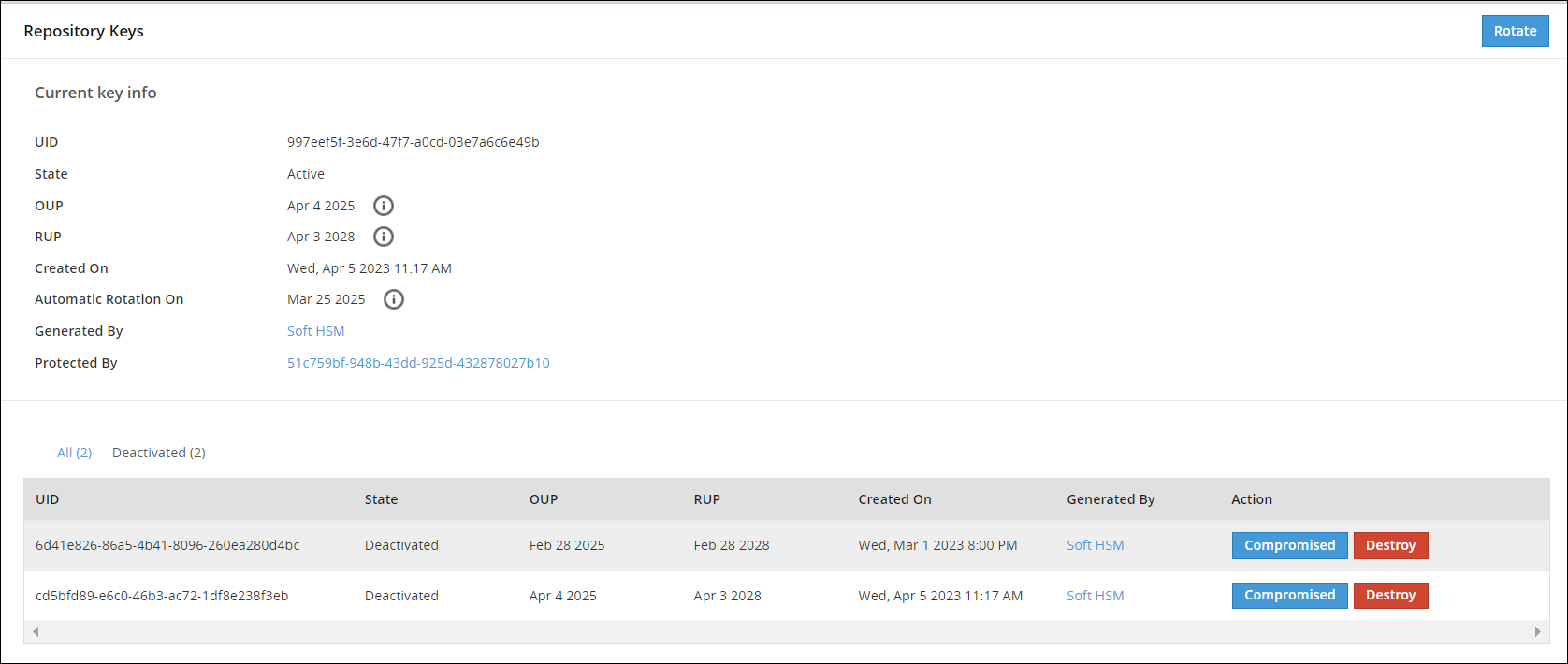

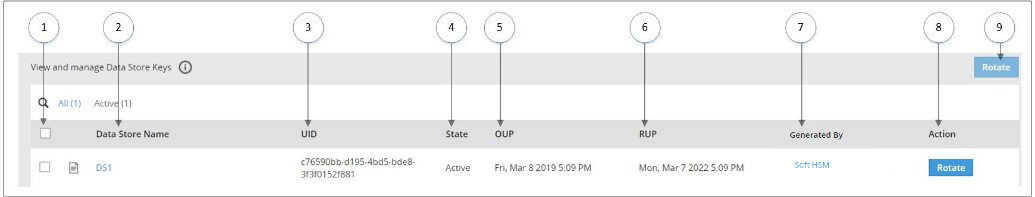

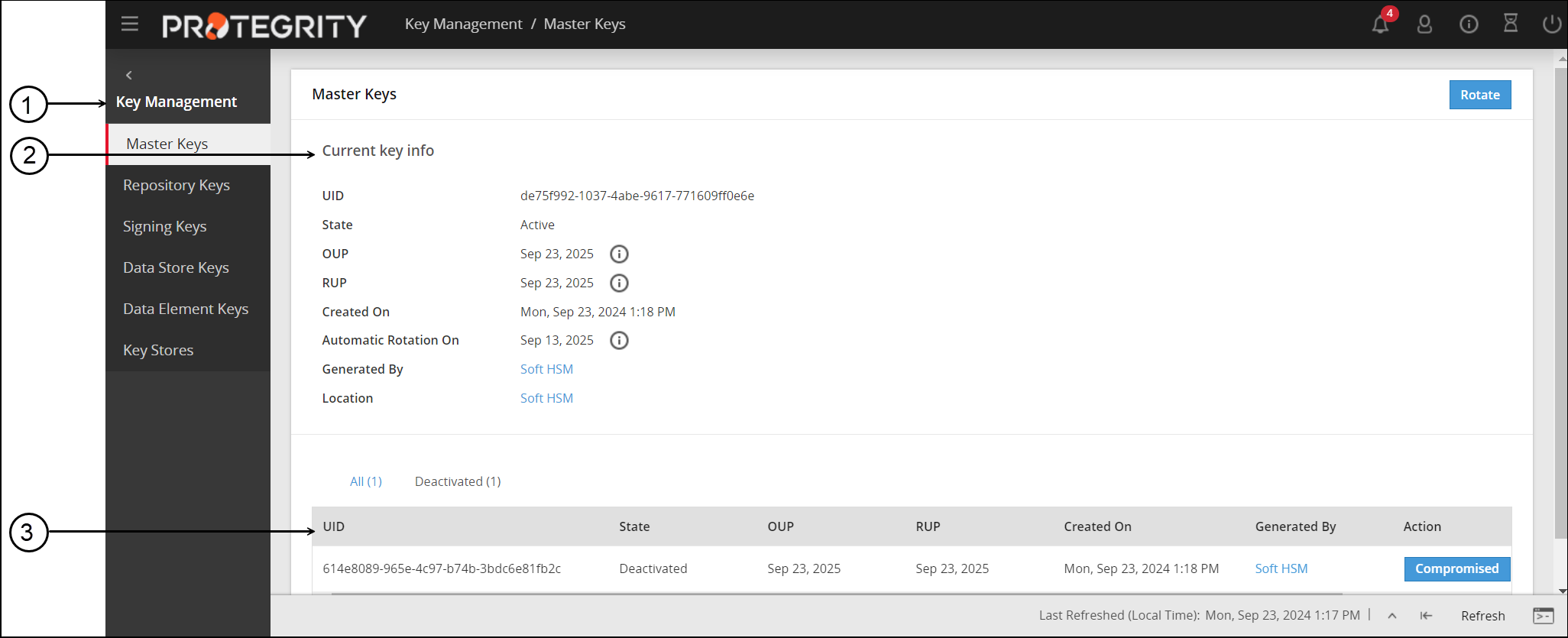

- 7: Key Management

- 7.1: Protegrity Key Management

- 7.2: Key Management Web UI

- 7.3: Working with Keys

- 7.4: Key Points for Key Management

- 7.5: Keys-Related Terminology

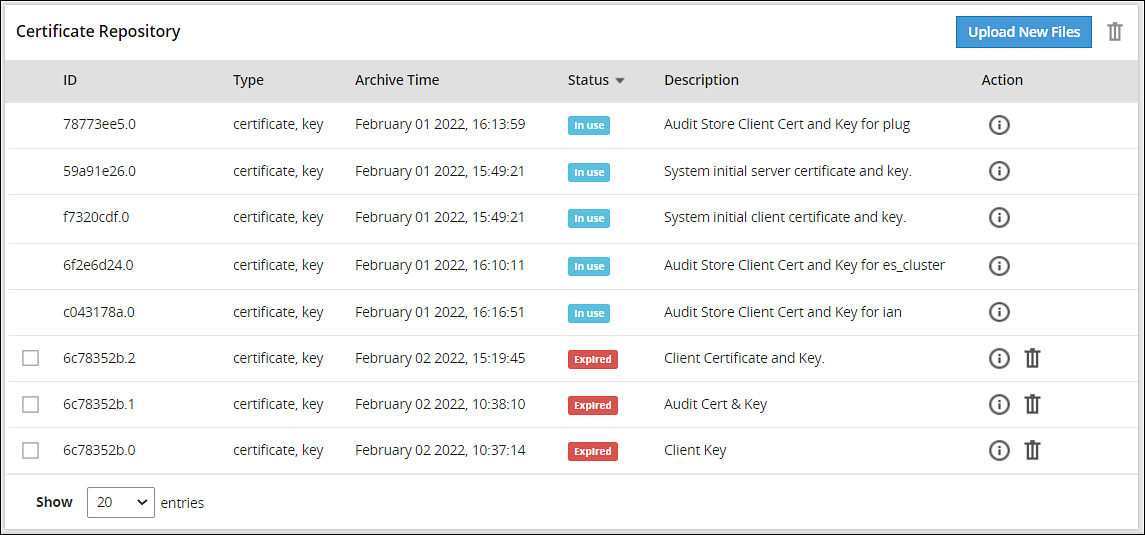

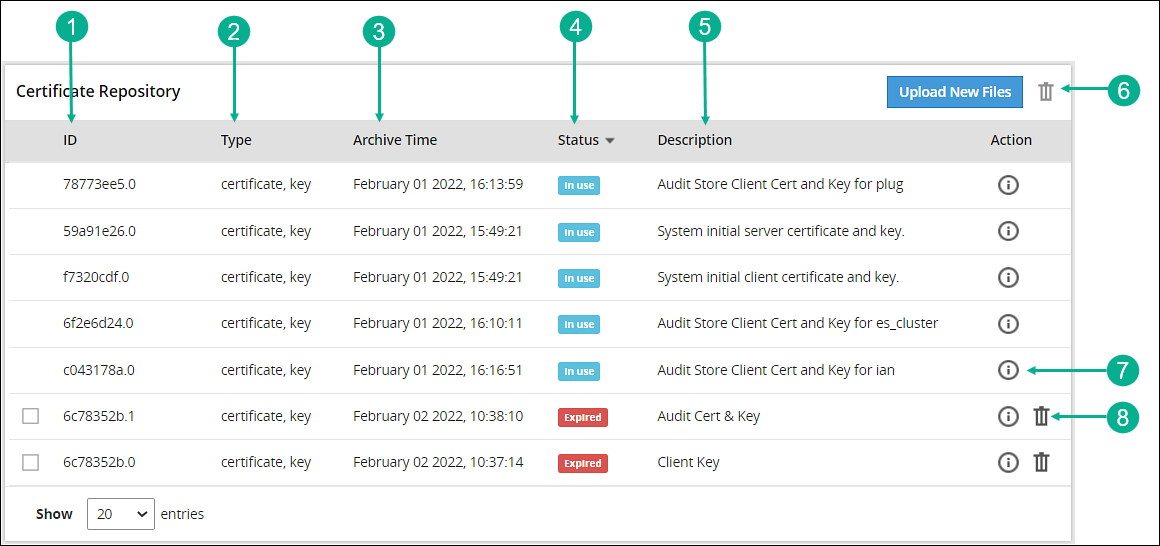

- 8: Certificate Management

- 8.1: Certificates in the ESA

- 8.2: Certificate Management in ESA

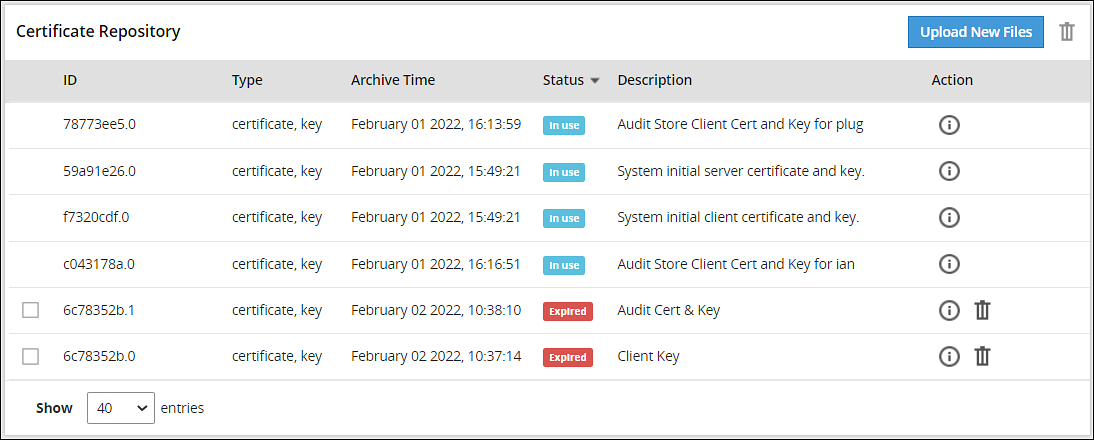

- 8.2.1: Certificate Repository

- 8.2.2: Uploading Certificates

- 8.2.3: Uploading Certificate Revocation List

- 8.2.4: Manage Certificates

- 8.2.5: Changing Certificates

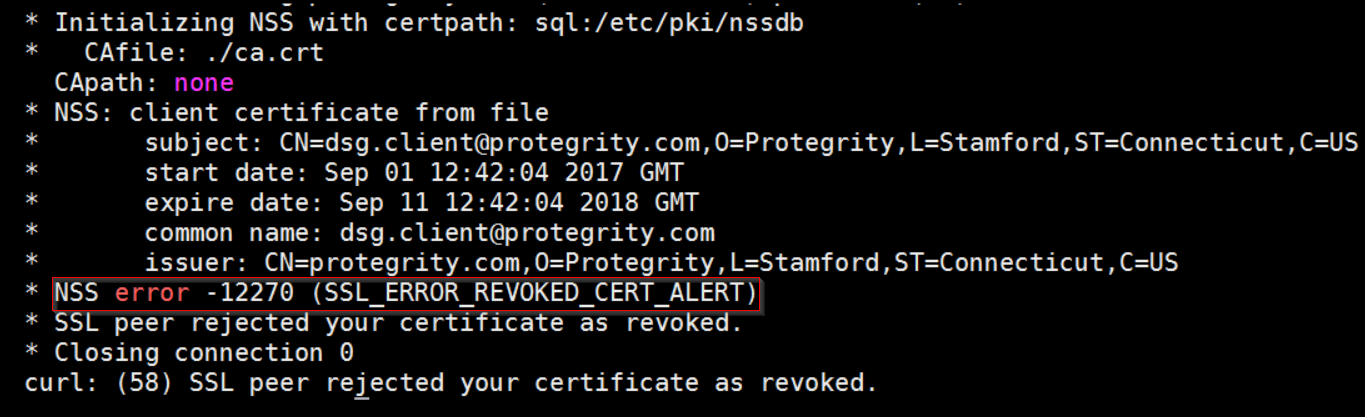

- 8.2.6: Changing CRL

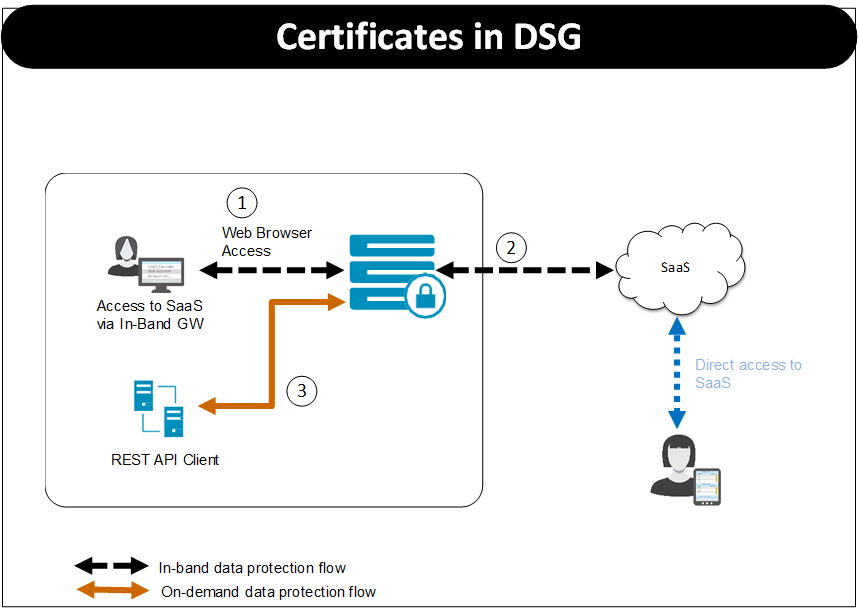

- 8.3: Certificates in DSG

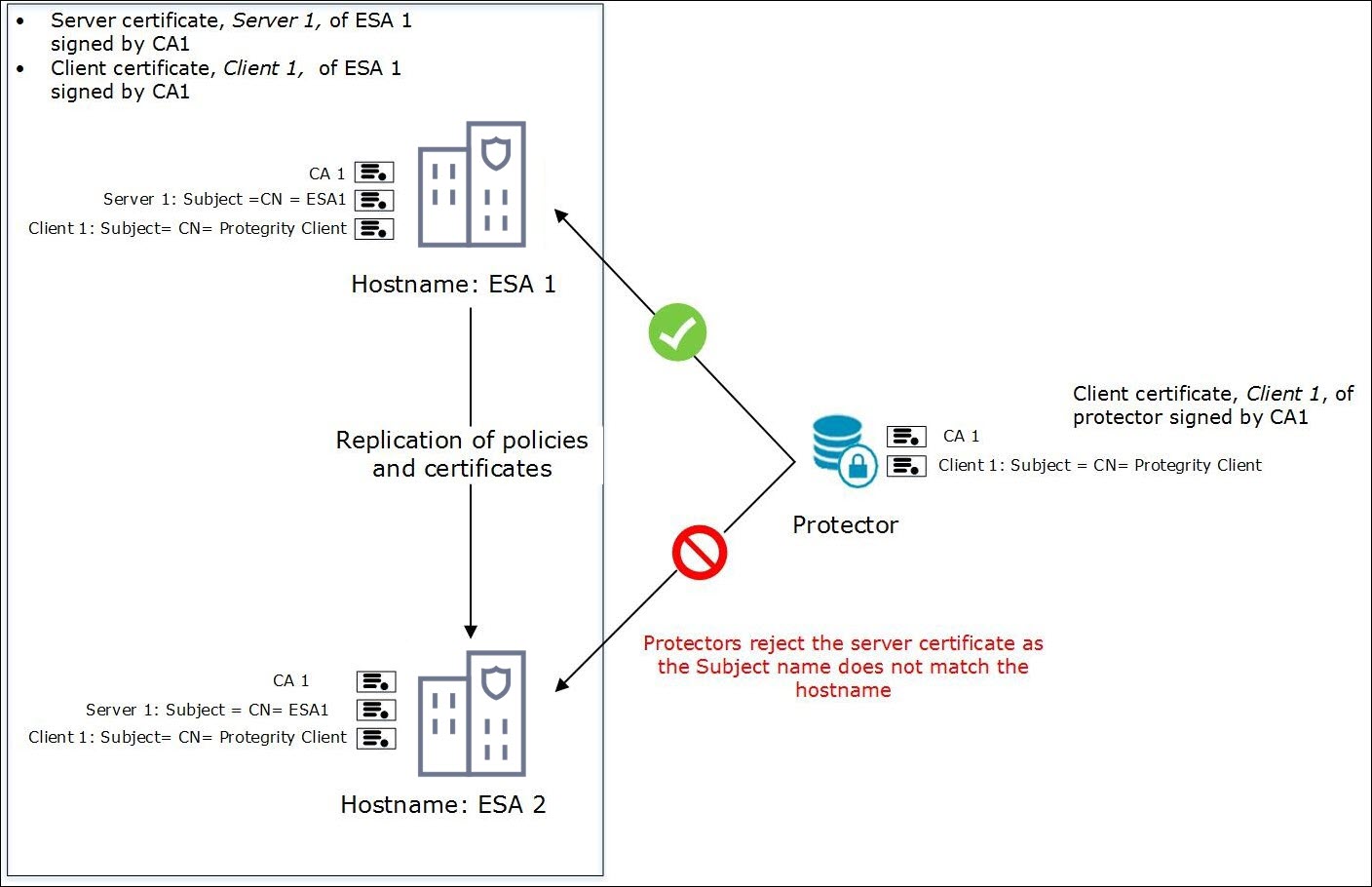

- 8.4: Replicating Certificates in a Trusted Appliance Cluster

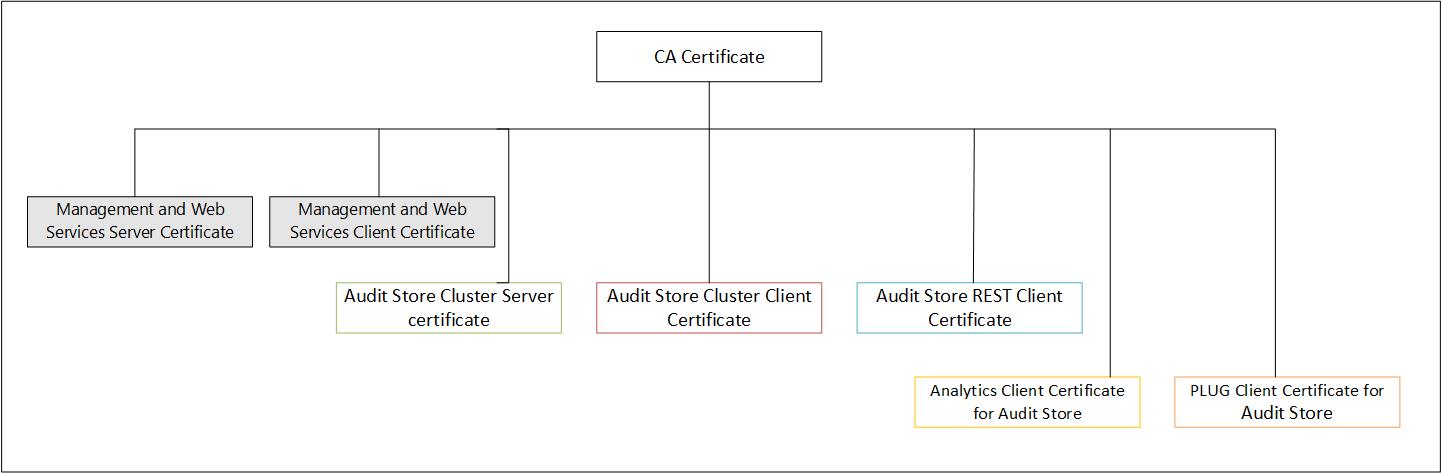

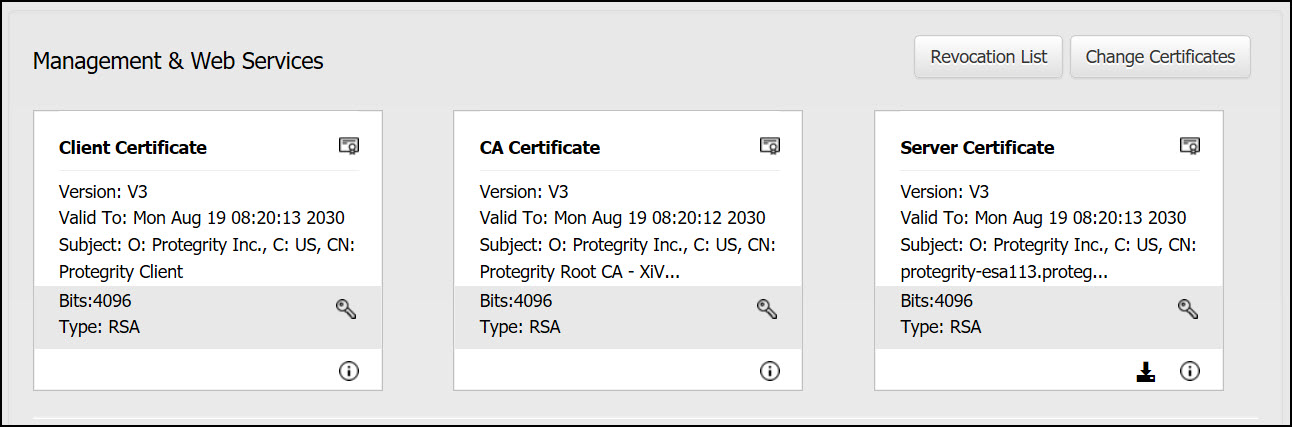

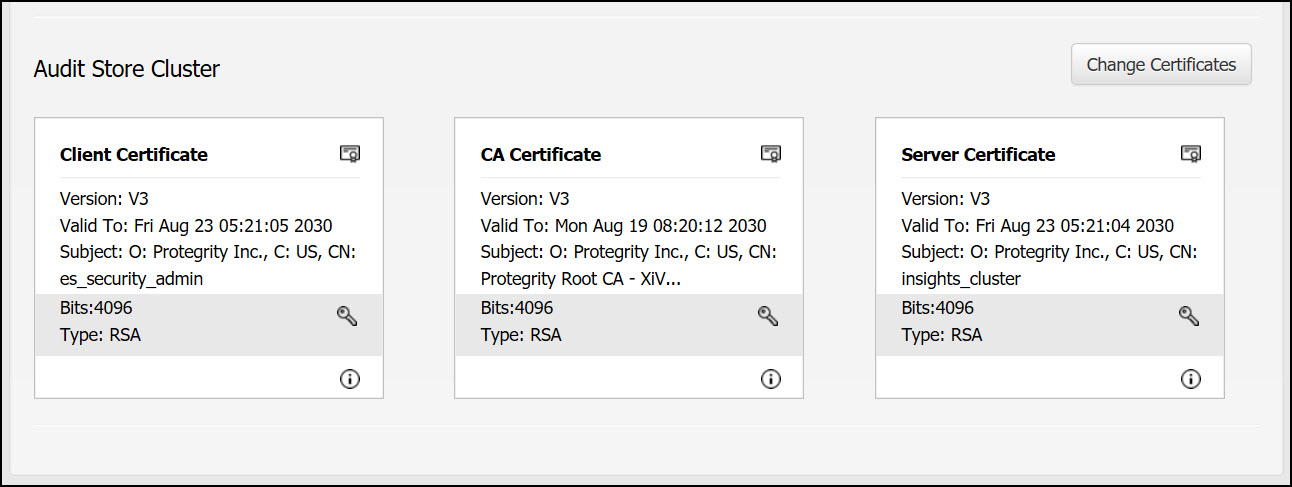

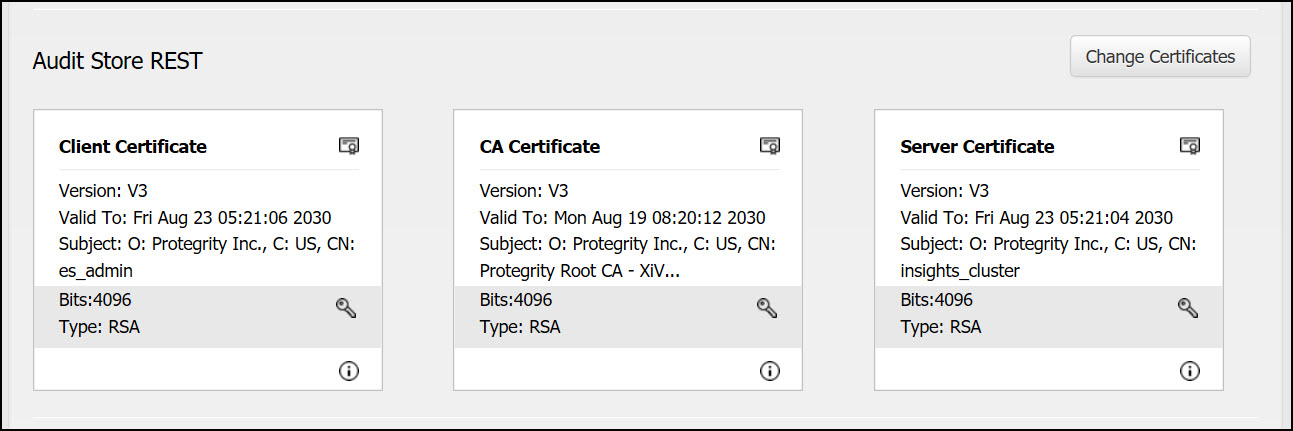

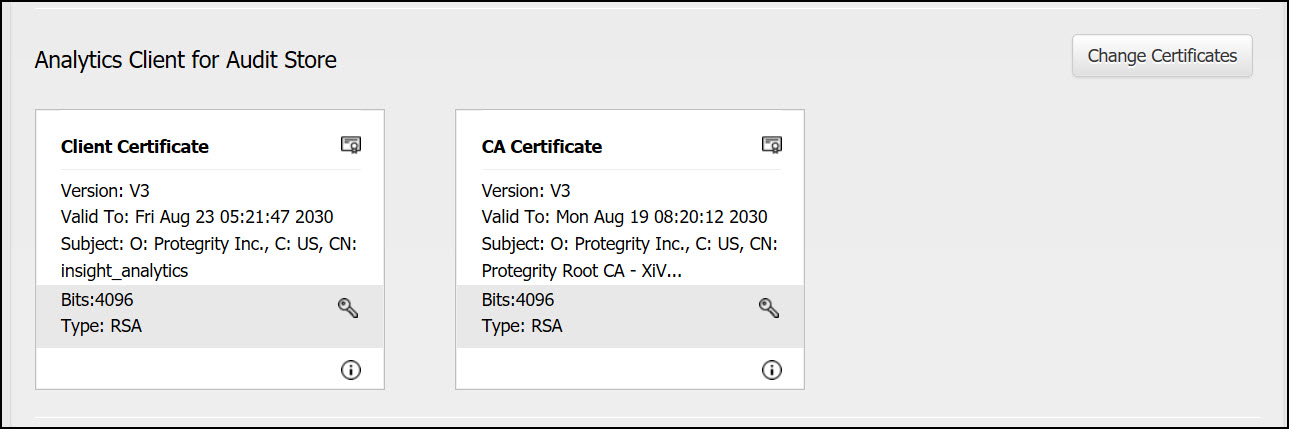

- 8.5: Insight Certificates

- 8.6: Validating Certificates

- 9: Protegrity REST APIs

- 9.1: Accessing the Protegrity REST APIs

- 9.2: View the Protegrity REST API Specification Document

- 9.3: Using the Common REST API Endpoints

- 9.4: Using the Policy Management REST APIs

- 9.5: Using the Encrypted Resilient Package REST APIs

- 10: Protegrity Data Security Platform Licensing

- 10.1: Obtaining a Validated License

- 10.2: Non-Licensed Product

- 10.3: Cluster Licensing

- 11: Troubleshooting

- 11.1: Known issues for the Audit Store

- 11.2: ESA Error Handling

- 11.2.1: Common ESA Logs

- 11.2.2: Common ESA Errors

- 11.2.3: Understanding the Insight indexes

- 11.2.4: Understanding the index field values

- 11.2.5: Index entries

- 11.2.6: Log return codes

- 11.2.7: Protectors security log codes

- 11.2.8: Policy audit codes

- 11.2.9: Additional log information

- 11.3: Known Issues for the td-agent

- 11.4: Known Issues for Protegrity Analytics

- 11.5: Known Issues for the Log Forwarder

- 11.6: Deprecations

- 11.6.1: Deprecations

- 12: Supplemental Guides

- 13: PDF Resources

- 14: Intellectual Property Attribution Statement

1 - Installation

1.1 - Overview of installation

Audience

The installation steps is intended for the following stakeholders:

- Security professionals like security officers who are responsible for protecting business systems in organizations. They plan and ensure execution of security arrangement for their organization.

- System administrators and other technical personnel who are responsible for implementing data security solutions in their organization.

- System Architects who are responsible for providing expert guidance in designing, development and implementation of enterprise data security solution architecture for their business requirements.

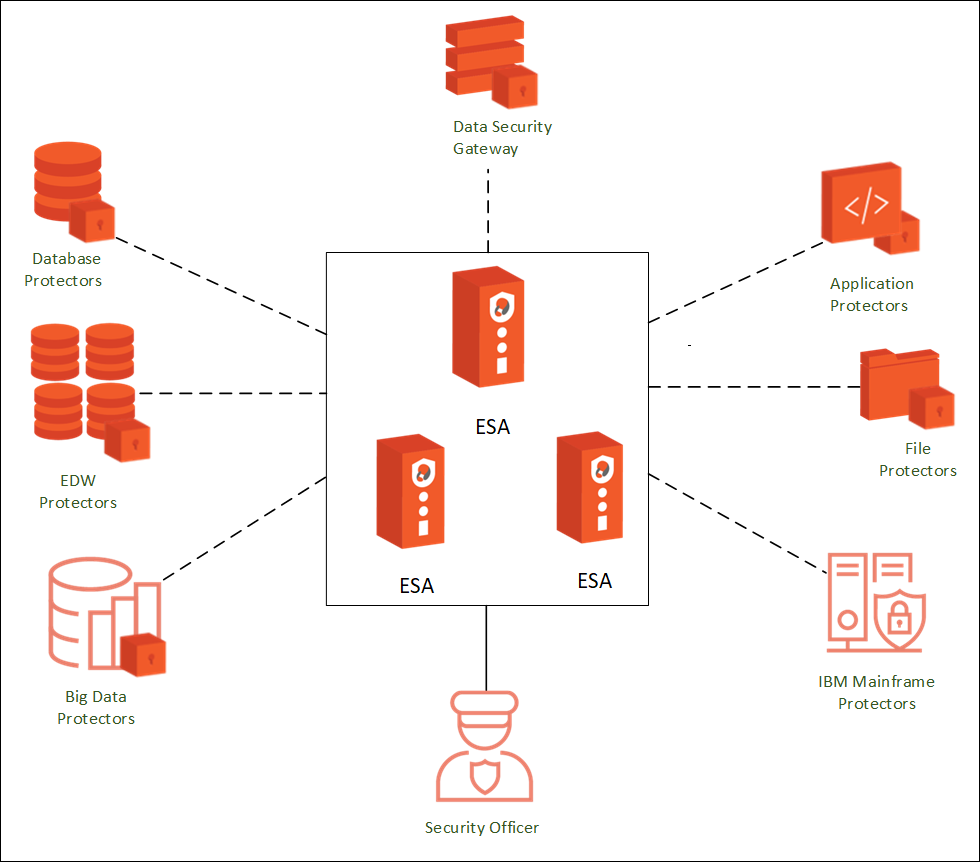

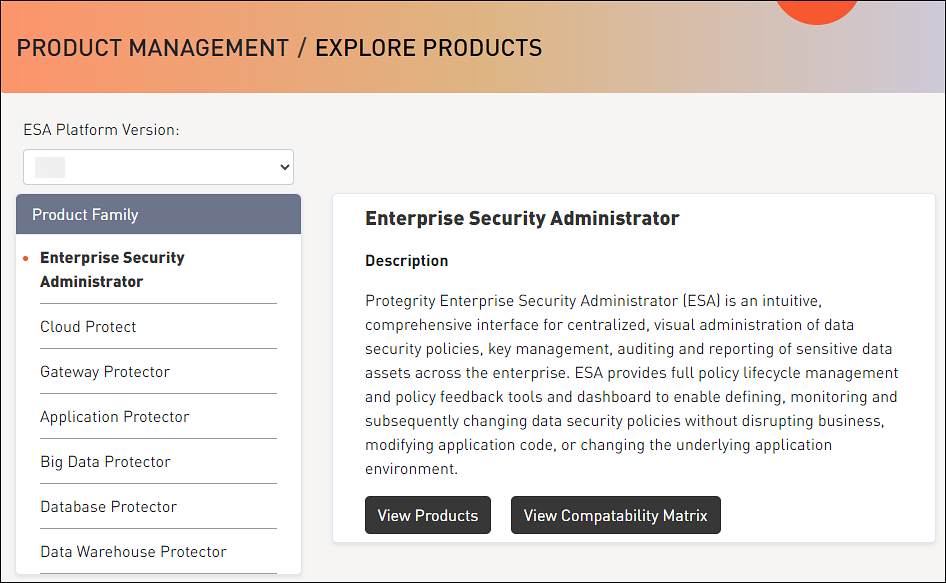

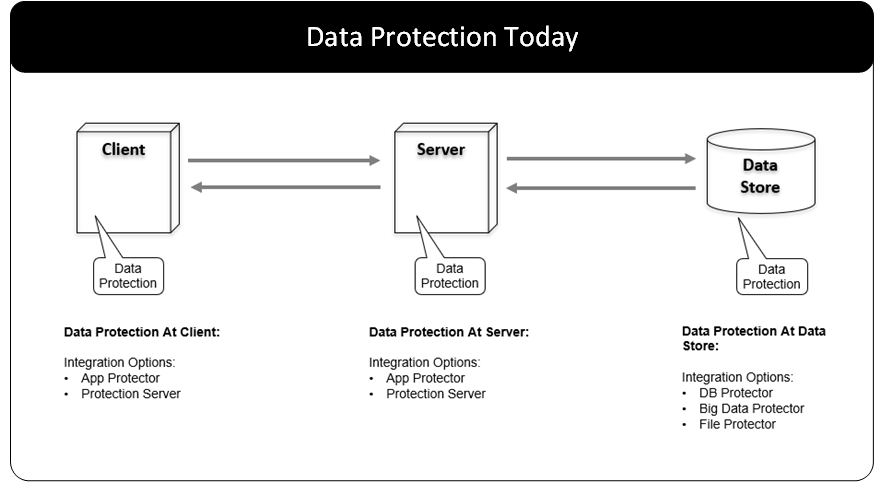

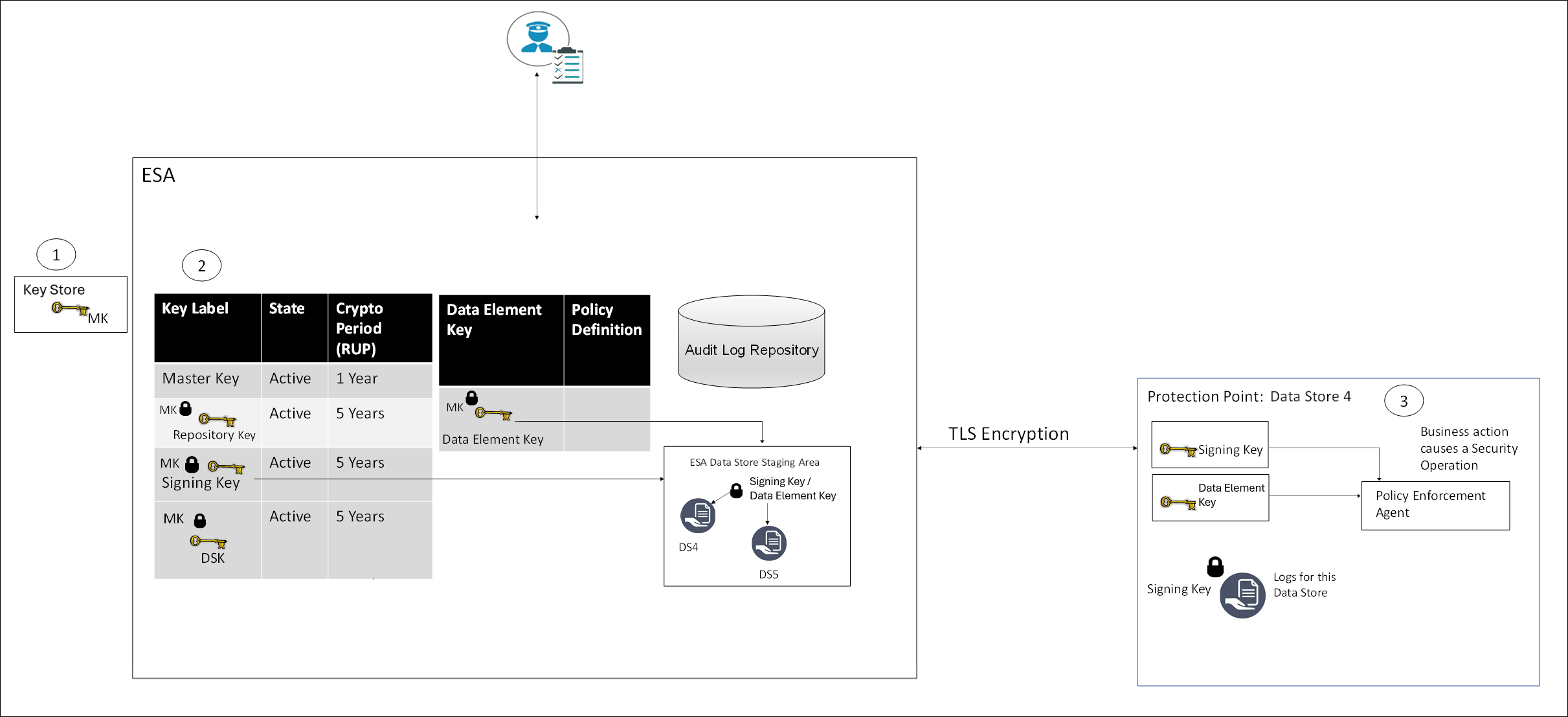

Protegrity Data Security Platform

The Protegrity Data Security Platform is a comprehensive source of enterprise data protection solutions. Its design is based on a hub and spoke deployment architecture.

The Protegrity Data Security Platform has following components:

Enterprise Security Administrator (ESA) – that handles the management of policies, keys, monitoring, auditing and reporting of protected systems in the enterprise.

Data Protectors – that protect sensitive data in the enterprise and deploy security policy for enforcement on each installed system. Policy is deployed from ESA to the Data Protectors. The Audit Logs of all activity on sensitive data are reported and stored in the Audit Store cluster on the ESA.

General Overview

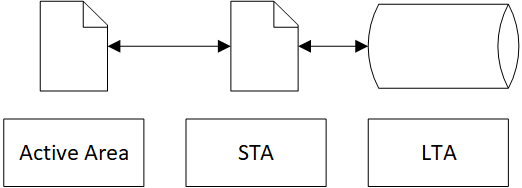

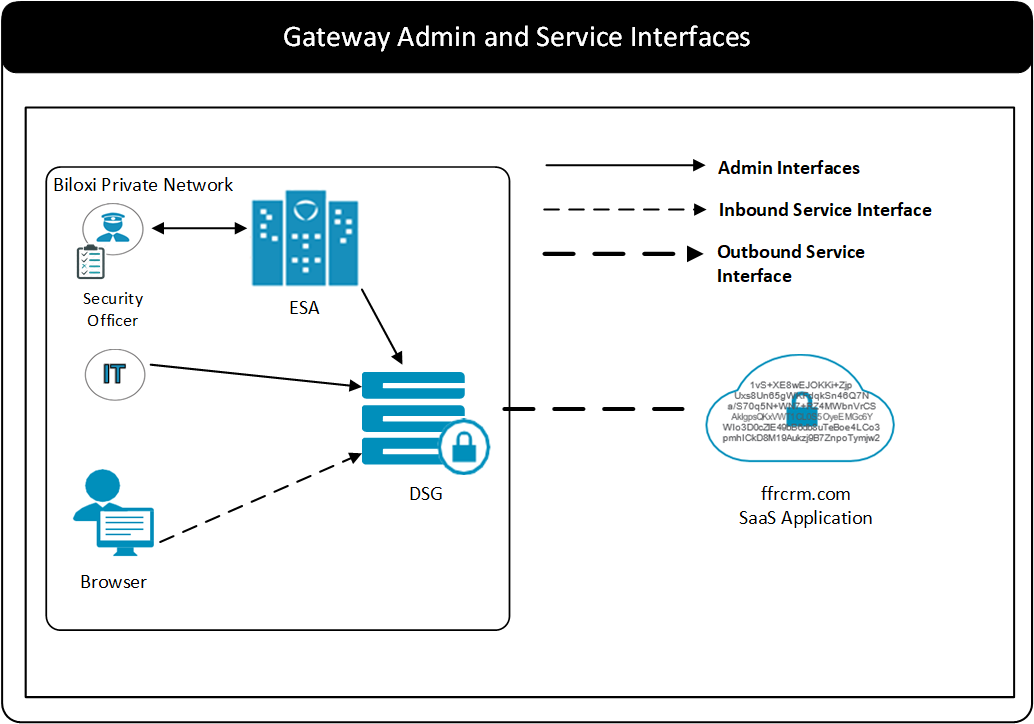

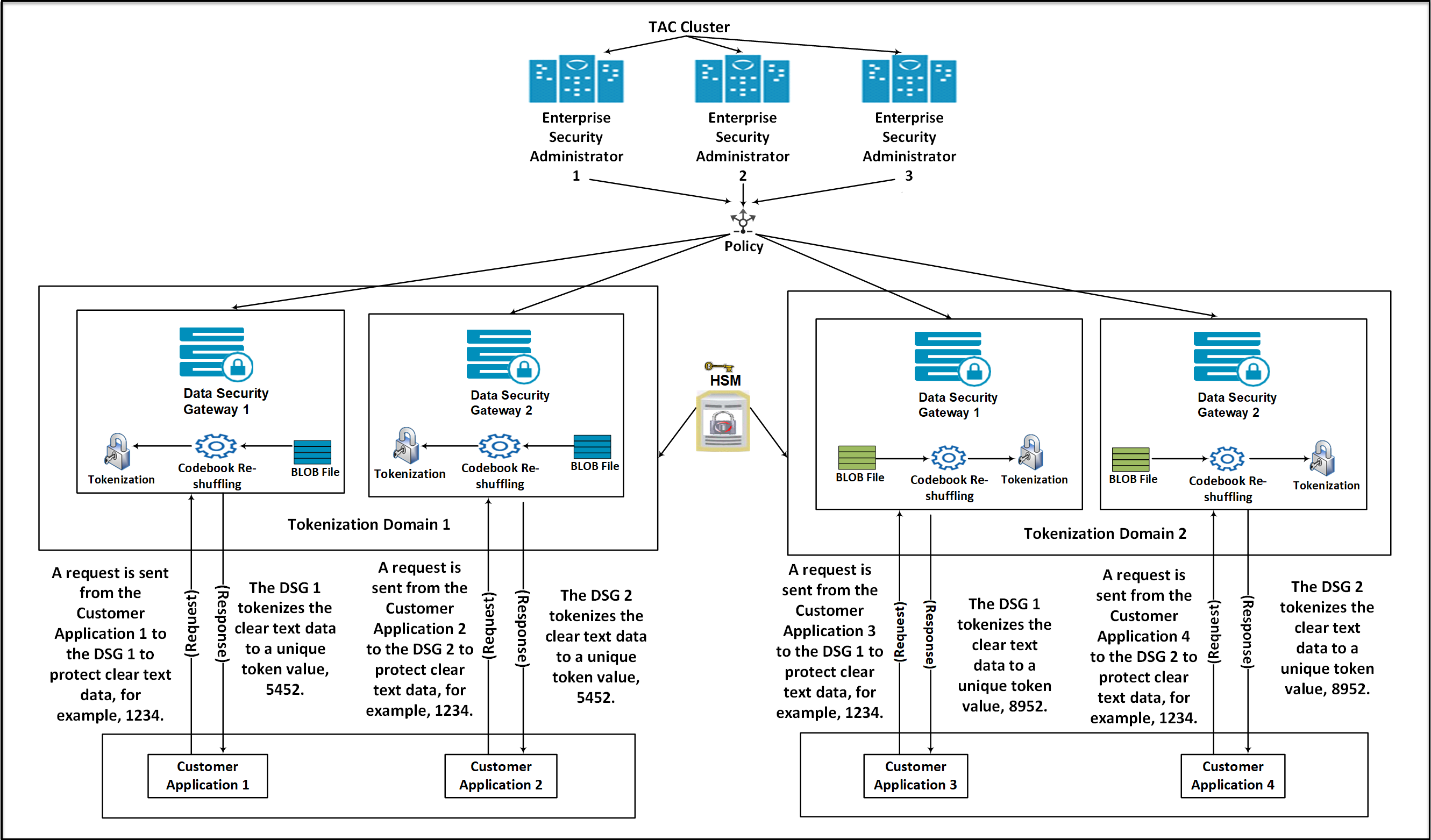

The following diagram shows the general overview of various Protegrity products.

1.2 - System Requirements

The following table lists the supported components and their compatibility settings.

| Component | Compatibility |

|---|---|

| Application Protocols | HTTP 1.0, HTTP 1.1, SSL/TLS |

| WebServices | SOAP 1.1 and WSDL 1.1 |

| Web Browsers | Minimum supported Web Browser versions are as follows: - Google Chrome version 129.0.6668.58/59 (64-bit) - Mozilla Firefox version 130.0.1 (64-bit) or higher - Microsoft Edge version 128.0.2739.90 (64-bit) |

The following table lists the minimum hardware configurations.

| Hardware Components | Configuration |

|---|---|

| CPU | Multicore Processor, with minimum 8 CPUs |

| RAM | 32 GB |

| Hard Disk | 320 GB |

| CPU Architecture | x86 |

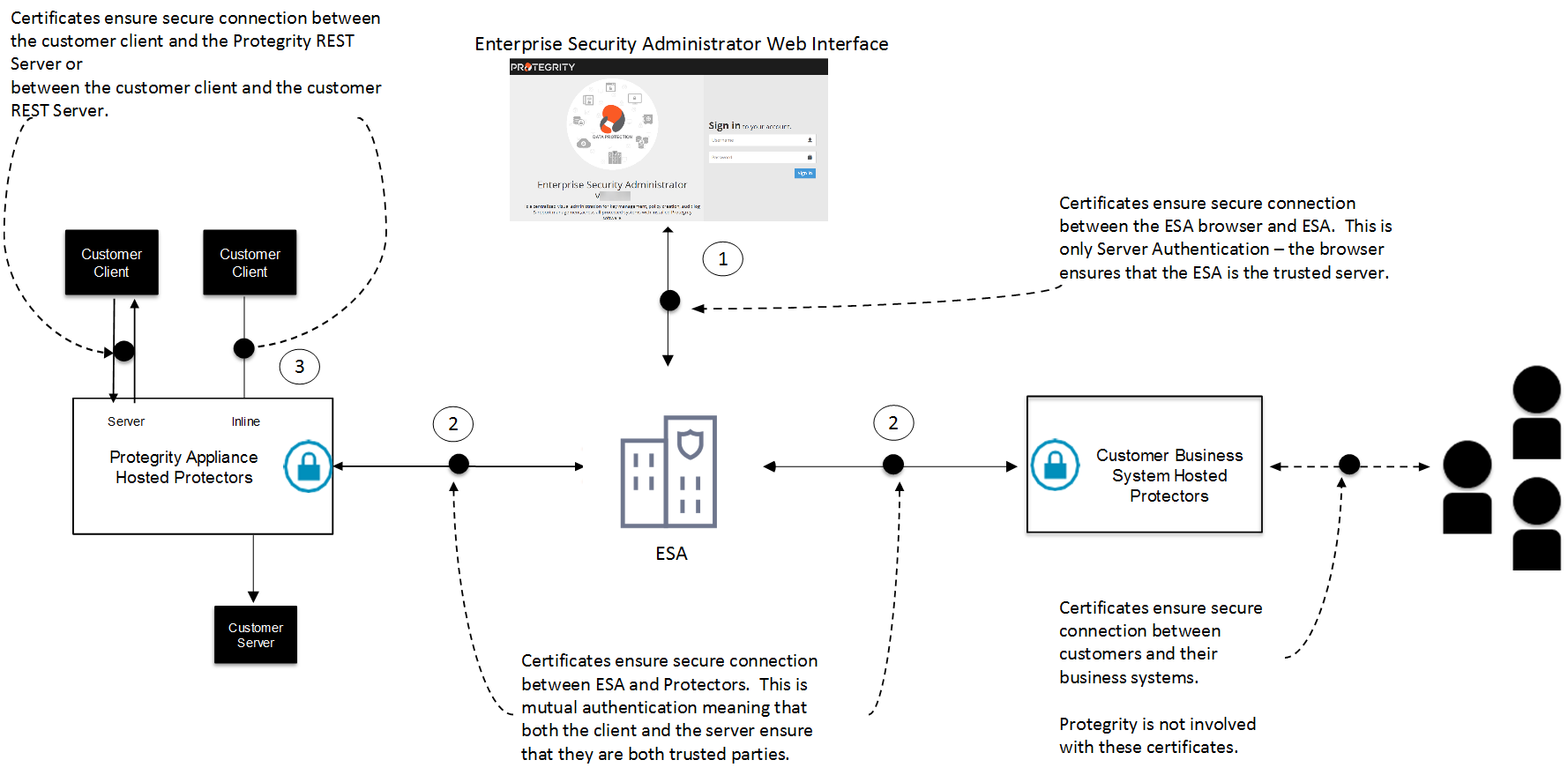

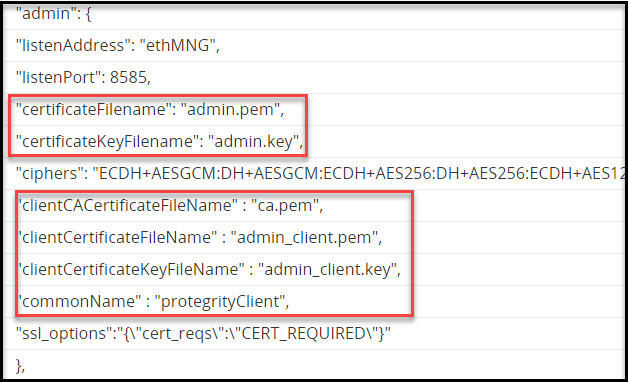

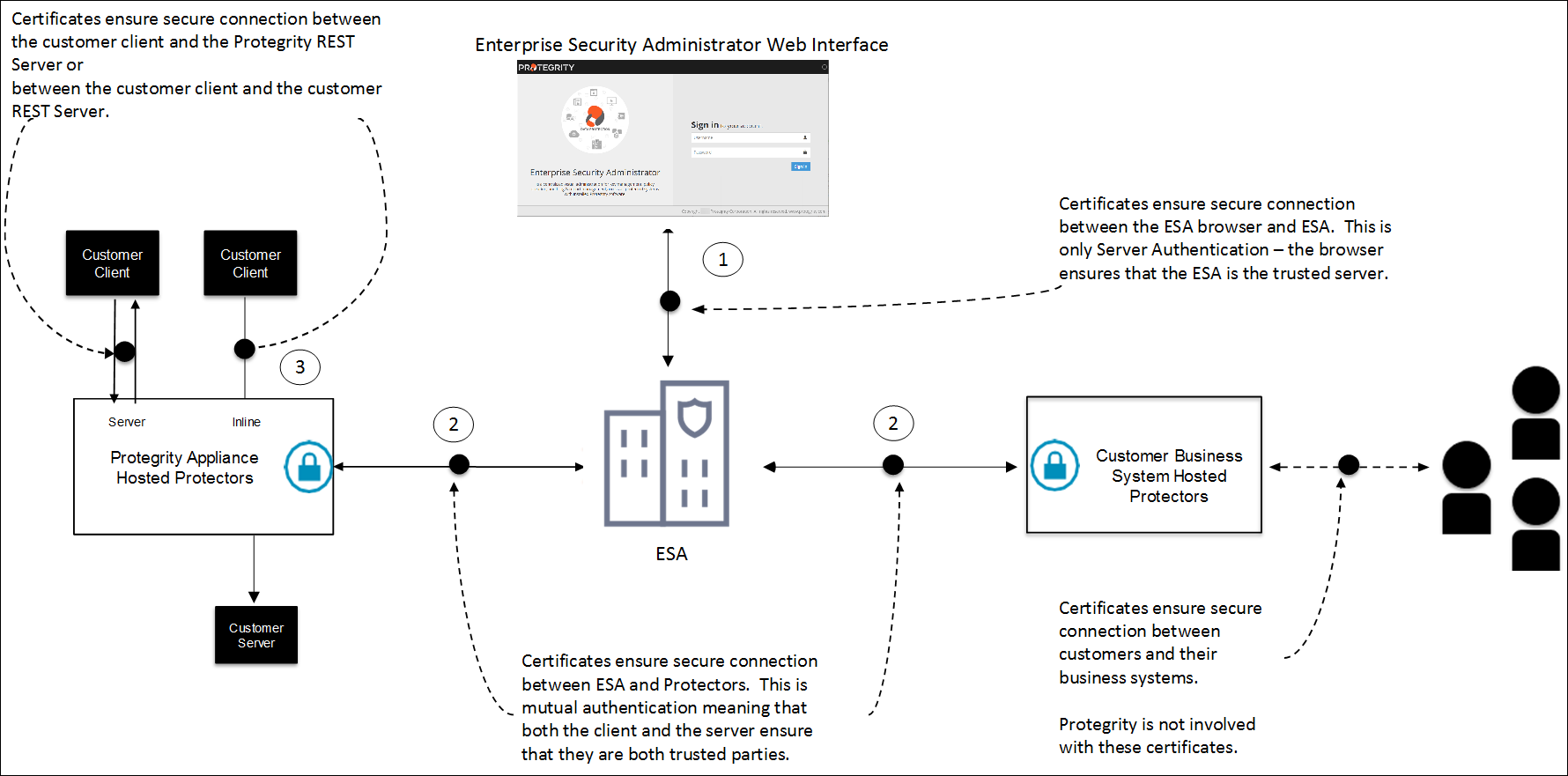

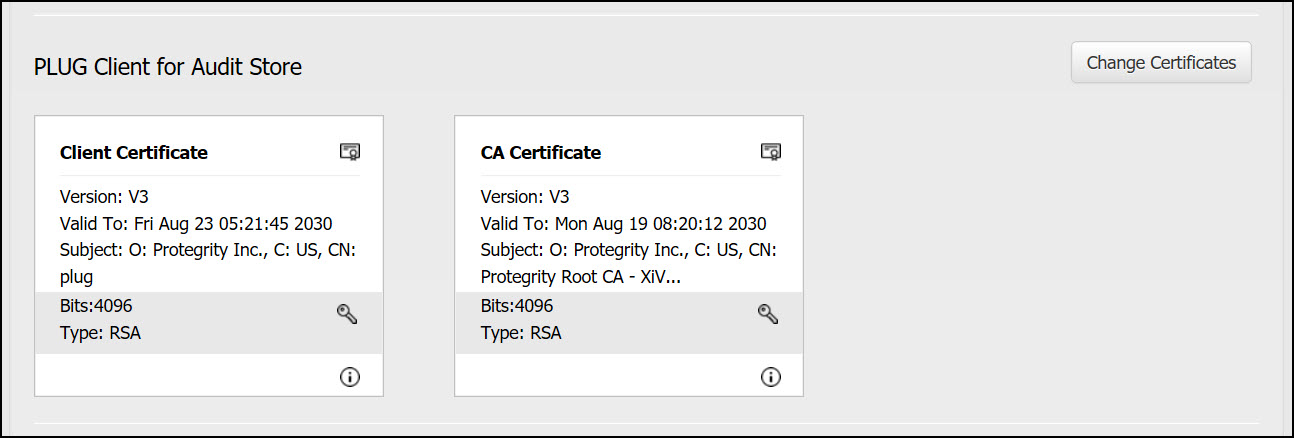

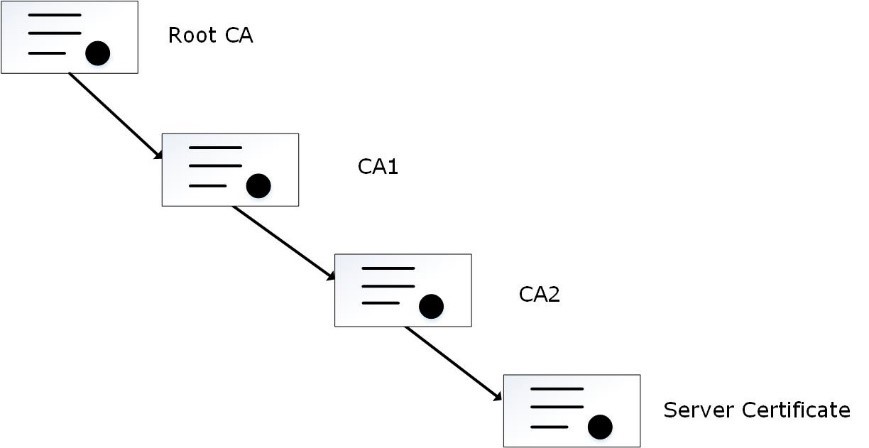

Certificate Requirements

Certificates are used for secure communication between the ESA and protectors. The certificate-based communication and authentication involves a client certificate, server certificate, and a certifying authority that authenticates the client and server certificates.

The various components within the Protegrity Data Security Platform that communicate with and authenticate each other through digital certificates are:

- ESA Web UI and ESA

- Insight

- ESA and Protectors

- Protegrity Appliances and external REST clients

Protegrity client and server certificates are self-signed by Protegrity. However, you can replace them by certificates signed by a trusted and commercial CA. These certificates are used for communication between various components in ESA.

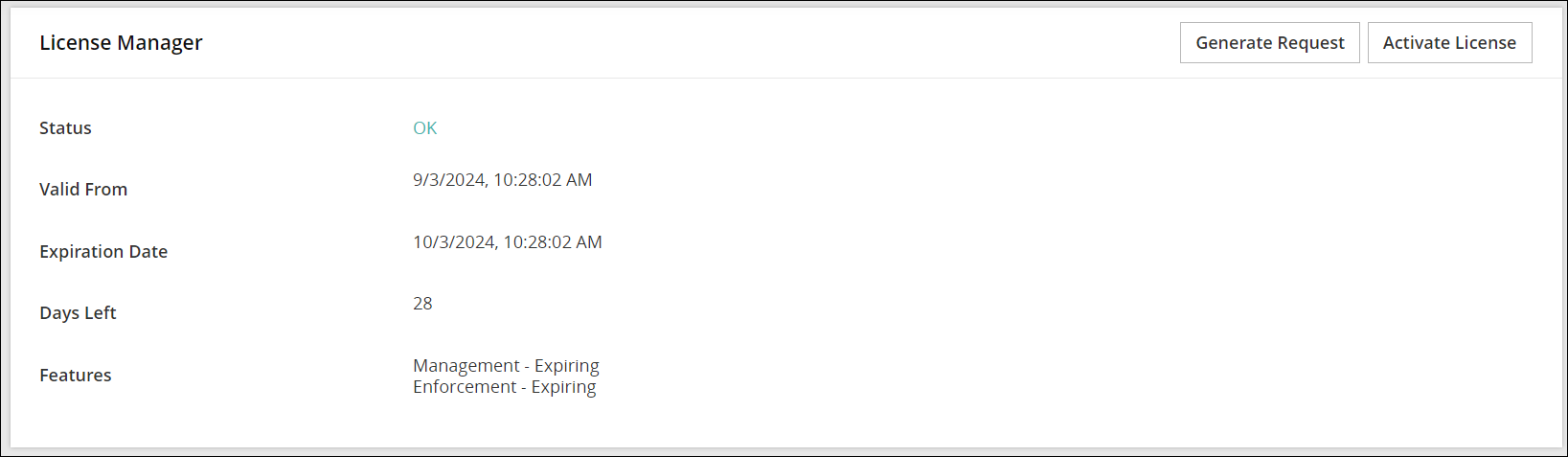

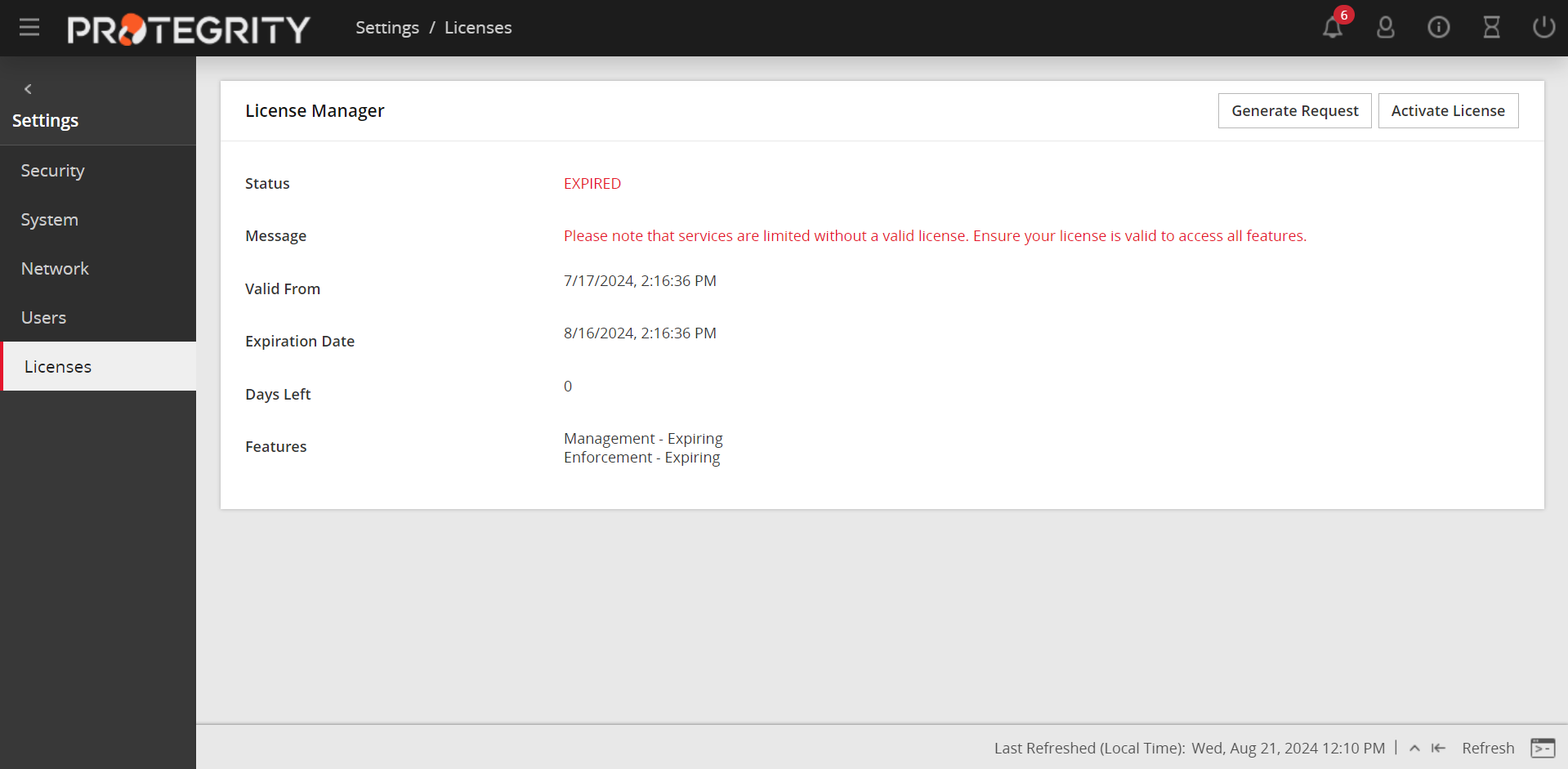

Licensing Requirements

Ensure that a valid license is available before upgrading. After migration, if the license status is invalid, then contact Protegrity Support.

1.3 - Partitioning of Disk on ESA

BIOS is amongst the oldest systems used as a boot loader to perform the initialization of the hardware. UEFI is a comparatively newer system that defines a software interface between the operating system and the platform firmware. The UEFI is more advanced than the BIOS and most of the systems are built with support for UEFI and BIOS.

Disk Partitioning is a method of dividing the hard drive into logical partitions. When a new hard drive is installed on a system, the disk is segregated into partitions. These partitions are utilized to store data, which the operating system reads in a logical format. The information about these partitions is stored in the partition table.

There are two types of partition tables, the Master Boot Record (MBR) and the GUID Partition Table (GPT). These form a special boot section in the drive that provides information about the various disk partitions. They help in reading the partition in a logical manner.

Depending on the requirements, you can extend the size of the partitions in a physical volume to accommodate all the logs and other ESA related data. You can utilize the Logical Volume Manager (LVM) to increase the partitions in the physical volume. Using LVM, you can manage hard disk storage to allocate, mirror, or resize volumes.

In ESA, the physical volume is divided into the following three logical volume groups:

| Partition | Description |

|---|---|

| Boot | Contains the boot information. |

| PTYVG | Contains the files and information about OS and logs. |

| Data Volume Group | Contains the data that is in the /opt directory. |

1.4 - Installing the ESA

The ESA appliance can be installed on any of the following platforms.

- On-premise (ISO)

- Cloud platforms

- Amazon Web Services (AWS)

- Microsoft Azure

- Google Cloud Platform (GCP)

- VMWare (OVA)

1.4.1 - Installing the ESA On-Premise

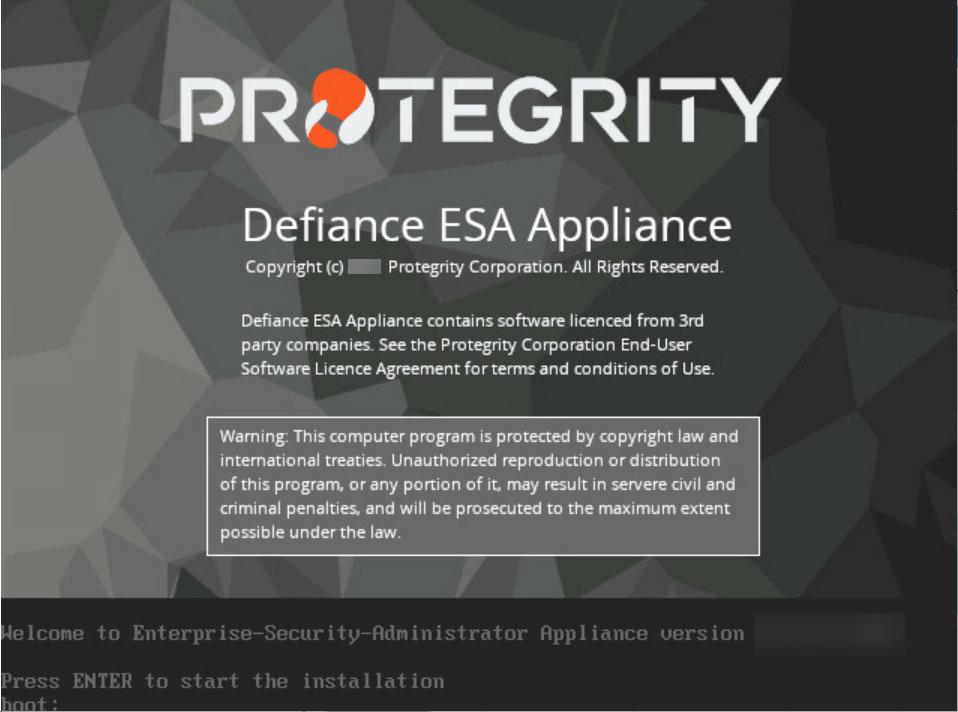

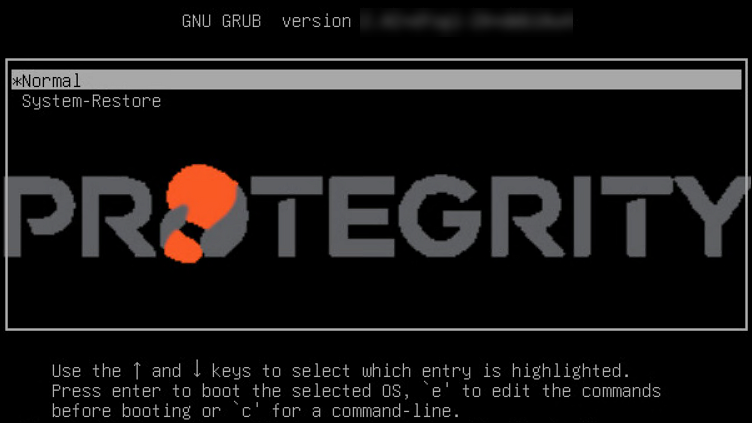

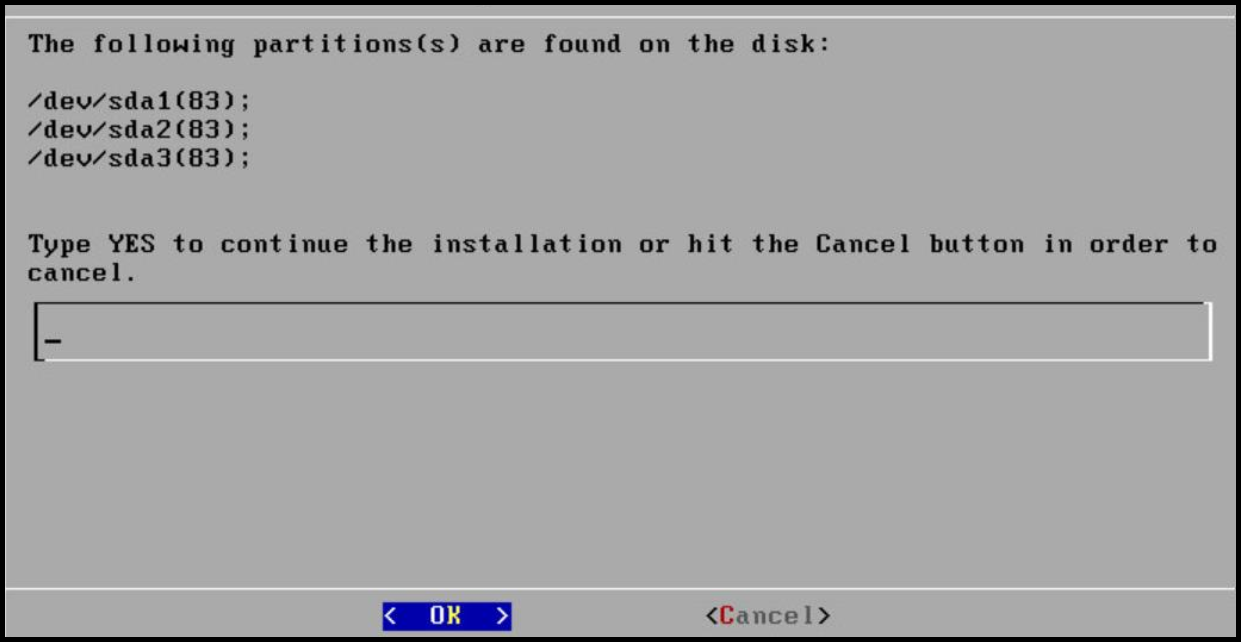

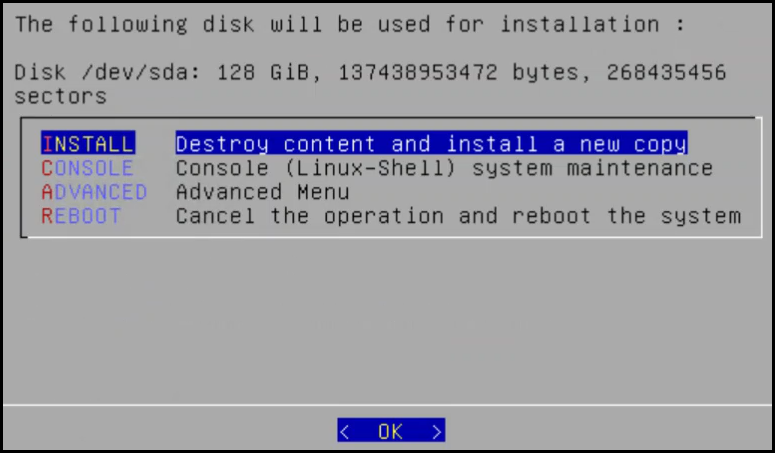

1. Starting the installation

To install the ESA:

Insert the ESA installation media in the system disk drive.

Boot the system from the disk drive.

The following screen appears.

Press ENTER to start the installation.

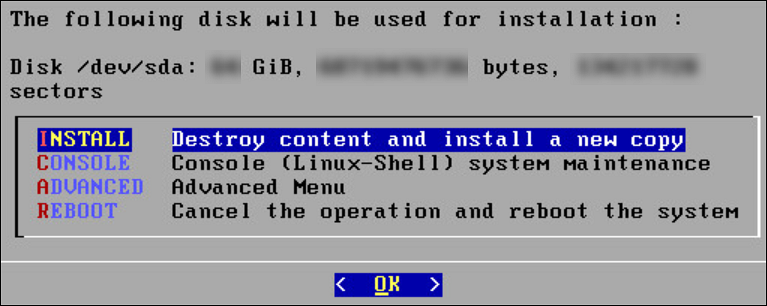

The following screen appears.

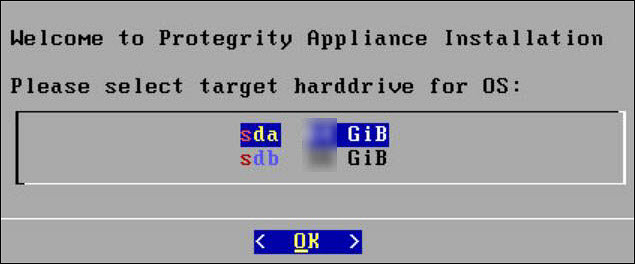

The system will detect the number of hard drives that are present. If there are multiple hard drives, then it will allow you to choose the hard drive where you want to install the OS partition and the /opt partition.

If there are multiple hard drives, then the following screen appears.

For storing the operating system-related data, select the hard drive where you want to install the OS partition and select OK.

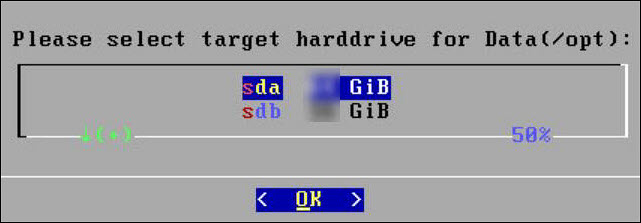

The following screen appears.

For storing the logs, configuration data, and so on select the hard drive where you want to install the /opt partition and select OK.

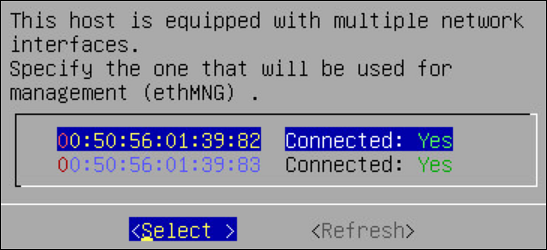

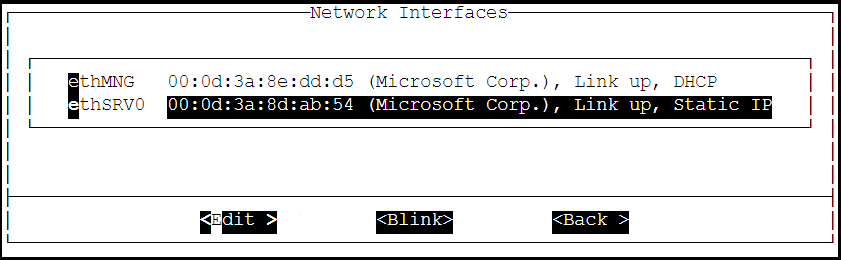

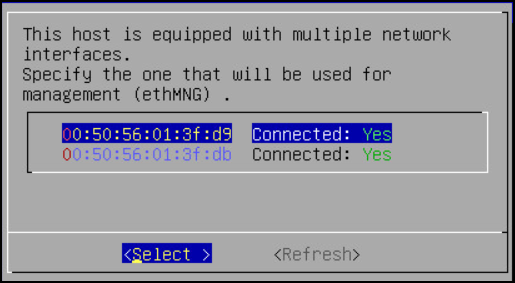

2. Selecting Network Interface Cards (NICs)

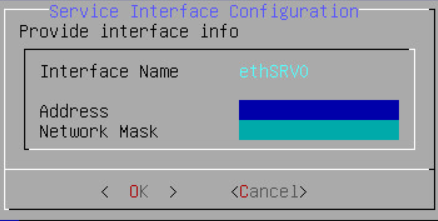

The Network Interface Card (NIC) is a device through which appliances, such as, the ESA or the DSG, connect to each other on a network. You can configure multiple network interface cards (NICs) on the appliance.

The ethMNG interface is generally used for managing the appliance and ethSRV interface is used for binding the appliances for using other services.

For example, the appliance can use the ethMNG interface for the ESA Web UI and the ethSRV interface for enabling communication with different applications in an enterprise.

The following task describes how to select management interfaces.

To select multiple NICs:

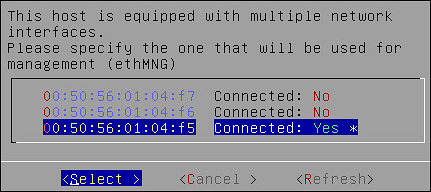

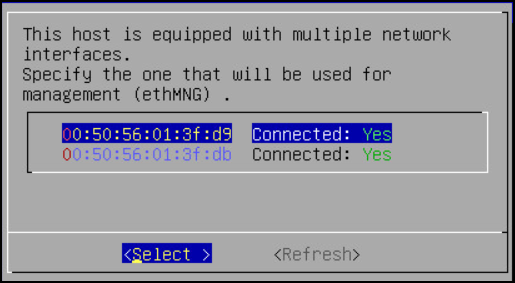

If there are multiple NICs, then the following screen appears.

Select the required NIC for management interface.

Choose Select and press ENTER.

3. Configuring Network Settings

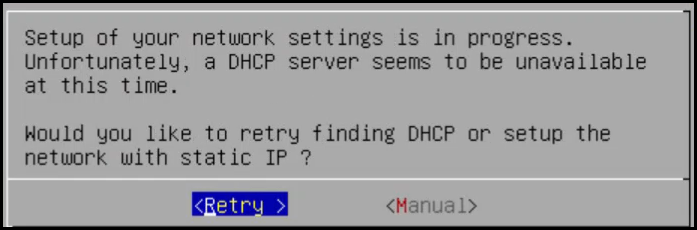

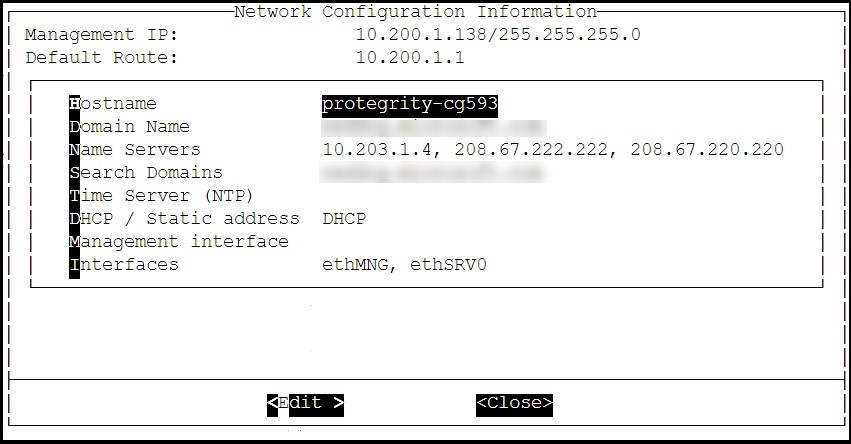

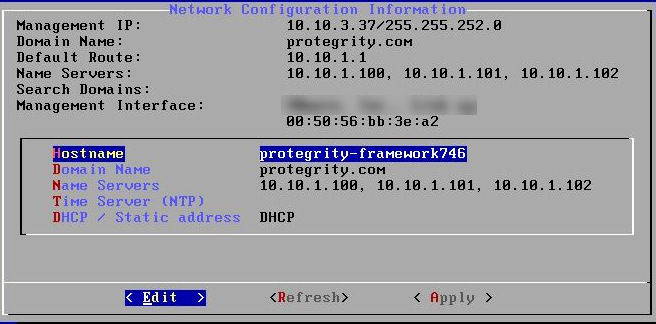

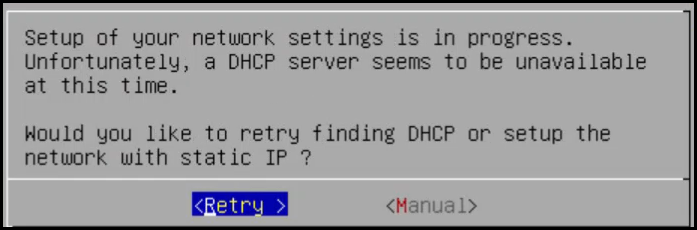

After selecting the NIC for management, you configure the network for the ESA. During the network configuration, the system tries to connect to a DHCP server to obtain the hostname, default gateway, and IP addresses for the ESA. If the DHCP is not available, then you can configure the network information manually.

To configure the network settings:

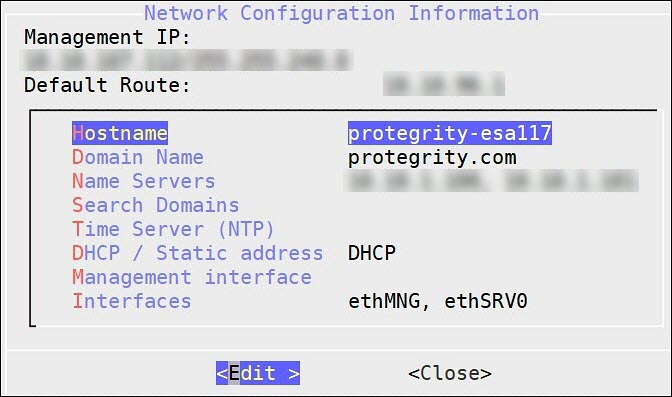

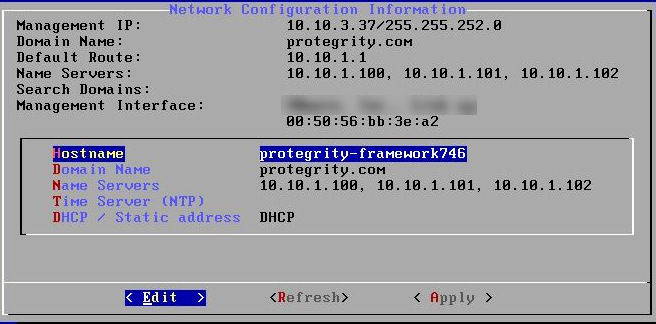

If the DHCP server is configured, then the following screen containing the network information appears.

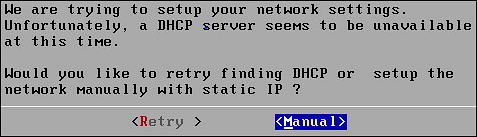

If the DHCP server is not available, then the following screen appears.

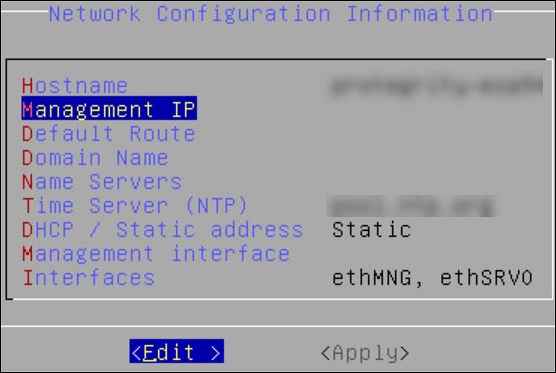

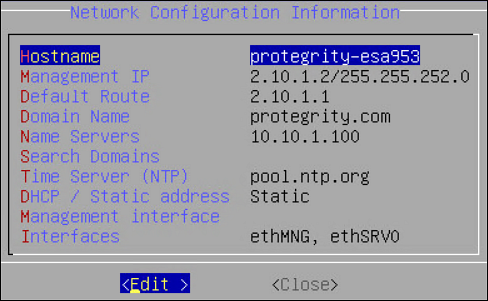

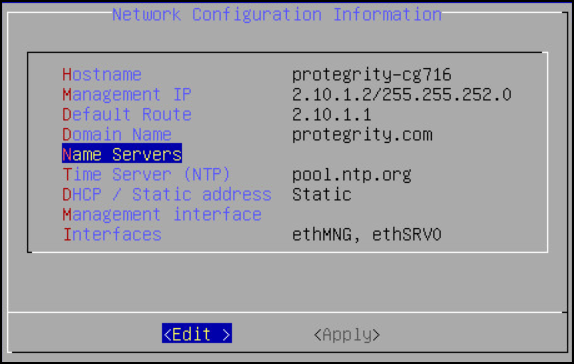

The Network Configuration Information screen appears.

Select Manual and press ENTER.

The following screen appears.

Select DHCP / Static address to configure the DHCP / Static address for the ESA and choose Edit.

Select Static address and choose Update.

If you want to change the hostname of the ESA, then perform the following steps.

- Select Hostname and select Edit.

- Change the Hostname and select OK.

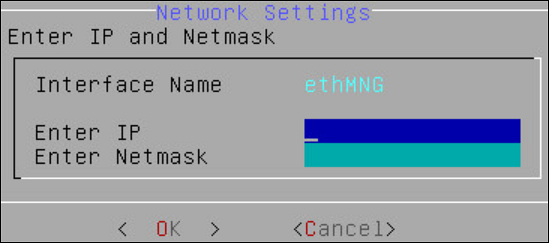

Select Management IP to configure the management IP address for the ESA and choose Edit.

- Add the IP address assigned to the ethMNG interface. This IP address configures the ESA to use the Web UI.

- Enter the Netmask. The ethMNG interface must be connected to the LAN with this Netmask value.

- Select OK.

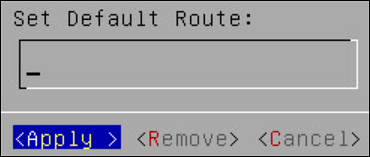

Select Default Route to configure the default route for the ESA and press Edit.

- Enter the IP address for the default network traffic.

- Select Apply.

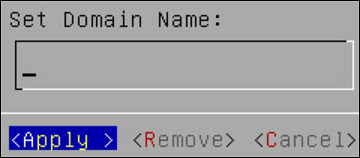

Select Domain Name and press Edit.

- Enter the Domain Name. For example, protegrity.com.

- Press Apply.

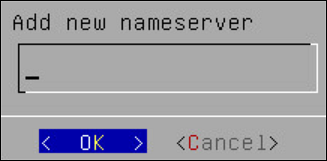

Select Name Servers and press Edit.

- Add the IP address of the name server.

- Press OK.

If you want to configure the NTP, then perform the following steps.

- Select Time Server (NTP), and press Edit.

- Add NTP time server on a TCP/IP network.

- Select Apply.

Select Apply.

The network settings are configured.

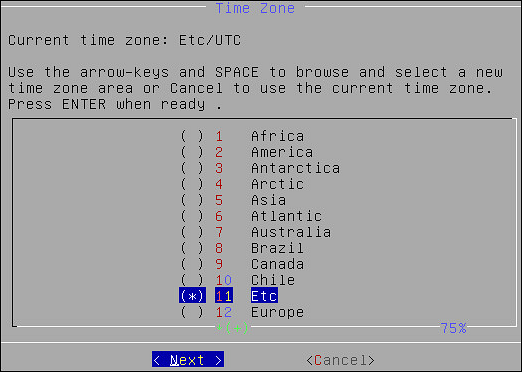

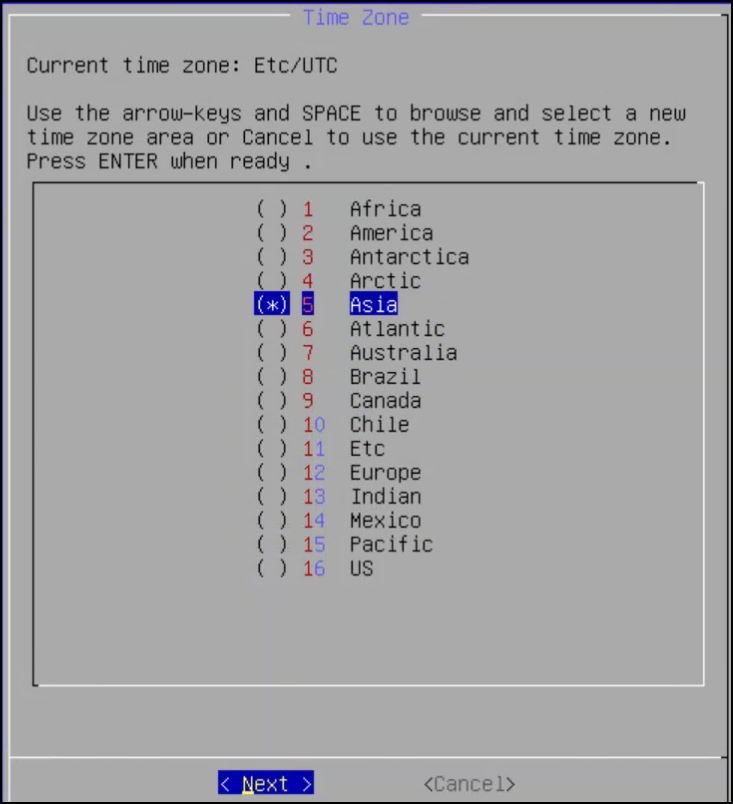

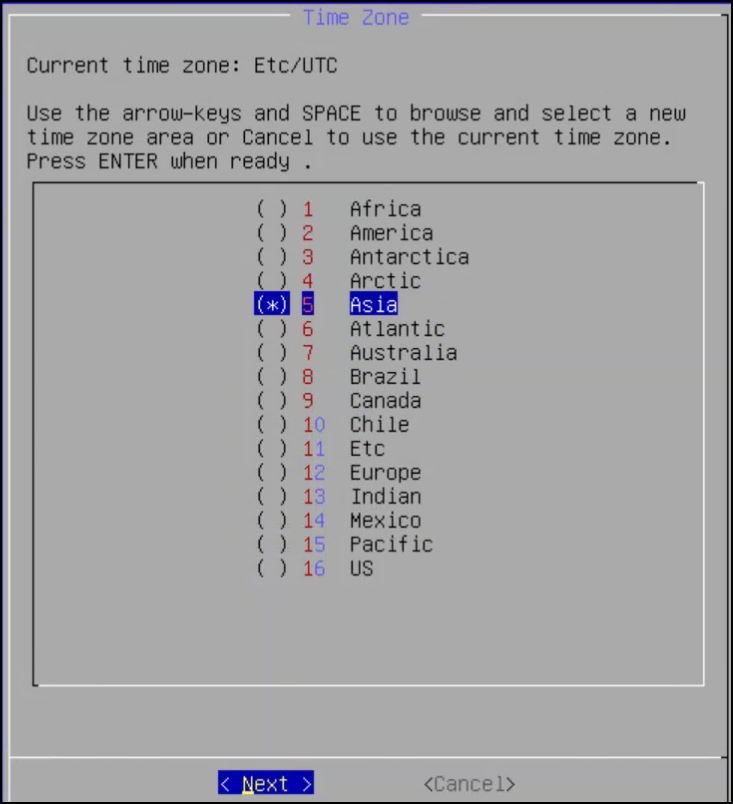

4. Configuring Time Zone

After you configure the network settings, the Time Zone screen appears. This section explains how to set the time zone.

To set the Time Zone:

On the Time Zone screen, select the time zone.

Press Next.

The time zone is configured.

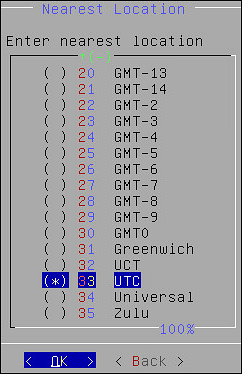

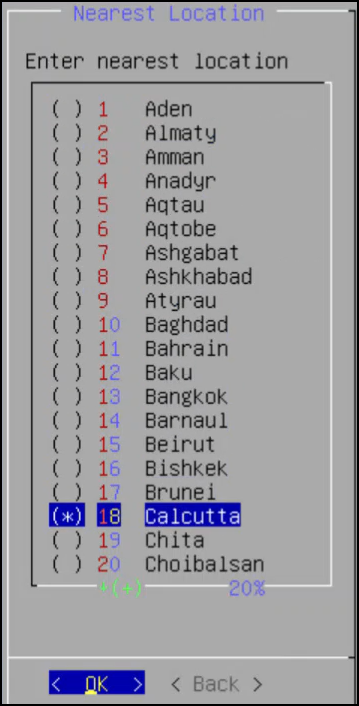

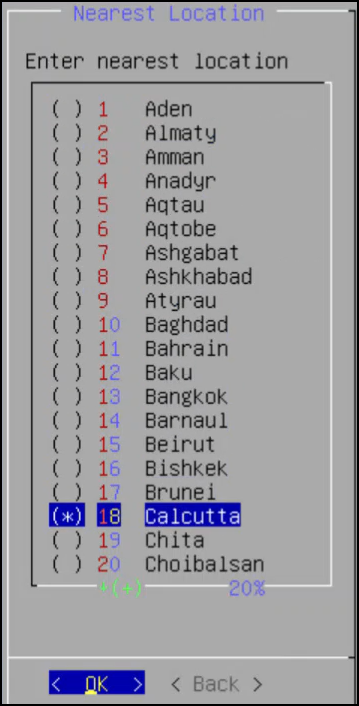

5. Configuring the Nearest Location

After configuring the time zone, the Nearest Location screen appears.

To Set the Nearest Location:

On the Nearest Location screen, enter the nearest location in GMT or UTC.

Press OK.

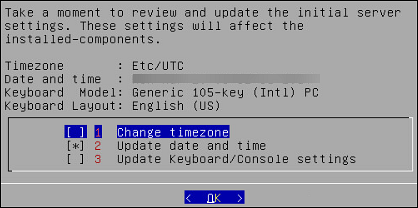

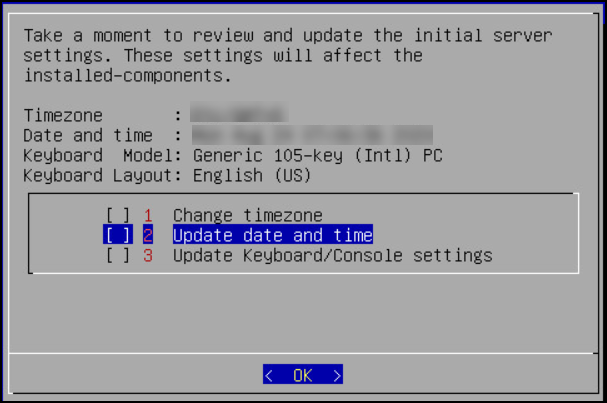

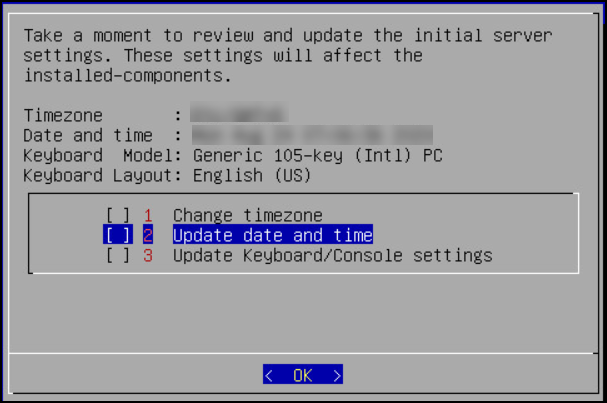

The following screen appears.

This screen also allows you to update the default settings of date and time, keyboard manufacturer, keyboard model, and keyboard layout.

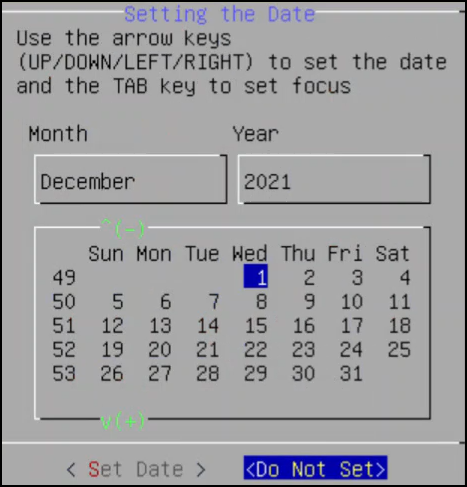

6. Updating the Date and Time

To Update the Date and Time:

Press SPACE and select Update date and time.

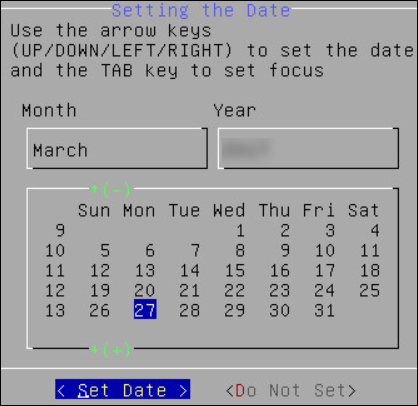

Press ENTER.

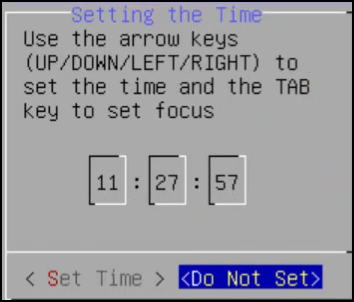

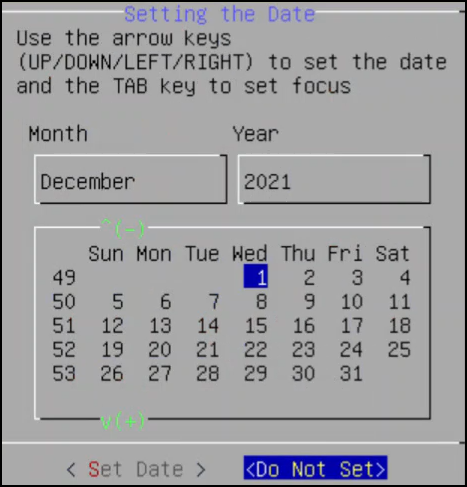

The following screen appears.

Select the date.

Select Set Date and press ENTER.

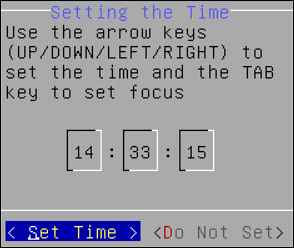

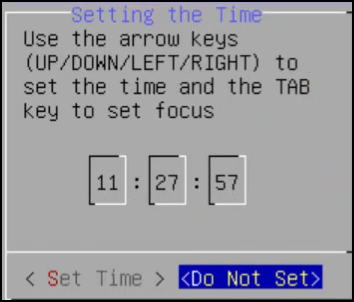

The next screen appears.

Set the time.

Click Set Time and press ENTER.

The date and time settings are configured.

7. Updating the Keyboard Settings

To Update the Keyboard Settings:

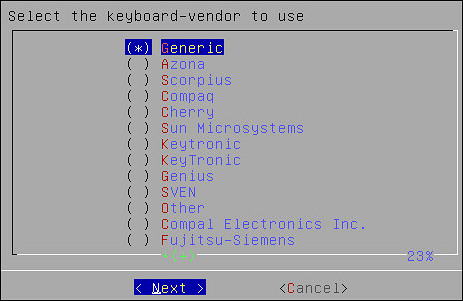

Select Update Keyboard or Console settings.

Press ENTER.

Select the vendor and press the SPACEBAR.

Select Next.

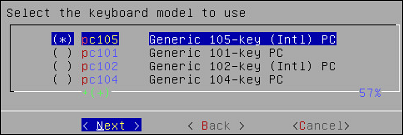

If you select Generic, then a window with the list of generic keyboard models appears.

Select the model you use and press Next.

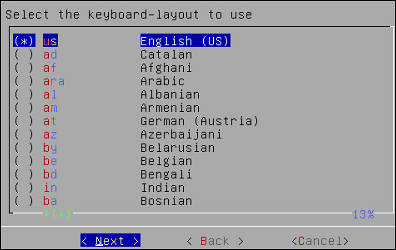

On the next window, select the keyboard language. The default is English (US).

Select Next.

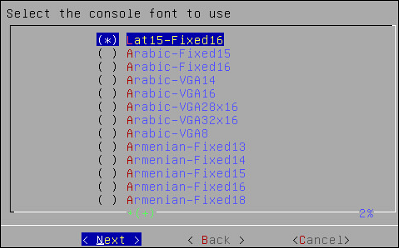

On the next window, select the console font. The default is Lat15-Fixed16.

Press Next.

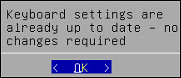

A confirmation message appears.

Press OK to confirm.

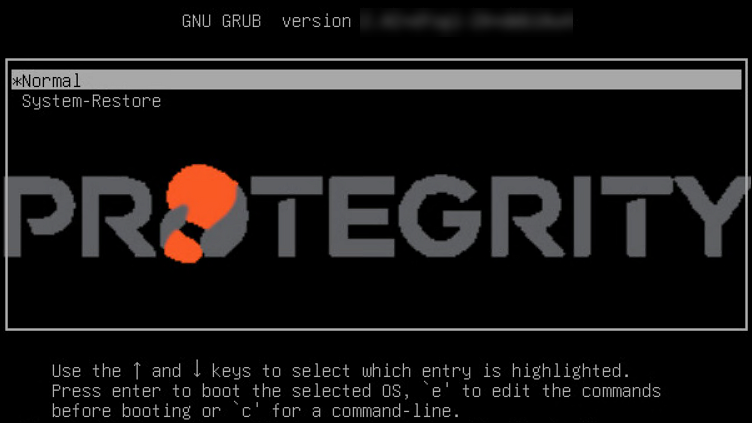

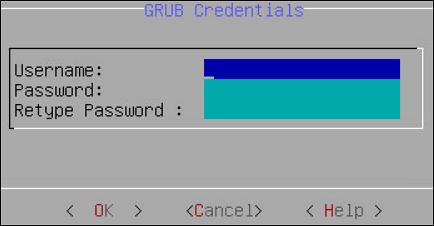

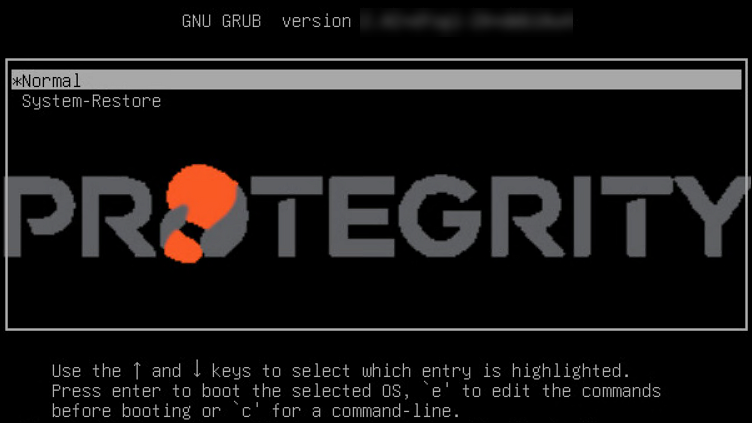

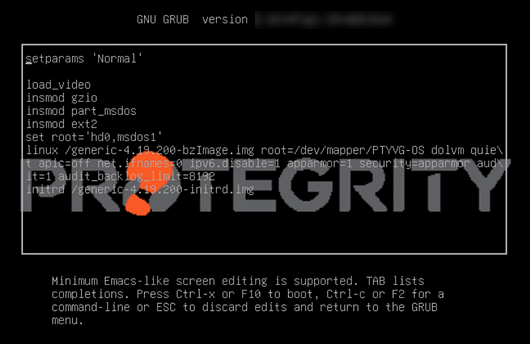

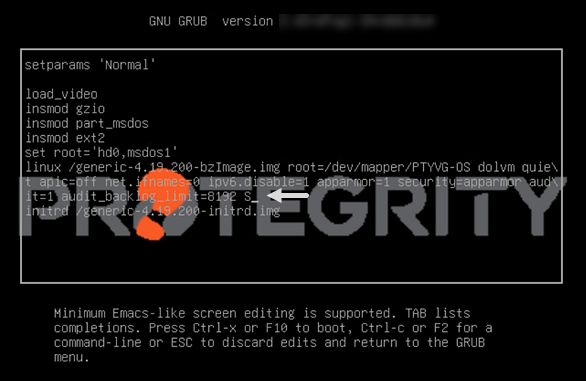

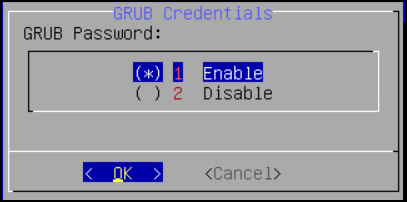

8. Configuring GRUB Settings

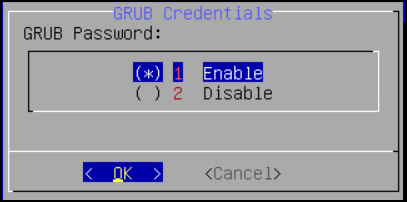

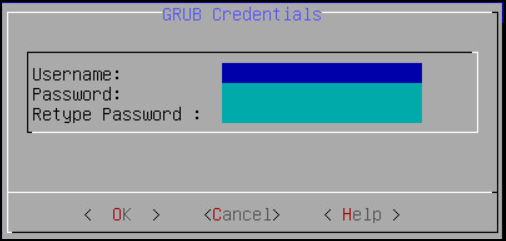

On the ESA, GRUB version 2 (GRUB2) is used for loading the kernel. If you want to protect the boot configurations, then you can secure it by enforcing a username and password combination for the GRUB menu.

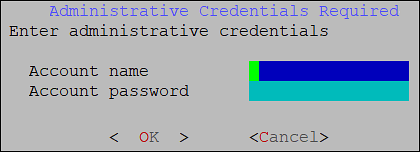

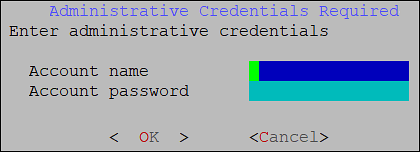

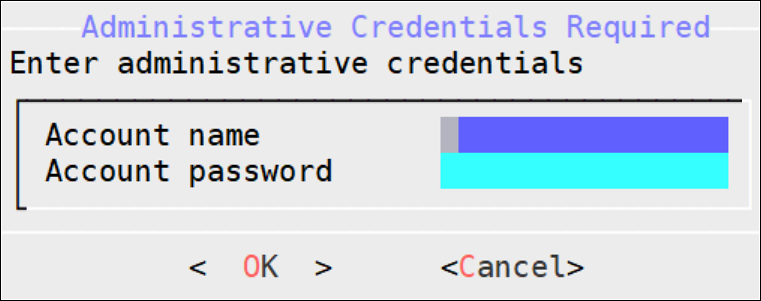

During installation for the ESA on-premise, a screen to configure GRUB credentials appears. If you want to protect the boot configurations, then you can secure it by enforcing a username and password combination for the GRUB menu. While installing the ESA v9.2.0.0, you can secure the GRUB menu by creating a username and setting password as described in the following task.

To configure GRUB settings:

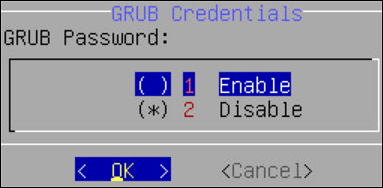

From the GRUB Credentials page, press the SPACEBAR to select Enable.

By default the Disable is selected. If you continue to choose Disable, then the security for the GRUB menu is disabled. It is recommended to enable GRUB to secure the ESA.

You can enable this feature from the CLI Manager after the installation is completed. On the CLI Manager, navigate to Administration > GRUB Credential Settings to enable the GRUB settings.

For more information about GRUB, refer to the section Securing the GRand Unified Bootloader (GRUB).

Select OK.

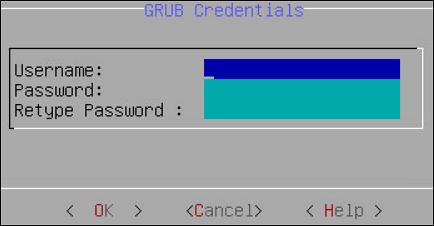

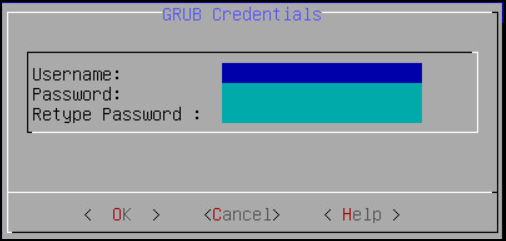

The following screen appears.

Enter a username in the Username text box.

Note:

The requirements for the Username are as follows:

- It should contain a minimum of three and maximum of 16 characters

- It should not contain numbers and special characters

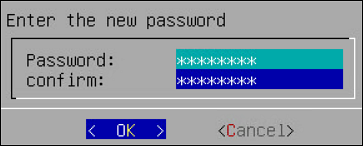

Enter a password in the Password and Re-type Password text boxes.

Note:

The requirements for the Password are as follows:

- It must contain at least eight characters

- It must contain a combination of alphabets, numbers, and printable characters

Select OK and press ENTER.

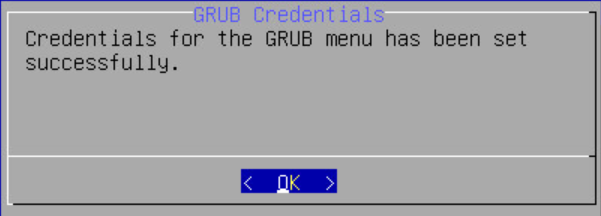

A message

Credentials for the GRUB menu has been set successfullyappears.

Select OK.

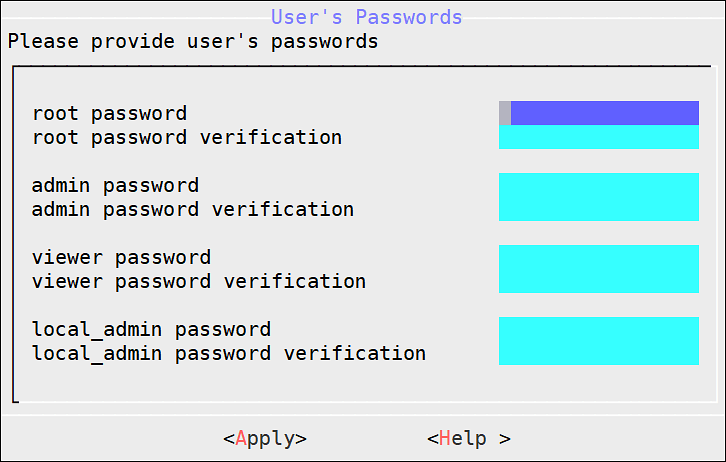

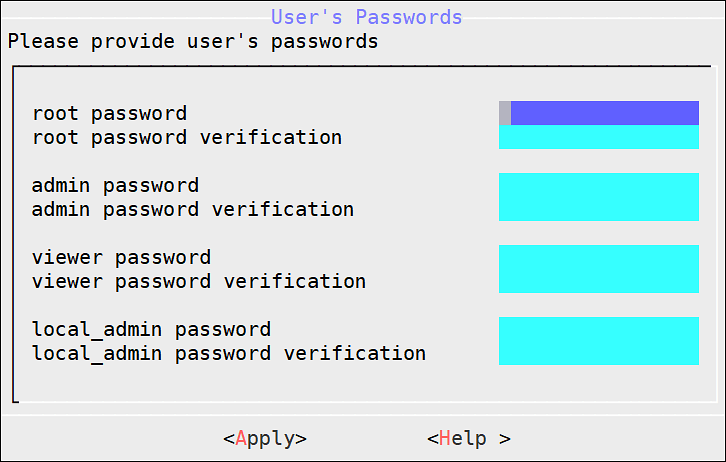

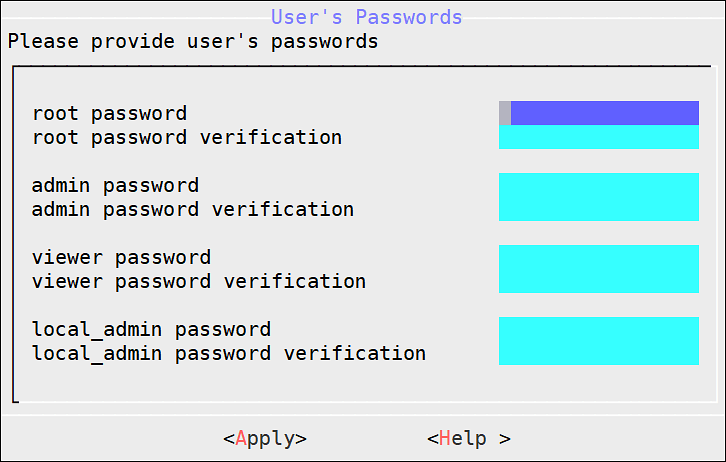

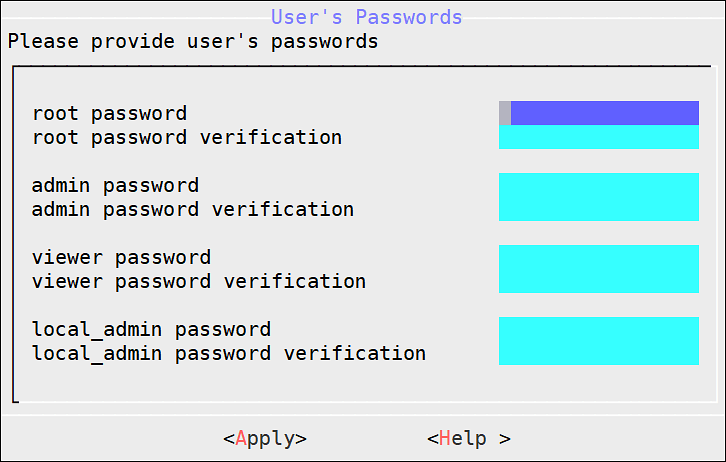

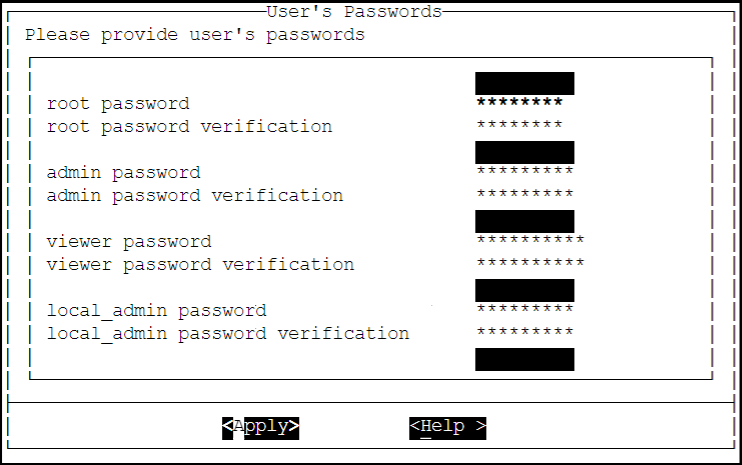

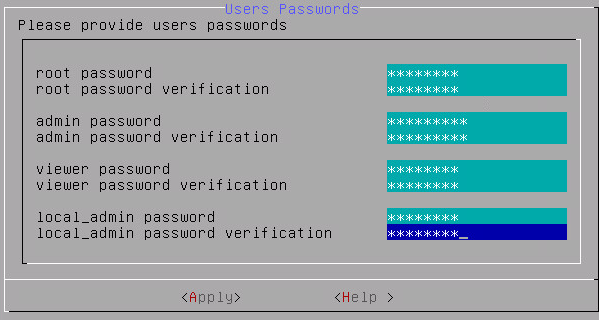

9. Setting up Users and Passwords

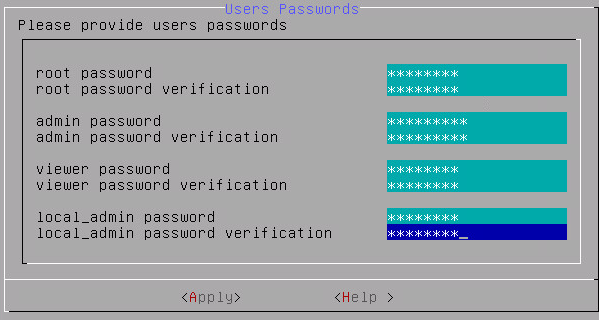

Only authorized users can access the ESA. The Protegrity Data Security Platform defines a list of roles for each user who can access the ESA. These are system users and LDAP administrative users who have specific roles and permissions. When you install the ESA, the default users configured are as follows:

- root: Super user with access to all commands and files.

- admin: User with administrative privileges to perform all operations.

- viewer: User who can view, but does not have edit permissions.

- local_admin: Local administrator that can be used when the admin user is not accessible.

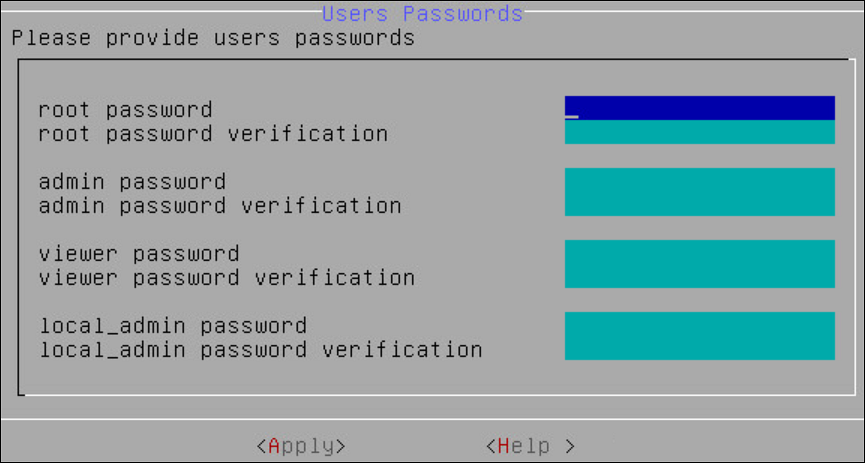

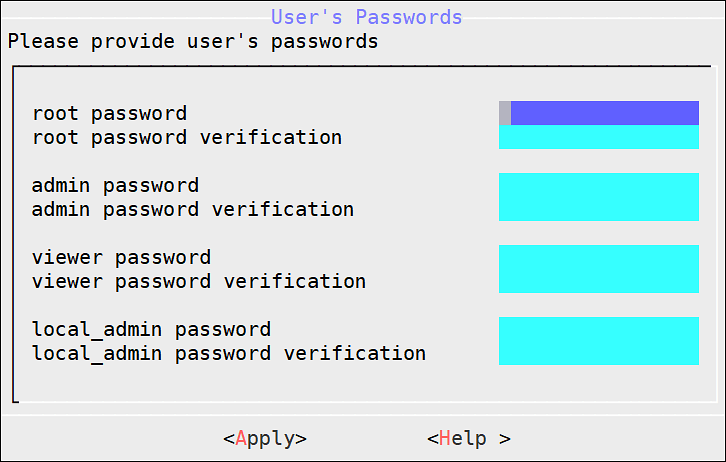

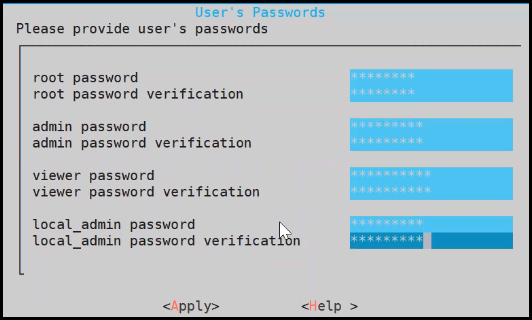

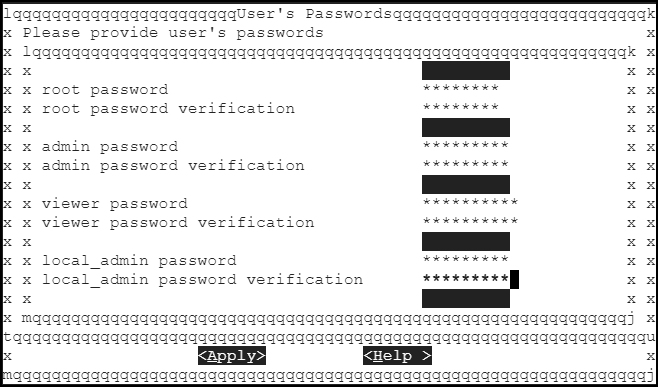

After completing the server settings, the Users Passwords screen appears that allows you set the passwords for the users.

To set the LDAP Users Passwords:

Add the passwords of the users.

Note: Ensure that the passwords for the users comply with the password polices.

For more information about the password policies, refer to the section Password Policy Configuration in the Protegrity Enterprise Security Administrator Guide 9.2.0.0.

Select Apply.

The user passwords are set.

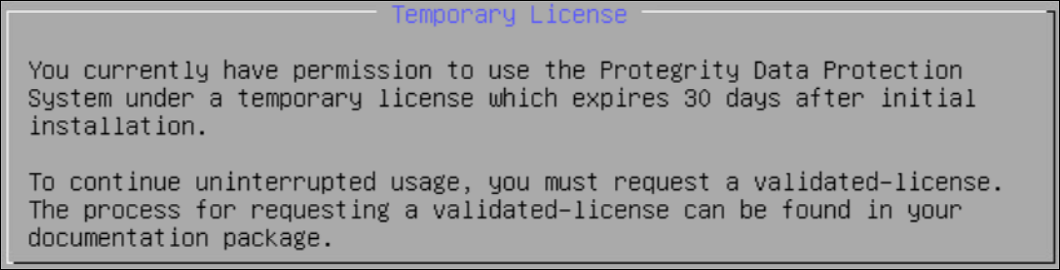

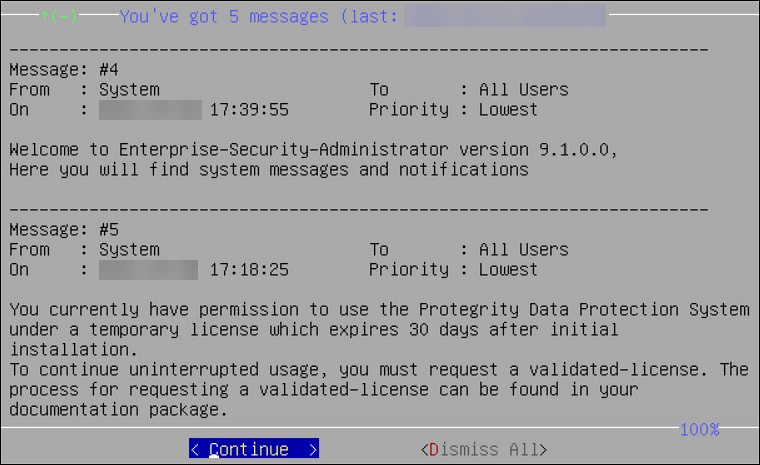

10. Licensing

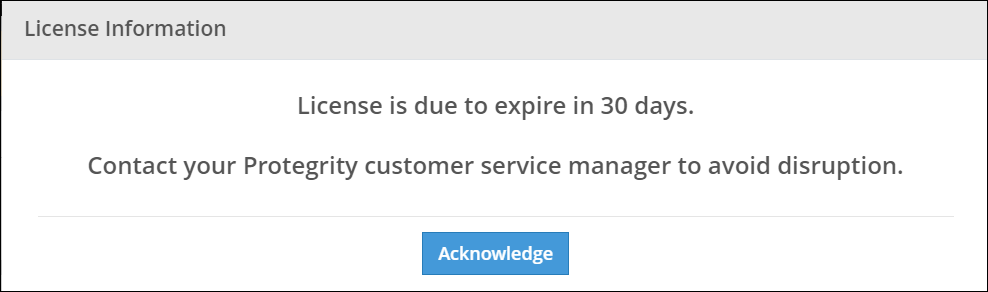

After the ESA components are installed, the Temporary License screen appears. This system takes time. It is recommended to wait for few minutes before proceeding.

Note: After the ESA is installed, you must apply for a valid license within 30 days.

For more information about licenses, refer Licensing.

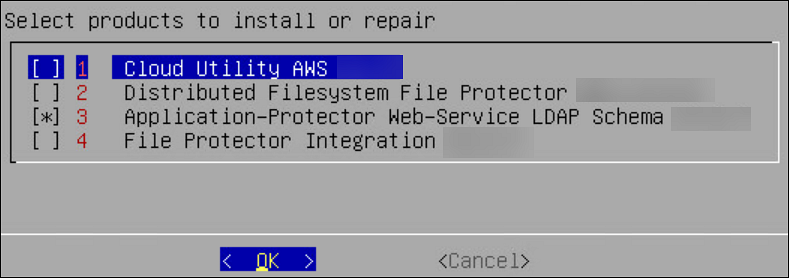

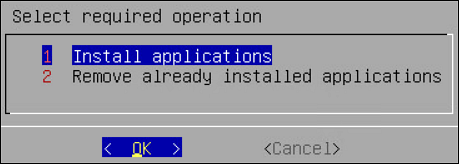

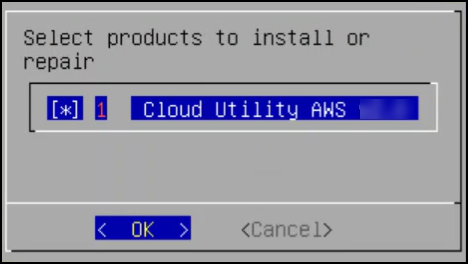

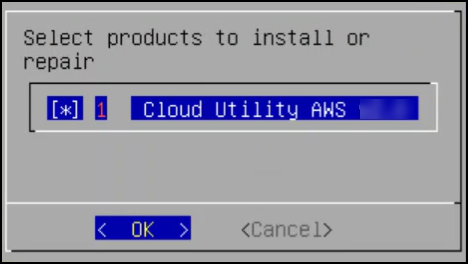

11. Installing Products

In the final steps of installing the ESA, you are prompted to select the components to install.

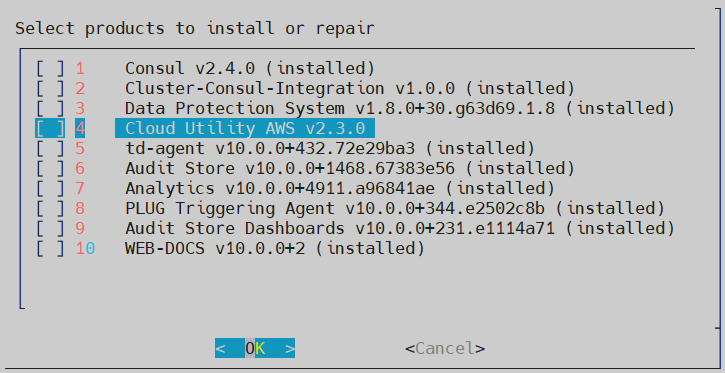

To select products to install:

Press space and select the necessary products to install the following products.

Click OK.

The selected products are installed.

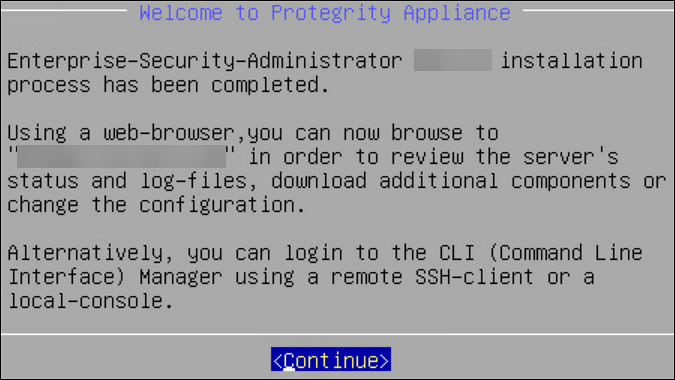

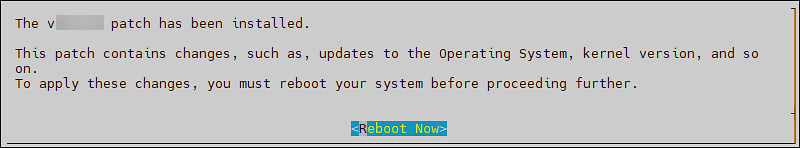

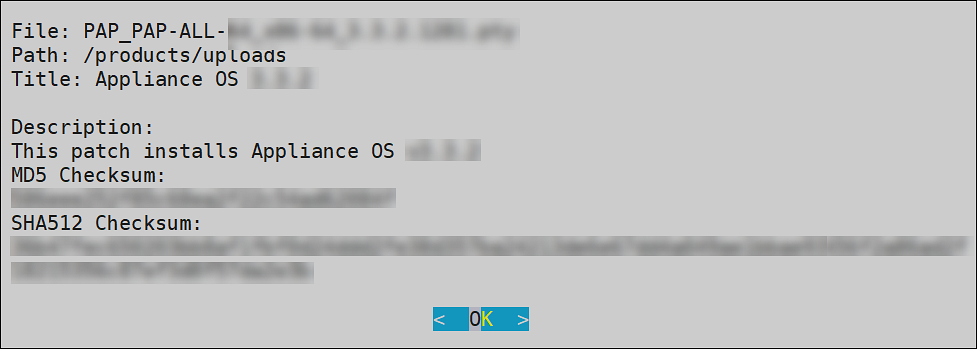

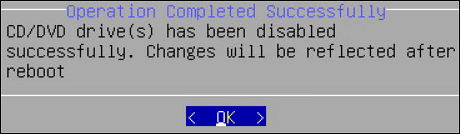

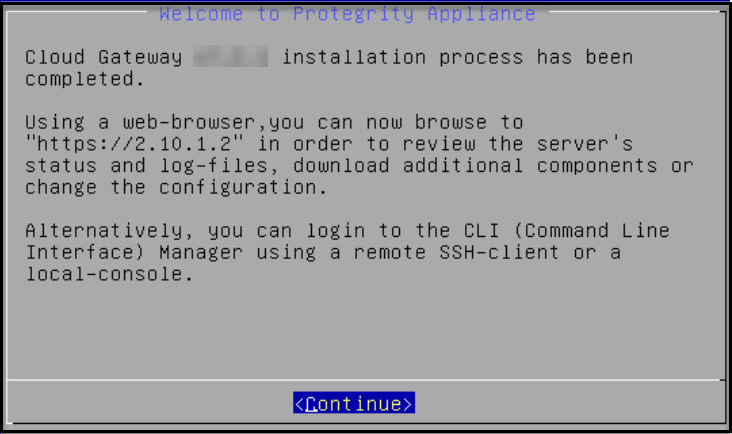

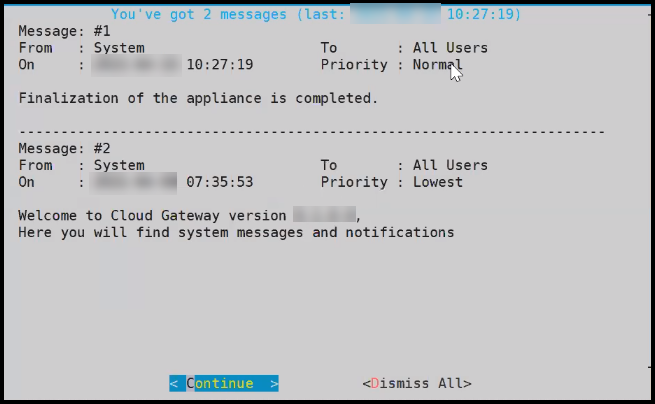

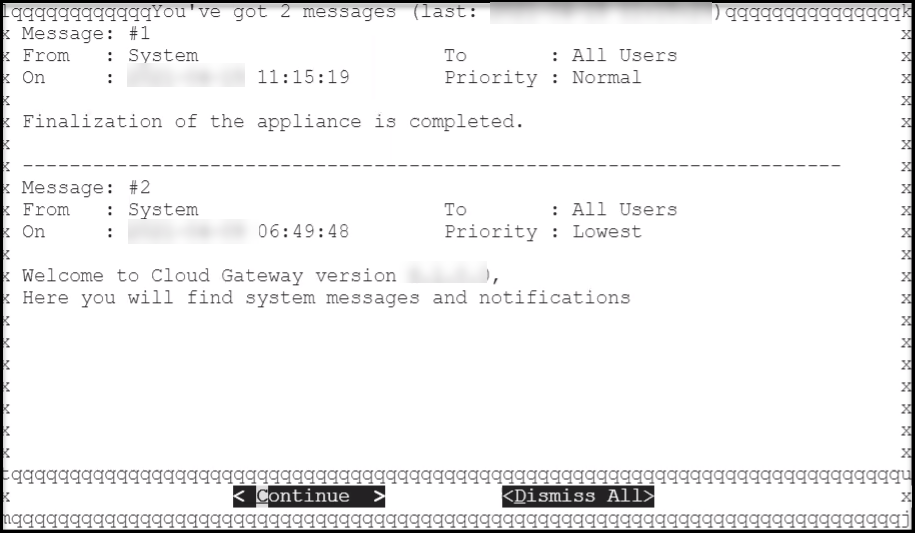

After installation is completed, the following screen appears.

Select Continue to view the CLI Login screen.

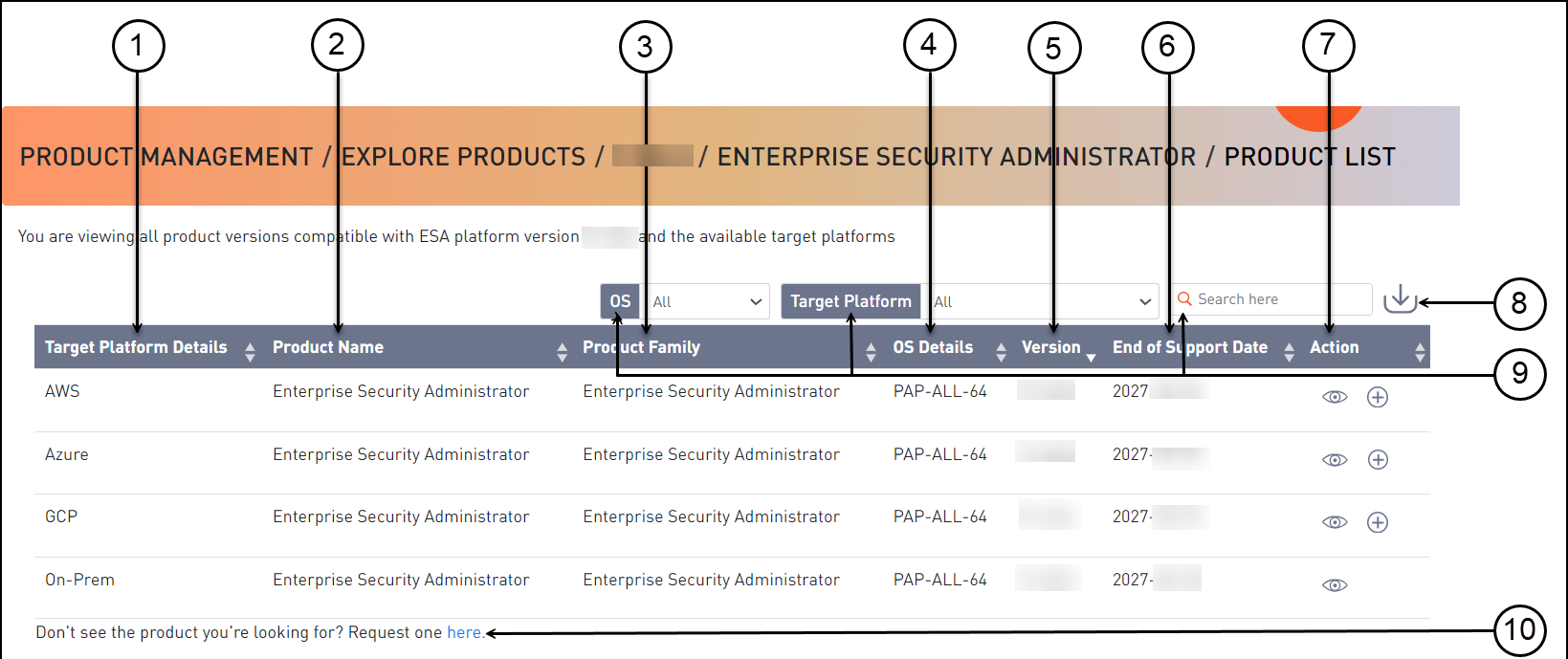

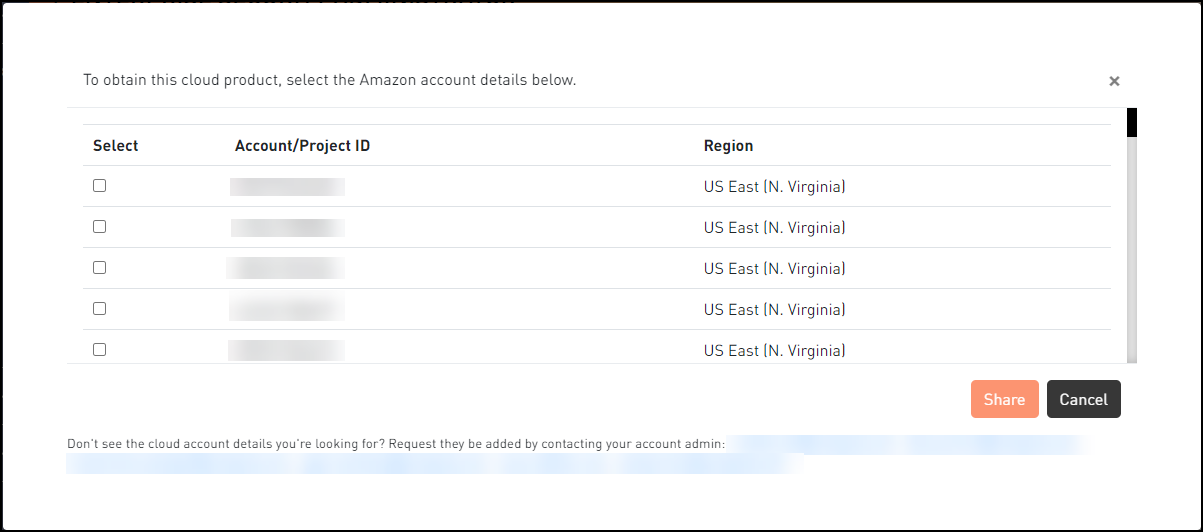

1.4.2 - Installing ESA on Cloud Platforms

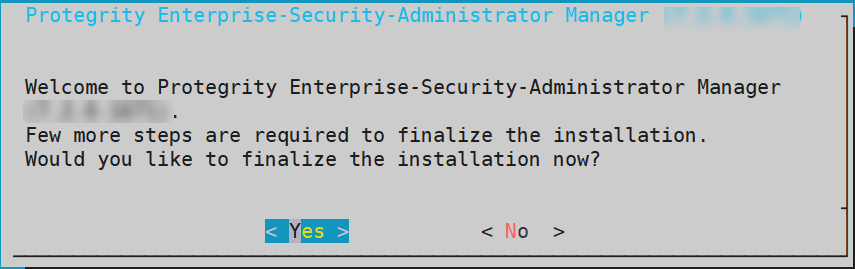

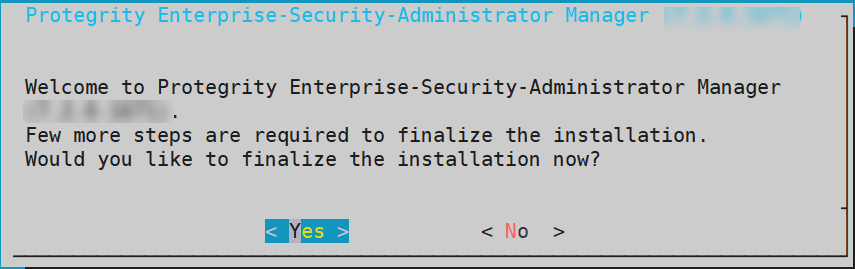

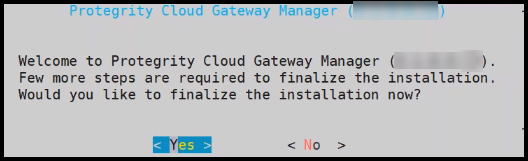

This section describes installing the ESA on Cloud platforms, such as, Amazon Web Services (AWS), Azure, or Google Cloud Platform (GCP). For installing the ESA on cloud platforms, you must mount the image containing the ESA on a cloud instance or a virtual machine. After mounting the image, you must run the finalization procedure to install the ESA components.

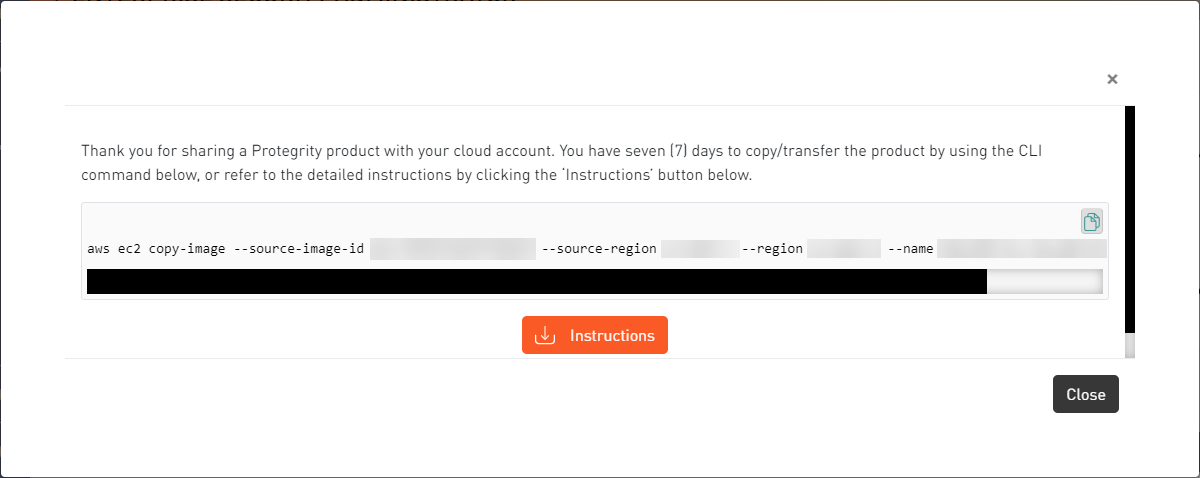

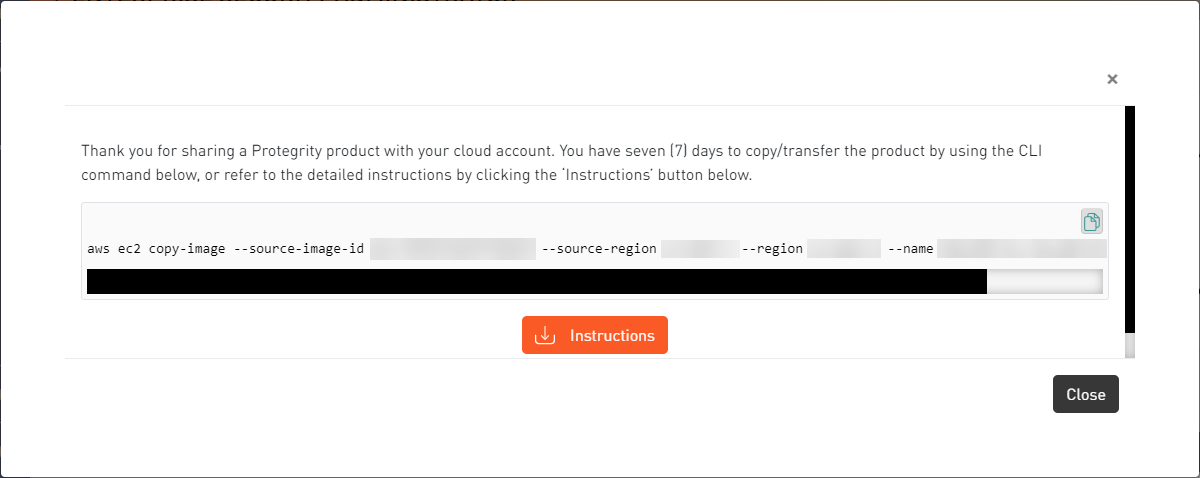

Installing ESA on AWS

The following steps must be completed to run an ESA on AWS:

- Verifying the prerequisites.

- Obtaining the AMI.

- Creating an instance of the ESA from the AMI.

- Configuring the various inbound and outbound ports in the VPC.

- Logging to the AWS instance using the SSH Client.

- Finalizing the AWS instance.

- Logging into ESA.

Installing ESA on Azure

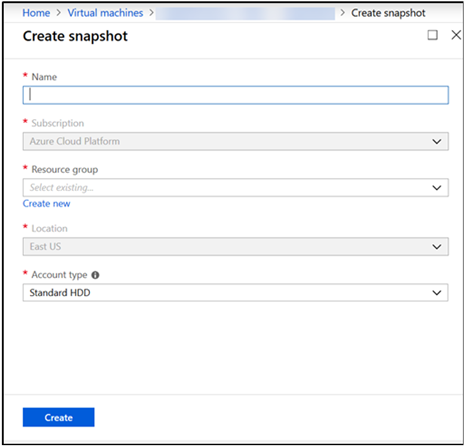

The following steps must be completed to run an ESA on Azure:

- Verifying the prerequisites.

- Creating a Resource Group.

- Creating a Storage Account.

- Creating a Container.

- Obtaining the Azure BLOB.

- Create an image from the BLOB.

- Create a VM from the image.

- Accessing the ESA.

- Finalizing the installation of ESA on the instance.

- Logging into ESA.

Installing ESA on GCP

The following steps must be completed to run an ESA on GCP:

1.4.3 - Installing ESA on VMware

This section describes the process to install the ESA using an OVA template.

For more information about the compatible VMware version, refer to the Release Notes of the relevant release.

1.4.3.1 - Creating an OVA Template

Perform the steps to create an Open Virtual Appliance (OVA) template:

Log in to the VMware Client console.

Navigate to Inventories > VMs and Templates.

From the left navigation pane, select the required project.

Right-click the project name and select Deploy OVF Template….The Deploy OVF Template screen appears.

From Select an OVF template, select the preferred method to upload the .ova file.The .ova file can be accessed using the URL or by uploading a local file.

Click Next.

From Select a name and folder, enter the name of the virtual machine in the Virtual machine name field and select the location for virtual machine. Click Next.

From Select a destination compute resource, select the required compute resource. Click Next.

From Review details, verify the publisher, download size, and size on disk. Click Next.

From Select storage, select the required disk formats, VM Storage Policy, Show datastores from Storage DRS clusters, and datastore to store the deployed OVF or OVA template.

Click Next.

From Select network, select the required network. Click Next.

From Ready to complete, verify the details and click Finish.

This may take sometime to successfully complete the creation of virtual machine. Ensure to proceed only once the virtual machine is created successfully.

After the instance is successfully created, from the left navigation pane, select the virtual machine name.

Right-click the virtual machine name and select Convert to Template.A Confirm Convert dialog box appears.

Click Yes.

The OVA template is successfully created.

1.4.3.2 - Creating a Virtual Machine using OVA template

Perform the steps to create a virtual machine using the OVA template:

Navigate to Inventories > VMs and Templates.

From the left navigation pane, select the required project.

Select the required OVA template.

Right-click the template name, and select New VM from This Template.

From Select a name and folder, enter the name of the virtual machine in the Virtual machine name field and select the location for virtual machine. Click Next.

From Select a destination compute resource, select the required compute resource. Click Next.

From Select storage, select the required storage.Select the required disk formats, VM Storage Policy, Show datastores from Storage DRS clusters, and datastore to store the deployed OVF or OVA template.

Click Next.

From Select clone options, select the required clone options.

If the Customize the operating option is selected, then the Customize guest OS screen appears.Configure the required OS for the virtual machine. Click Next.

If the Customize this virtual machine’s hardware option is selected, then the Customize hardware screen appears.Configure the required hardware for the virtual machine. Click Next.

From Ready to complete, verify the details and click Finish.

The virtual machine is created successfully.

1.4.3.3 - Installing the ESA on the Virtual Machine

1. Starting the installation

Ensure that the virtual machine is powered on before starting the installation process.

To install the ESA:

Select the virtual machine.

Click LAUNCH WEB CONSOLE.

2. Configuring Network Settings

After selecting the NIC for management, configure the network for the ESA. During the network configuration, the system tries to connect to a DHCP server to obtain the hostname, default gateway, and IP addresses for the ESA. If the DHCP is not available, then you can configure the network information manually.

To configure the network settings:

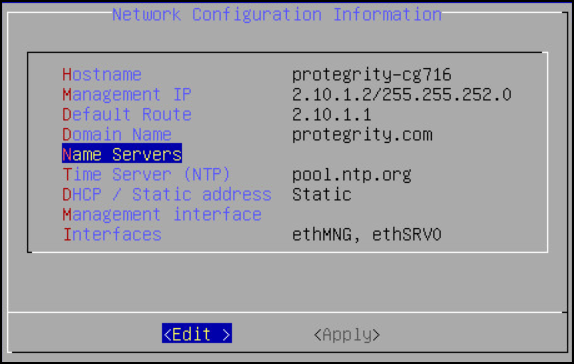

If the DHCP server is configured, then the screen containing the network information appears.

If the DHCP server is not available, then the Network Configuration Information screen appears.

Select Manual and press ENTER.

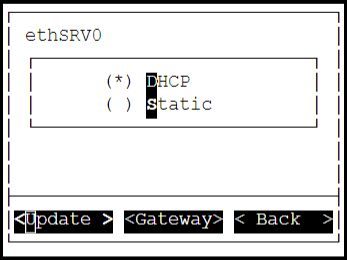

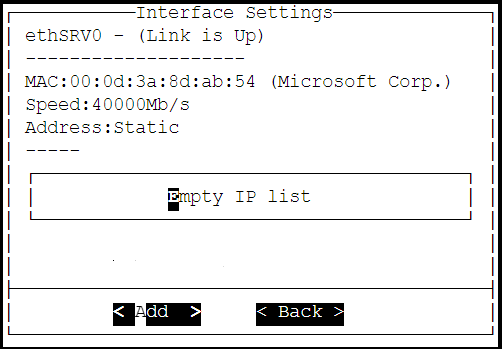

Select DHCP / Static address to configure the DHCP / Static address for the ESA and choose Edit.

Select Static address and choose Update.

If you want to change the hostname of the ESA, then perform the following steps.

- Select Hostname and select Edit.

- Change the hostname and select OK.

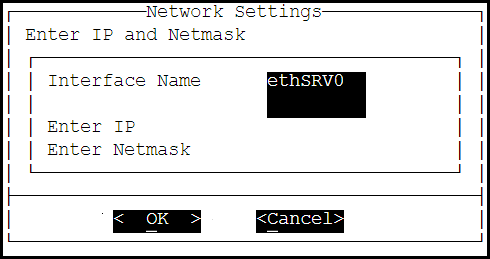

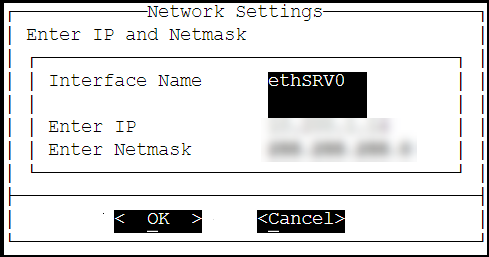

Select Management IP to configure the management IP address for the ESA and select Edit.

- Add the IP address assigned to the ethMNG interface. This IP address configures the ESA to use the Web UI.

- Enter the Netmask. The ethMNG interface must be connected to the LAN with this Netmask value.

- Select OK.

Select Default Route to configure the default route for the ESA and select Edit.

- Enter the IP address for the default network traffic.

- Select Apply.

Select Domain Name and select Edit.

- Enter the domain name. For example, protegrity.com.

- Select Apply.

Select Name Servers and select Edit.

- Add the IP address of the name server.

- Select OK.

To configure the NTP, then perform the following steps.

- Select Time Server (NTP), and press Edit.

- Add NTP time server on a TCP/IP network.

- Select Apply.

Select Apply.

The network settings are configured.

3. Configuring Time Zone

After you configure the network settings, the Time Zone screen appears.

To set the Time Zone:

On the Time Zone screen, select the time zone.

Select Next.

The time zone is configured.

4. Configuring the Nearest Location

After configuring the time zone, the Nearest Location screen appears.

To Set the Nearest Location:

On the Nearest Location screen, select the nearest location.

Select OK.The Initial Server Settings screen appears.This screen also allows you to update the default settings of date and time, keyboard manufacturer, keyboard model, and keyboard layout.

Edit the required settings. Select OK.

5. Updating the Date and Time

To Update the Date and Time:

Press SPACE and select Update date and time.

Press ENTER.

Select the date.

Select Set Date and press ENTER.

Set the time.

Click Set Time and press ENTER.

The date and time settings are configured.

6. Configuring GRUB Settings

On the ESA, GRUB version 2 (GRUB2) is used for loading the kernel. If you want to protect the boot configurations, then you can secure it by enforcing a username and password combination for the GRUB menu.

During installation for the ESA on-premise, a screen to configure GRUB credentials appears. If you want to protect the boot configurations, then you can secure it by enforcing a username and password combination for the GRUB menu. While installing the ESA, the GRUB menu can be secured by creating a username and setting password as described in the following task.

To configure GRUB settings:

From the GRUB Credentials page, press the SPACEBAR to select Enable.

By default the Disable is selected. If you continue to choose Disable, then the security for the GRUB menu is disabled. It is recommended to enable GRUB to secure the ESA.

You can enable this feature from the CLI Manager after the installation is completed. On the CLI Manager, navigate to Administration > GRUB Credential Settings to enable the GRUB settings.

For more information about GRUB, refer to the section Securing the GRand Unified Bootloader (GRUB).

Select OK.

Enter a username in the Username text box.

The requirements for the Username are as follows:

- It should contain a minimum of three and maximum of 16 characters.

- It should not contain numbers and special characters

Enter a password in the Password and Re-type Password text boxes.

The requirements for the Password are as follows:

- It must contain at least eight characters.

- It must contain a combination of alphabets, numbers, and printable characters.

Select OK and press ENTER.

A message

Credentials for the GRUB menu has been set successfullyappears.Select OK.

7. Setting up Users and Passwords

Only authorized users can access the ESA. The Protegrity Data Security Platform defines a list of roles for each user who can access the ESA. These are system users and LDAP administrative users who have specific roles and permissions. When you install the ESA, the default users configured are as follows:

- root: Super user with access to all commands and files.

- admin: User with administrative privileges to perform all operations.

- viewer: User who can view, but does not have edit permissions.

- local_admin: Local administrator that can be used when the admin user is not accessible.

After completing the server settings, the Users Passwords screen appears that allows you set the passwords for the users.

To set the LDAP user passwords:

Add the passwords of the users.

Ensure that the passwords for the users comply with the password polices.

For more information about the password policies, refer Password Policy Configuration

Select Apply.

The user passwords are set.

8. Licensing

After the ESA components are installed, the Temporary License screen appears. This screen takes time. It is recommended to wait for few minutes before proceeding.

After the ESA is installed, you must apply for a valid license within 30 days.

For more information about licenses, refer Licensing.

9. Installing Products

In the final steps of installing the ESA, select the components to install.

To select products to install:

Press space to select and install the required products.

Click OK.

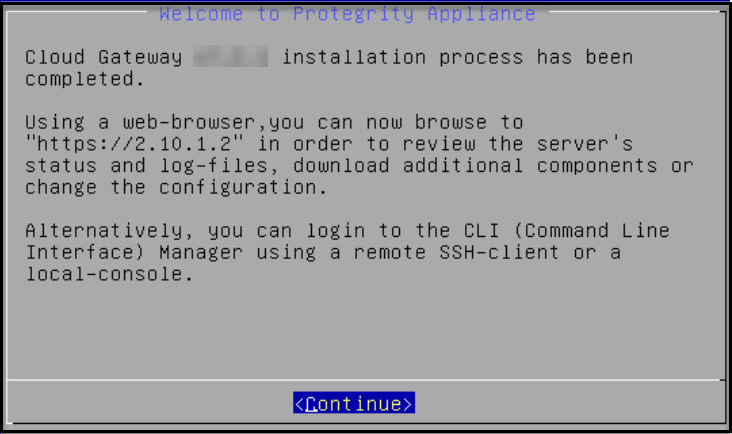

The selected products are installed.After installation is completed, the

Welcome to Protegrity Appliancescreen appears.Select Continue to view the CLI Login screen.

1.5 - Configuring the ESA

Configuring authentication settings

User authentication is the process of identifying someone who wants to gain access to a resource. A server contains protected resources that are only accessible to authorized users. When you want to access any resource on the server, the server uses different authentication mechanism to confirm your identity.

You can configure the authentication using for the following methods.

Configuring accounts and passwords

You can change your current password from the CLI Manager. The CLI Manager includes options to change passwords and permissions for multiple users.

For more information on configuring accounts and passwords, refer to section Accounts and Passwords Management.

Configuring Syslog

The Appliance Logs are available for Protegrity Appliances, such as, the ESA or the DSG. The Appliance Logs tool can be differentiated into appliance common logs and appliance-specific logs. Syslog is a log type that is common for all appliances.

For more information about configuring syslog, refer the section Working with Logs.

Configuring external certificates

External certificates or digital certificates are used to encrypt online communications securely between two entities over the Internet. It is a digitally signed statement that is used to assert the online identities of individuals, computers, and other entities on the network, utilizing the security applications of Public Key Infrastructure (PKI). Public Key Infrastructure (PKI) is the standard cryptographic system that is used to facilitate the secure exchange of information between entities.

For more information on configuring certificates, refer here.

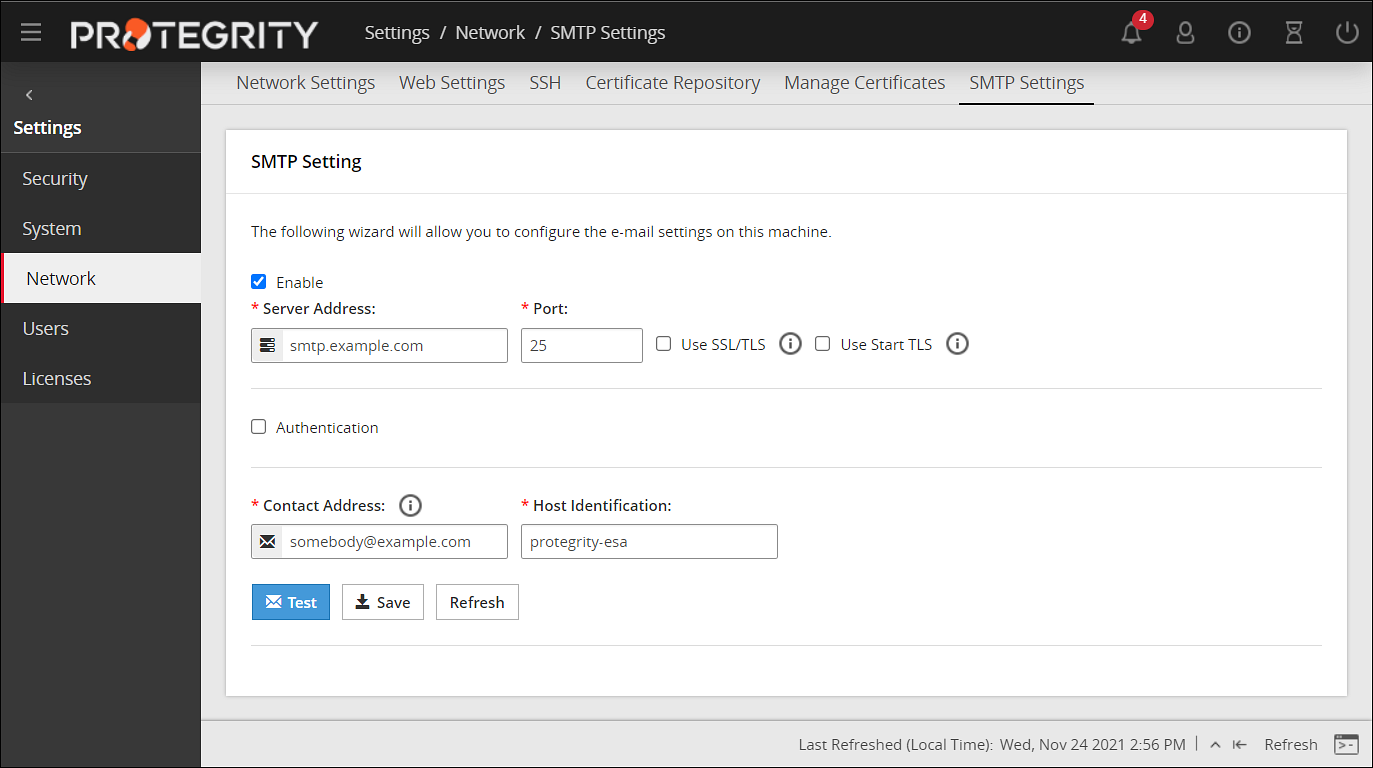

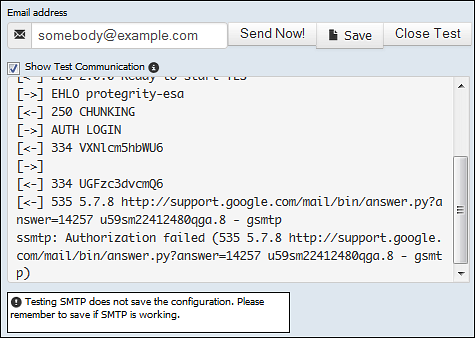

Configuring SMTP

The Simple Mail Transfer Protocol (SMTP) setting allows the system to send emails. You can set up an email server that supports the notification features in Protegrity Reports.

To configure SMTP from Web UI:

Login to the ESA.

Navigate to Settings > Network.

Click the SMTP Settings tab.

The following screen appears.

For more information about configuring SMTP, refer Email Setup.

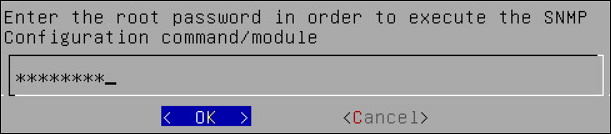

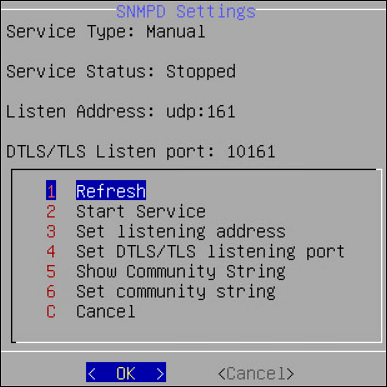

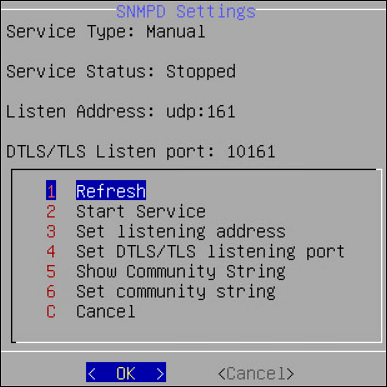

Configuring SNMP

Using Simple Network Management Protocol (SNMP), you can query the performance data.

By default, due to security reasons, the SNMP service is disabled. To enable the service and provide its basic configuration (listening address, community string) you can use the SNMP tool available in the CLI Manager.

To initialize SNMP configuration:

Login to the CLI Manager.

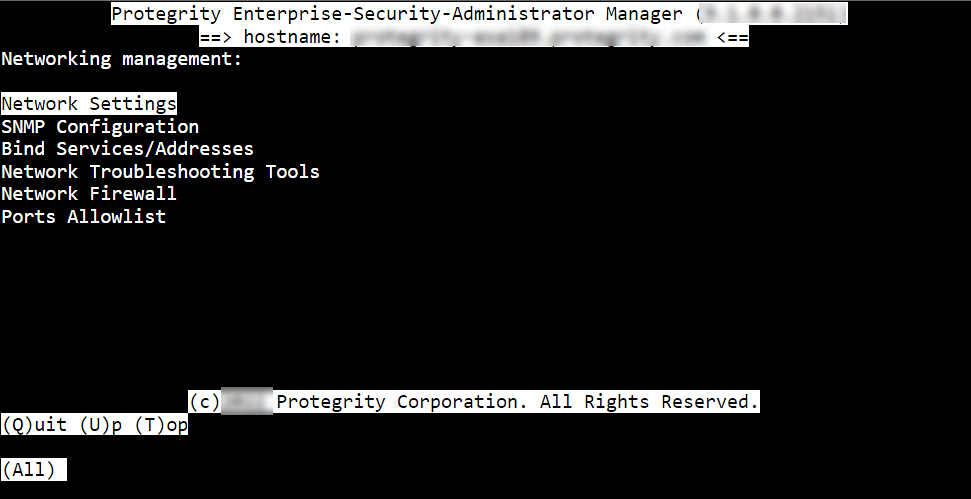

Navigate to Networking > SNMP Configuration.

Enter the root password to execute the SNMP configuration and click OK.

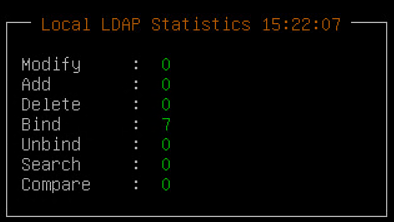

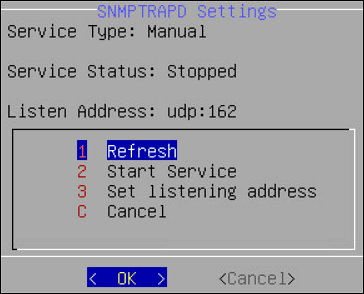

The following screen appears.

You can also start the SNMP Service from the Web UI. Navigate to System > Services to start the SNMP service.

For more information about configuring SNMP, refer Configure SNMP.

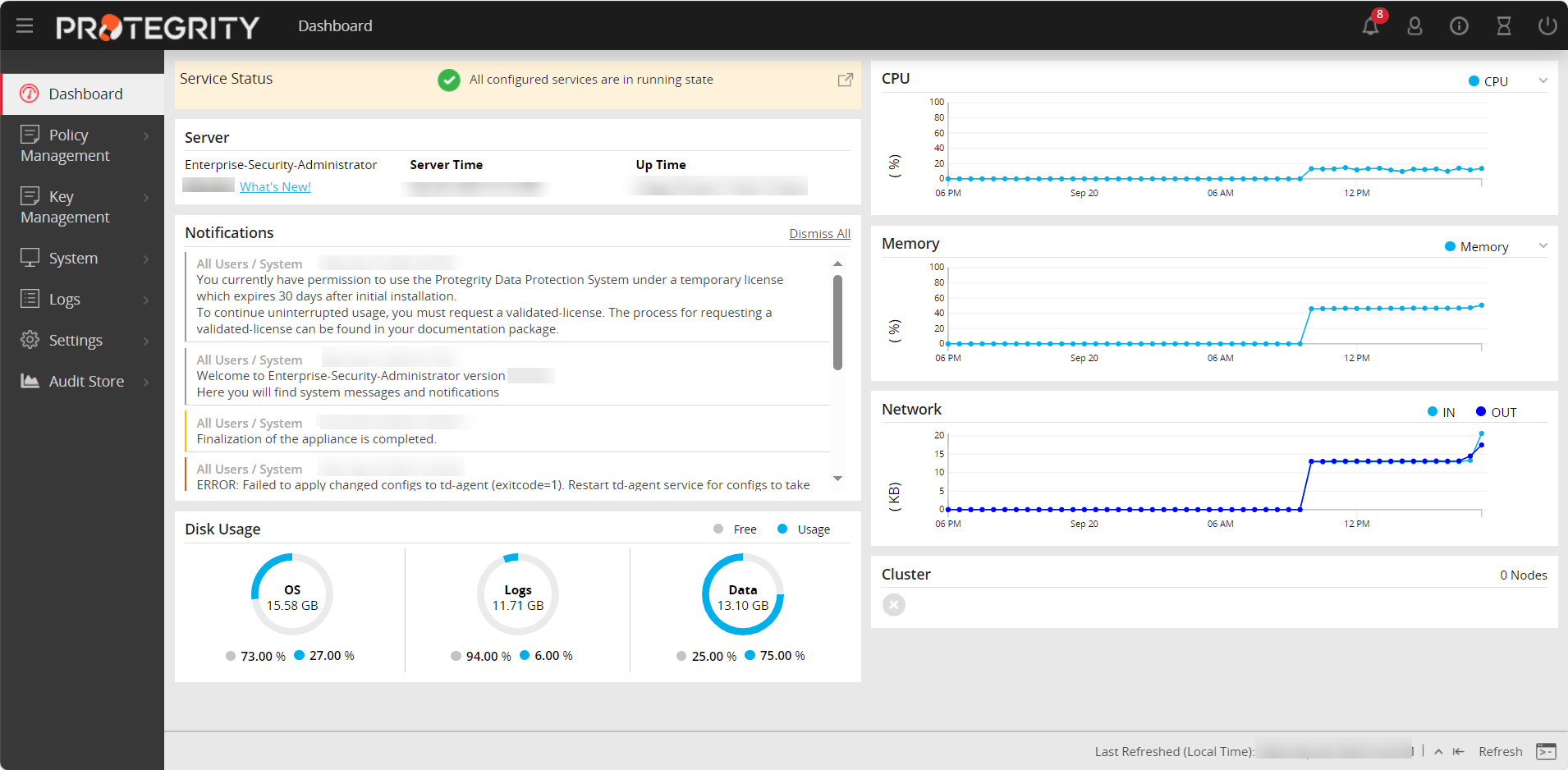

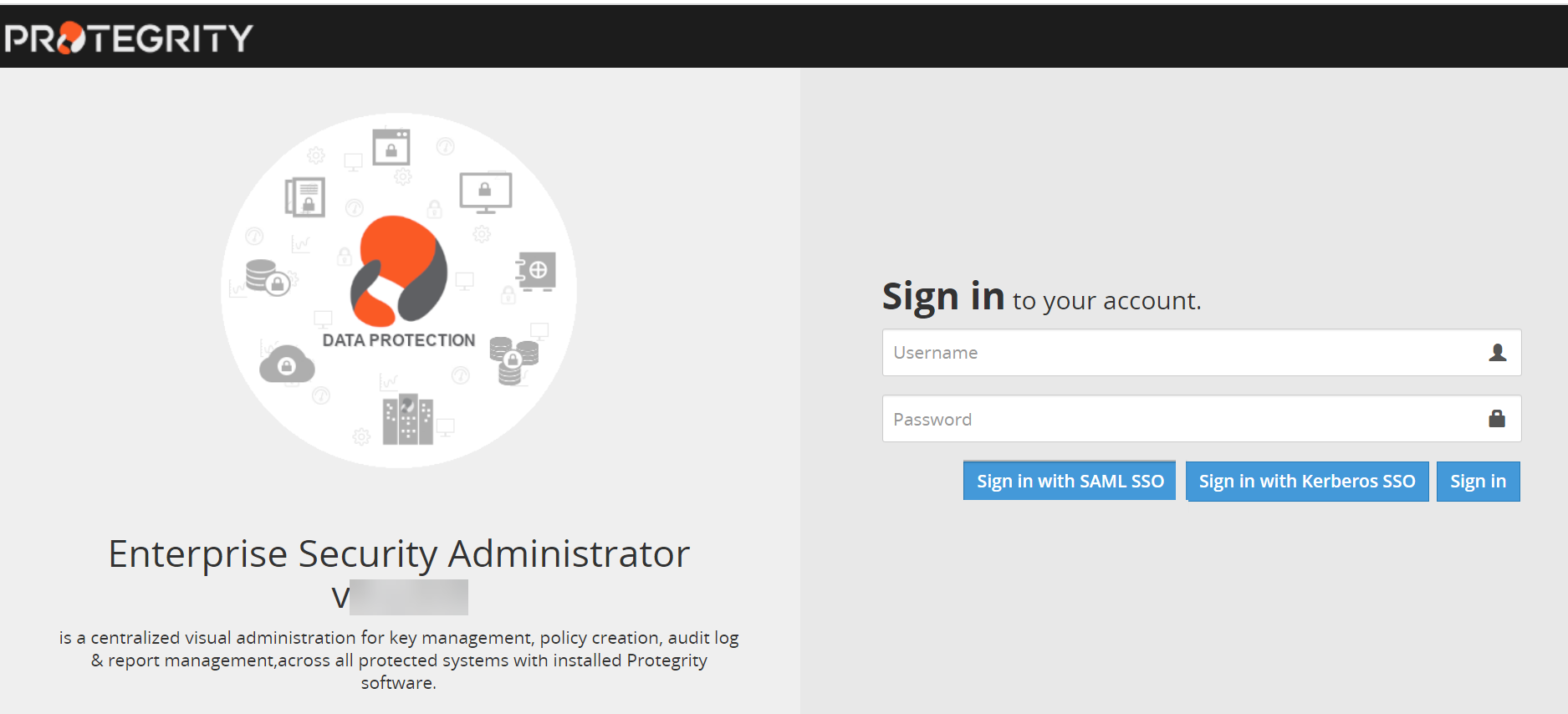

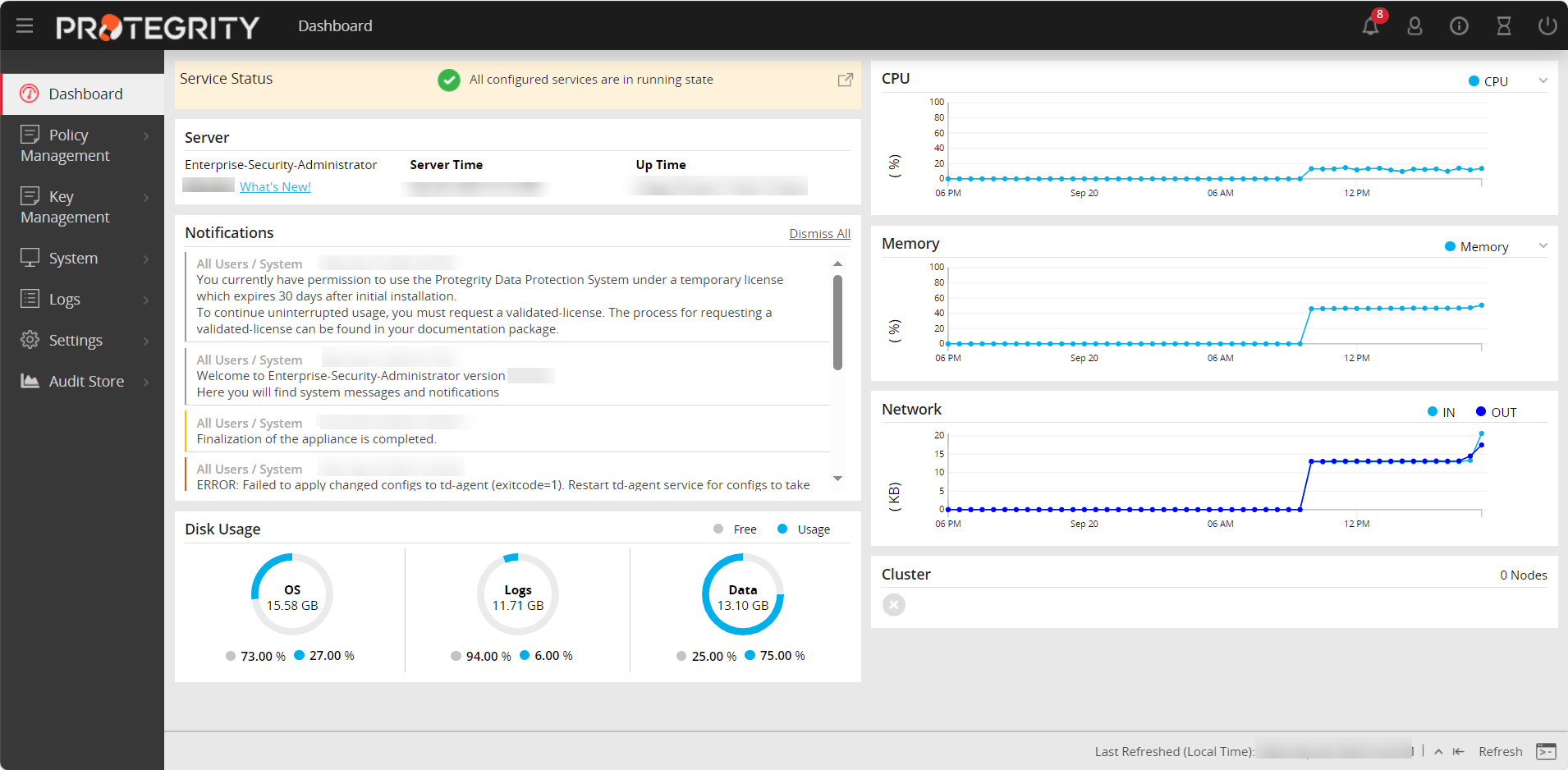

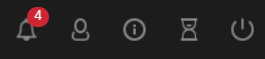

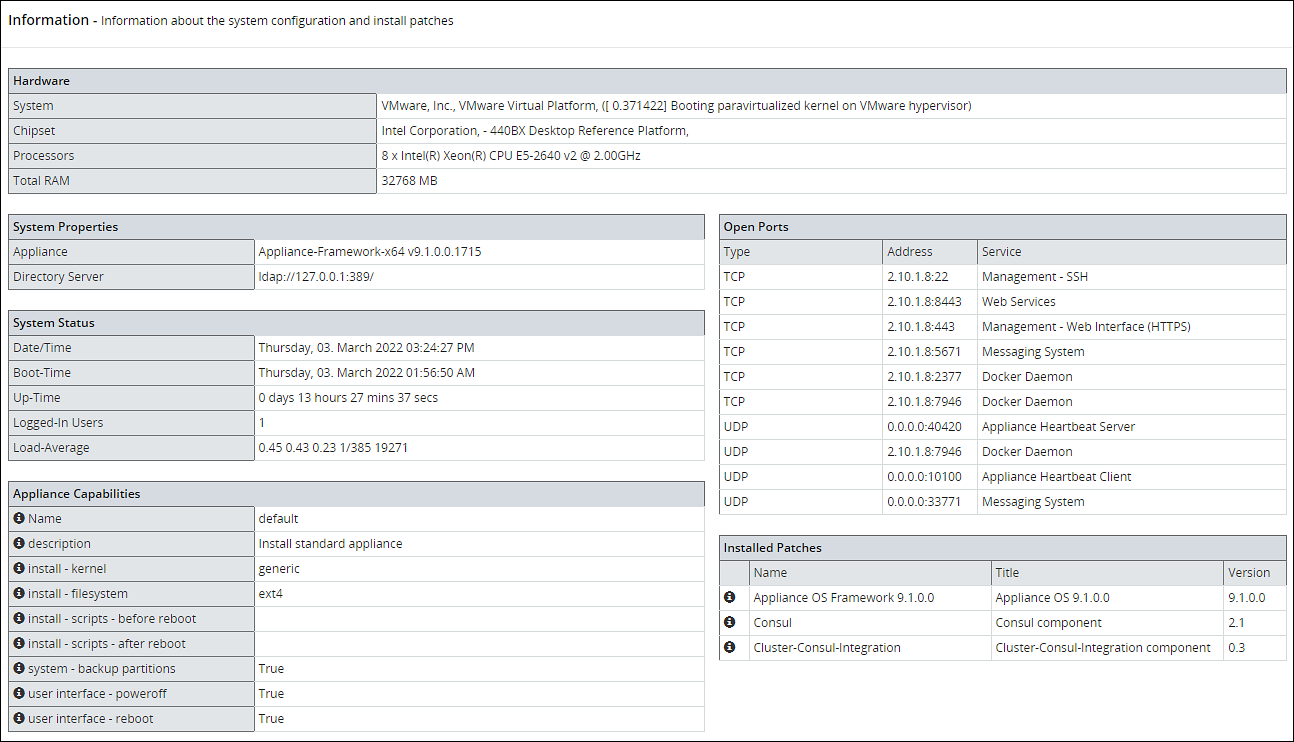

1.6 - Verifying the ESA installation from the Web UI

To verify the ESA installation from the Web UI:

Login to the ESA Web UI.

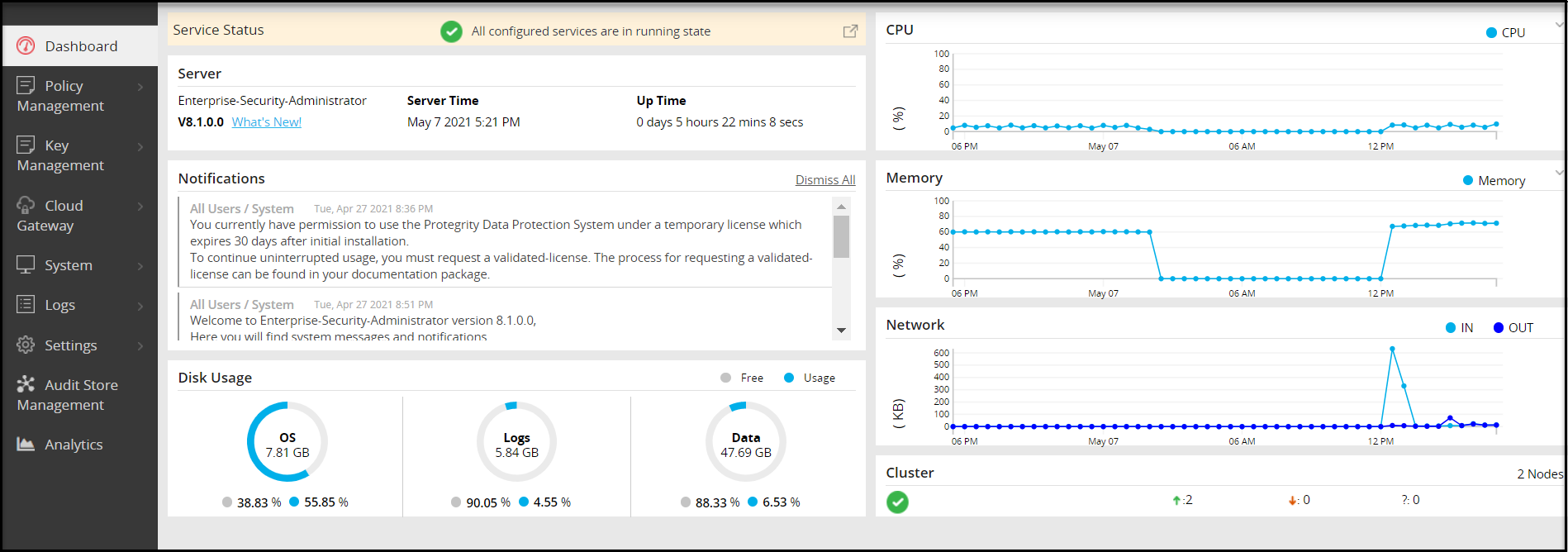

The ESA dashboard appears.

Navigate to System > Information.

The screen displaying the information of your system appears.

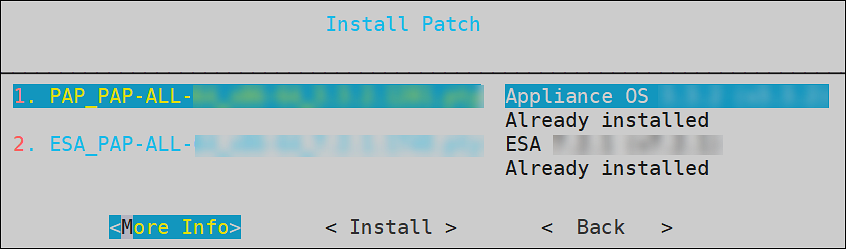

Under the Installed Patches area, the ESA_10.1.0 entry appears.

Navigate to System > Services and ensure that all the required services are running.

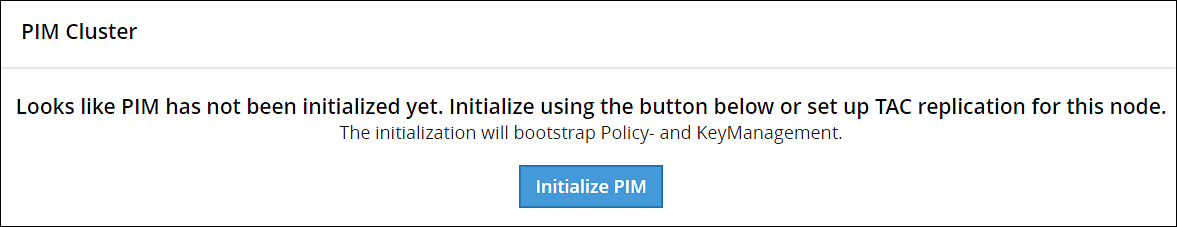

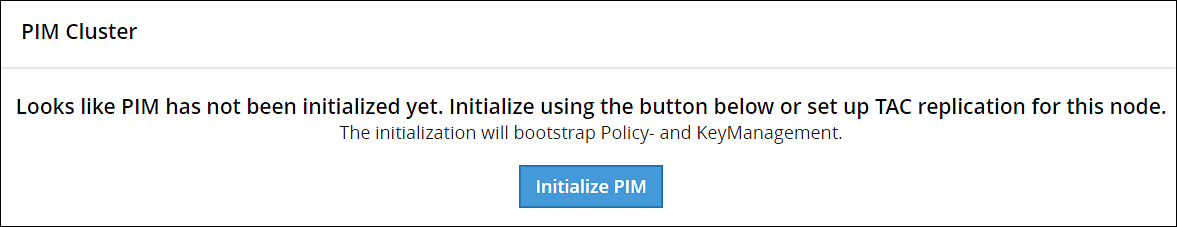

1.7 - Initializing the Policy Information Management (PIM) Module

To initialize the PIM module:

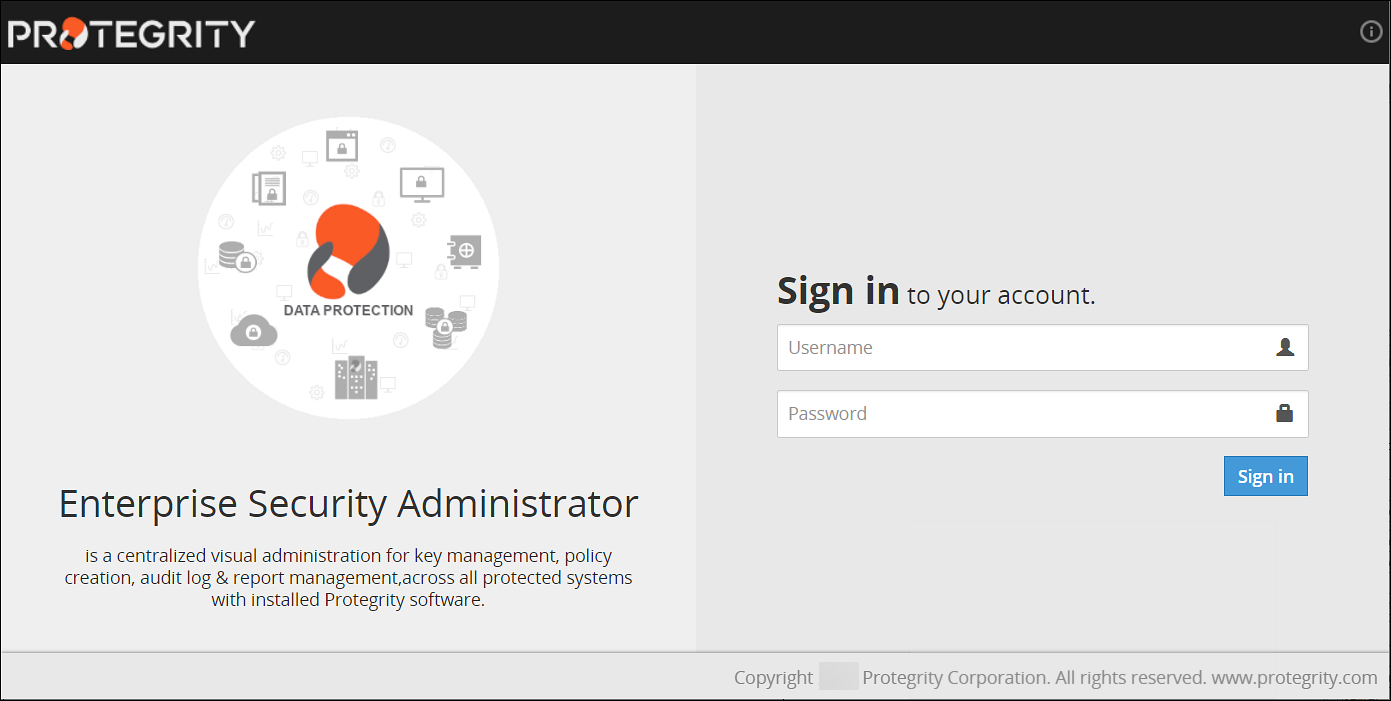

In a web browser, enter the ESA IP address in the window task bar.

Enter the Username and Password.

Click Sign in.

The ESA dashboard appears.

Navigate to Policy Management > Dashboard.

The following screen to initialize PIM appears.

Click Initialize PIM.

A confirmation message appears.

Click OK.

The Policy management screen appears.

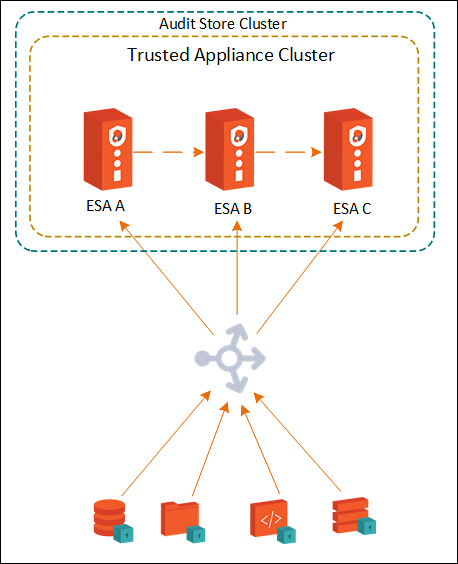

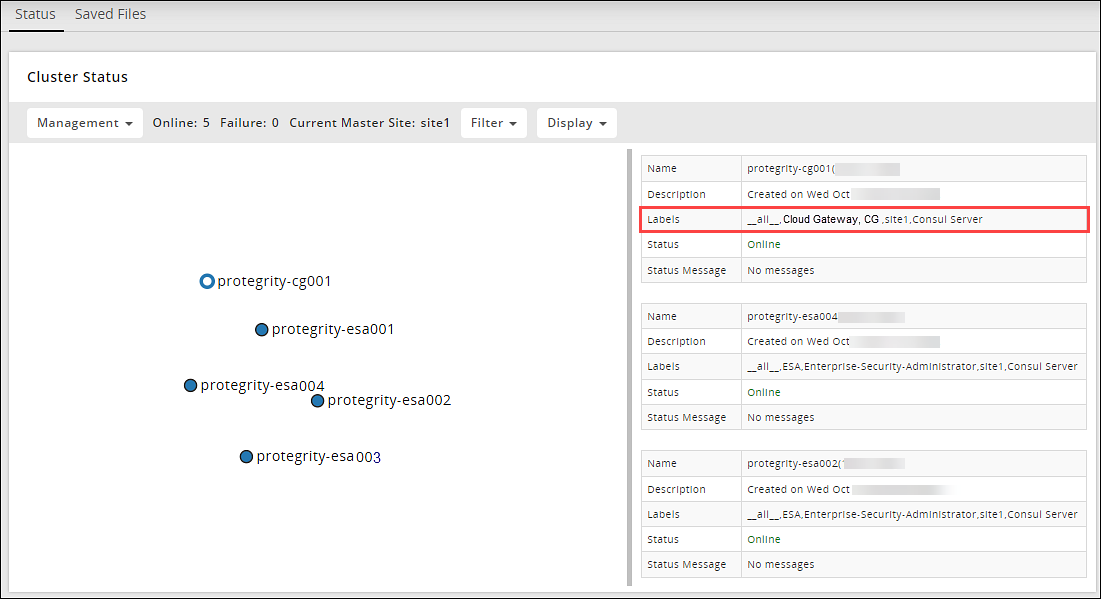

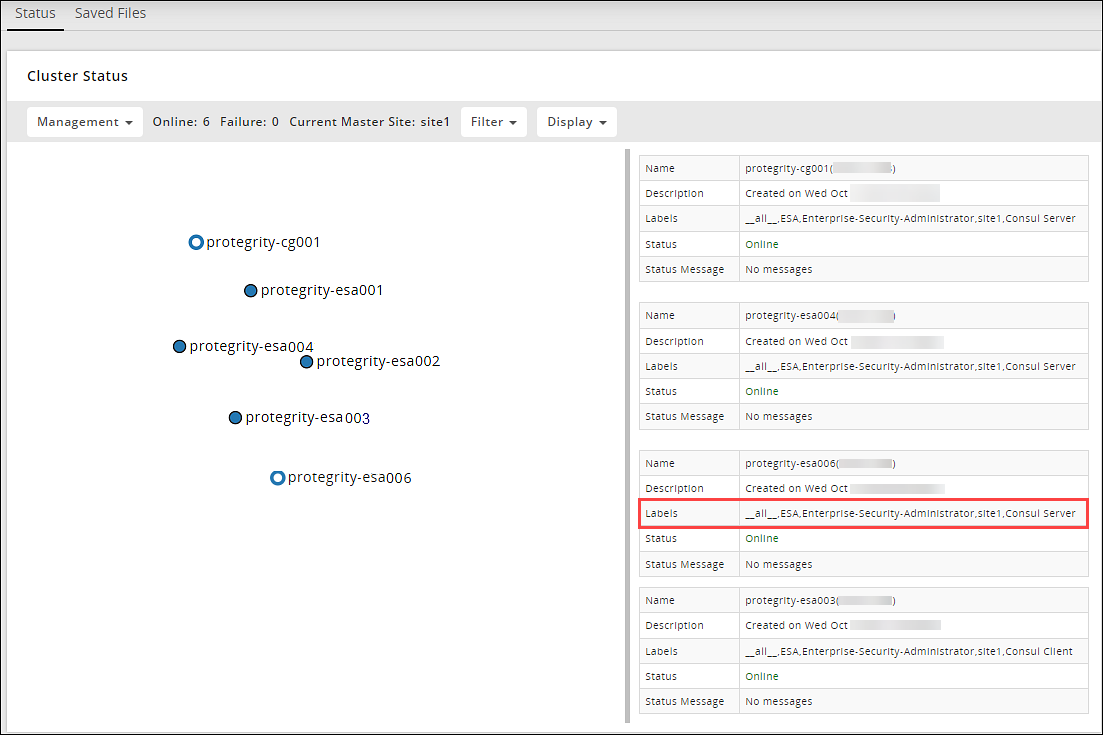

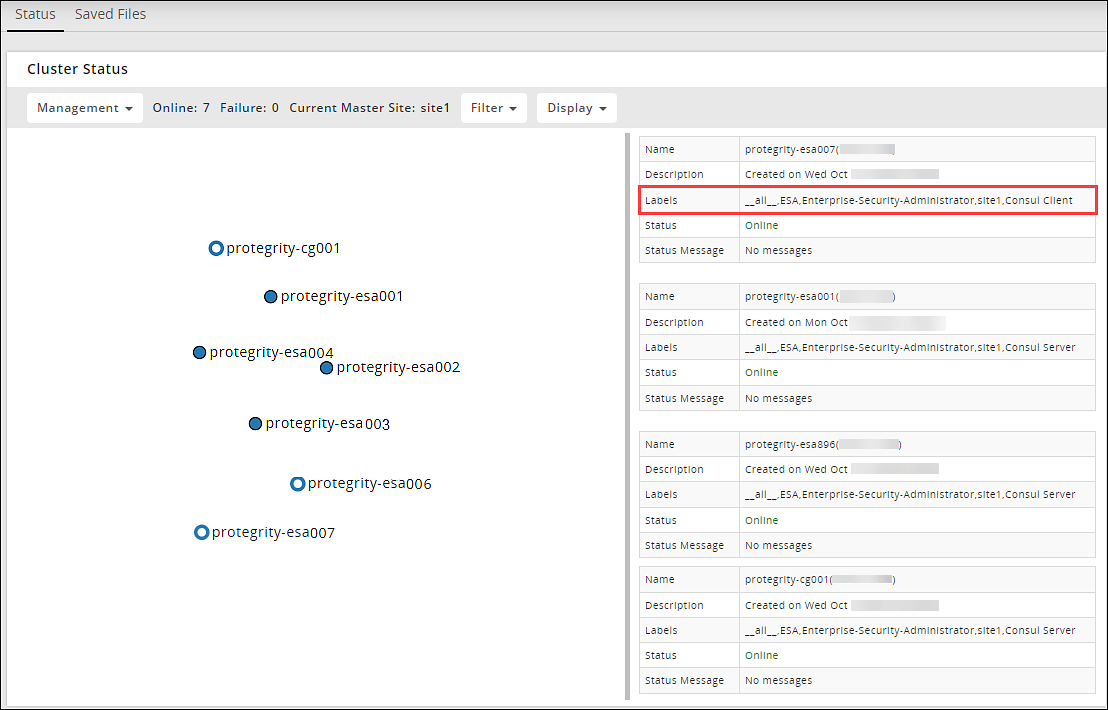

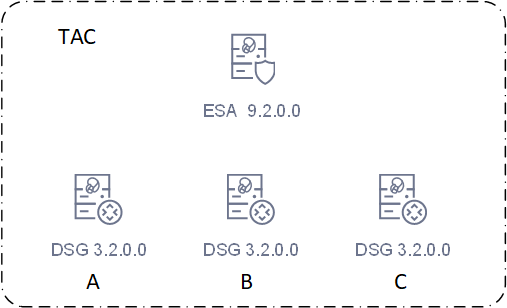

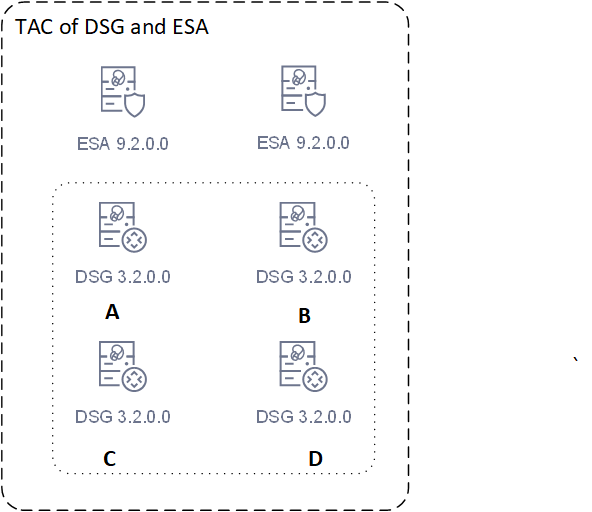

1.8 - Configuring the ESA in a Trusted Appliances Cluster (TAC)

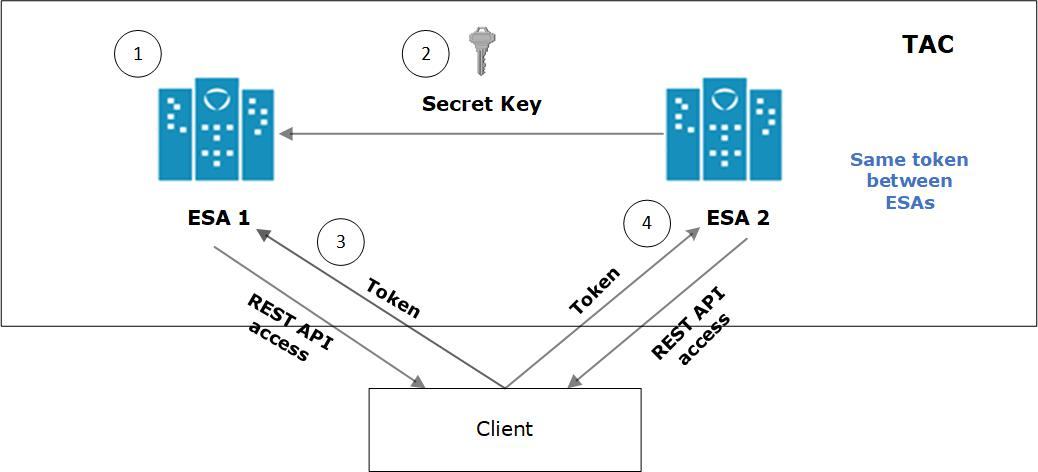

The following figure illustrates the TAC setup.

TAC is established between the primary ESA A and the secondary ESAs, ESA B and ESA C.

For more information about TAC, refer here.

Data replication for policies, forensics, or DSG configuration takes place between all the ESAs.

For more information about replication tasks, refer here.

The Audit Store cluster is enabled for the ESAs.

For more information about enabling Audit Store Cluster, refer here.

All the ESAs are added as a part of the Audit Store Cluster.

For more information about adding an ESA to the Audit Store Cluster, refer here.

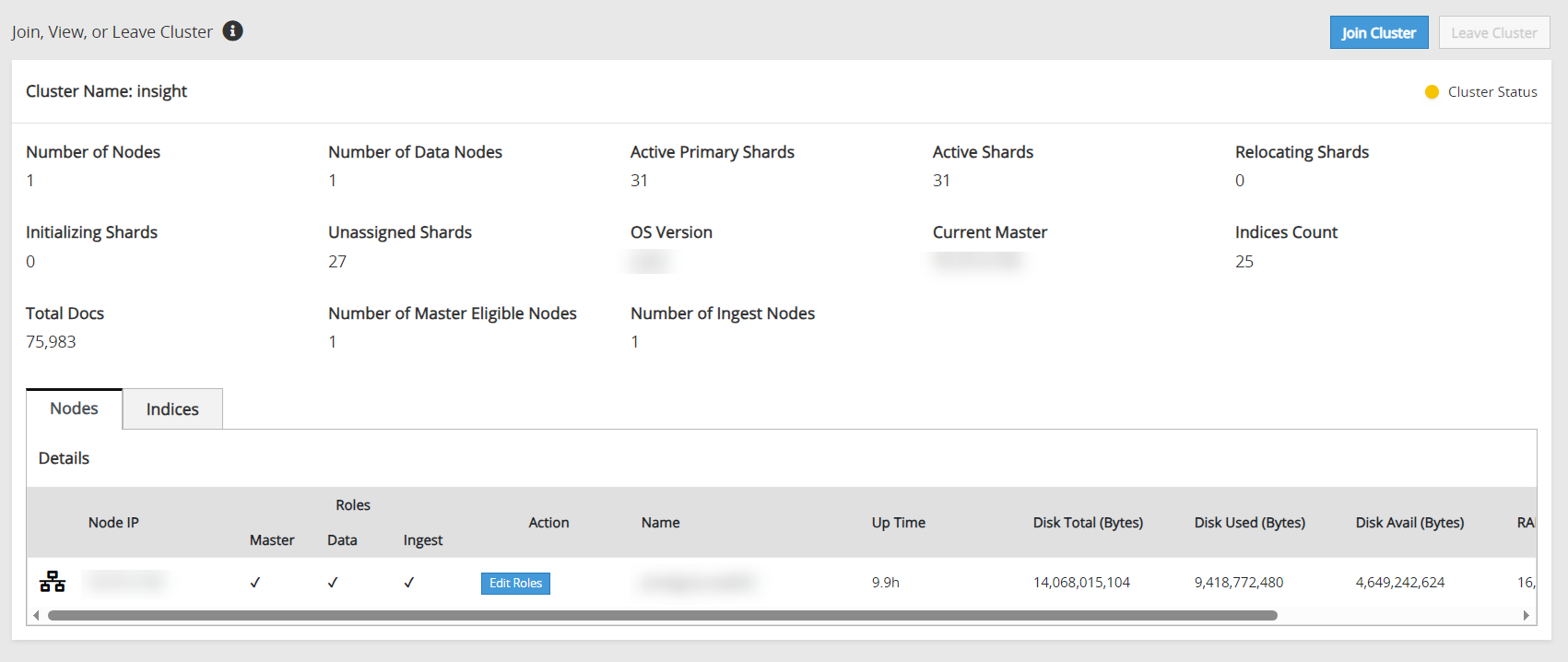

1.9 - Creating an Audit Store Cluster

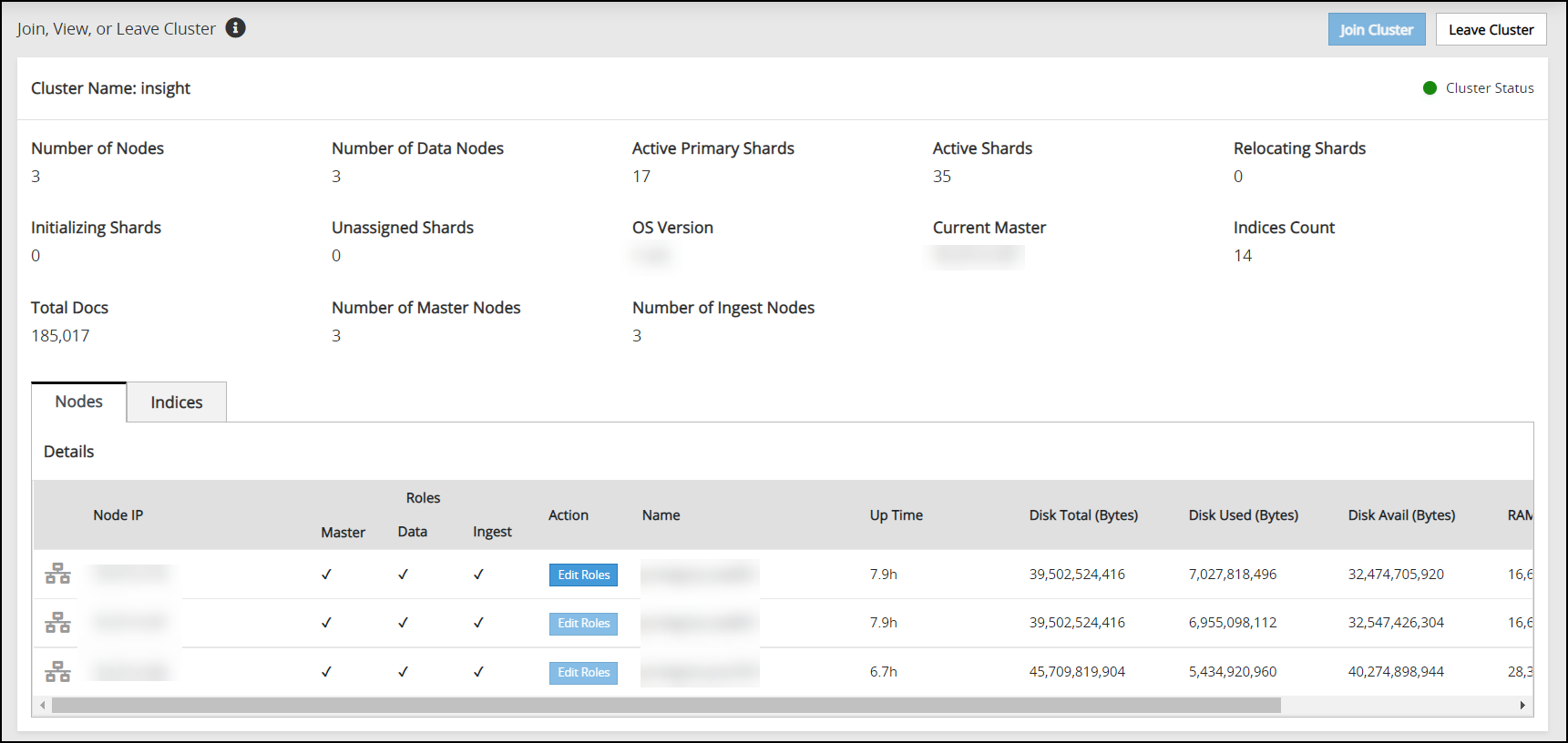

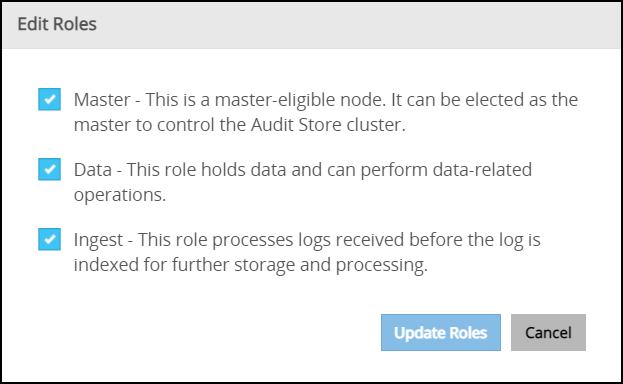

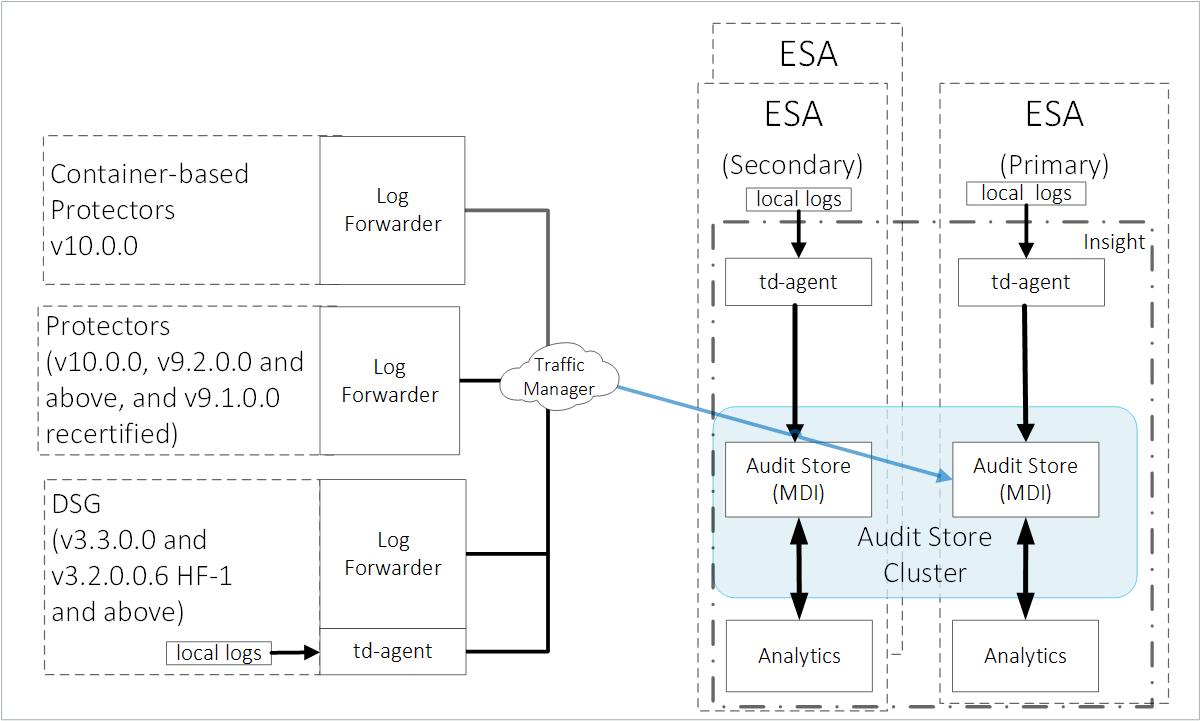

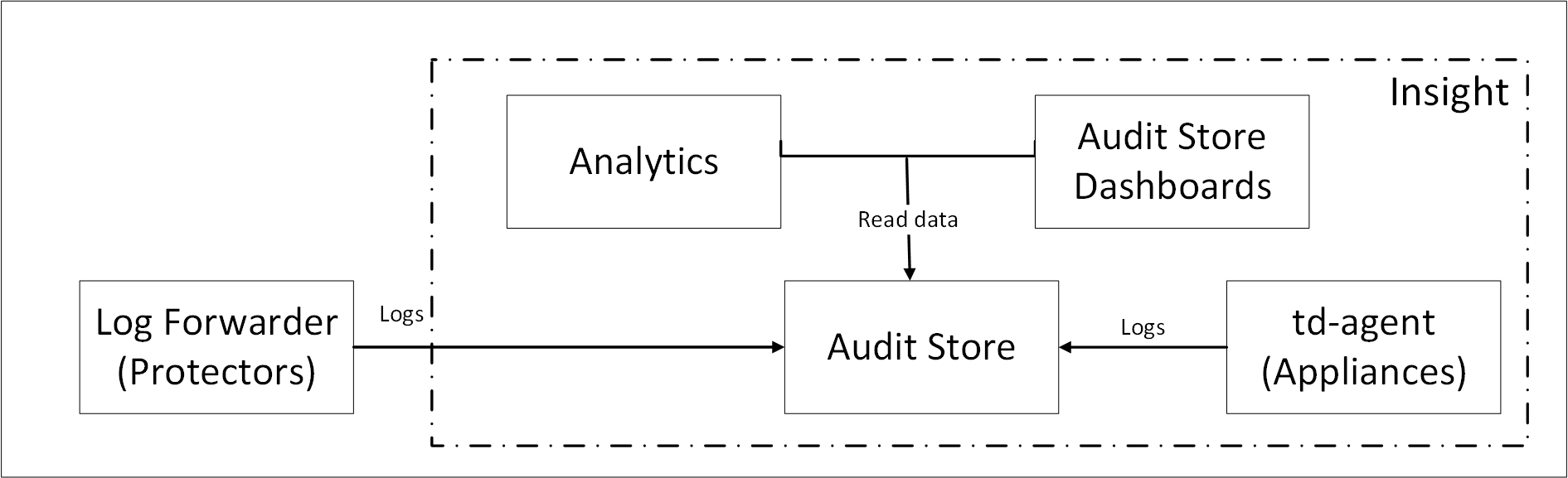

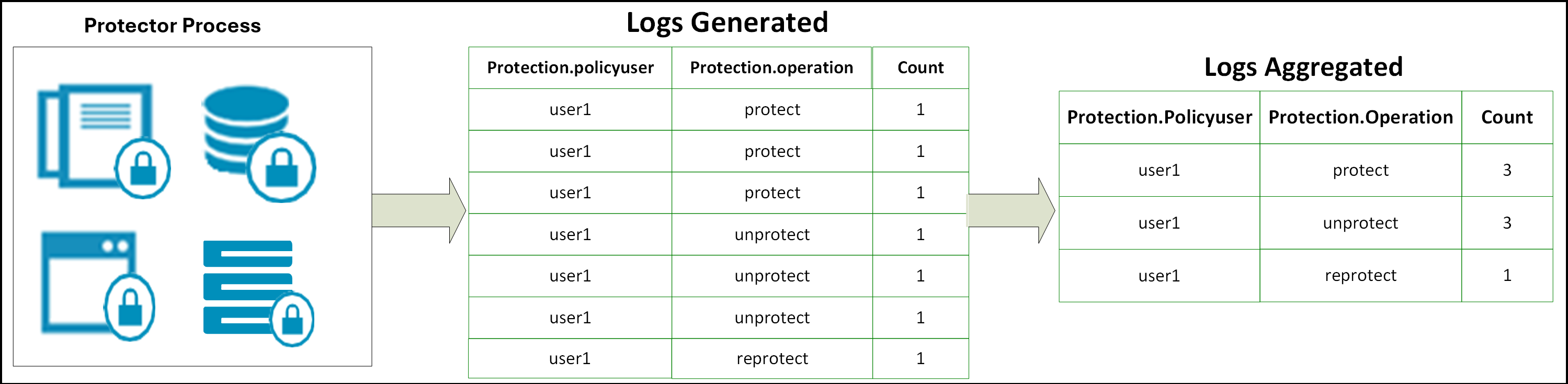

The Audit Store cluster is a collection of nodes that process and store data. The Audit Store is installed on the ESA nodes. The logs generated by the Appliance, such as, ESA or DSG, and Protector machines are stored in this Audit Store. The logs are useful for obtaining information about the nodes and the cluster on the whole. The logs can also be monitored for any data loss, system compromise, or any other issues with the nodes in the Audit Store cluster.

An Audit Store cluster must have a minimum of three nodes with the Master-eligible role due to following scenarios:

- 1 master-eligible node: If only one node with the Master-eligible role is available, then it is elected the Master, by default. In this case, if the node becomes unavailable due to some failure, then the cluster becomes unstable as there is no additional node with the Master-eligible role.

- 2 master-eligible nodes: A cluster where only two nodes have the Master-eligible role, then both have the Master-eligible role at the minimum to be up and running for the cluster to remain functional. If any one of those nodes becomes unavailable due to some failure, then the minimum condition for the nodes with the Master-eligible role is not met and cluster becomes unstable. This setup is not recommended for a multi-node cluster.

- 3 master-eligible nodes and above: In this case, if any one node goes down, then the cluster can still remain functional because the cluster requires a minimum of two nodes with the Master-eligible role.

Completing the Prerequisites

Ensure that the following prerequisites are met before configuring the Audit Store Cluster. Protegrity recommends that the Audit Store Cluster has a minimum of three ESAs for creating a highly-available multi-node Audit Store cluster.

Prepare and set up three v10.1.0 ESAs.

Create the TAC on the first ESA. This will be the Primary ESA.

Add the remaining ESAs to the TAC. These will be the secondary ESAs in the TAC. For more information about installing the ESA, refer here.

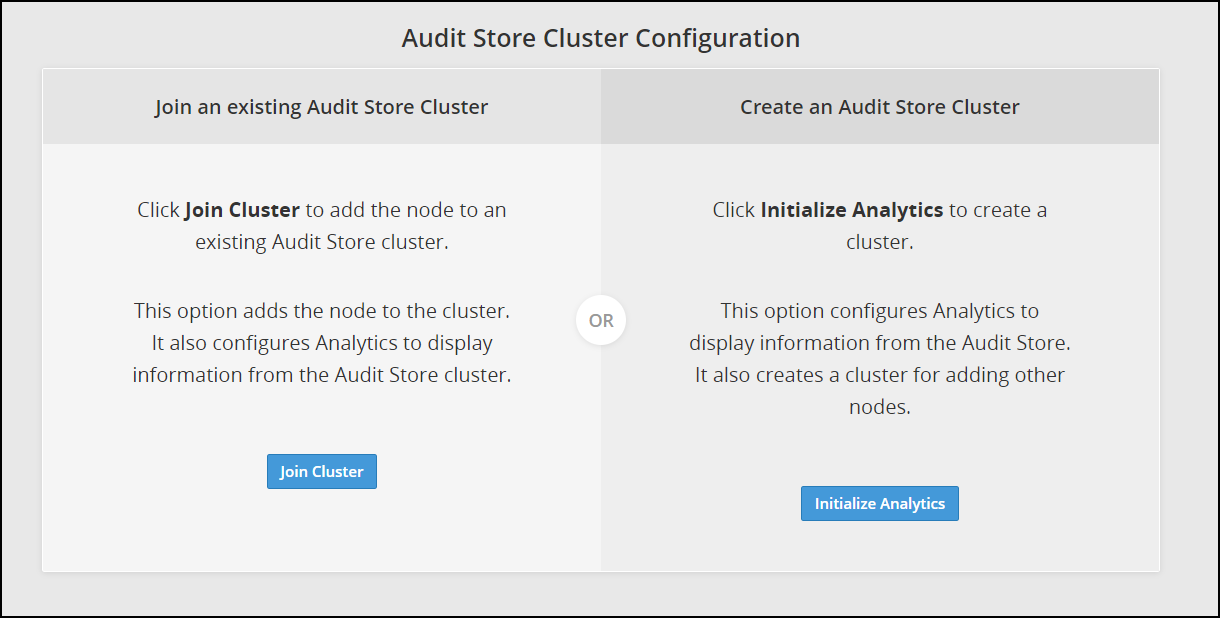

Creating the Audit Store Cluster on the ESA

Initialize the Audit Store only on the first ESA or the Primary ESA in the TAC. This also configures Insight to retrieve data from the Audit Store. Additionally, the required processes, such as, td-agent, is started and Protegrity Analytics is initialized. The Audit Store cluster is initialized on the local machine so that other nodes can join this Audit Store cluster.

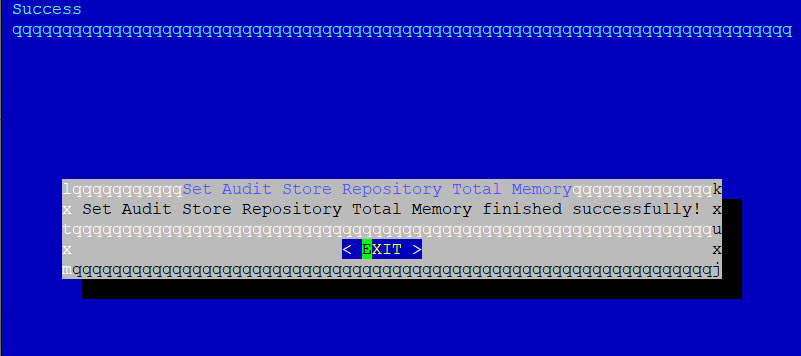

Perform the following steps to initialize the Audit Store.

Log in to the ESA Web UI.

Navigate to Audit Store > Initialize Analytics.

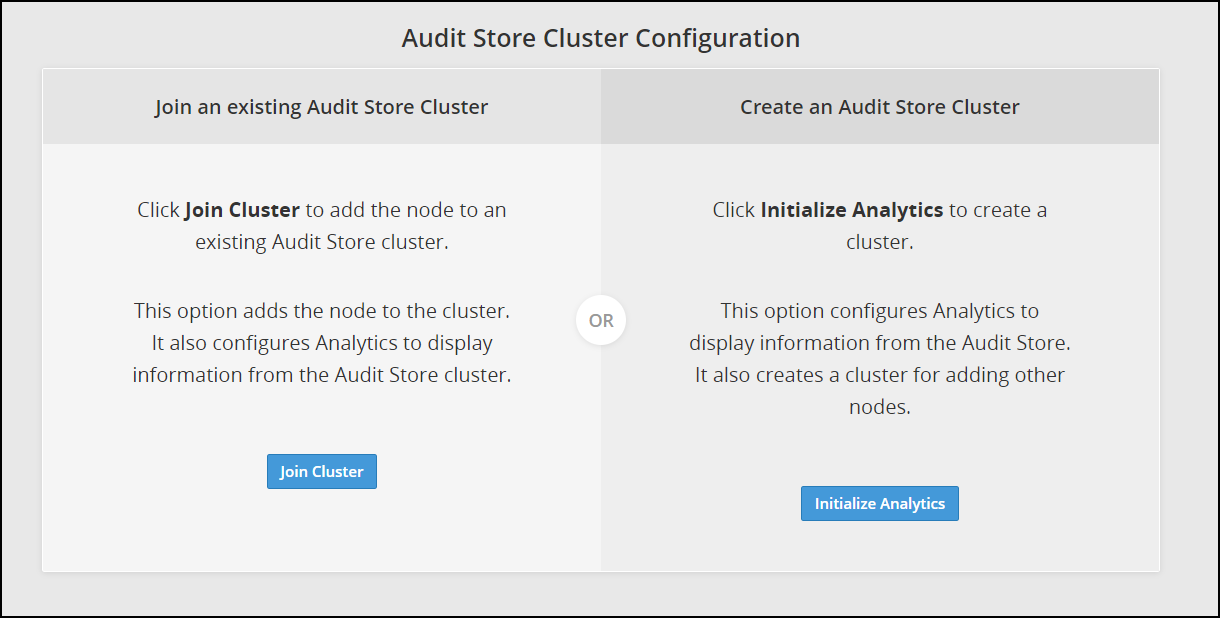

The following screen appears.

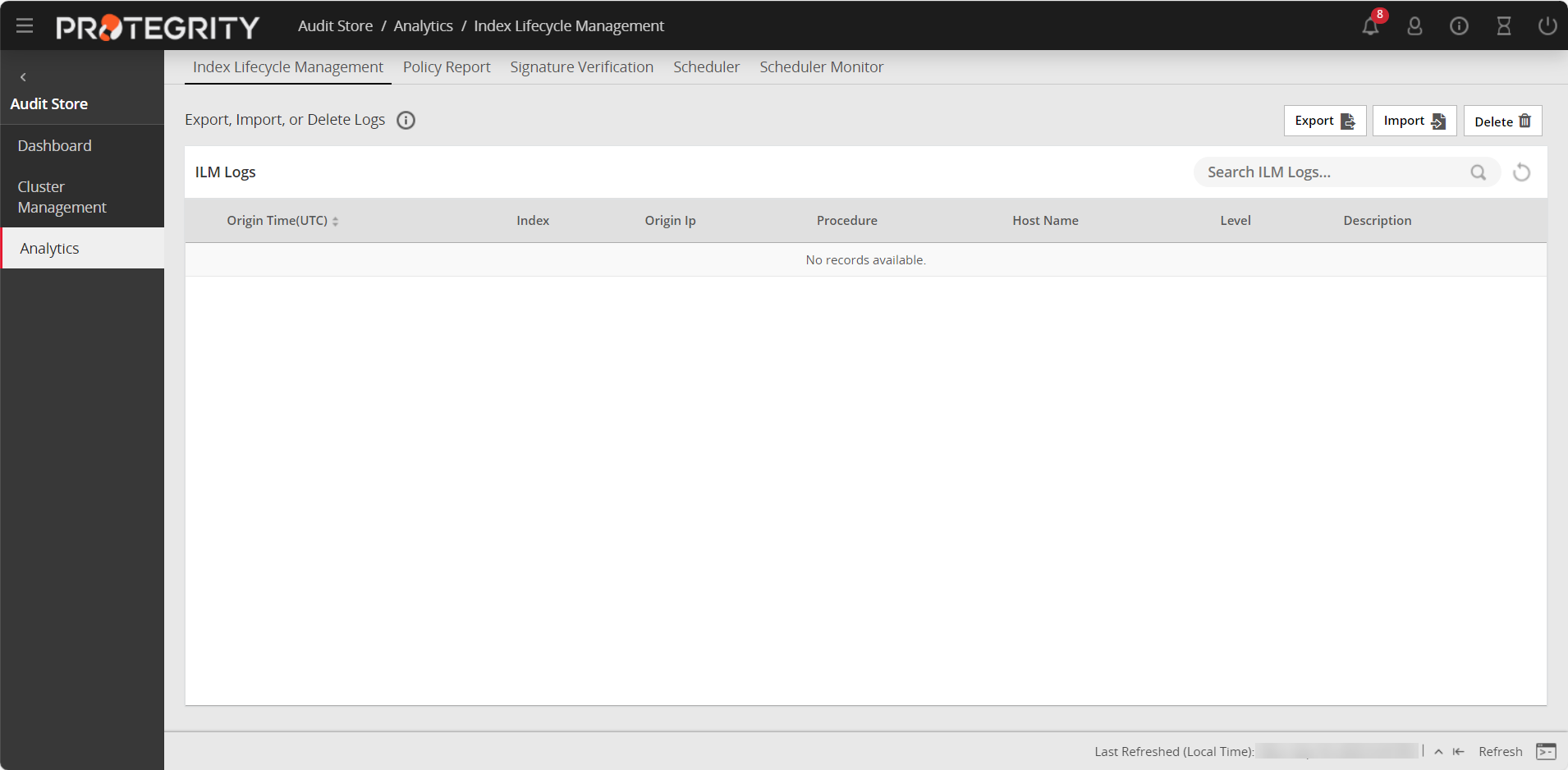

Click Initialize Analytics.

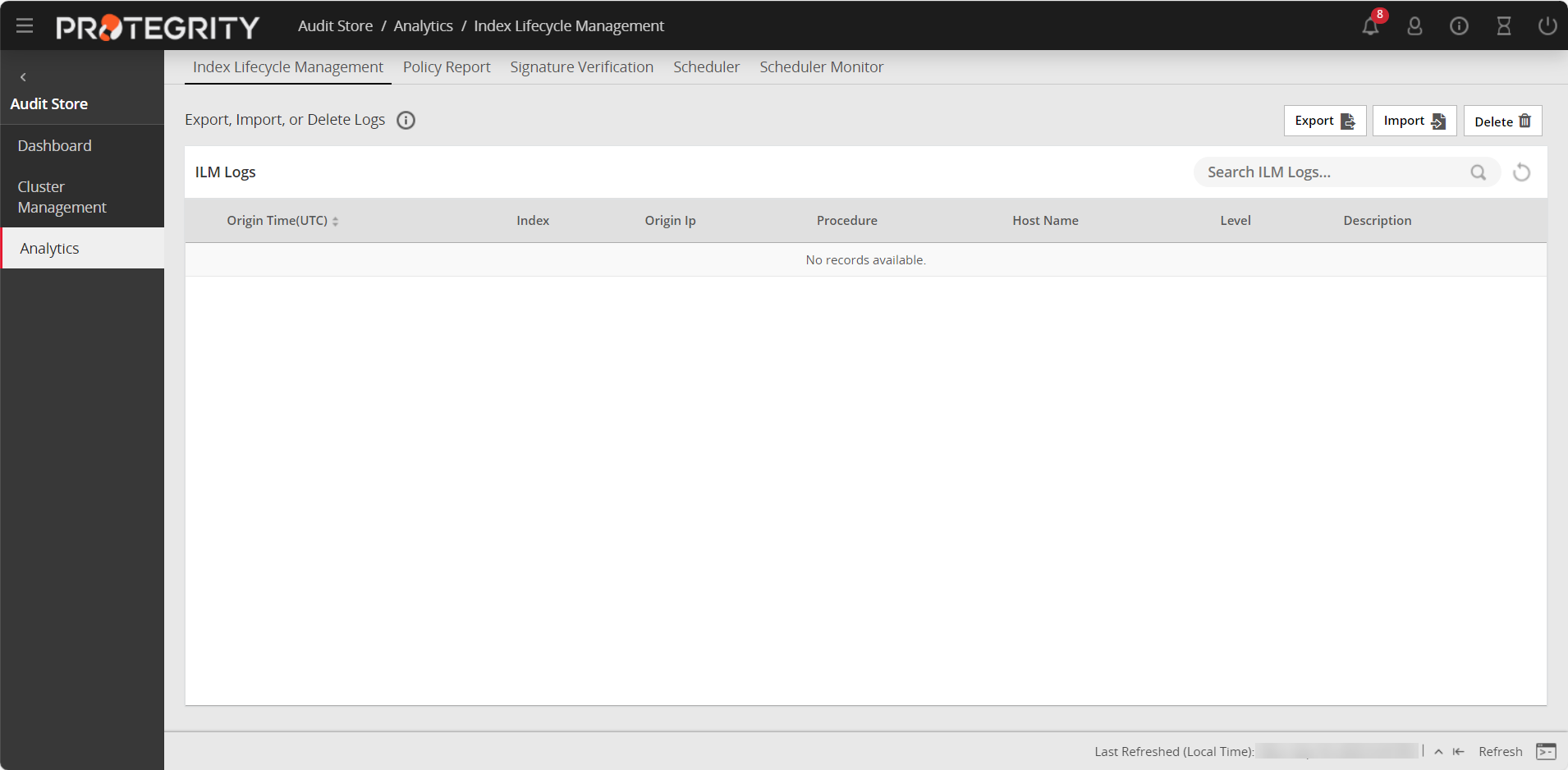

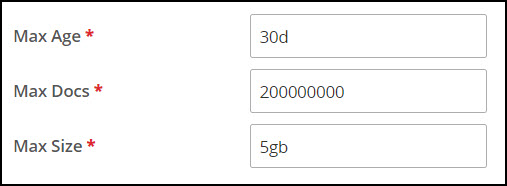

Protegrity Analytics is now configured and retrieves data for the reports from the Audit Store. The Index Lifecycle Management screen is displayed. The data is available on the Audit Store > Dashboard tab.

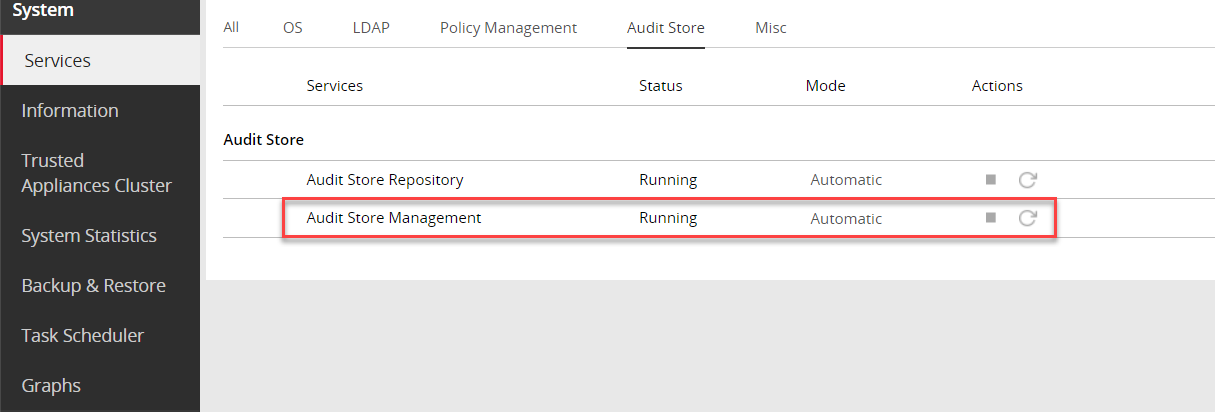

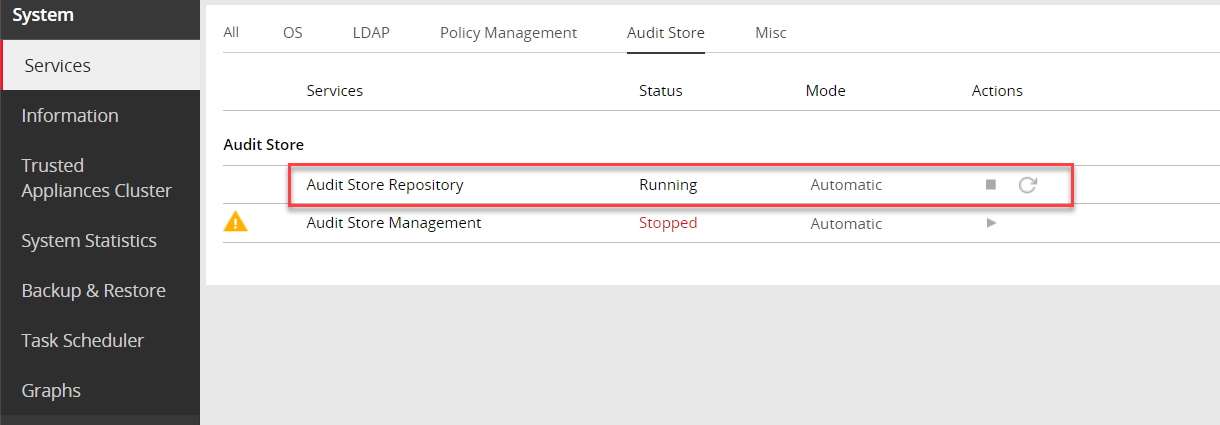

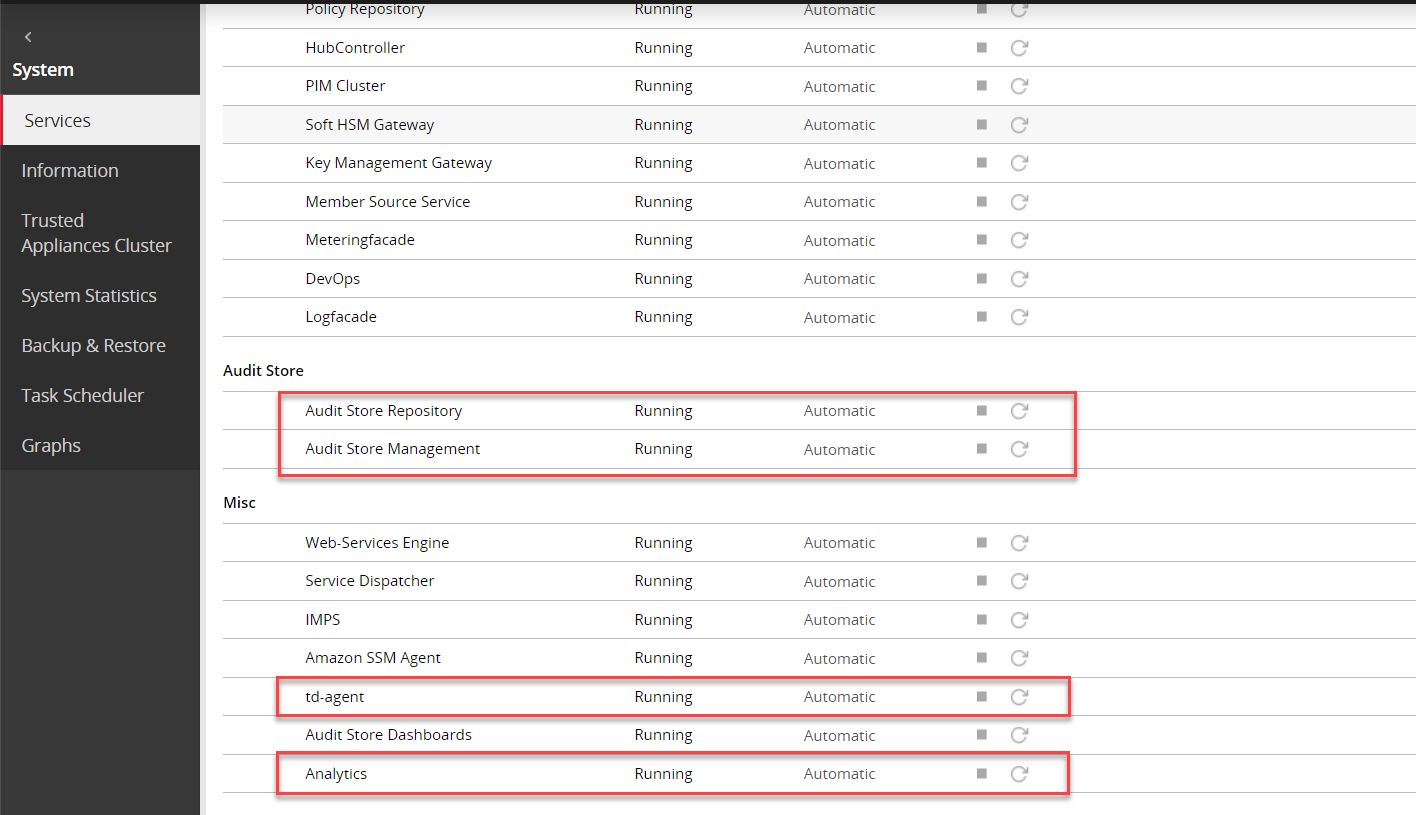

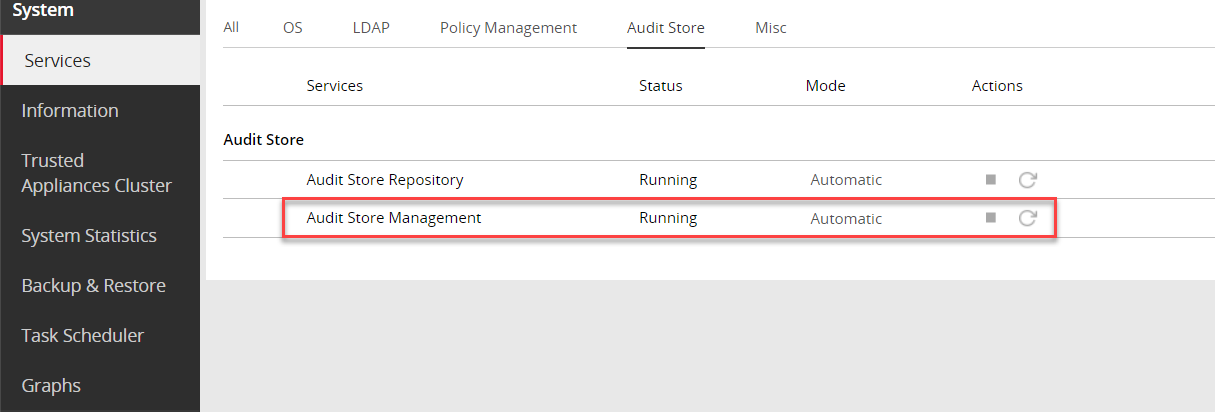

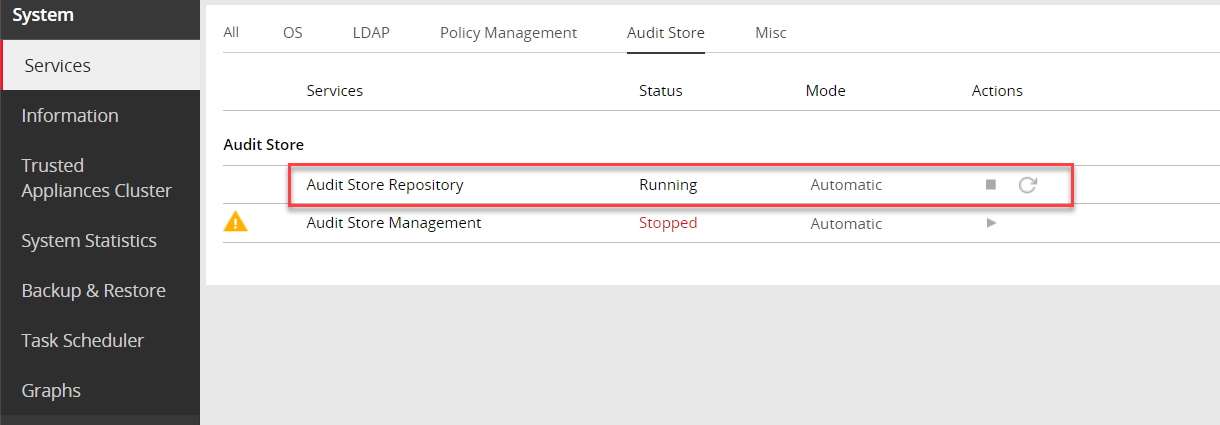

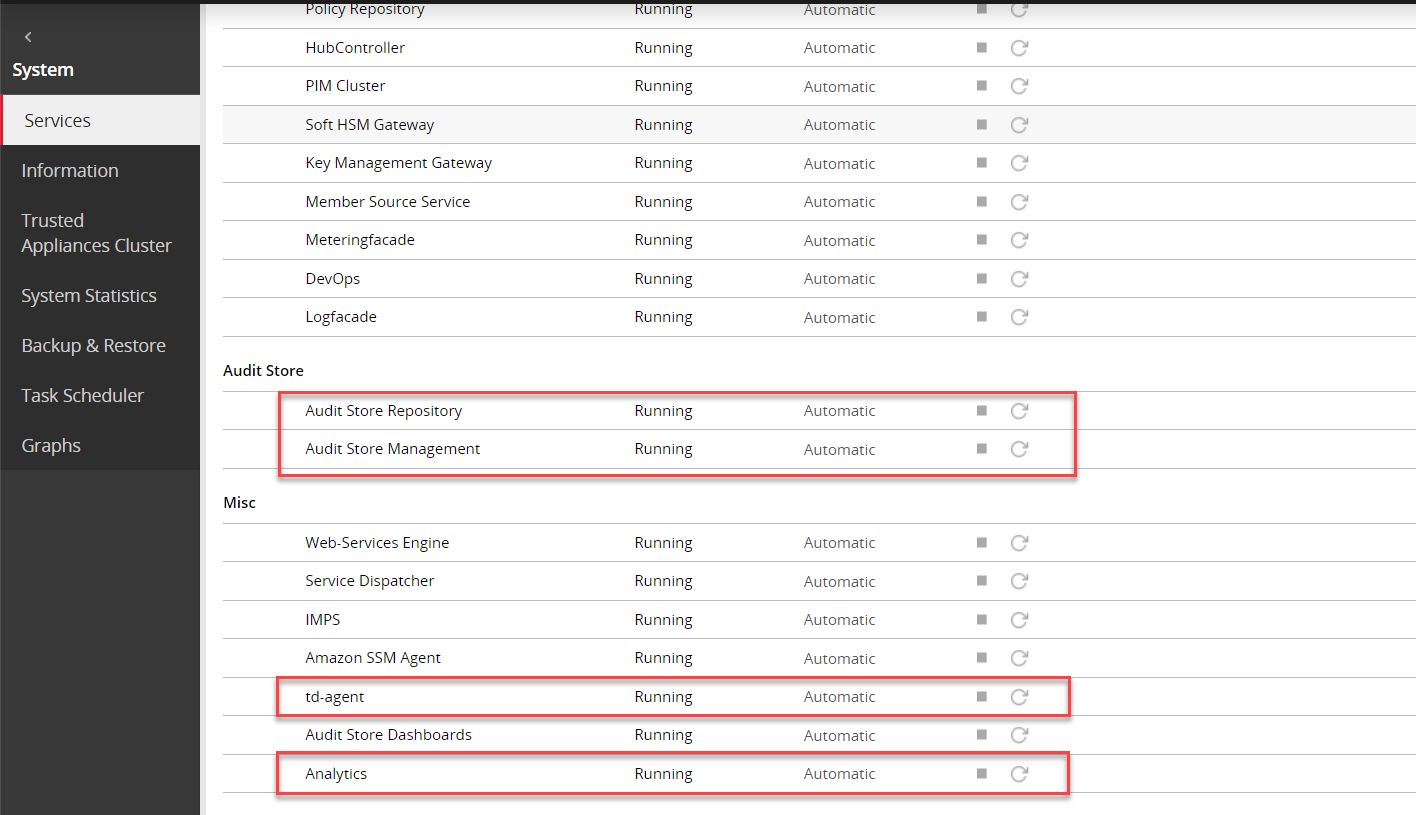

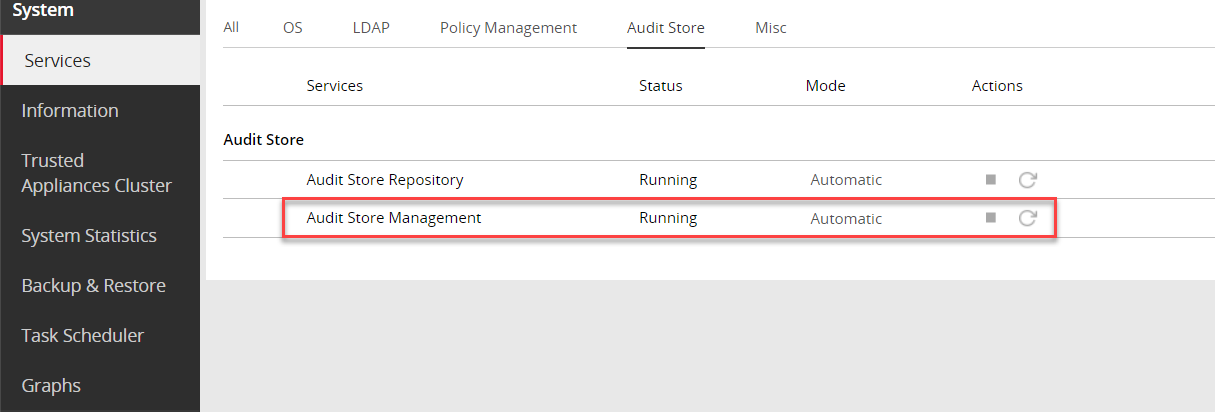

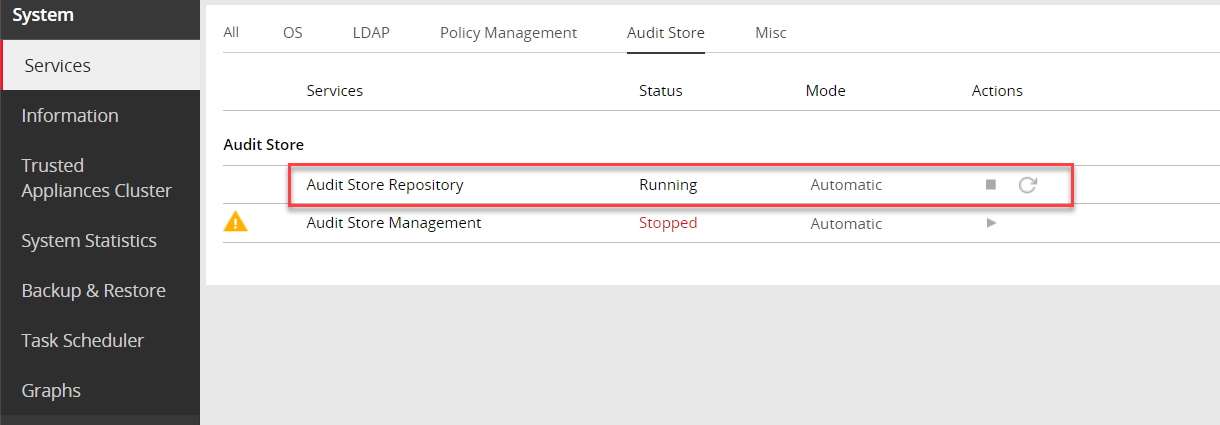

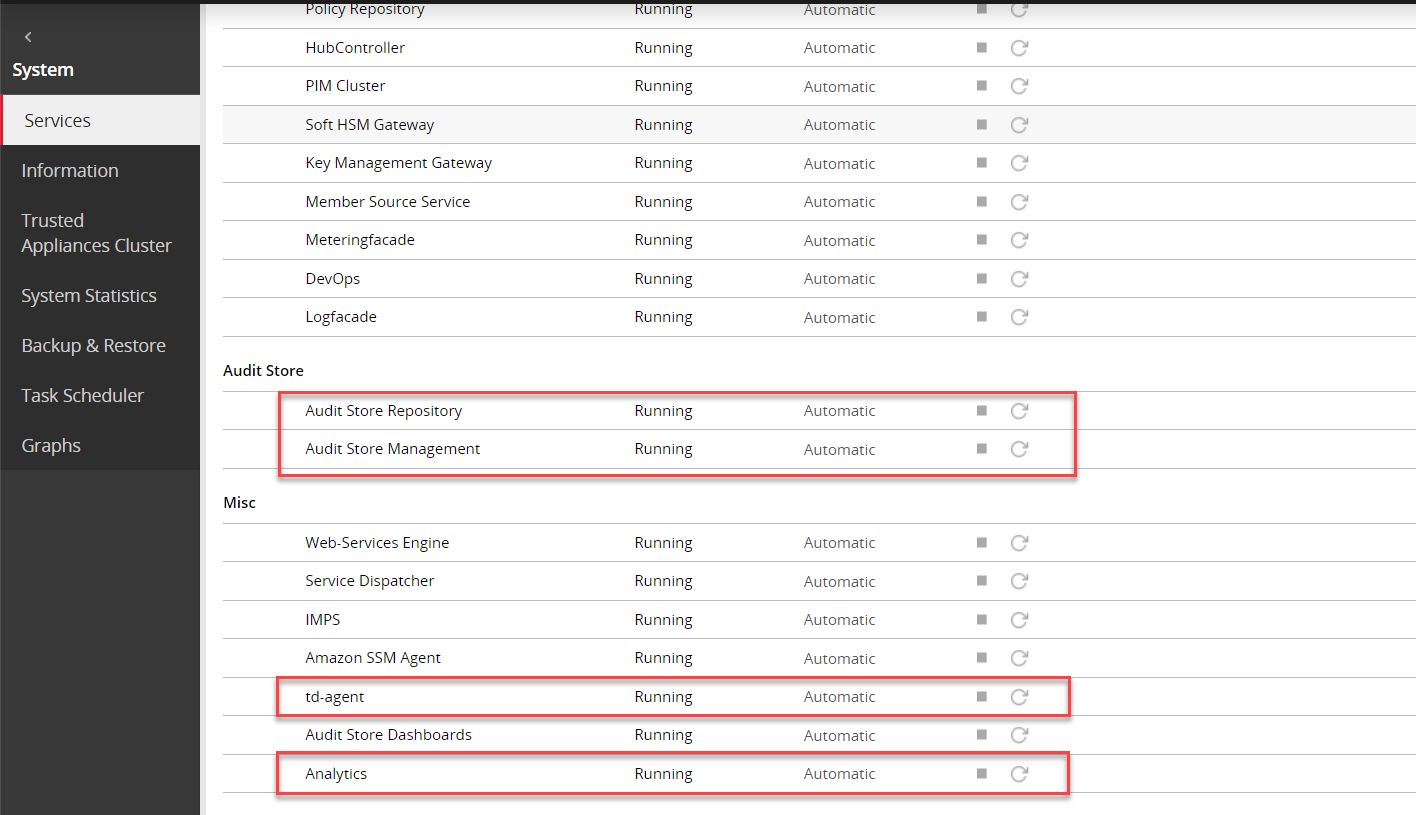

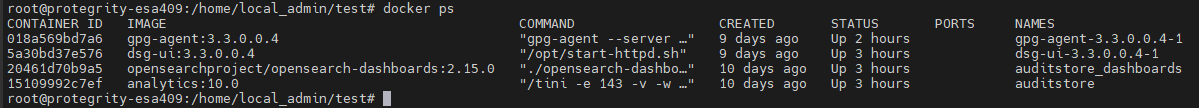

Verify that the following Audit Store services are running by navigating to System > Services:

- Audit Store Management

- Audit Store Repository

- Audit Store Dashboards

- Analytics

- td-agent

Adding an ESA to the Audit Store Cluster

Add multiple ESAs to the Audit Store cluster to increase the cluster size. In this case, the current ESA is added as a node in the Audit Store cluster. After the configurations are completed, the required processes are started and the logs are read from the Audit Store cluster.

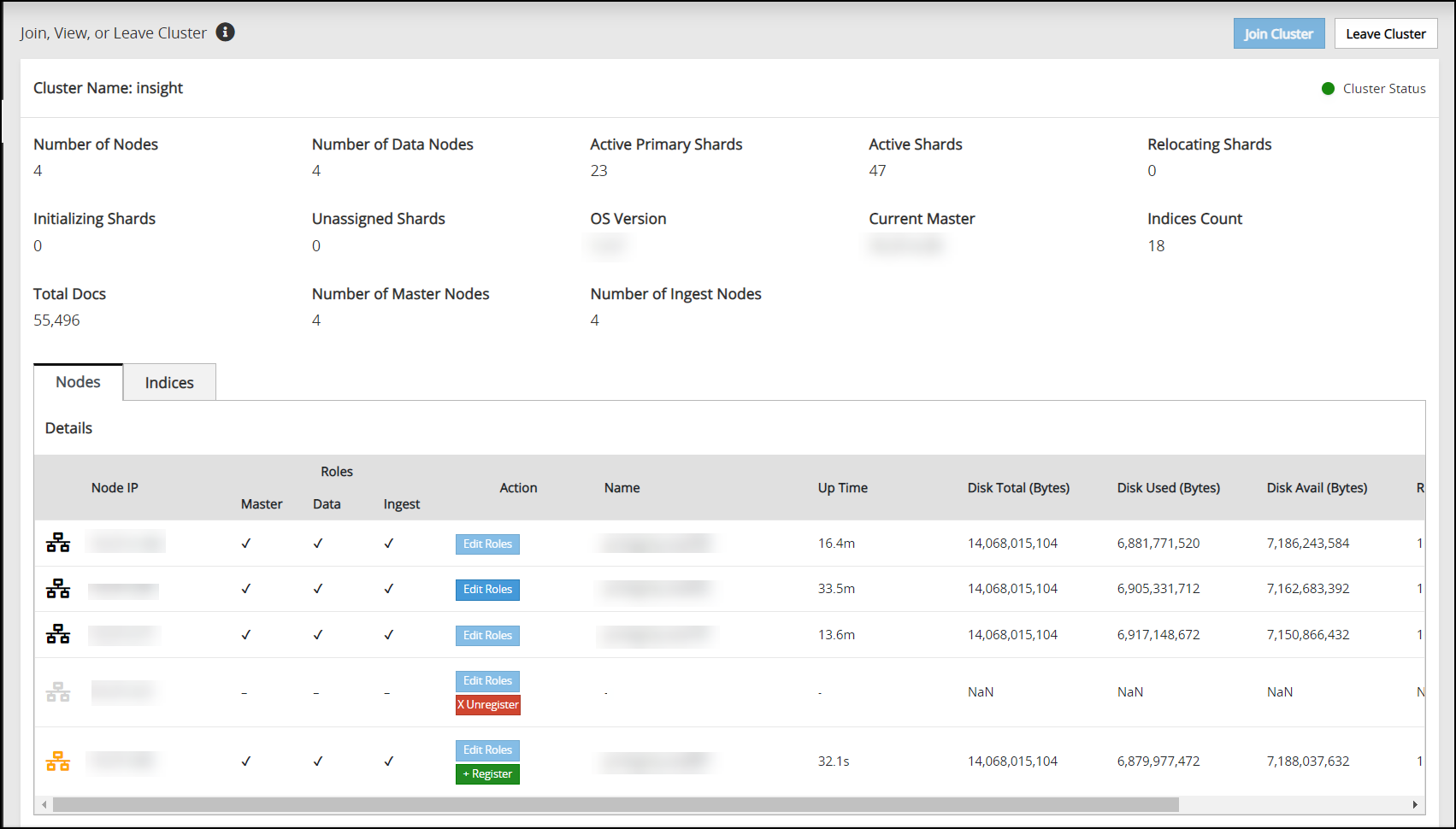

The Audit Store cluster information is updated when a node joins the Audit Store cluster. This information is updated across the Audit Store cluster. Hence, nodes must be added to an Audit Store cluster one at a time. Adding multiple nodes to the Audit Store at the same time using the ESA Web UI would make the cluster information inconsistent, make the Audit Store cluster unstable, and would lead to errors.

Ensure that the following prerequisites are met:

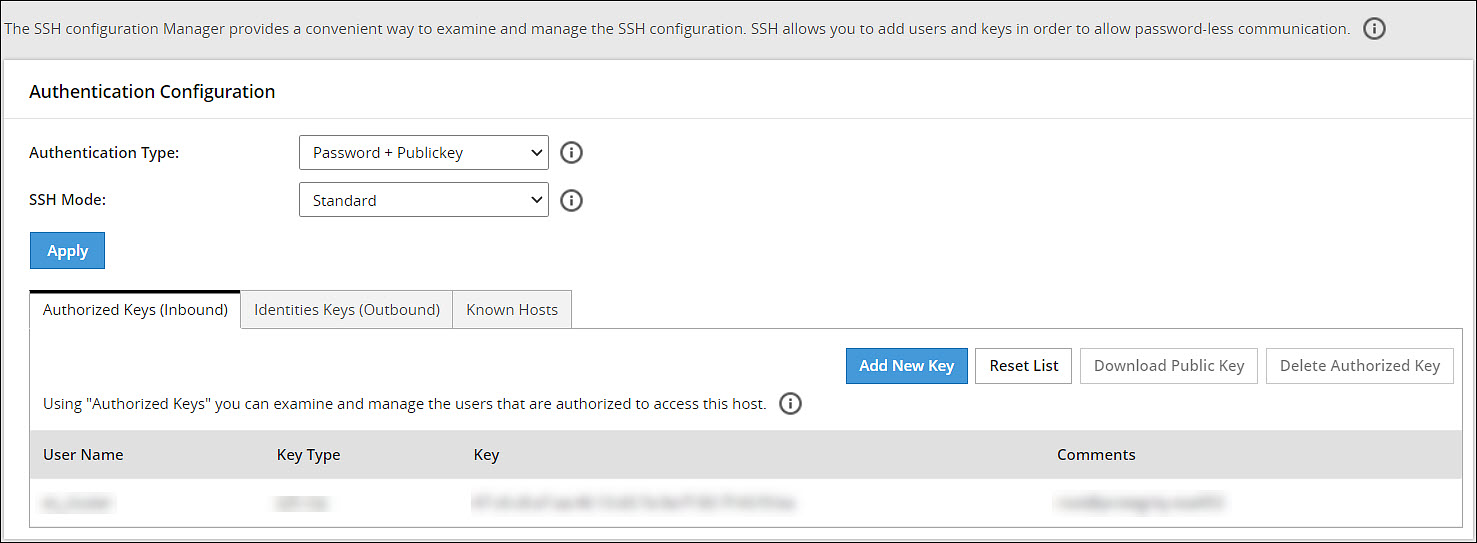

- Ensure that the SSH Authentication type on all the ESAs is set to Password + PublicKey. For more information about setting the authentication, refer here.

- Ensure that the Audit Store cluster is initialized on the node that must be joined.

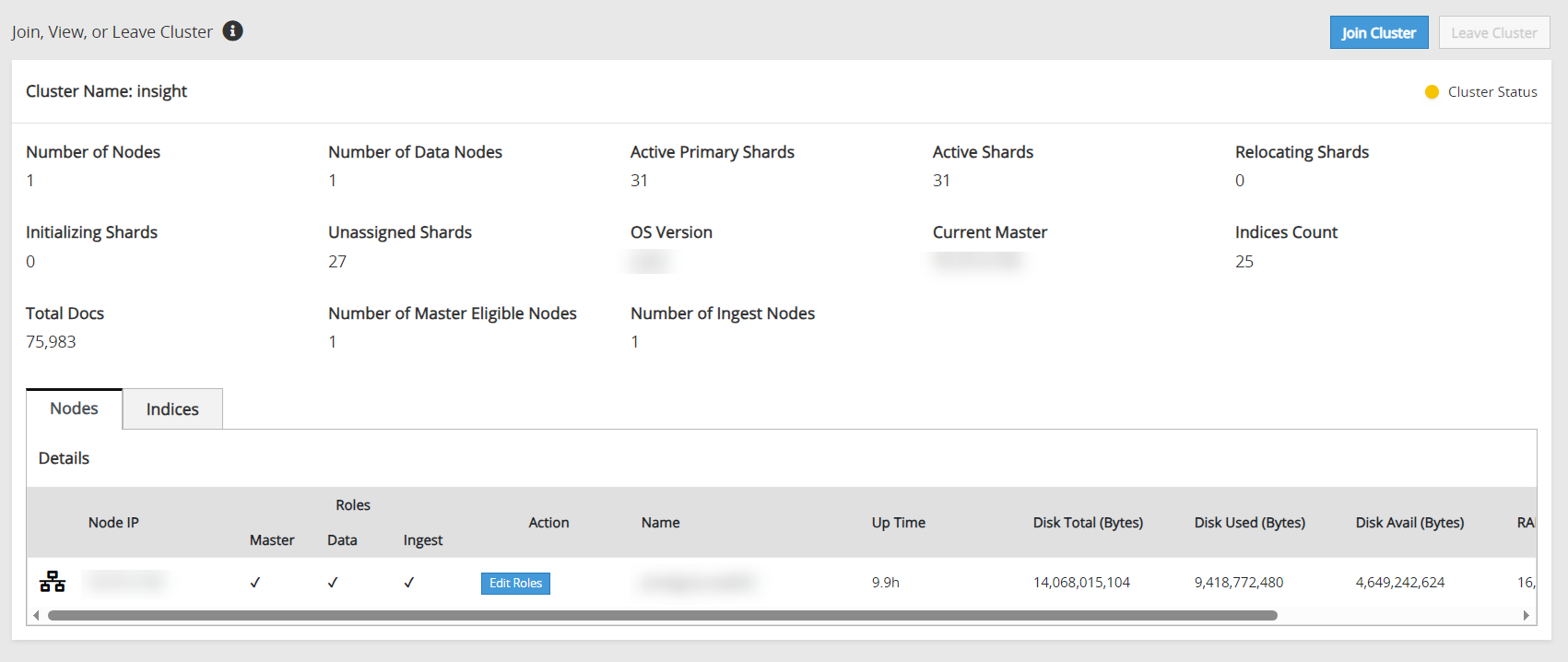

- The health status of the target Audit Store node is green or yellow.

- The health status of the Audit Store node that must be added to the cluster is green or yellow.

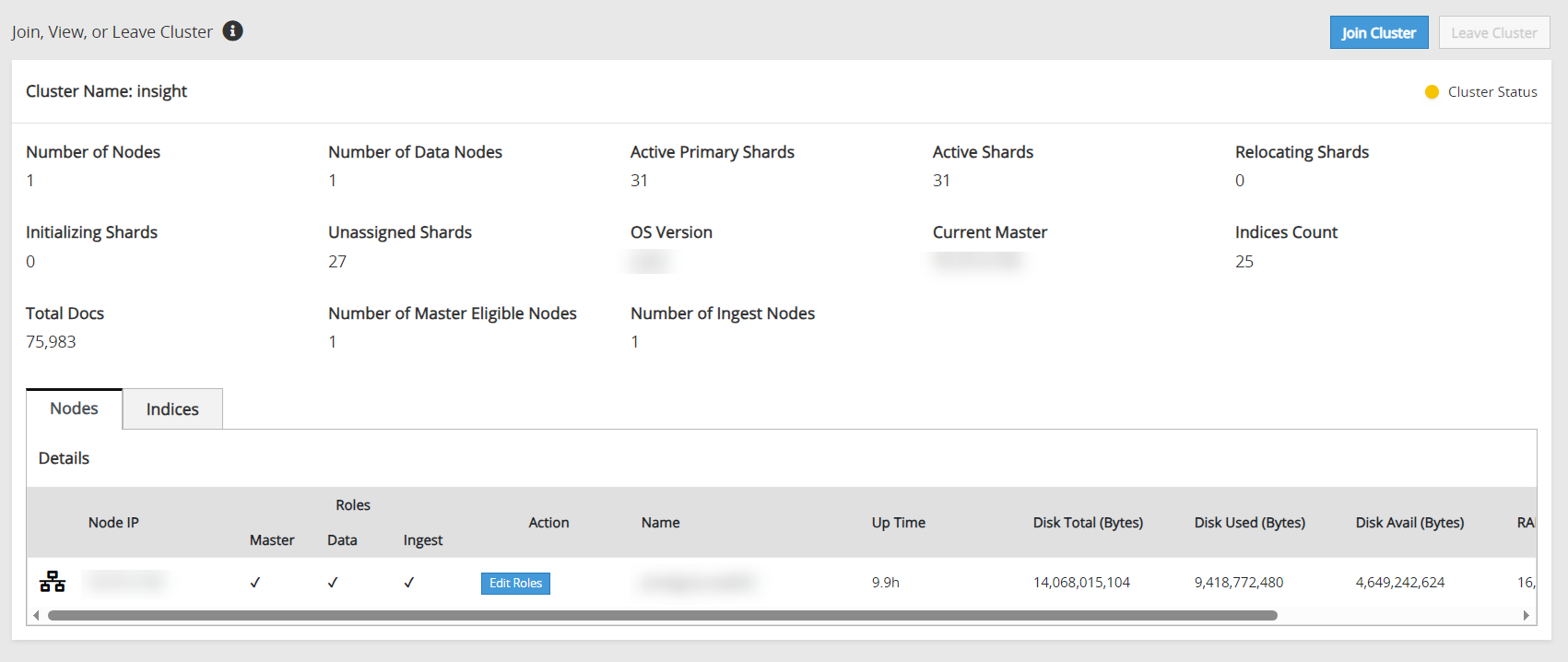

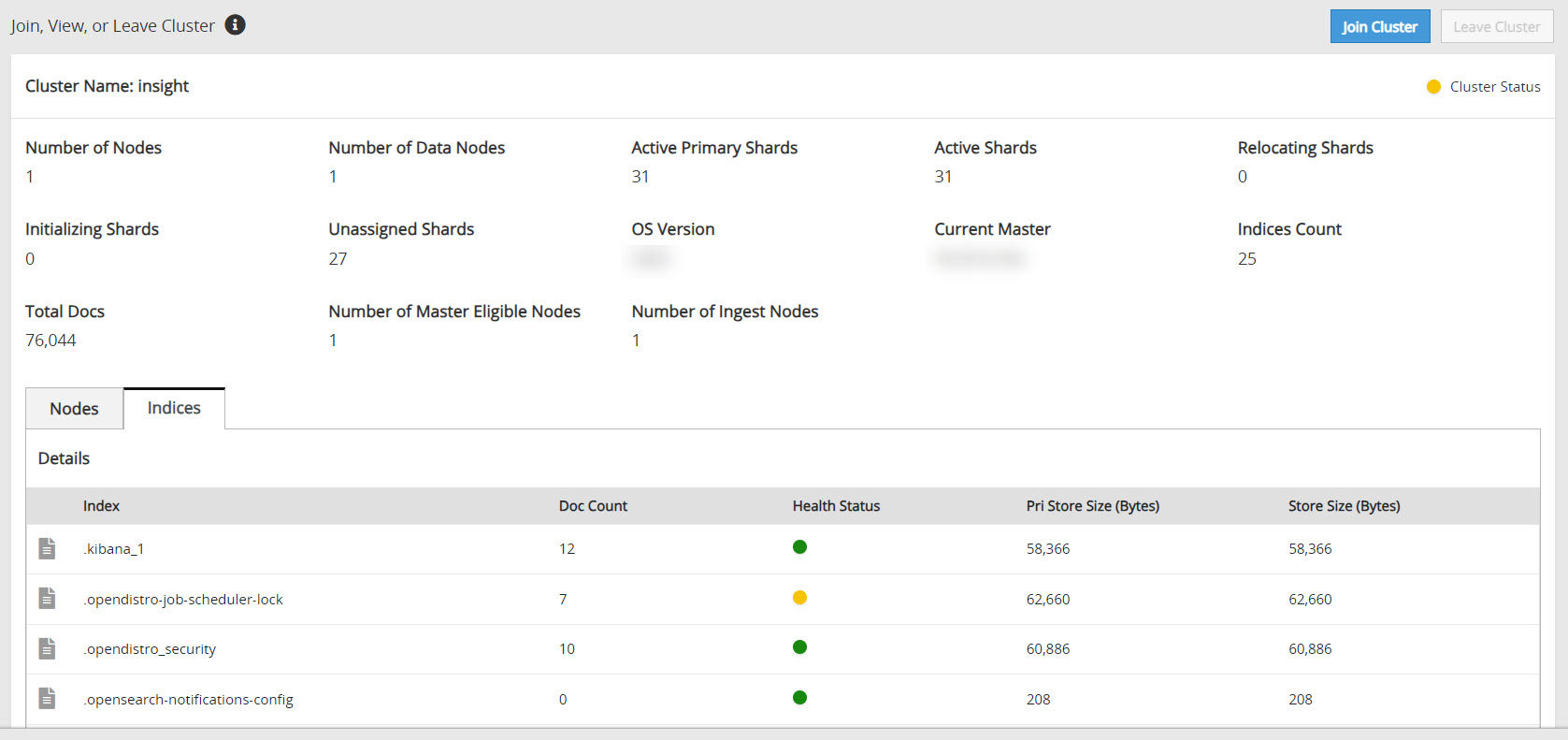

To check the health status of a node, log in to ESA Web UI of the node, click Audit Store > Cluster Management > Overview, and view the Cluster Status from the upper-right corner of the screen. For more information about the health status, refer here.

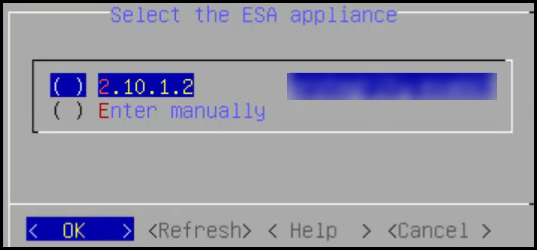

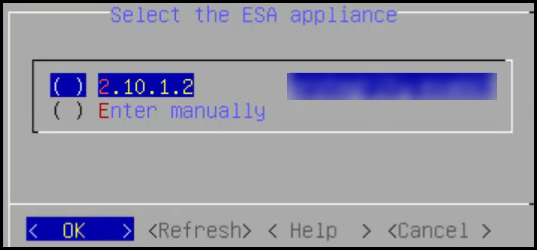

Log in to the Web UI of the second ESA.

Navigate to Audit Store > Initialize Analytics.

The following screen appears.

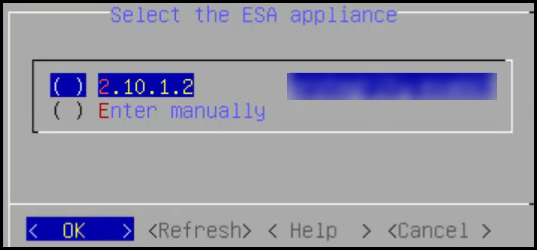

Click Join Cluster.

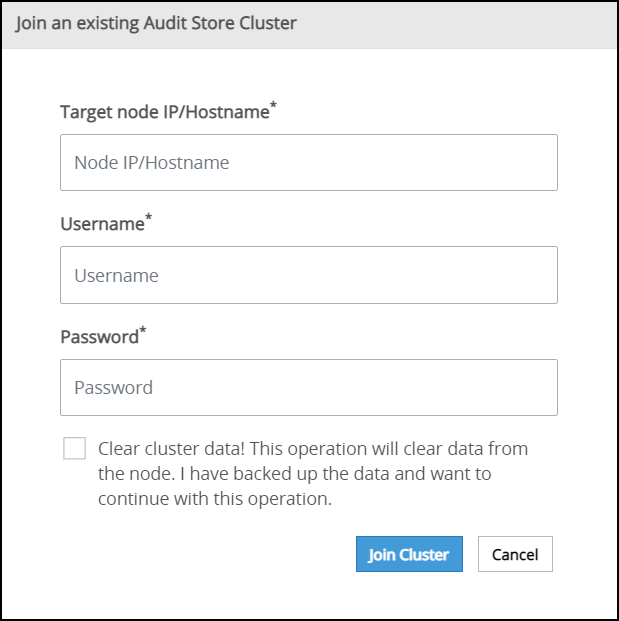

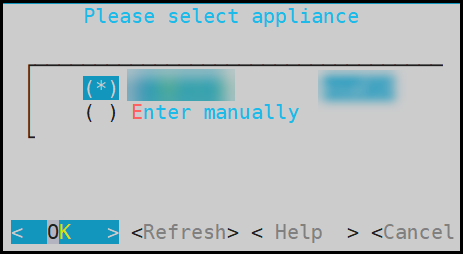

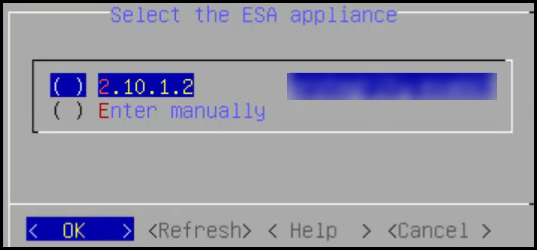

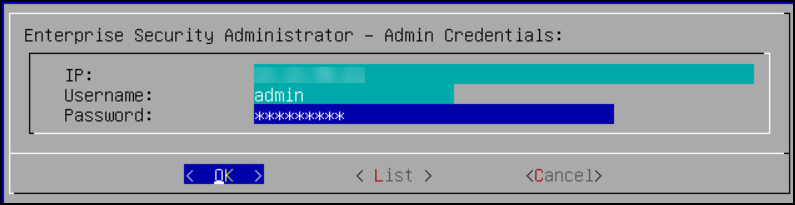

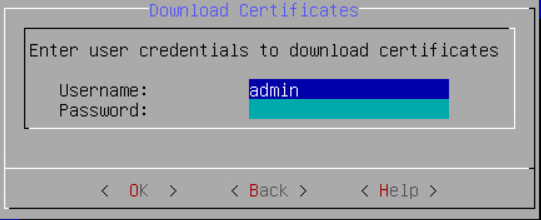

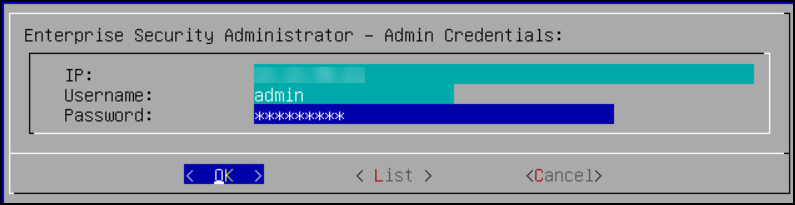

The following screen appears.

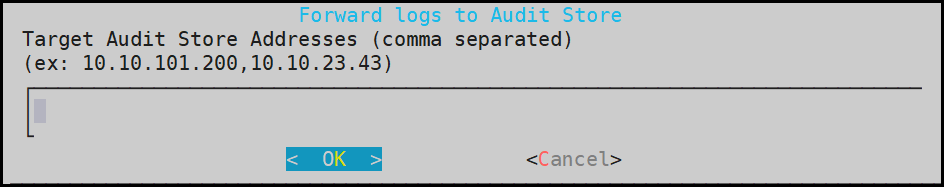

Specify the IP address or the hostname of the Audit Store cluster to join. Use hostname only if the hostname is resolved between the nodes.

Ensure that Protegrity Analytics is initialized and the Audit Store cluster is already created on the target node. A node cannot join the cluster if Protegrity Analytics is not initialized on the target node.

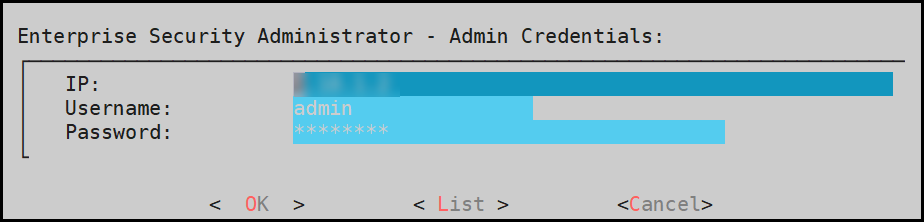

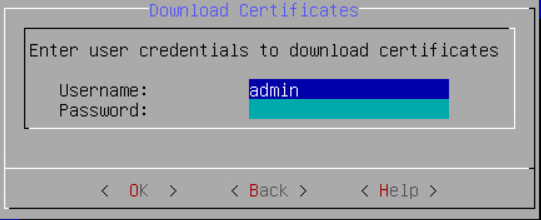

Specify the administrator username and password for the Audit Store cluster. If required, select the Clear cluster data check box to clear the Audit Store data from the current node before joining the Audit Store cluster. The check box will only be enabled if the node is a re-purposed ESA and has data, that is, if the Audit Store was earlier installed on the ESA.

Selecting this check box will delete the existing data on the re-purposed ESA before adding it to the Audit Store.

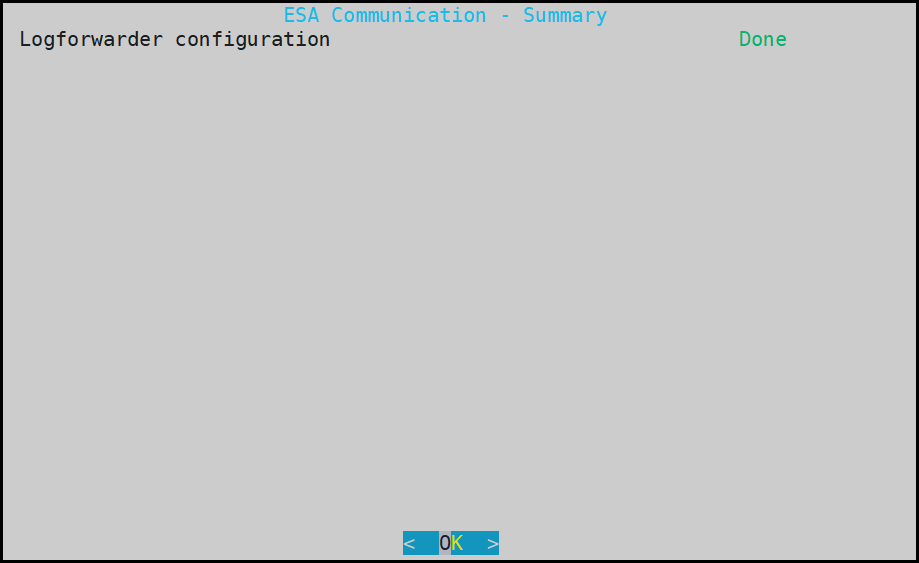

Click Join Cluster.

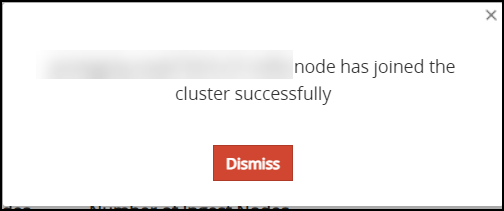

A confirmation message appears as shown in the following figure.

Click Dismiss.

The Index LIfecycle Management screen appears as shown in the following figure.

Repeat the steps to add the remaining ESAs as required. Add only one ESA at a time. After adding the ESA, wait till the cluster becomes stable. The cluster is stable when the cluster status indicator turns green.

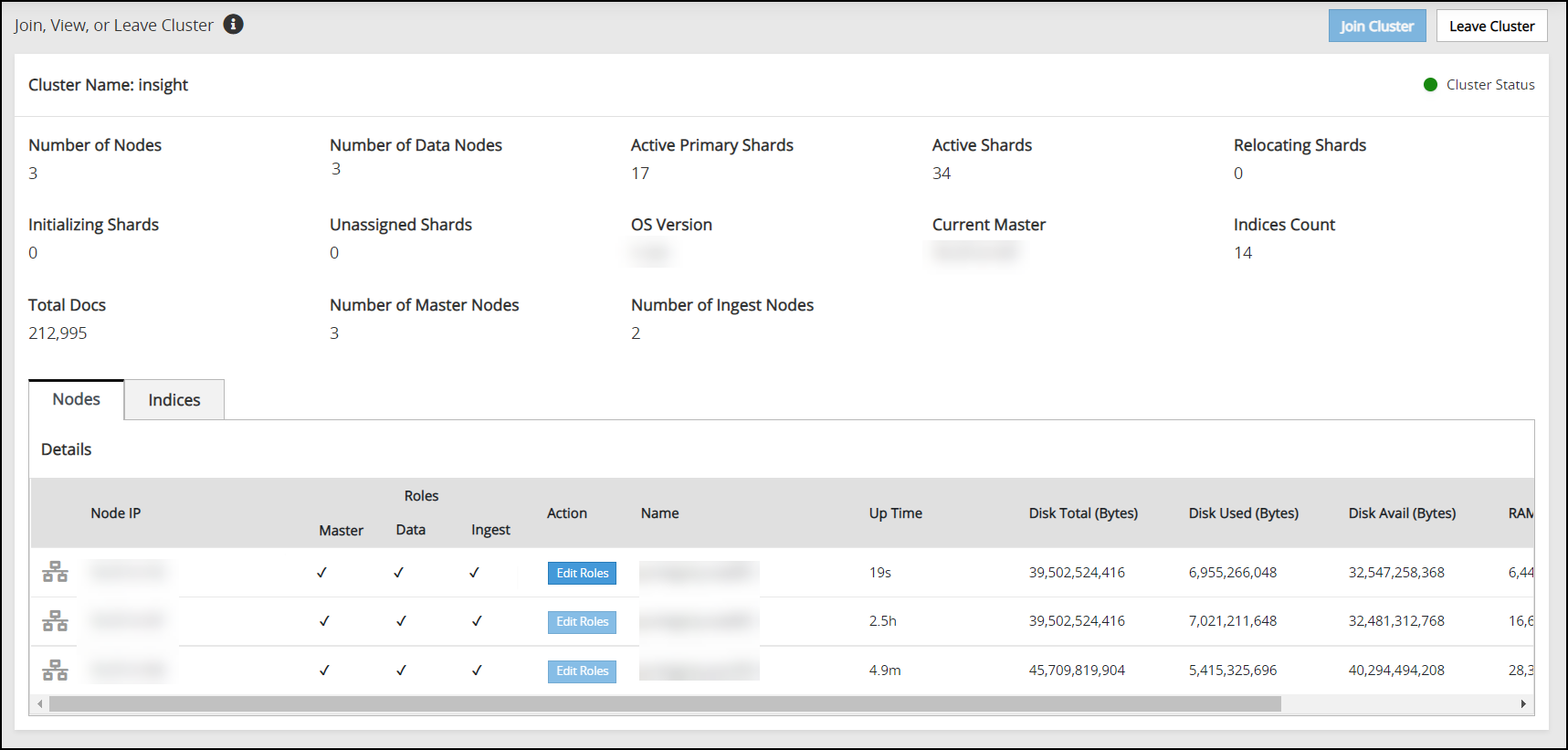

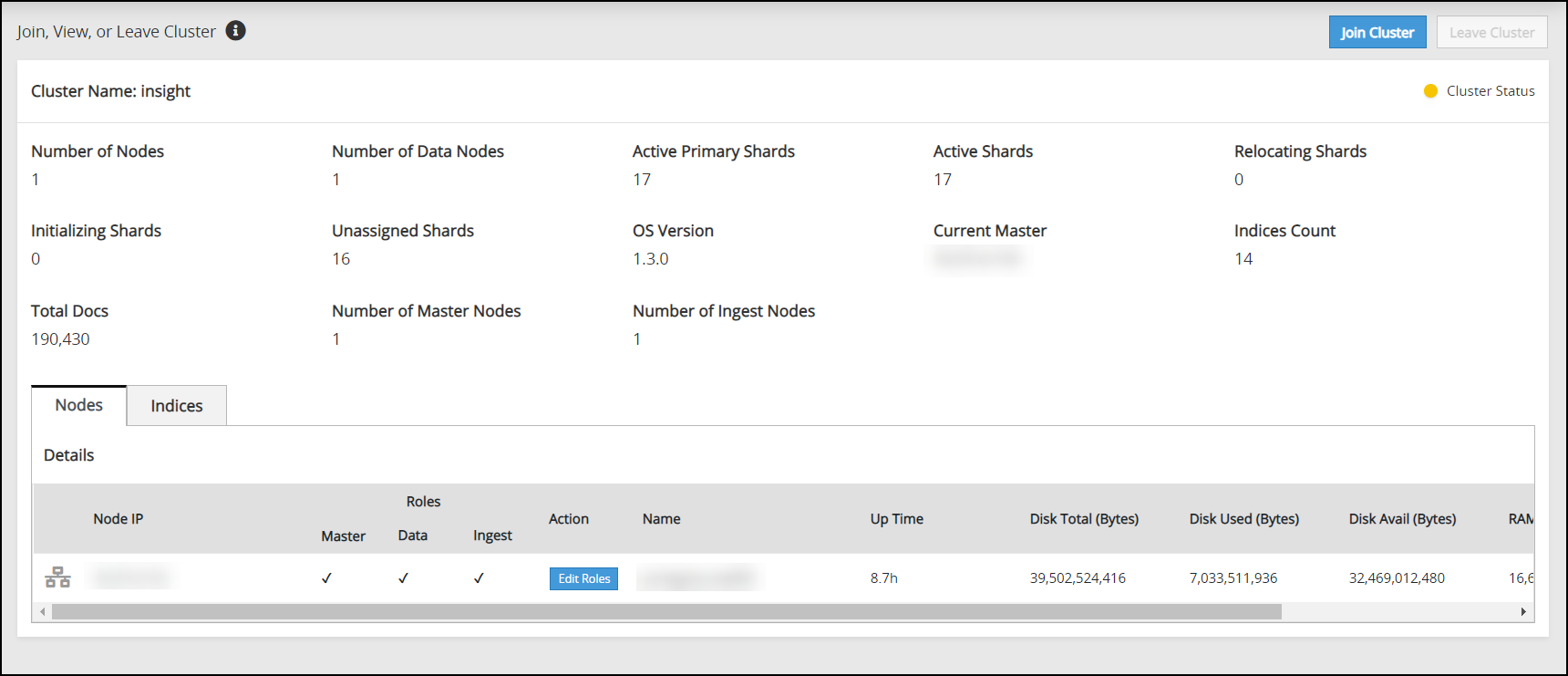

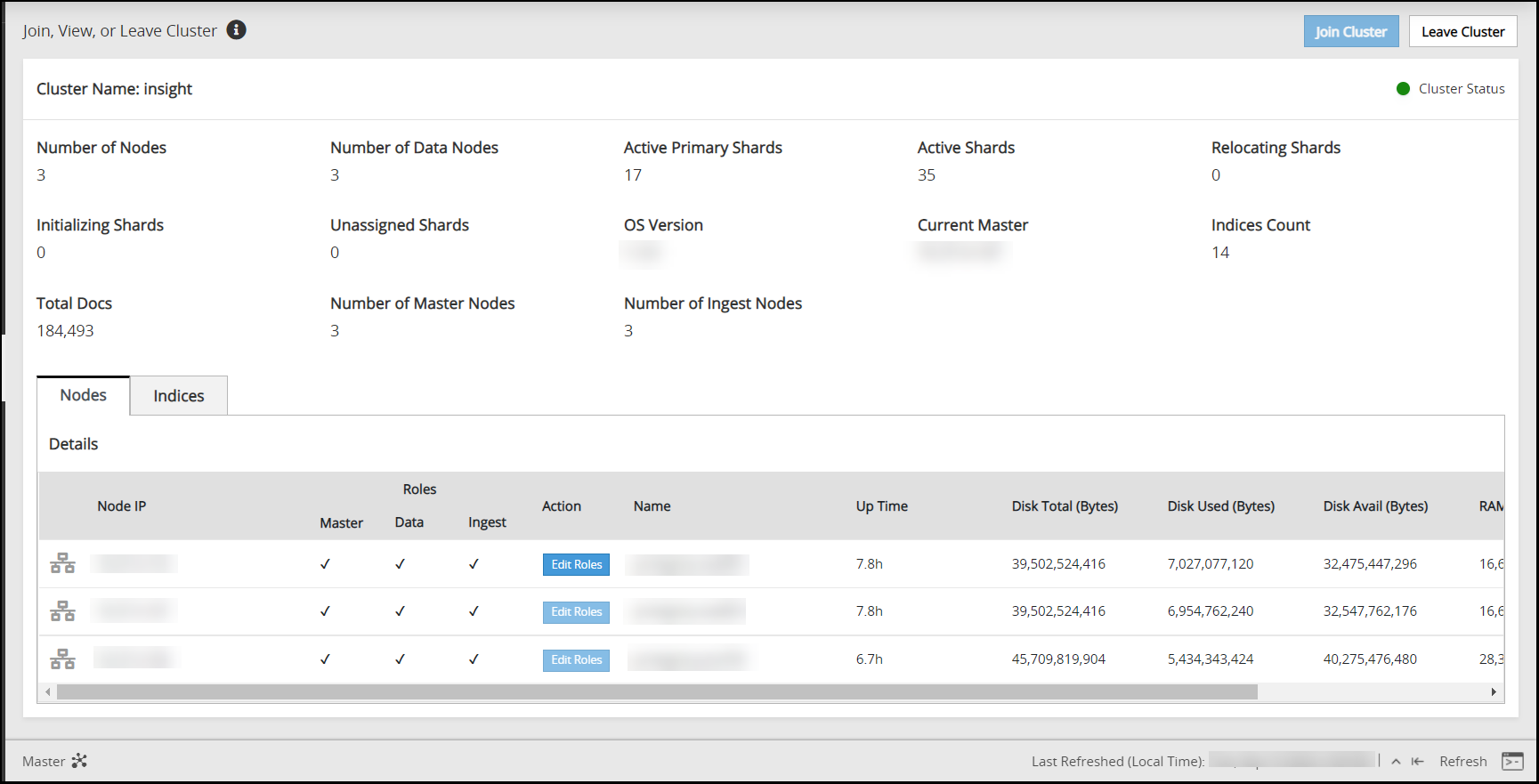

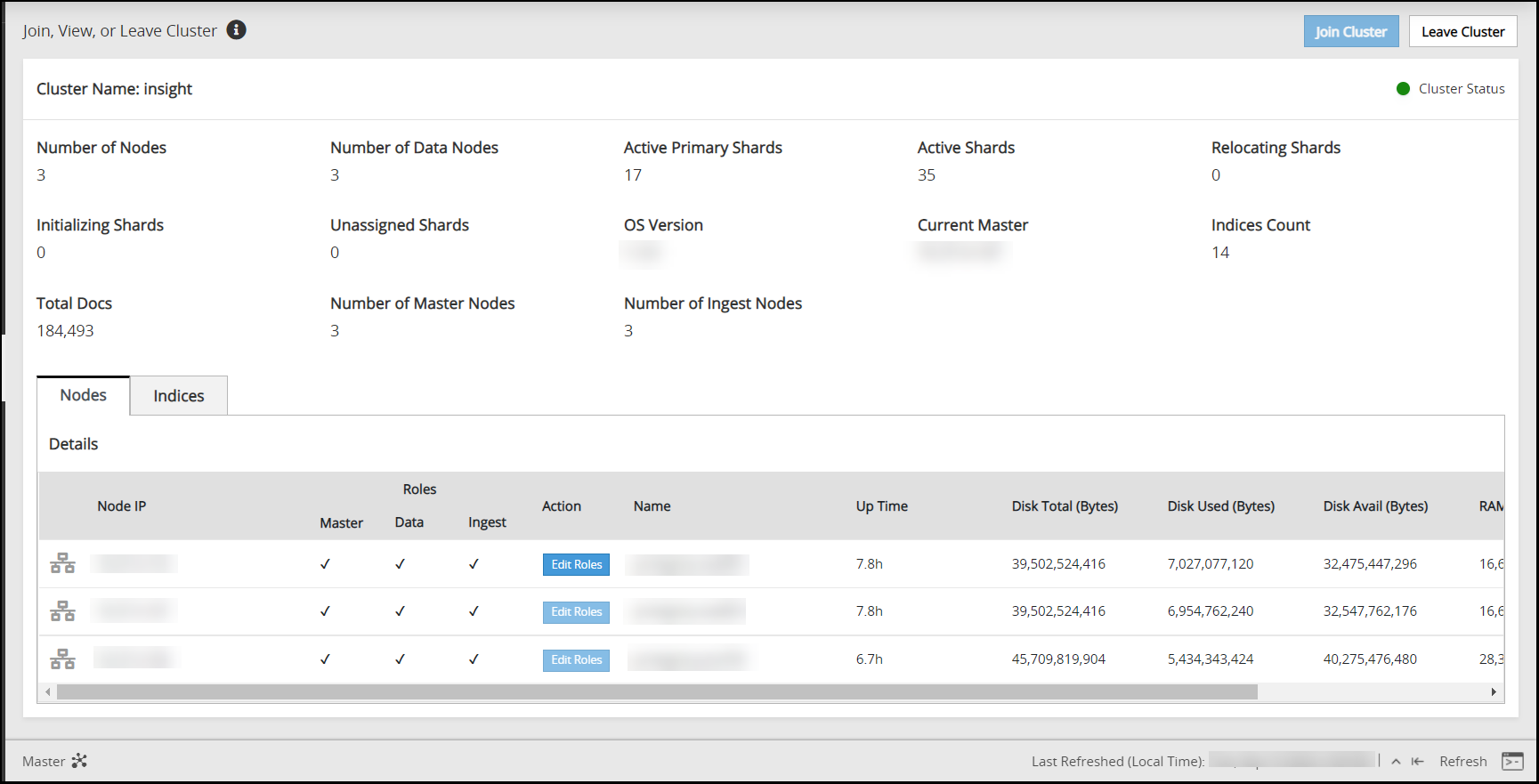

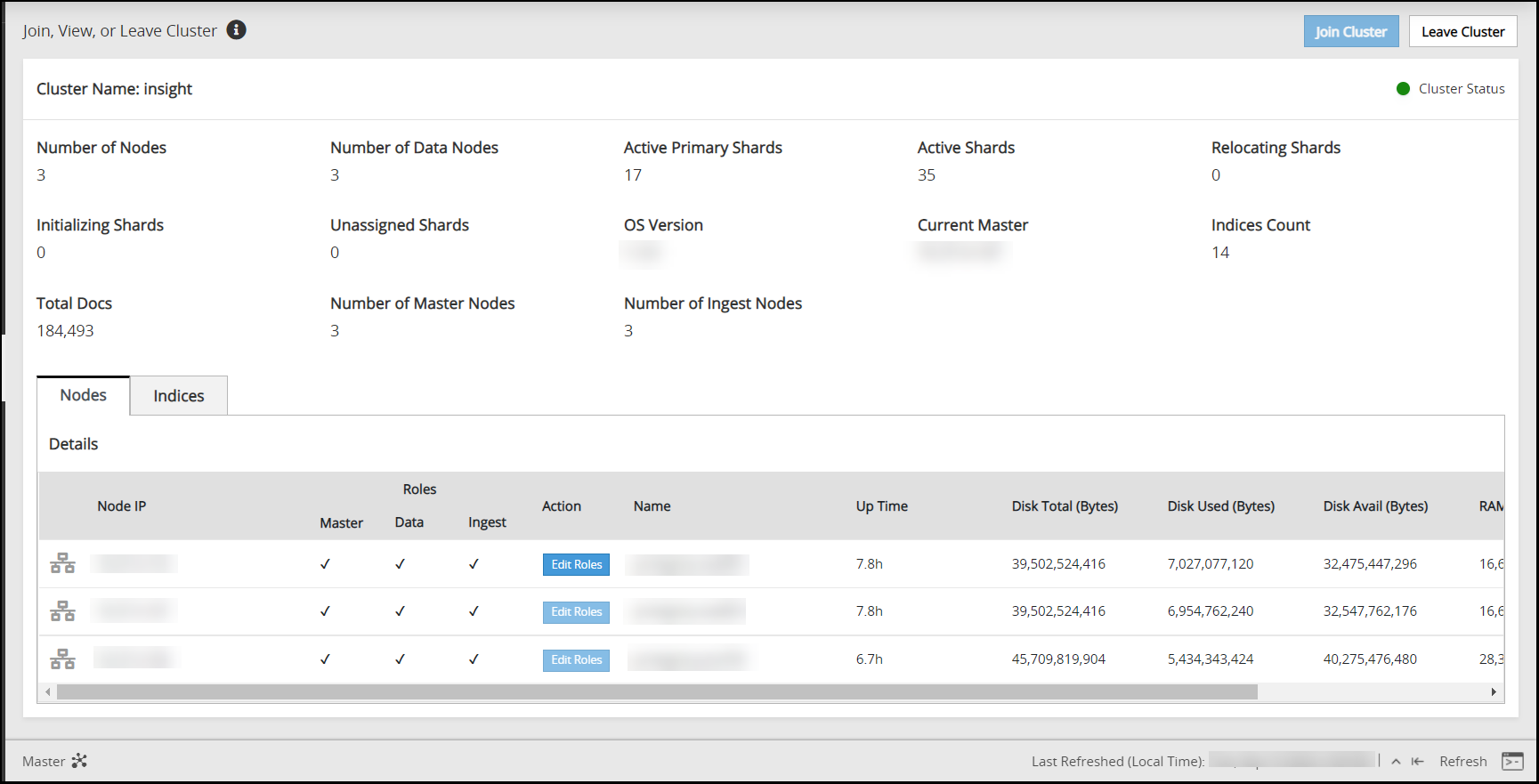

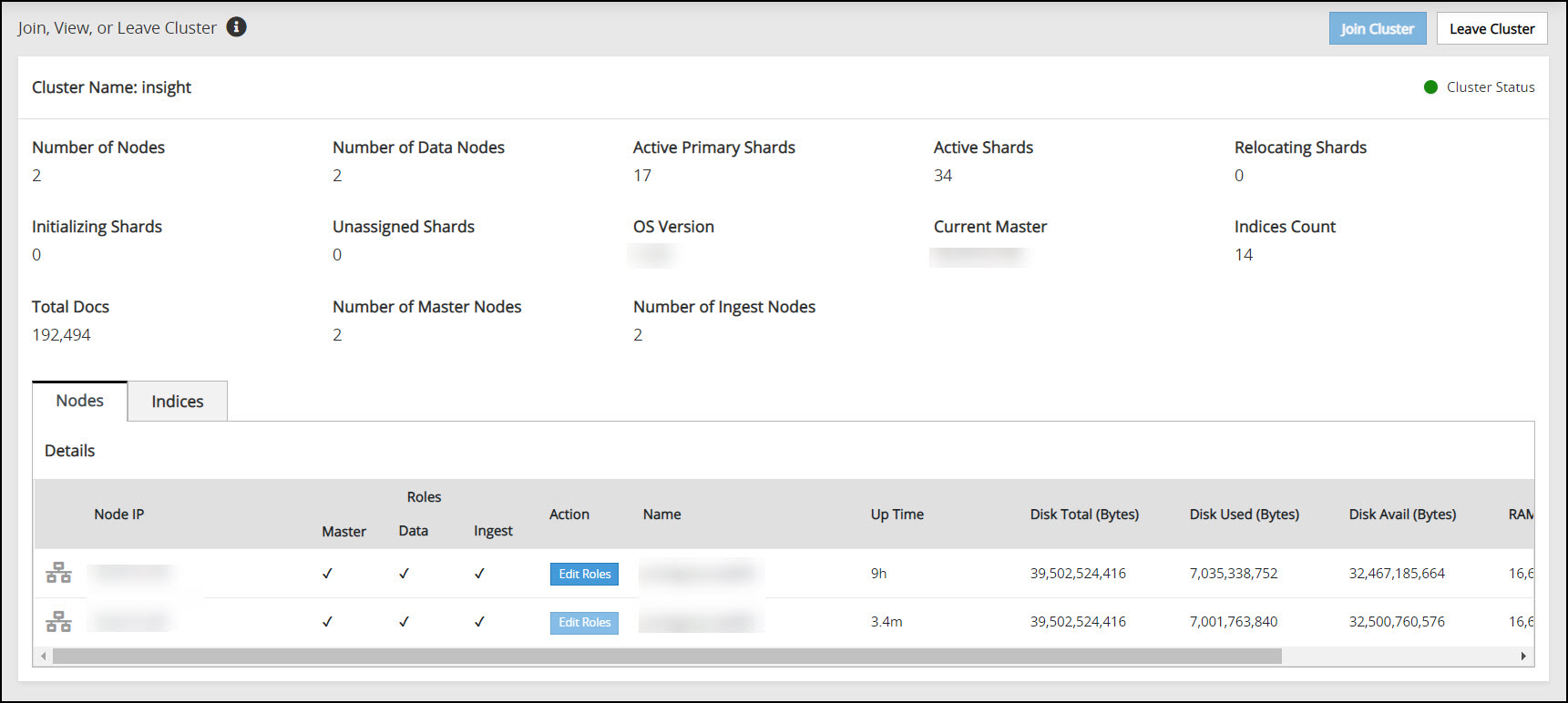

Verifying the Audit Store Cluster

View the Audit Store Management page to verify that the configurations were completed successfully using the steps provided here.

Log in to the ESA Web UI.

Navigate to the Audit Store > Cluster Management > Overview page.

Verify that the nodes are added to the cluster. The health of the nodes must be green.

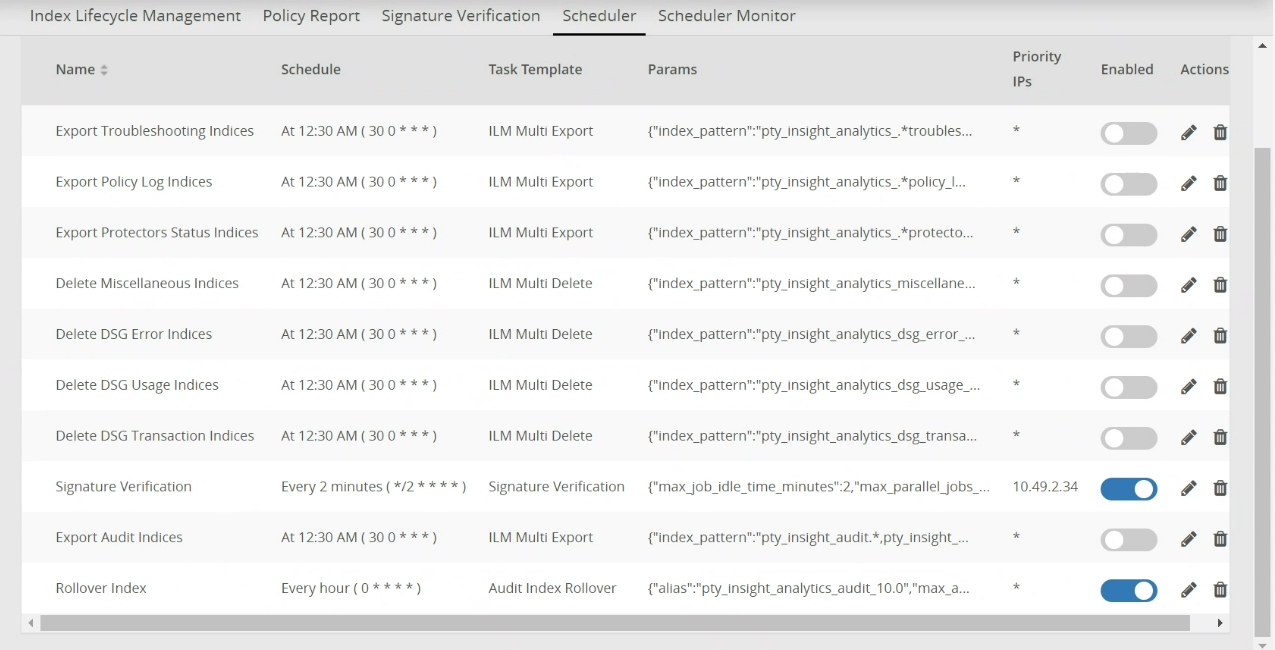

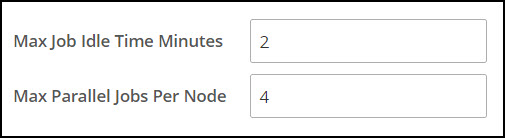

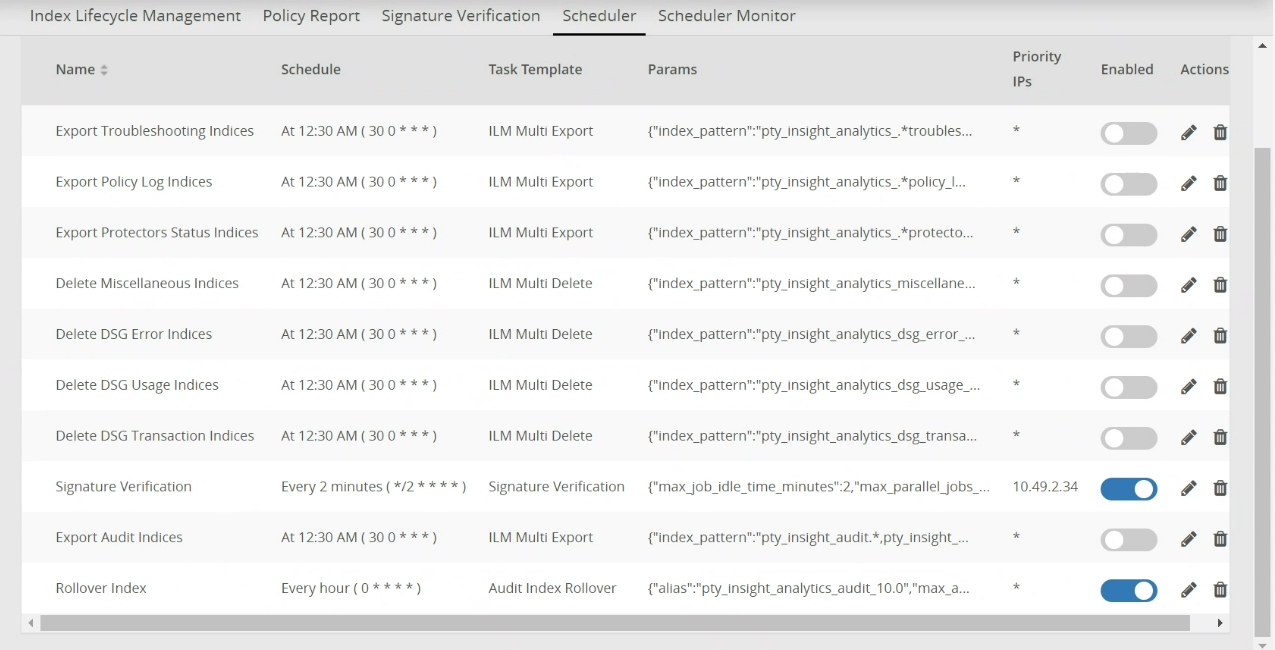

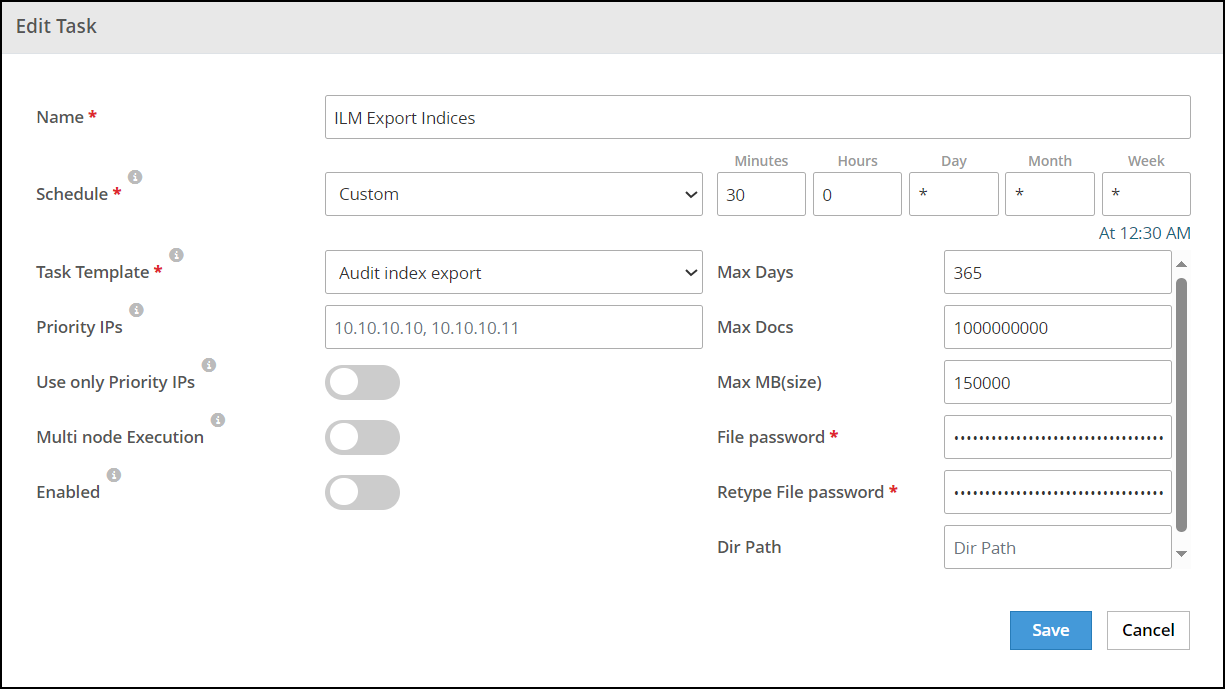

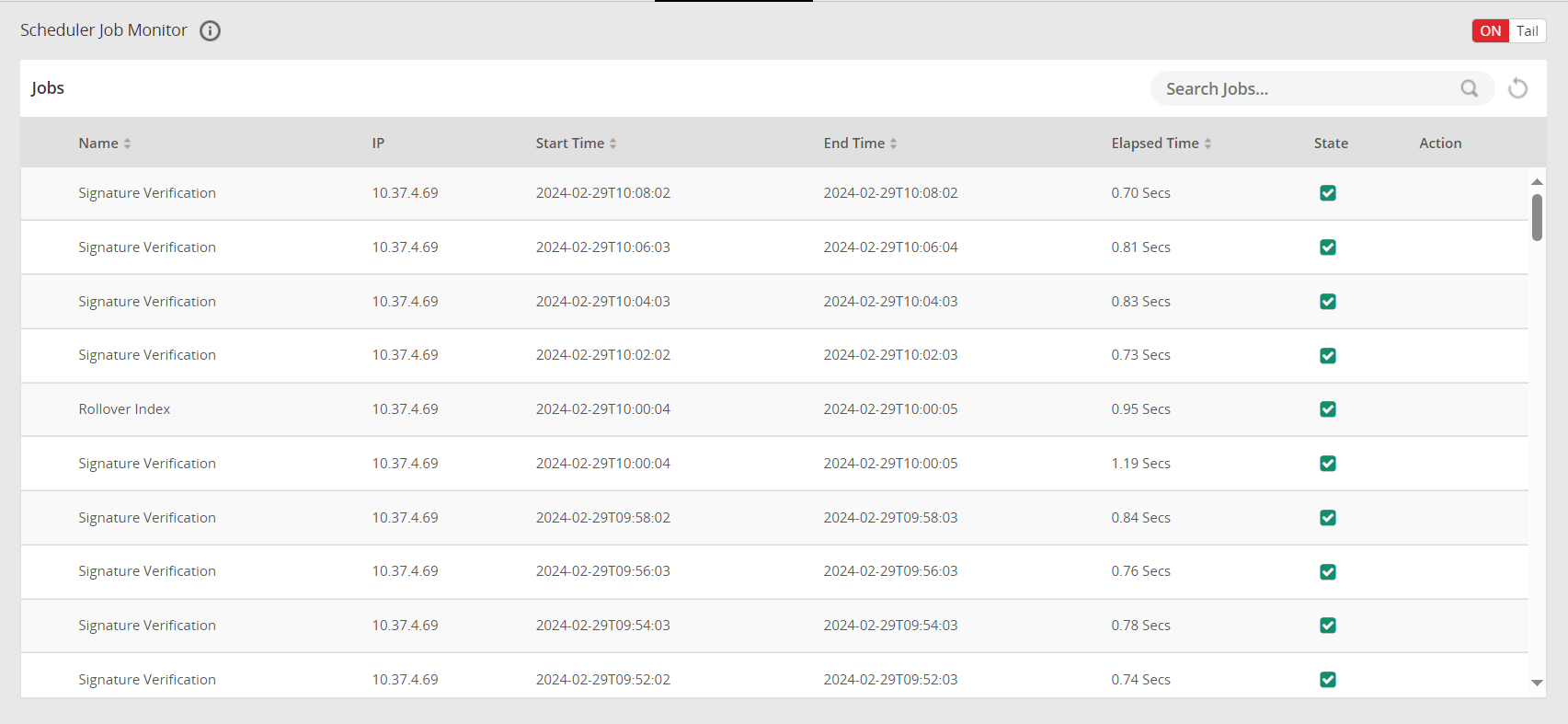

Updating the Priority IP List for Signature Verification

Signature verification jobs run on the ESA and use the ESA’s processing time. Update the priority IP list for the default signature verification jobs after setting up the system. By default, the primary ESA will be used for the priority IP. If there are multiple ESAs in the priority list, then additional ESAs are available to process the signature verifications jobs. This frees up the Primary ESA’s processor to handle other important tasks.

For example, if the maximum jobs to run on an ESA is set to 4 and 10 jobs are queued to run on 2 ESAs, then 4 jobs are started on the first ESA, 4 jobs are started on the second ESA, and 2 jobs will be queued to run till an ESA job slot is free to accept and run the queued job.

For more information about scheduling jobs, refer here.

For more information about signature verification jobs, refer here.

Use the steps provided in this section to update the priority IP list.

Log in to the ESA Web UI.

Navigate to Audit Store > Analytics > Scheduler.

From the Action column, click the Edit icon (

) for the Signature Verification task.

) for the Signature Verification task.Update the Priority IPs filed with the list of the ESAs available separating the IPs using commas.

Click Save.

Enter the root password, to apply the updates.

2 - Configuration

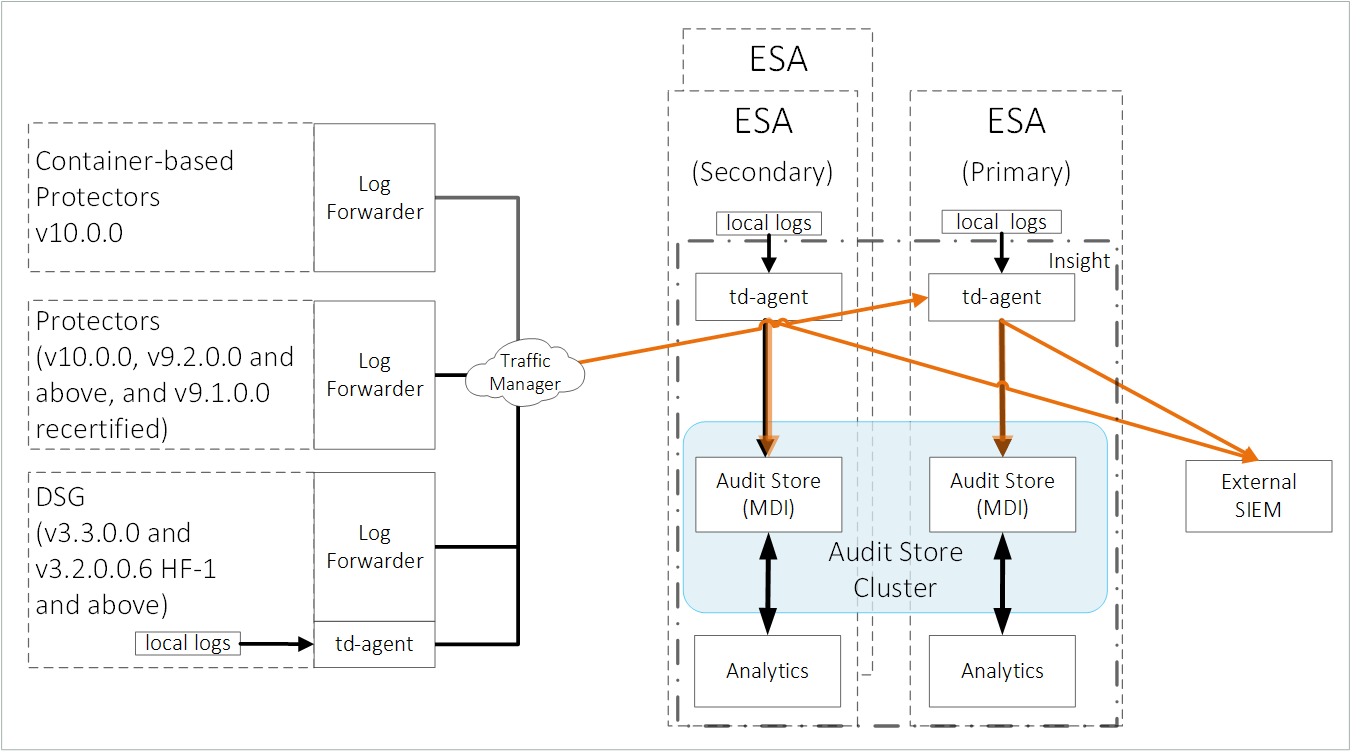

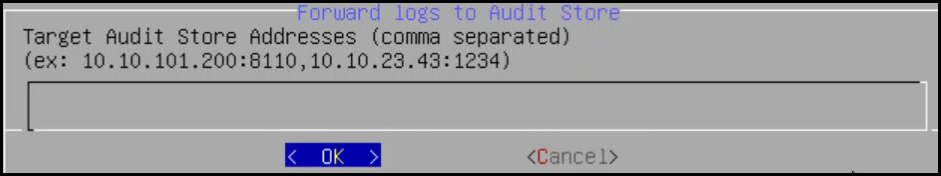

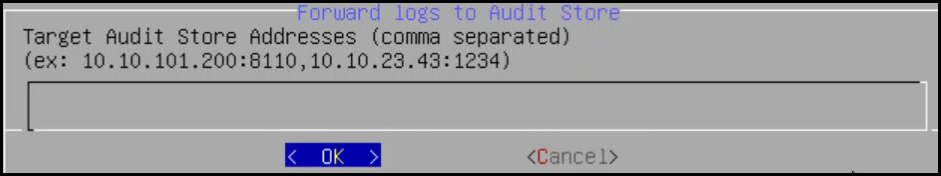

2.1 - Sending logs to an external security information and event management (SIEM)

This is an optional step.

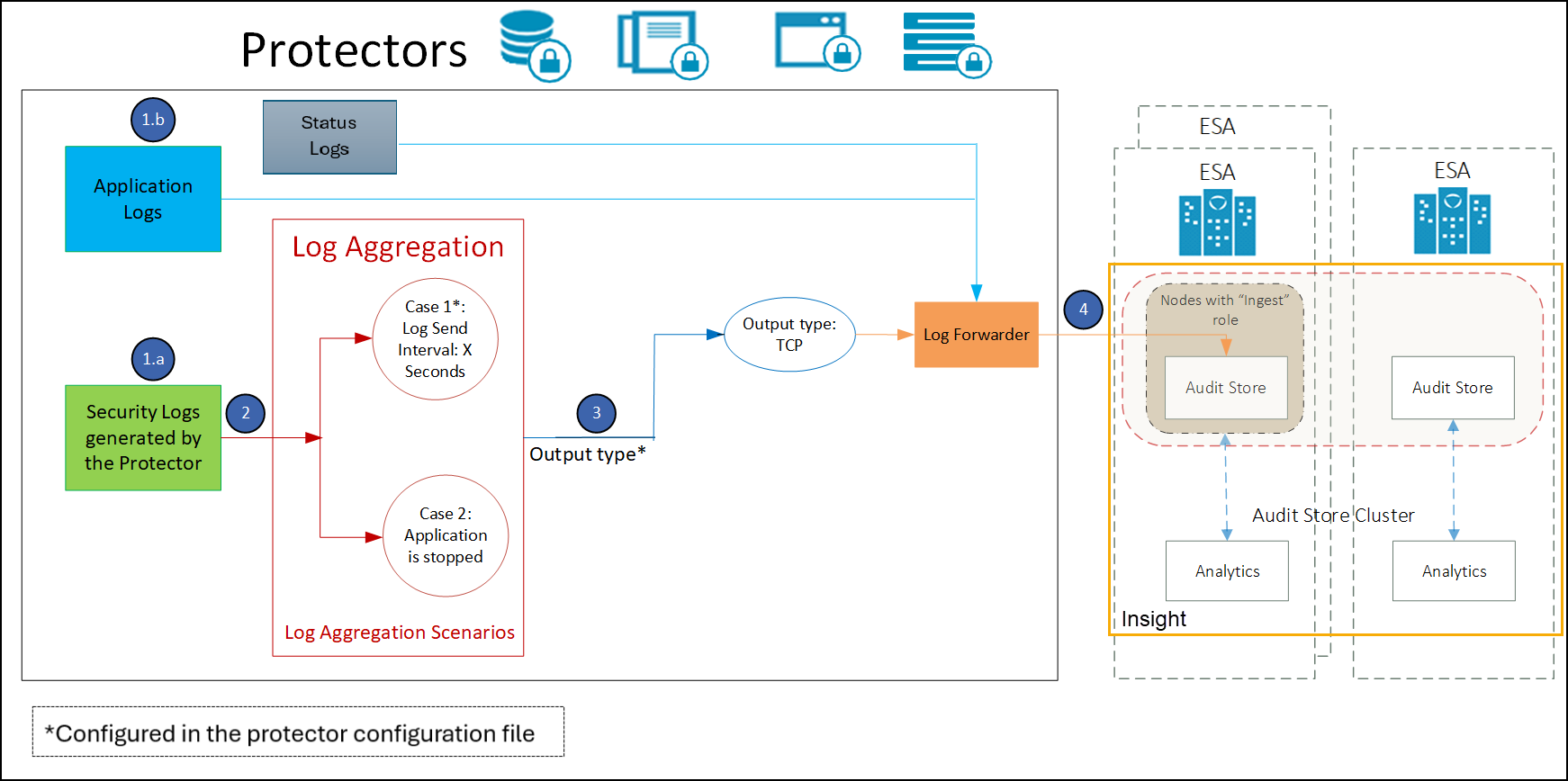

In the default setup, the logs are sent from the protectors directly to the Audit Store on the ESA using the Log Forwarder on the protector.

For more information about the default flow, refer here.

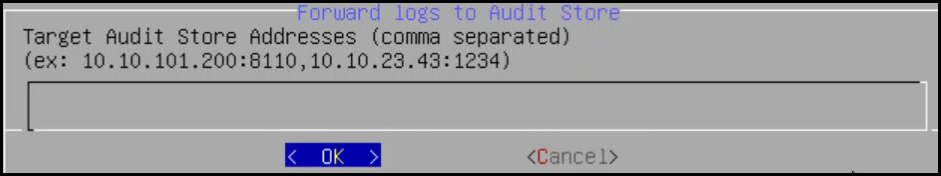

To forward logs to Insight and the external SIEM, the td-agent is configured to listen for protector logs. The protectors are configured to send the logs to the td-agent on the ESA. Finally, the td-agent is configured to forward the logs to the required locations.

Ensure that the logs are sent to the ESA and the external SIEM using the steps provided in this section. The logs sent to the ESA are required by Protegrity support for troubleshooting the system in case of any issues. Also, ensure that the ESA hostname specified in the configuration files are updated when the hostname of the ESA is changed.

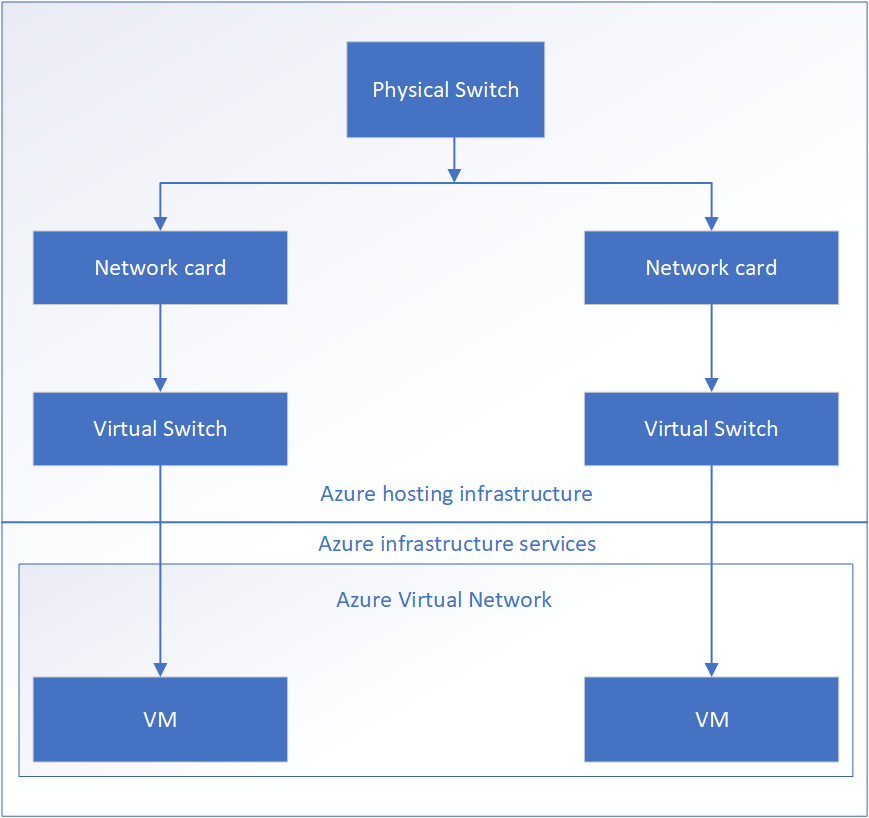

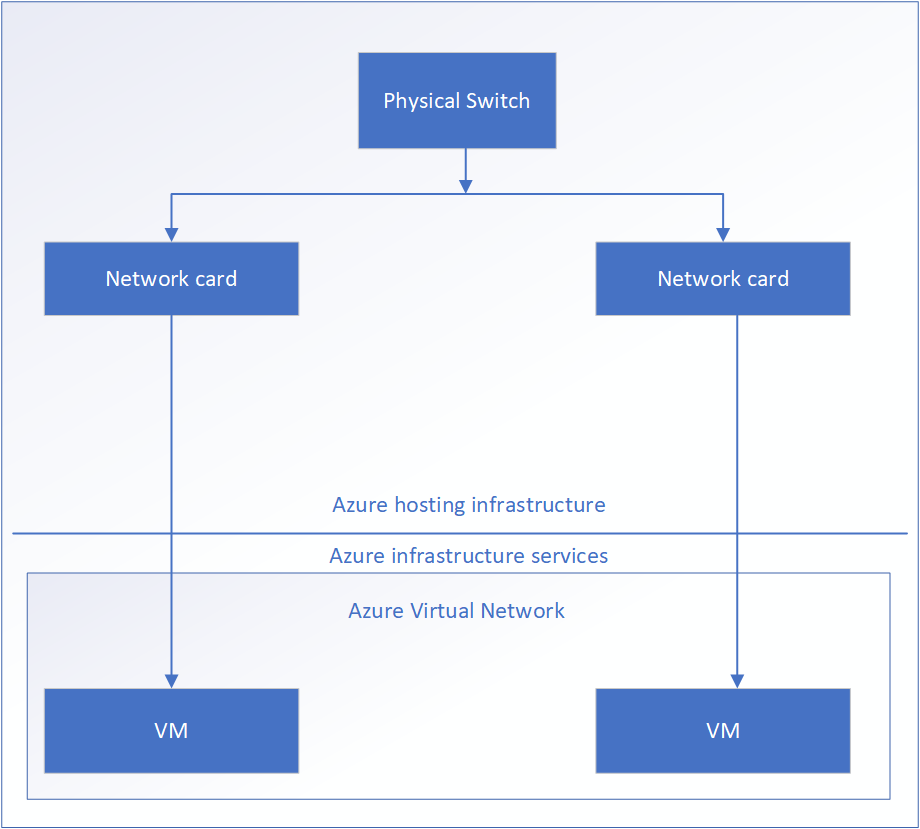

An overview architecture diagram for sending logs to Insight and the external SIEM is shown in the following figure.

The ESA v10.1.0 only supports protectors having the PEP server version 1.2.2+42 and later.

Forward the logs generated on the protector to Insight and the external SIEM using the following steps. Ensure that all the steps are completed in the order specified.

Set up td-agent to receive protector logs.

Send the protector logs to the td-agent.

Configure td-agent to forward logs to the external endpoint.

1. Setting up td-agent to receive protector logs

Configure the td-agent to listen to logs from the protectors and to forward the logs received to Insight.

To configure td-agent:

Add the port 24284 to the rule list on the ESA. This port is configured for the ESA to receives the protector logs over a secure connection.

For more information about adding rules, refer here.

Log in to the CLI Manager of the Primary ESA.

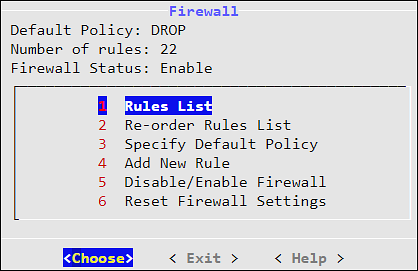

Navigate to Networking > Network Firewall.

Enter the password for the root user.

Select Add New Rule and select Choose.

Select Accept and select Next.

Select Manually.

Select TCP and select Next.

Specify 24284 for the port and select Next.

Select Any and select Next.

Select Any and select Next.

Specify a description for the rule and select Confirm.

Select OK.

Open the OS Console on the Primary ESA.

Log in to the CLI Manager of the Primary ESA.

Navigate to Administration > OS Console.

Enter the root password and select OK.

Enable td-agent to receive logs from the protector.

Navigate to the config.d directory using the following command.

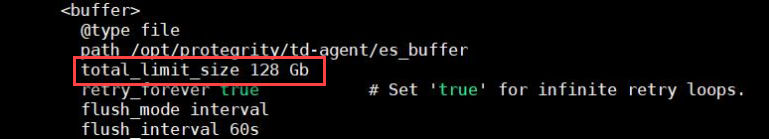

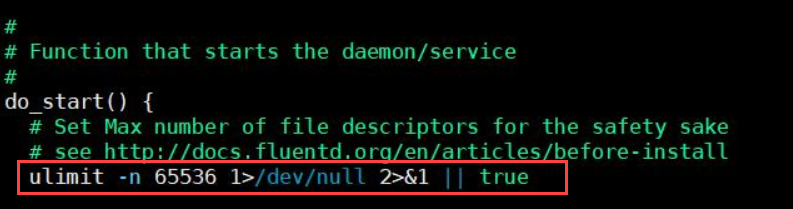

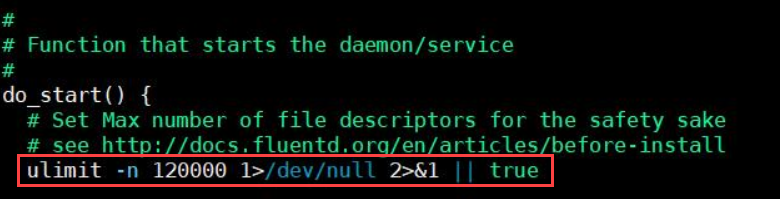

cd /opt/protegrity/td-agent/config.dEnable the INPUT_forward_external.conf file using the following command. Ensure that the certificates exist in the directory that is specified in the INPUT_forward_external.conf file. If an IP address or host name is specified for the bind parameter in the file, then ensure that the certificates are updated to match the host name or IP address specified. If the host name, IP address, or domain name of the system is updated, then the bind value in this file must be updated. For more information about updating the bind value, refer here.

mv INPUT_forward_external.conf.disabled INPUT_forward_external.conf

Optional: Update the configuration settings to improve the SSL/TLS server configuration on the system.

Navigate to the config.d directory using the following command.

cd /opt/protegrity/td-agent/config.dOpen the INPUT_forward_external.conf file using a text editor.

Add the list of ciphers to the file. Update and use the ciphers that are required. Enter the entire line of code on a single line and retain the formatting of the file.

<source> @type forward bind <Hostname of the Primary ESA> port 24284 <transport tls> ca_path /mnt/ramdisk/certificates/mng/CA.pem cert_path /mnt/ramdisk/certificates/mng/server.pem private_key_path /mnt/ramdisk/certificates/mng/server.key ciphers "ALL:!aNULL:!eNULL:!SSLv2:!SSLv3:!DHE:!AES256-SHA:!CAMELLIA256-SHA:!AES128-SHA:!CAMELLIA128-SHA:!TLS_RSA_WITH_RC4_128_MD5:!TLS_RSA_WITH_RC4_128_SHA:!TLS_RSA_WITH_3DES_EDE_CBC_SHA:!TLS_DHE_RSA_WITH_3DES_EDE_CBC_SHA:!TLS_RSA_WITH_SEED_CBC_SHA:!TLS_DHE_RSA_WITH_SEED_CBC_SHA:!TLS_ECDHE_RSA_WITH_RC4_128_SHA:!TLS_ECDHE_RSA_WITH_3DES_EDE_CBC_SHA" </transport> </source>Save and close the file.

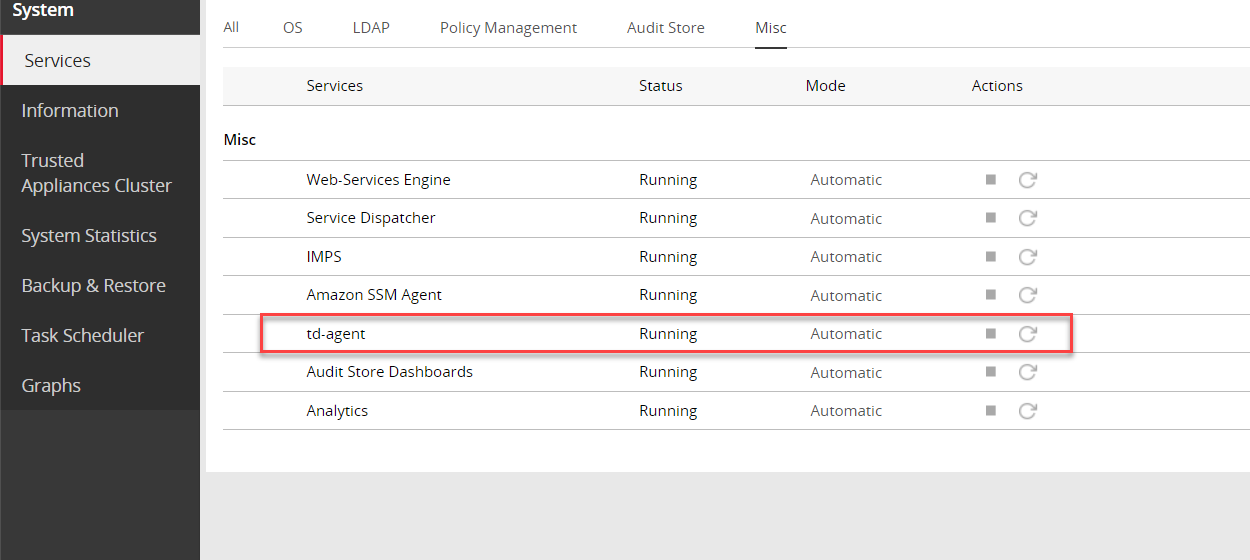

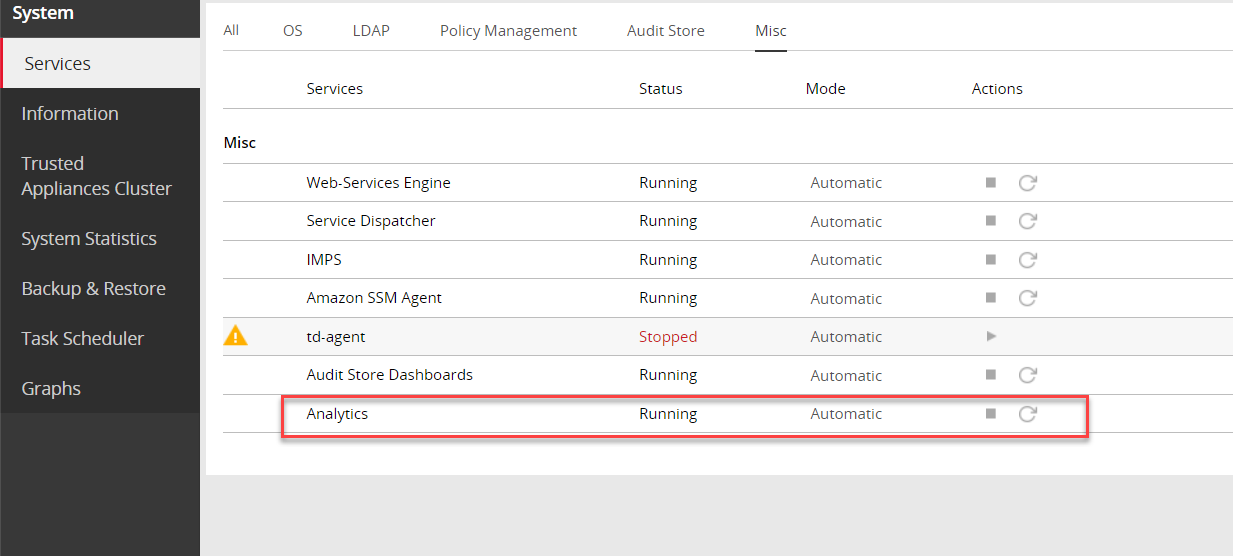

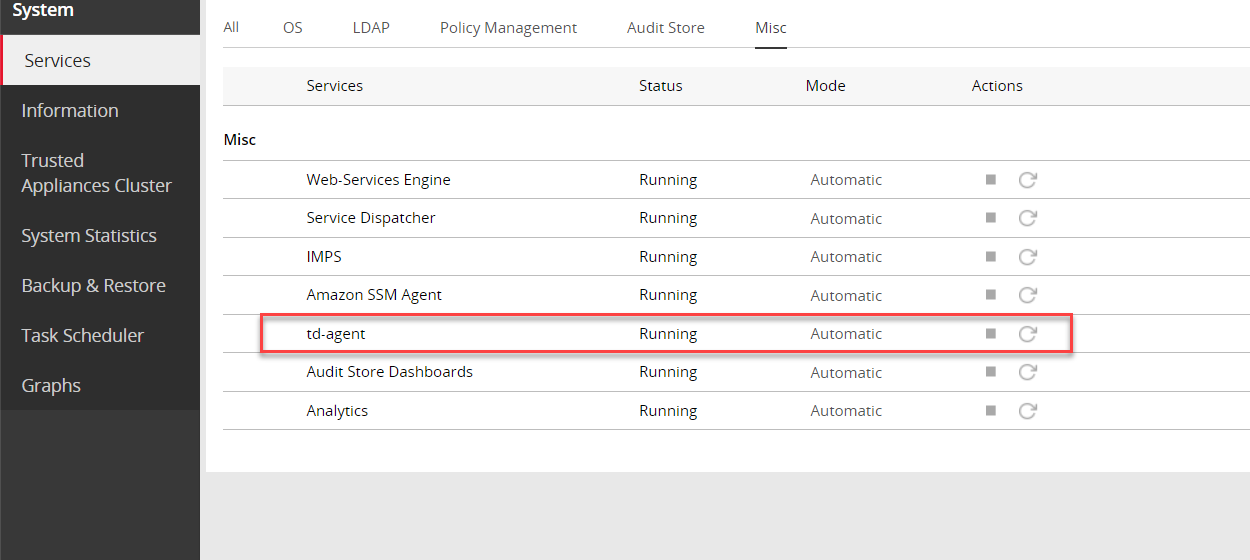

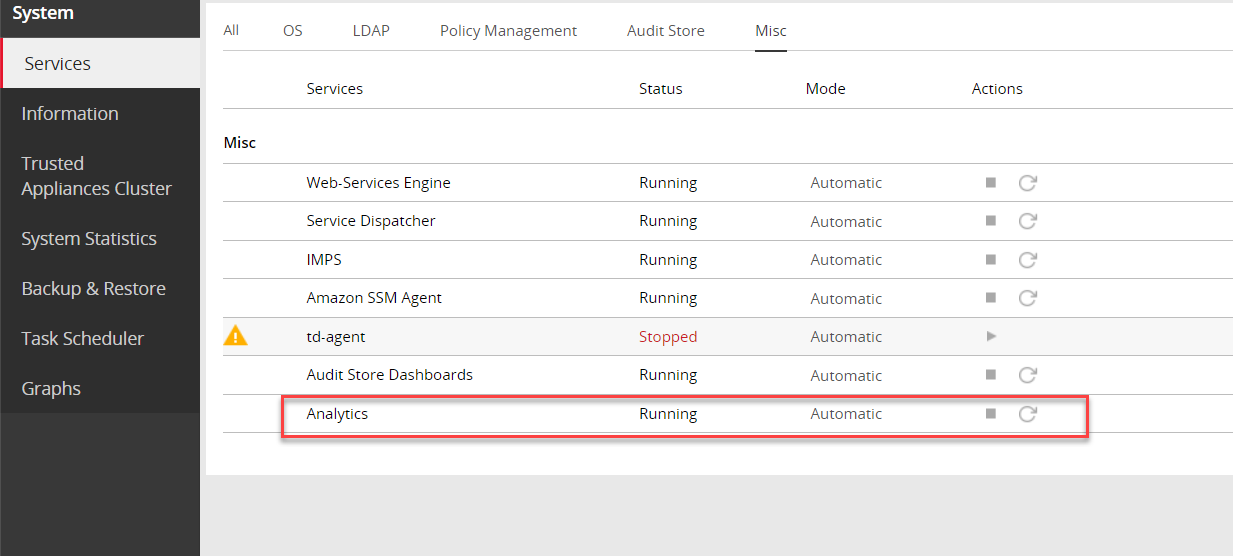

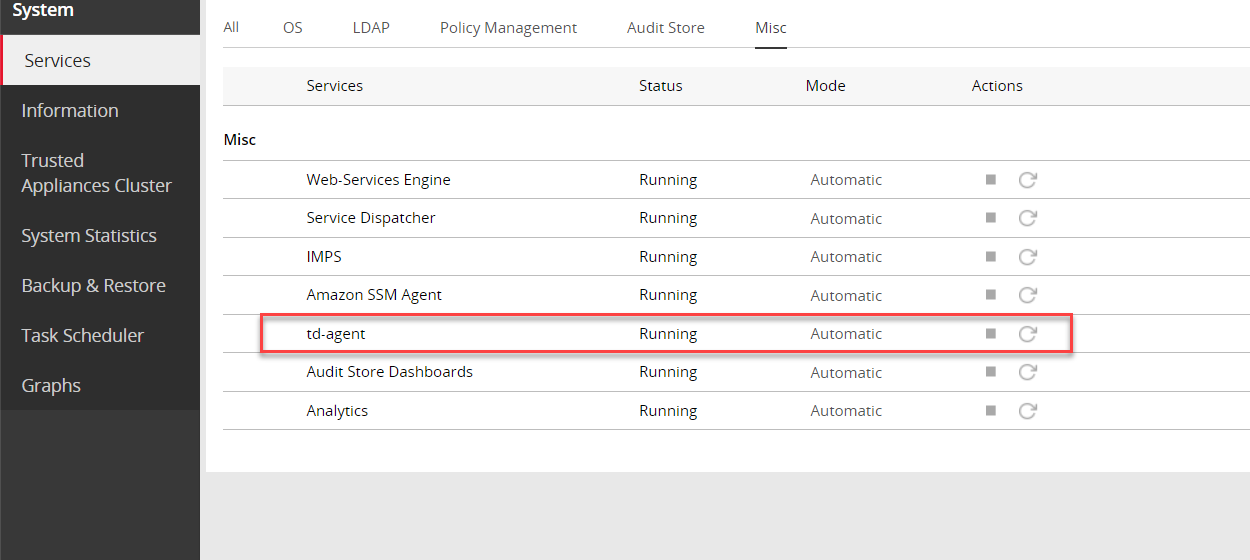

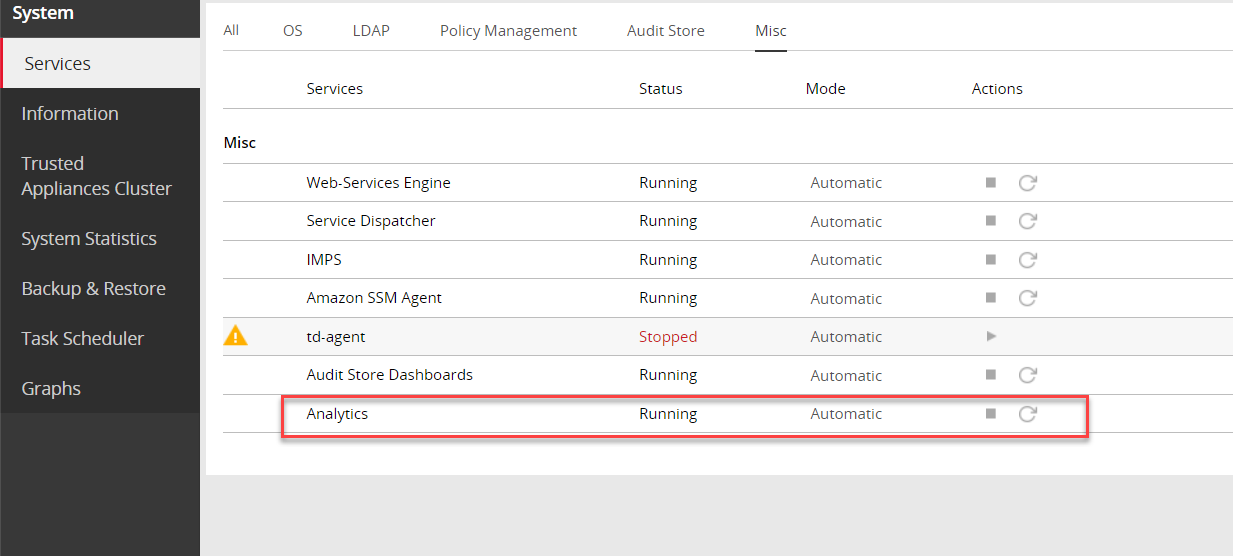

Restart the td-agent service.

Log in to the ESA Web UI.

Navigate to System > Services > Misc > td-agent.

Restart the td-agent service.

Repeat the steps on all the ESAs in the Audit Store cluster.

2. Sending the protector logs to the td-agent

Configure the protector to send the logs to the td-agent on the ESA or appliance. The td-agent forwards the logs received to Insight and the external location.

To configure the protector:

Log in and open a CLI on the protector machine.

Back up the existing files.

Navigate to the config.d directory using the following command.

cd /opt/protegrity/logforwarder/data/config.dProtectors v10.0.0 and later use the /opt/protegrity/logforwarder/data/config.d path. Use the /opt/protegrity/fluent-bit/data/config.d path for earlier protectors.

Back up the existing out.conf file using the following command.

cp out.conf out.conf_backupProtectors v9.2.0.0 and later use the out.conf file. Use the out_elastic.conf file for protectors v9.1.0.0 and earlier.

Back up the existing upstream.cfg file using the following command.

cp upstream.cfg upstream.cfg_backupProtectors v9.2.0.0 and later use the upstream.cfg file. Use the upstream_es.cfg file for protectors v9.1.0.0 and earlier.

Update the out.conf file for specifying the logs that must be forwarded to the ESA.

Navigate to the /opt/protegrity/logforwarder/data/config.d directory. Protectors v10.0.0 and later use the /opt/protegrity/logforwarder/data/config.d path. Use the /opt/protegrity/fluent-bit/data/config.d path for earlier protectors.

Open the out.conf file using a text editor.

Update the file contents with the following code.

Update the code blocks for all the options with the following information:

Update the Name parameter from opensearch to forward.

Delete the following Index, Type, and Time_Key parameters:

Index pty_insight_audit Type _doc Time_Key ingest_time_utcDelete the Supress_Type_Name and Buffer_Size parameters:

Suppress_Type_Name on Buffer_Size false

The updated extract of the code is shown here.

[OUTPUT] Name forward Match logdata Retry_Limit False Upstream /opt/protegrity/logforwarder/data/config.d/upstream.cfg storage.total_limit_size 256M net.max_worker_connections 1 net.keepalive off Workers 1 [OUTPUT] Name forward Match flulog Retry_Limit no_retries Upstream /opt/protegrity/logforwarder/data/config.d/upstream.cfg storage.total_limit_size 256M net.max_worker_connections 1 net.keepalive off Workers 1Ensure that the file does not have any trailing spaces or line breaks at the end of the file.

Protectors v10.0.0 and later use the /opt/protegrity/logforwarder/data/config.d path. Use the /opt/protegrity/fluent-bit/data/config.d path for earlier protectors. Protectors v9.2.0.0 and later use the upstream.cfg file. Use the upstream_es.cfg file for protectors v9.1.0.0 and earlier.

Save and close the file.

Update the upstream.cfg file for forwarding the logs to the ESA.

Navigate to the /opt/protegrity/logforwarder/data/config.d directory.

Protectors v10.0.0 and later use the /opt/protegrity/logforwarder/data/config.d path. Use the /opt/protegrity/fluent-bit/data/config.d path for earlier protectors.

Open the upstream.cfg file using a text editor.

Update the file contents with the following code.

Update the code blocks for all the nodes with the following information:

Update the Port to 24284.

Delete the Pipeline parameter:

Pipeline logs_pipeline

The updated extract of the code is shown here.

[UPSTREAM] Name pty-insight-balancing [NODE] Name node-1 Host <IP address of the ESA> Port 24284 tls on tls.verify offThe code shows information updated for one node. For multiple nodes, update the information for all the nodes.

Ensure that there are no trailing spaces or line breaks at the end of the file.

If the IP address of the ESA is updated, then update the Host value in the upstream.cfg file.

Protectors v9.2.0.0 and later use the Name parameter as pty-insight-balancing. Use the Name parameter as pty-es-balancing for protectors v9.1.0.0 and earlier.

Save and close the file.

Restart logforwarder on the protector using the following commands.

/opt/protegrity/logforwarder/bin/logforwarderctrl stop /opt/protegrity/logforwarder/bin/logforwarderctrl start

Protectors v10.0.0 and later use the /opt/protegrity/logforwarder/bin path. Use the /opt/protegrity/fluent-bit/bin path for earlier protectors.

If required, complete the configurations on the remaining protector machines.

Update the td-agent configuration to send logs to the external location.

3. Configuring td-agent to forward logs to the external endpoint

As per the setup and requirements, the logs forwarded can be formatted using the syslog-related fields and sent over TLS to the SIEM. Alternatively, send the logs without any formatting over a non-TLS connection to the SIEM, such as, syslog.

The ESA has logs generated by the appliances and the protectors connected to the ESA. Forward these logs to the syslog server and use the log data for further analysis as per requirements.

For a complete list of plugins for forwarding logs, refer to https://www.fluentd.org/plugins/all.

Before you begin: Ensure that the external syslog server is available and running.

The following options are available, select any one of the sections based on the requirements:

- Forwarding Logs to a Syslog Server

- Forwarding Logs to a Syslog Server Over TLS

To forward logs to the external SIEM:

Open the CLI Manager on the Primary ESA.

Log in to the CLI Manager of the Primary ESA where the td-agent was configured in Setting Up td-agent to Receive Protector Logs.

Navigate to Administration > OS Console.

Enter the root password and select OK.

Navigate to the /products/uploads directory using the following command.

cd /products/uploadsObtain the required plugins files using one of the following commands based on the setup.

If the appliance has Internet access, then run the following commands.

wget https://rubygems.org/downloads/syslog_protocol-0.9.2.gemwget https://rubygems.org/downloads/remote_syslog_sender-1.2.2.gemwget https://rubygems.org/downloads/fluent-plugin-remote_syslog-1.0.0.gemIf the appliance does not have Internet access, then complete the following steps.

- Download the following setup files from a system that has Internet and copy them to the appliance in the /products/uploads directory.

- Ensure that the files downloaded have the execute permission.

Prepare the required plugins files using the following commands.

Assign the required ownership permissions to the software using the following command.

chown td-agent *.gemAssign the required permissions to the software installed using the following command.

chmod -R 755 /opt/td-agent/lib/ruby/gems/3.2.0/Assign ownership of the .gem files to the td-agent user using the following command.

chown -R td-agent:plug /opt/td-agent/lib/ruby/gems/3.2.0/

Install the required plugins files using one of the following commands based on the setup.

If the appliance has Internet access, then run the following commands.

sudo -u td-agent /opt/td-agent/bin/fluent-gem install syslog_protocolsudo -u td-agent /opt/td-agent/bin/fluent-gem install remote_syslog_sendersudo -u td-agent /opt/td-agent/bin/fluent-gem install fluent-plugin-remote_syslogIf the appliance does not have Internet access, then run the following commands.

sudo -u td-agent /opt/td-agent/bin/fluent-gem install --local /products/uploads/syslog_protocol-0.9.2.gemsudo -u td-agent /opt/td-agent/bin/fluent-gem install install --local /products/uploads/remote_syslog_sender-1.2.2.gemsudo -u td-agent /opt/td-agent/bin/fluent-gem install --local /products/uploads/fluent-plugin-remote_syslog-1.0.0.gem

Update the configuration files using the following steps.

Navigate to the config.d directory using the following command.

cd /opt/protegrity/td-agent/config.dBack up the existing output file using the following command.

cp OUTPUT.conf OUTPUT.conf_backupOpen the OUTPUT.conf file using a text editor.

Update the following contents in the OUTPUT.conf file.

Update the match tag in the file to <match *.*.* logdata flulog>.

Add the following code in the match tag in the file:

<store> @type relabel @label @syslog </store>

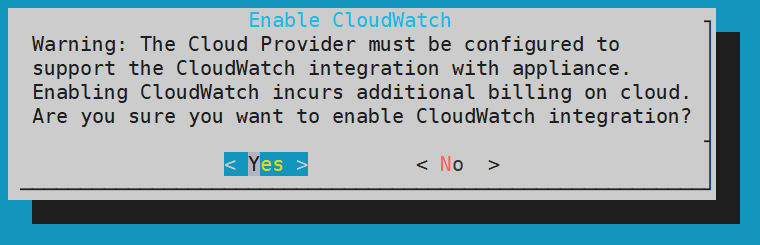

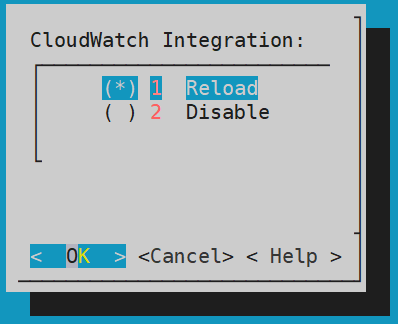

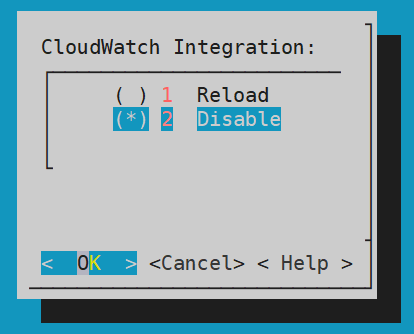

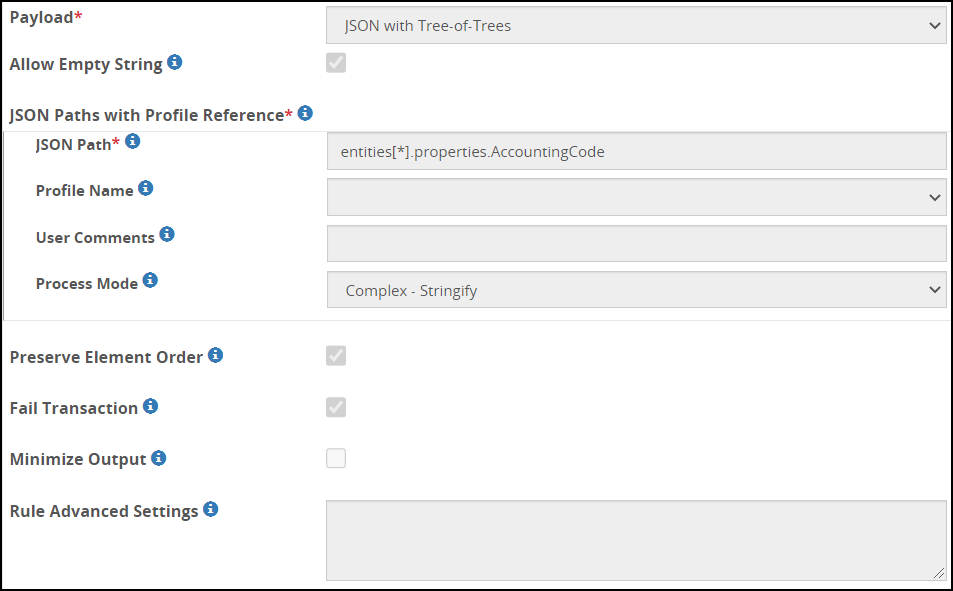

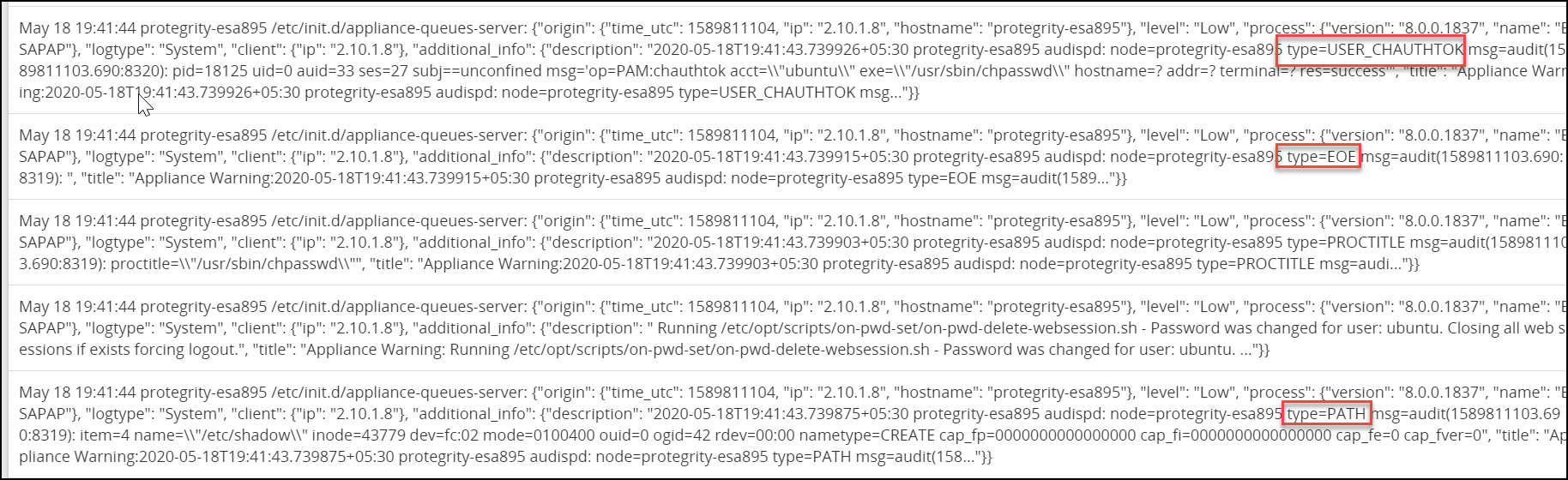

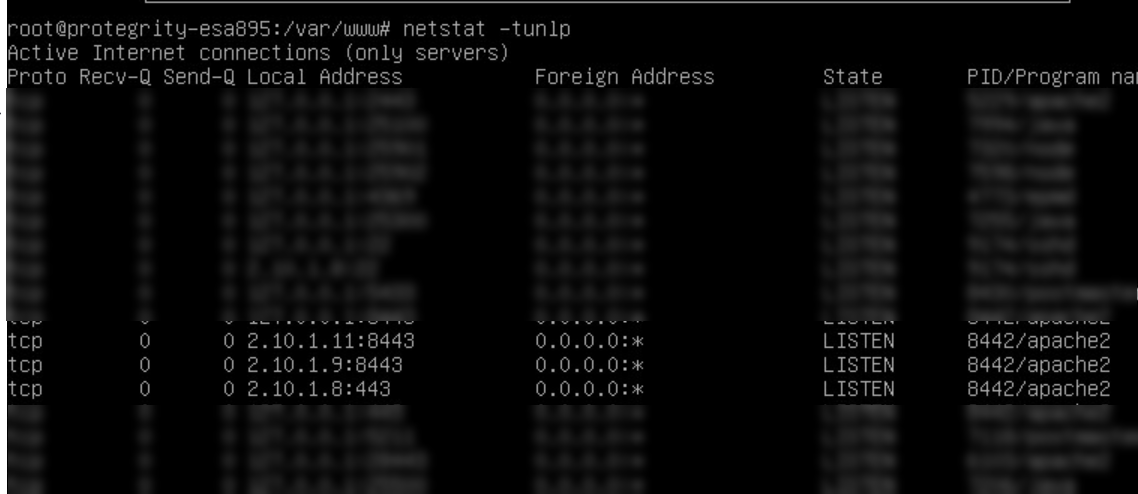

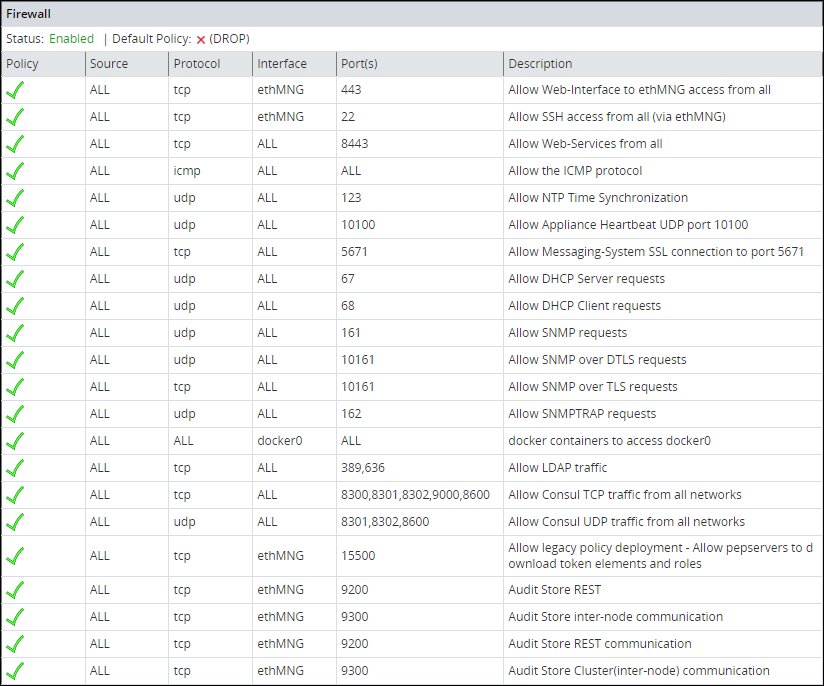

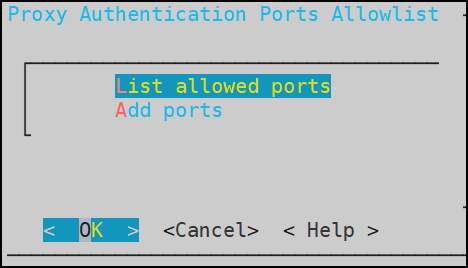

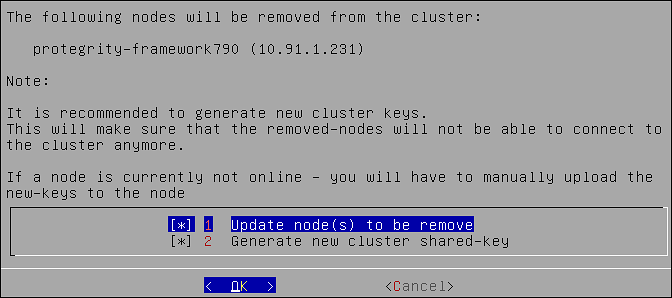

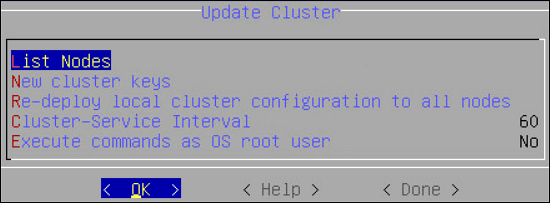

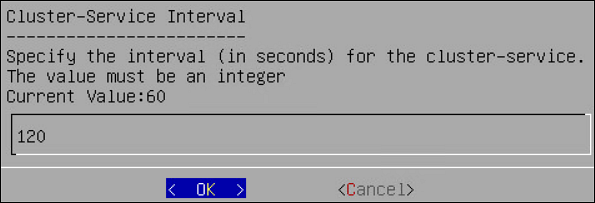

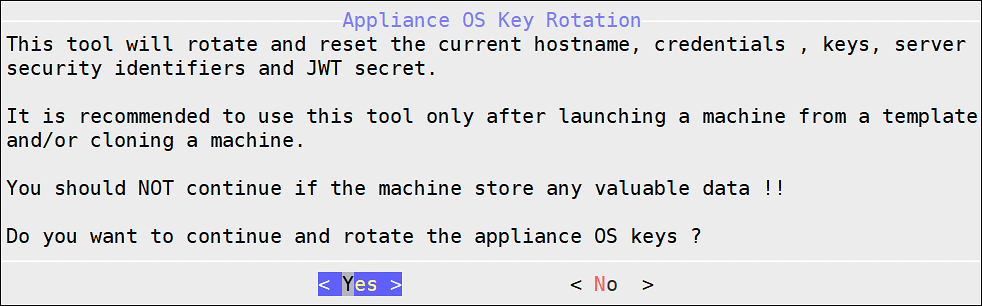

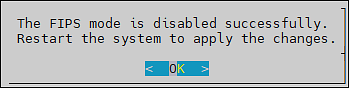

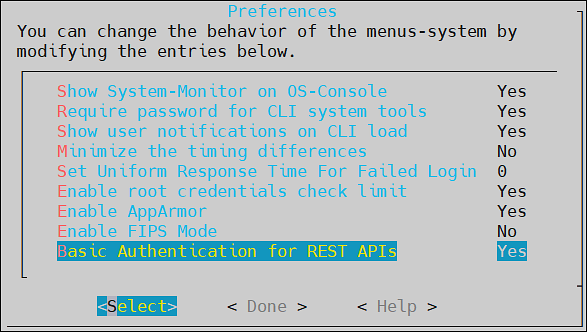

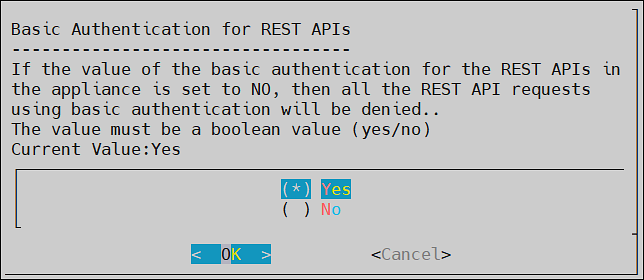

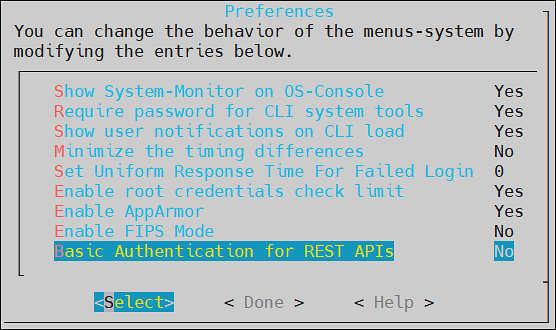

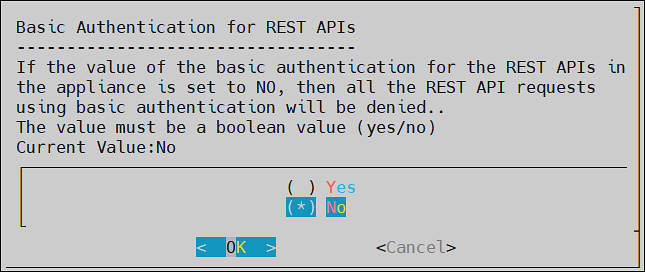

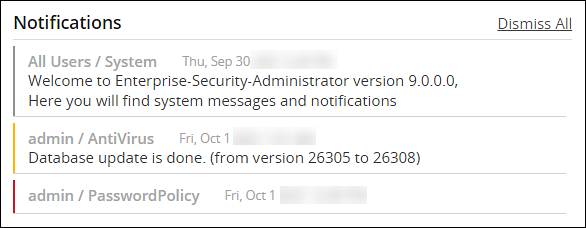

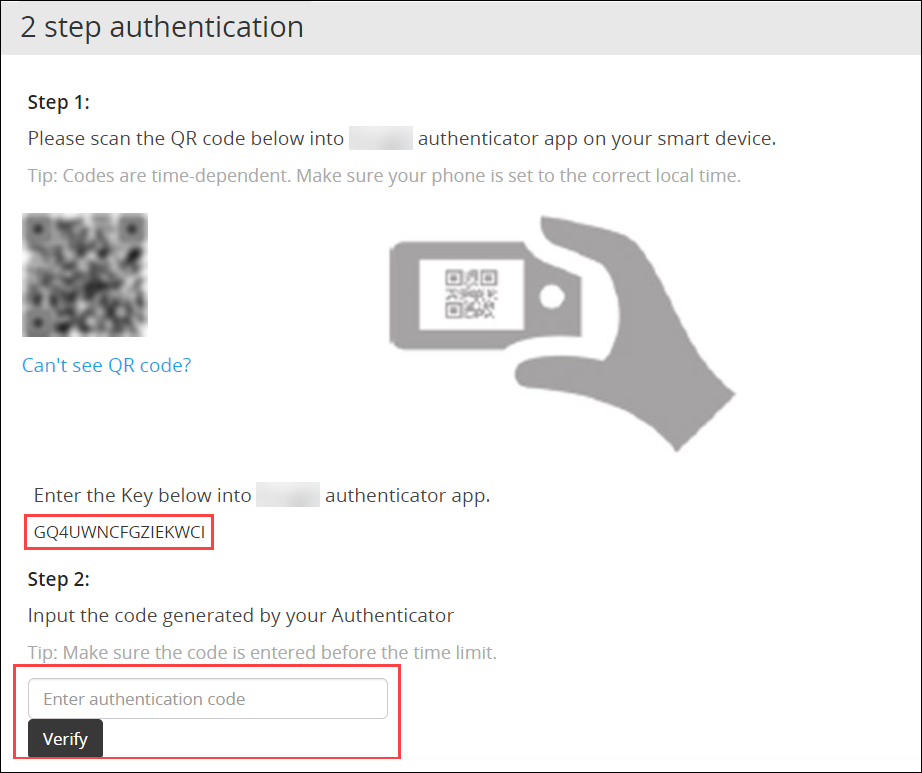

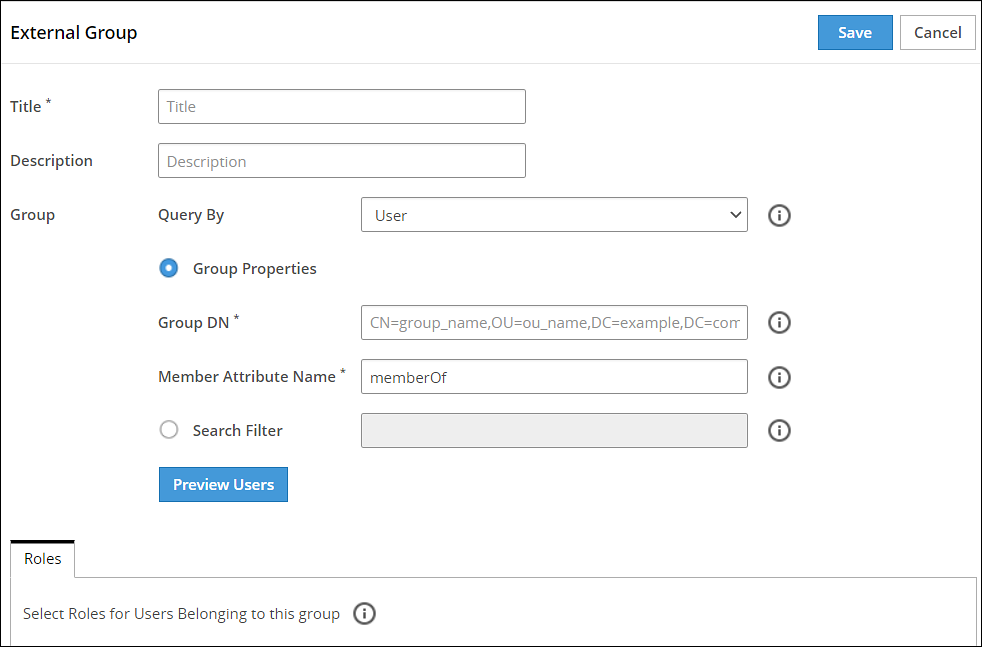

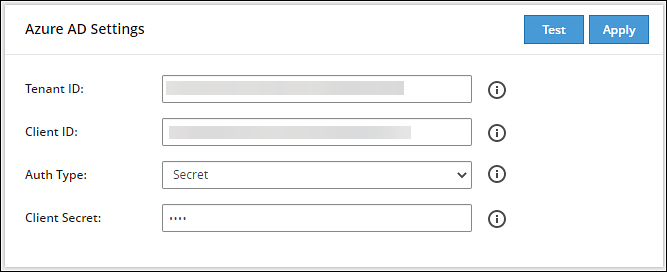

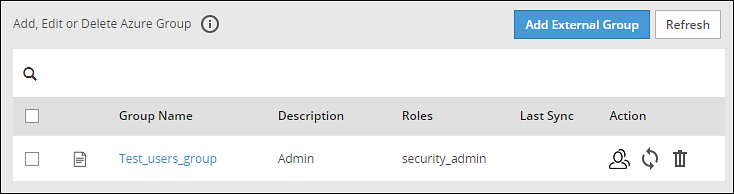

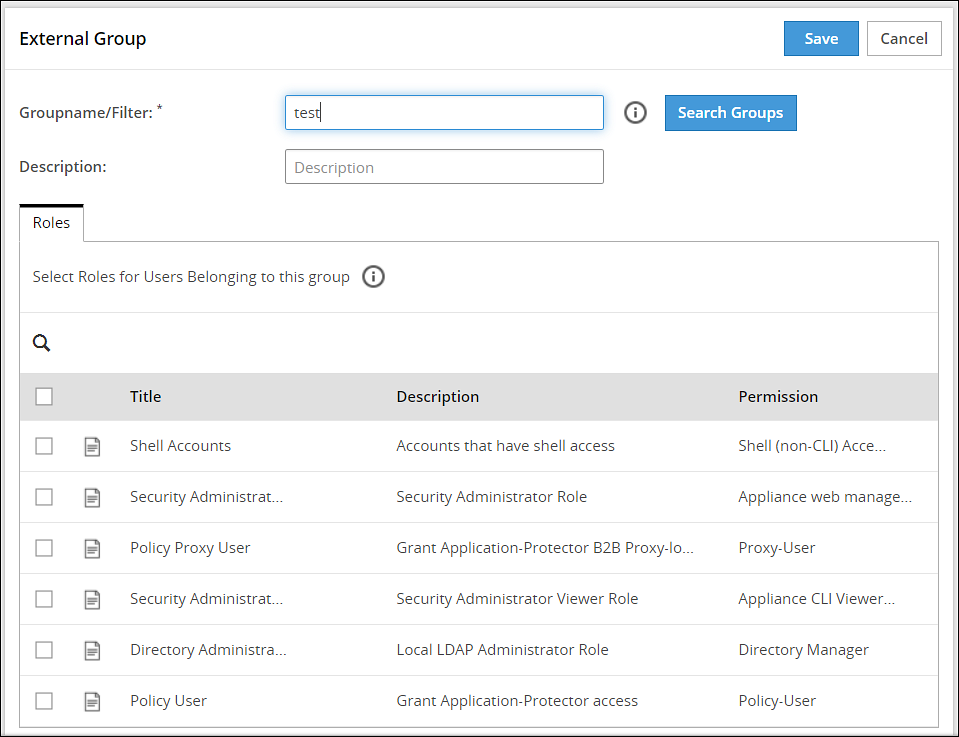

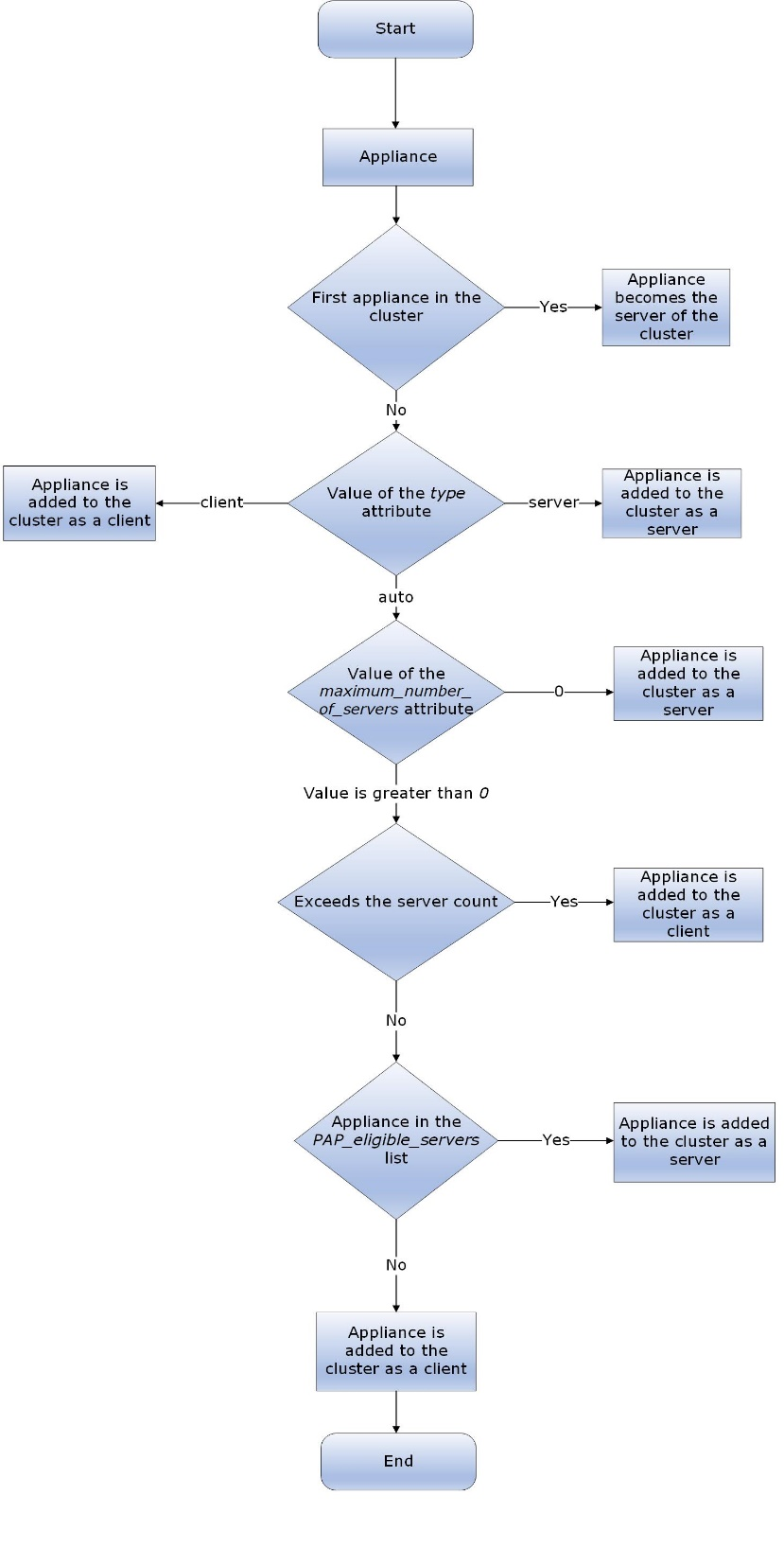

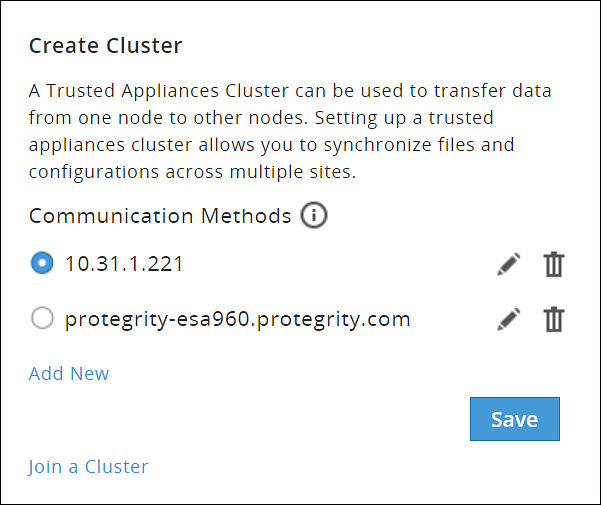

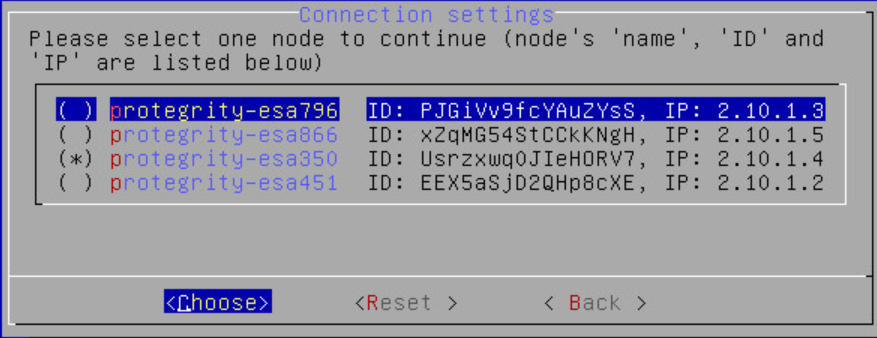

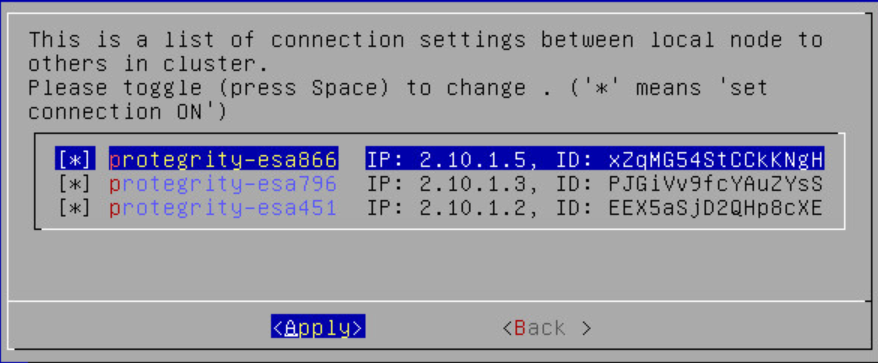

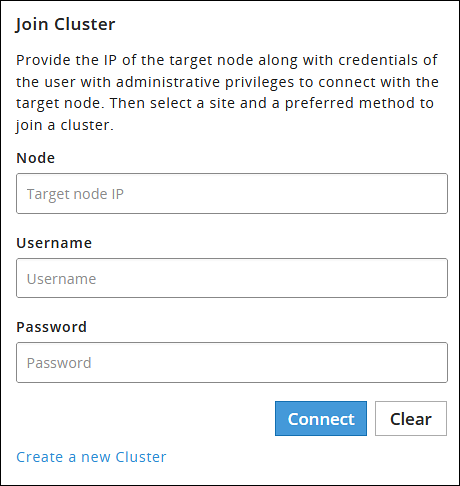

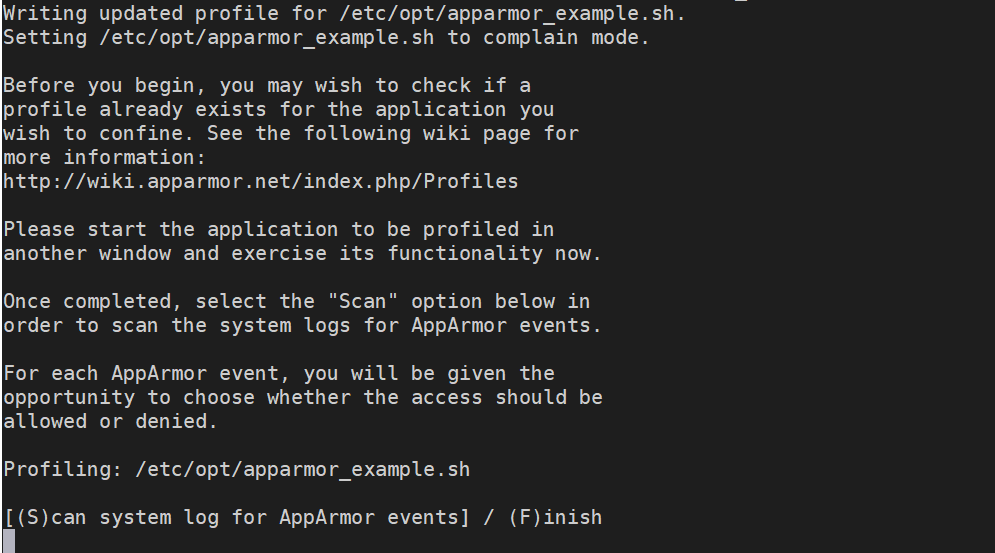

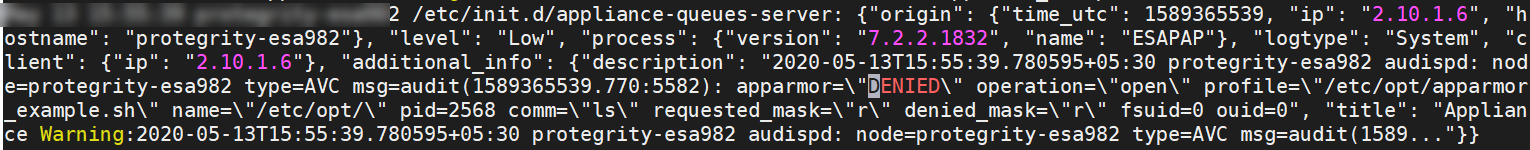

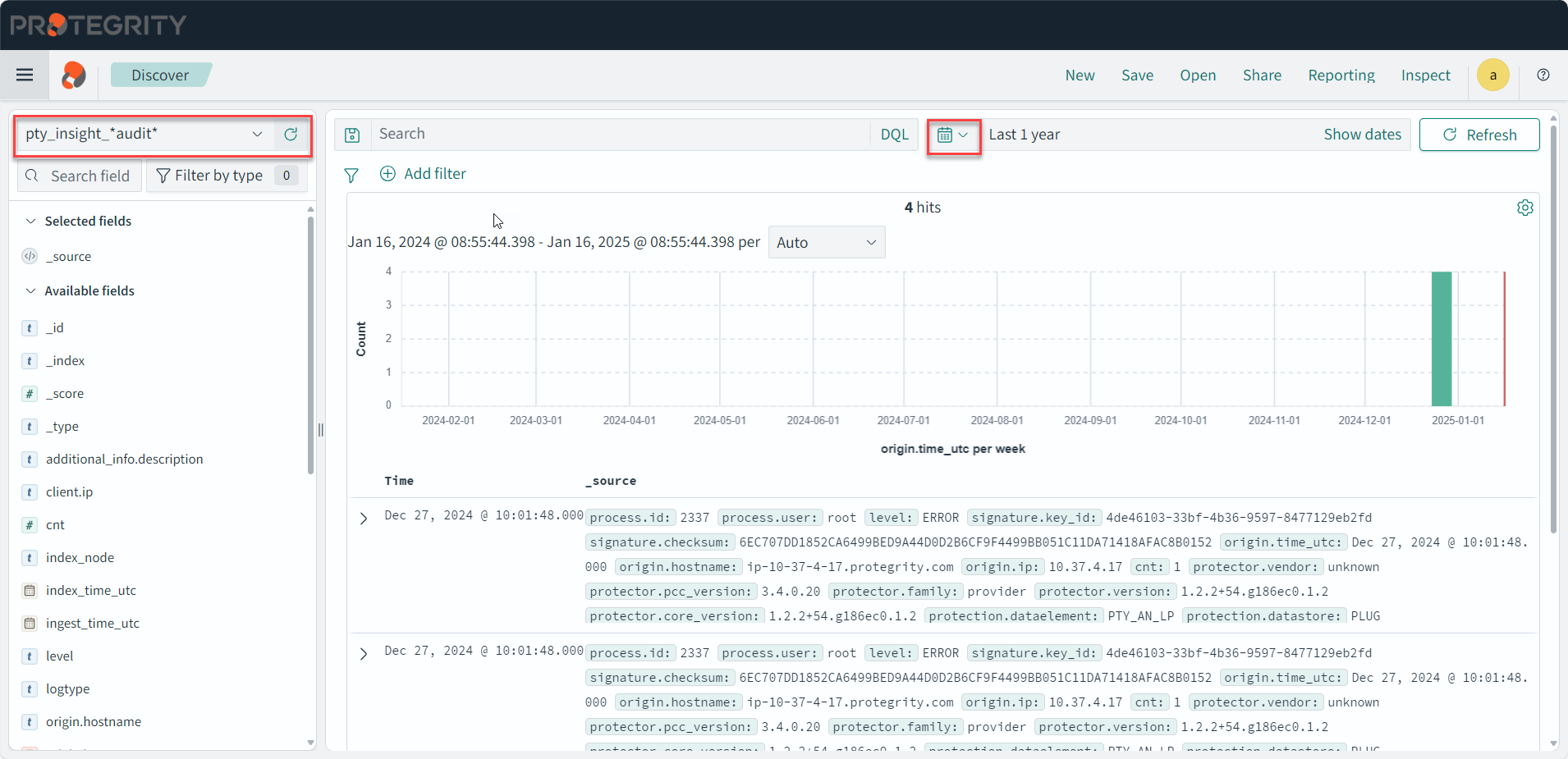

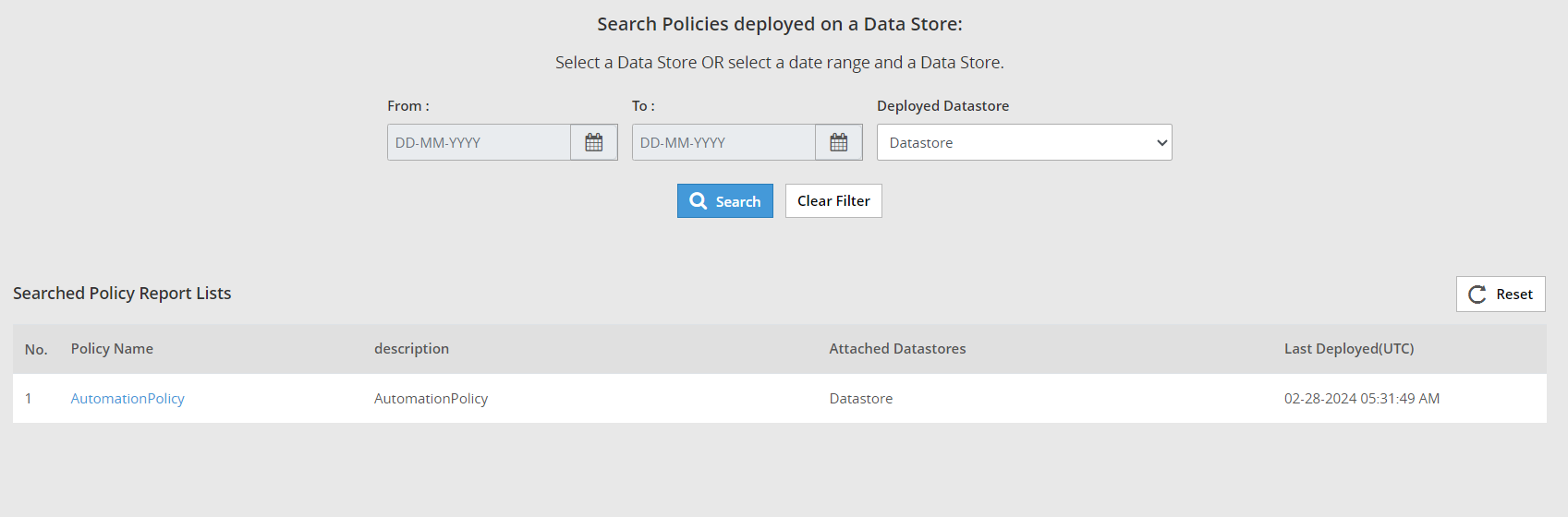

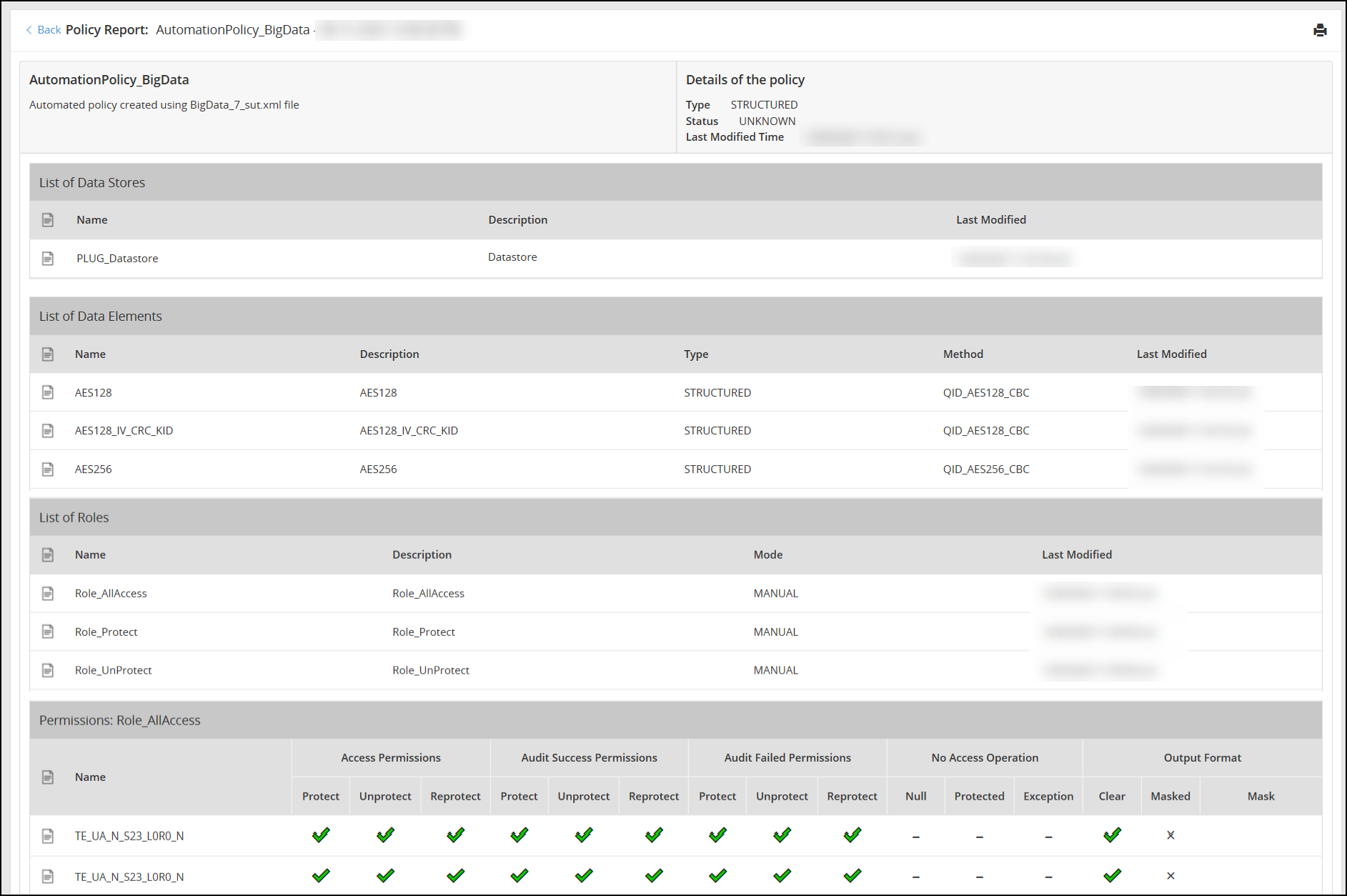

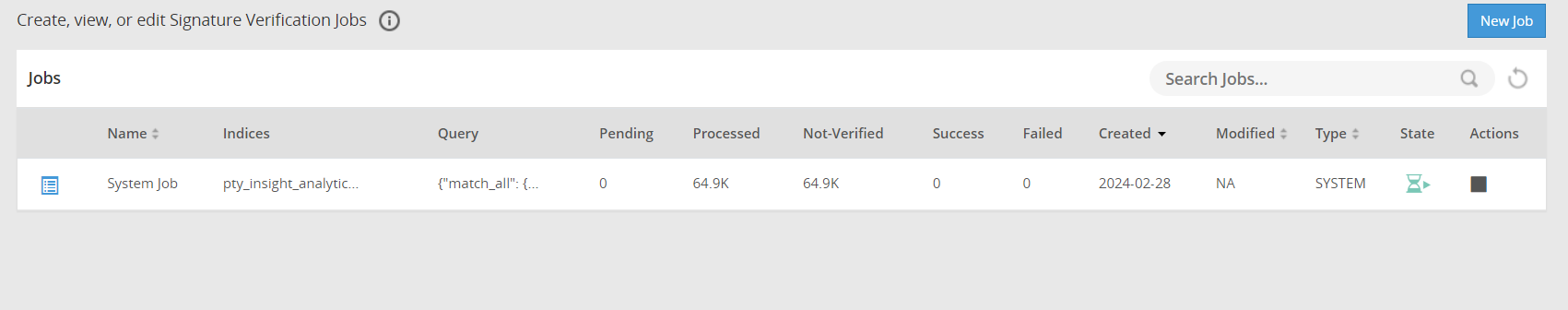

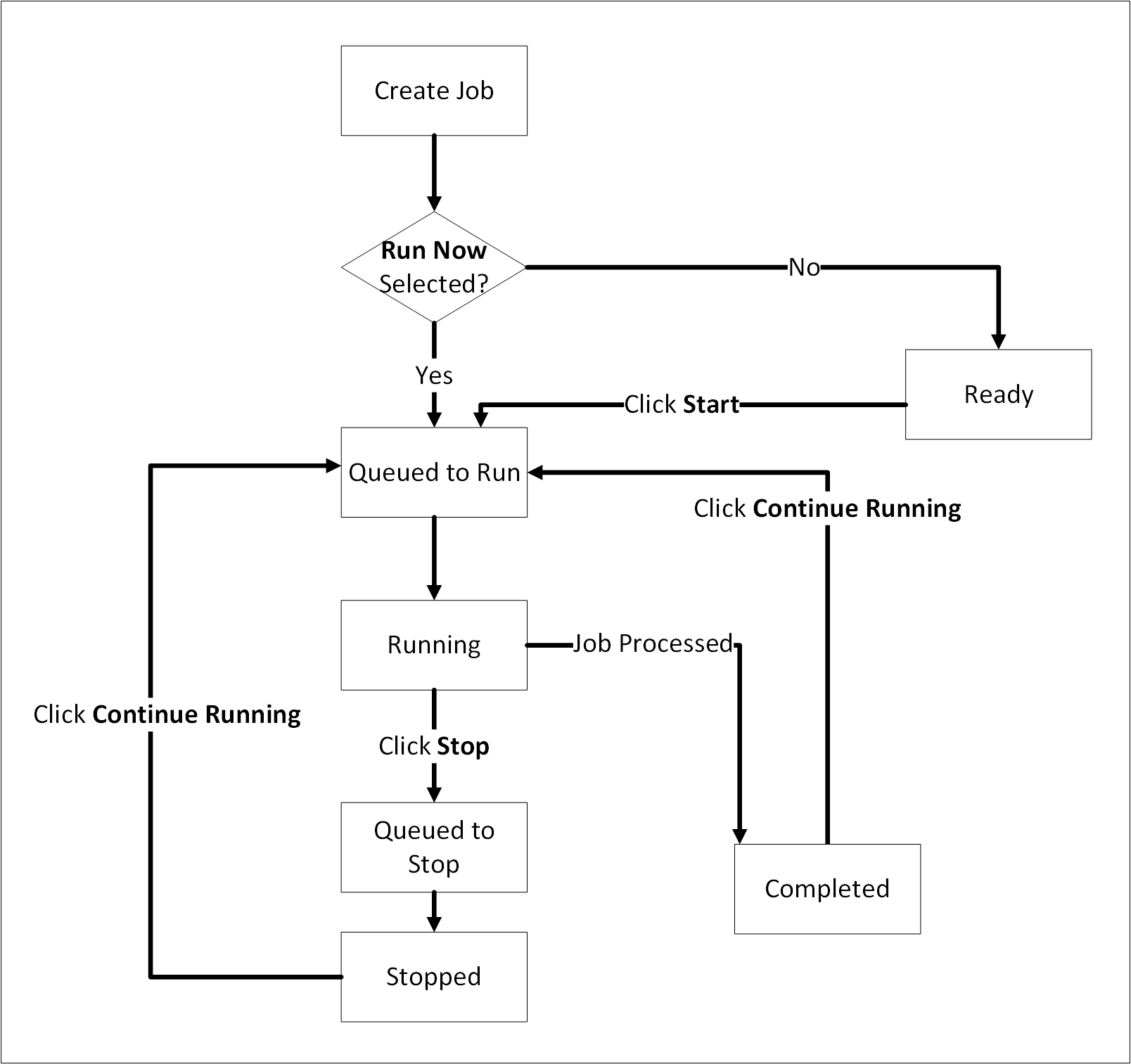

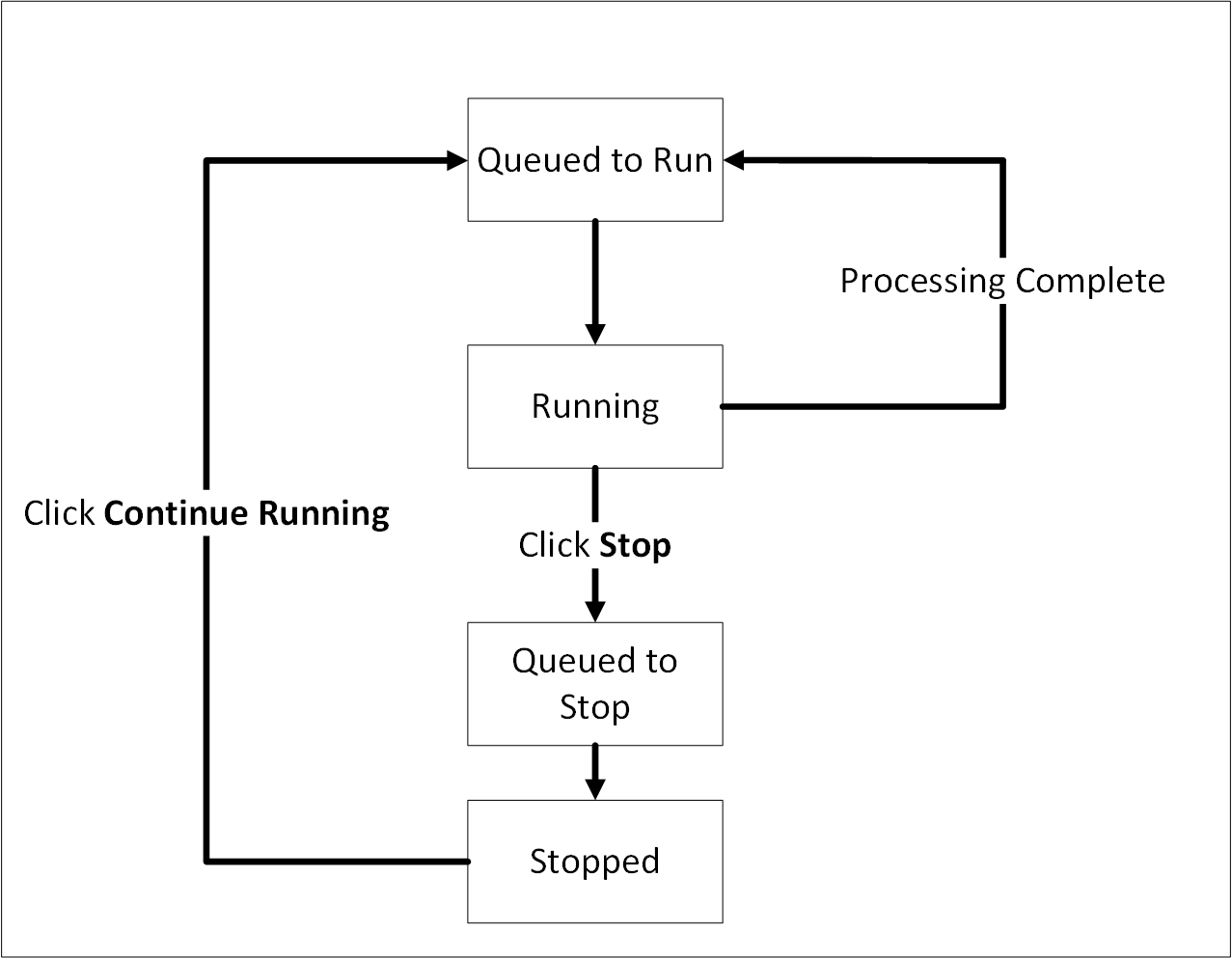

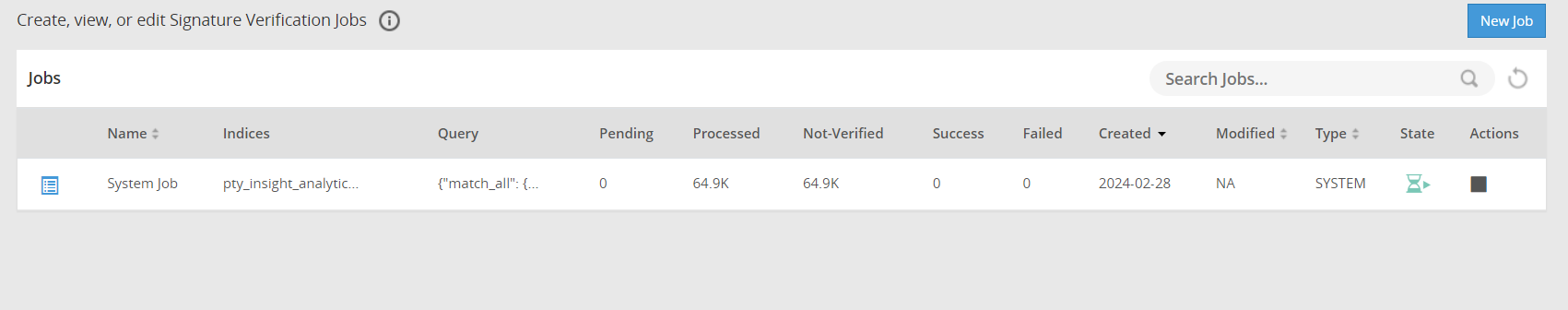

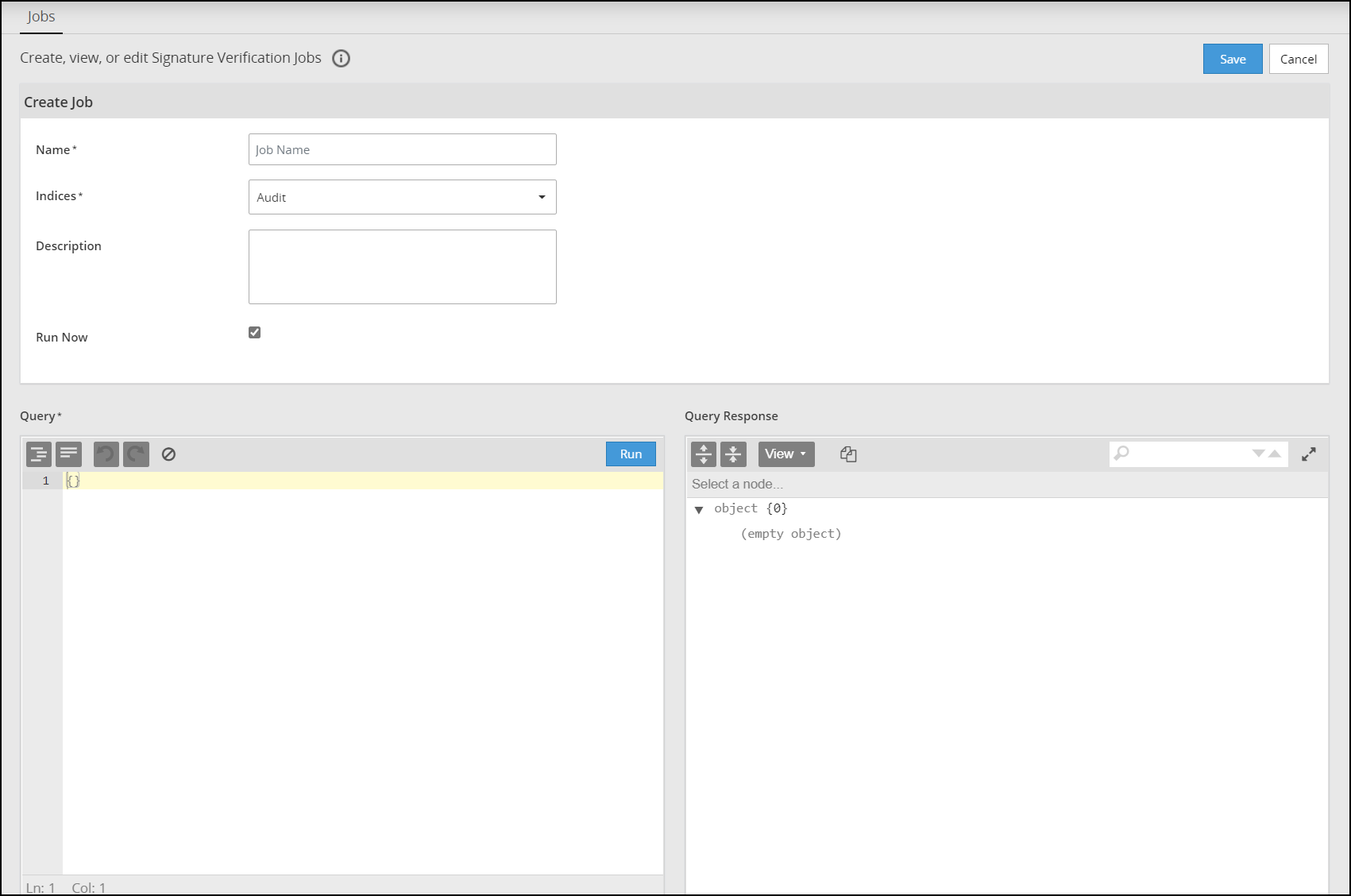

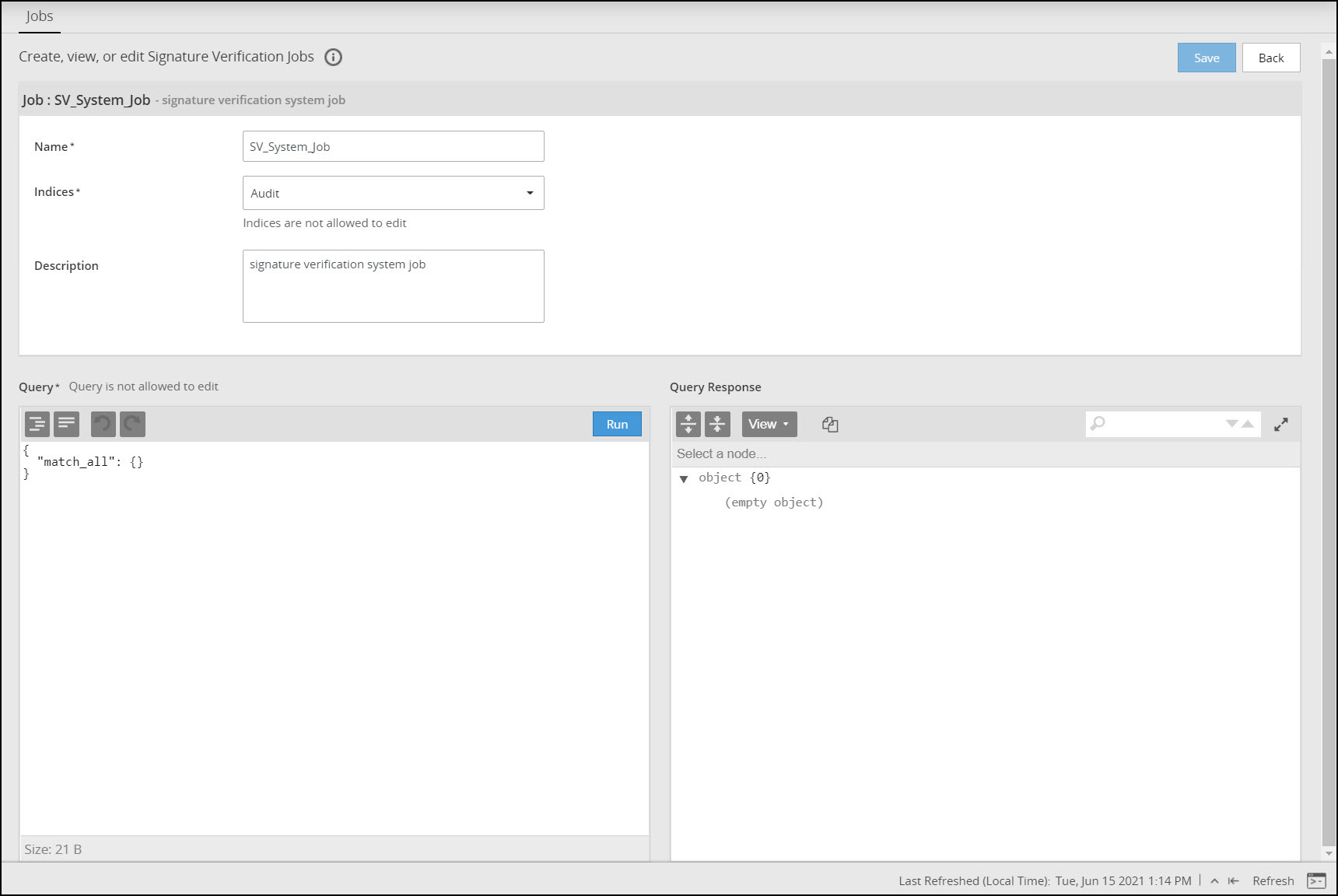

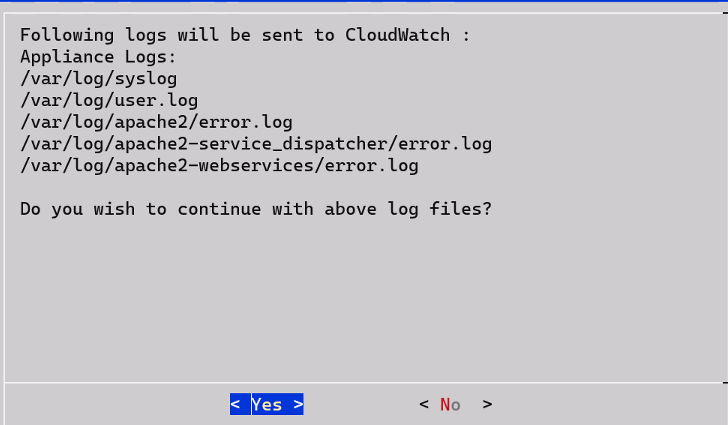

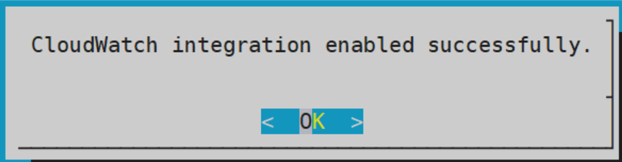

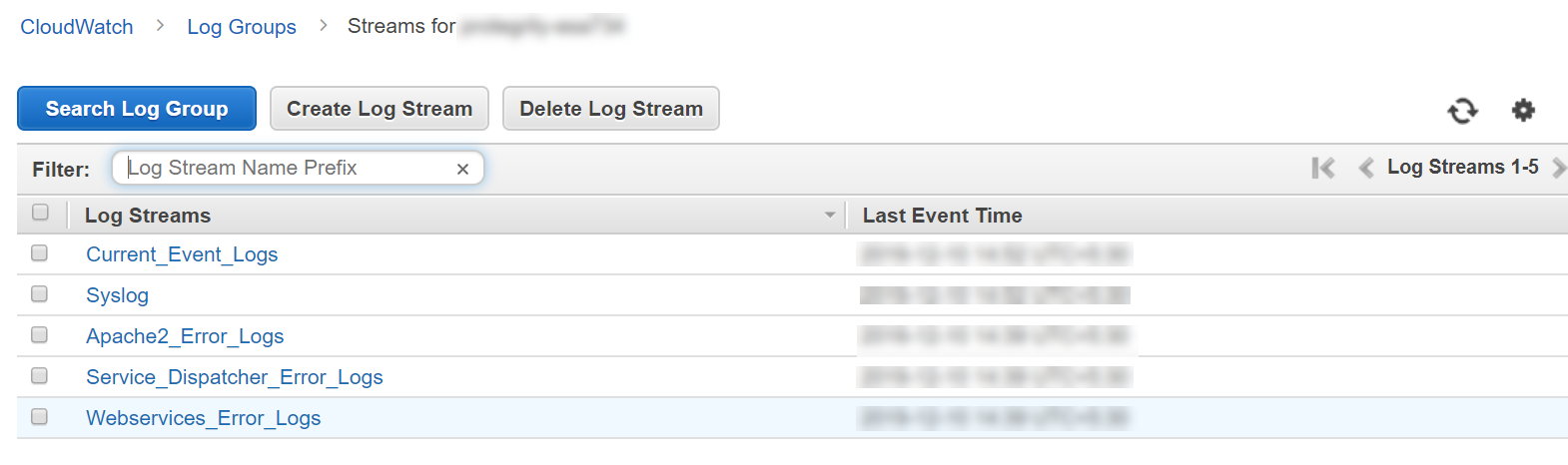

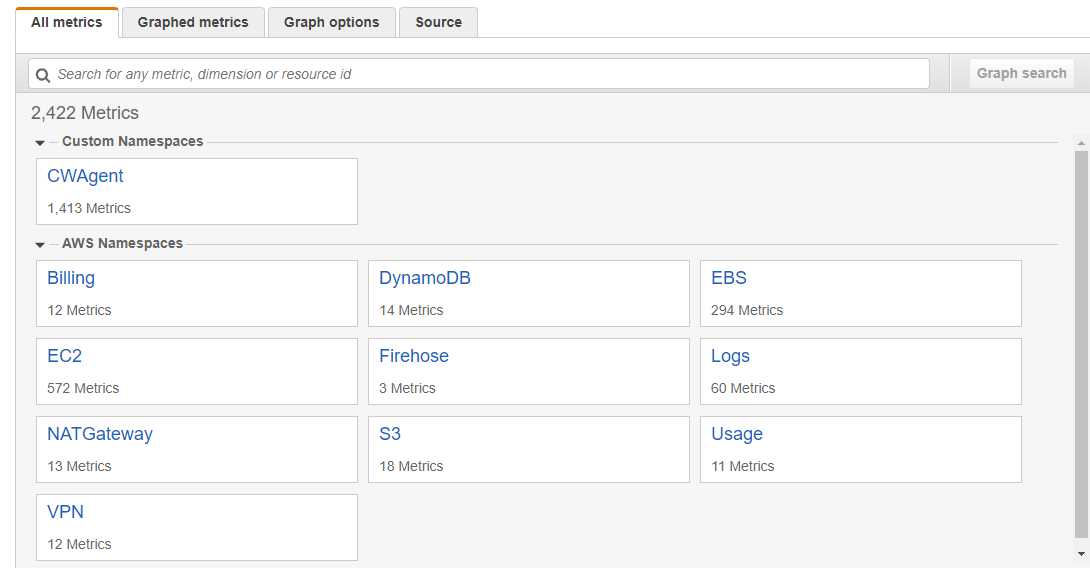

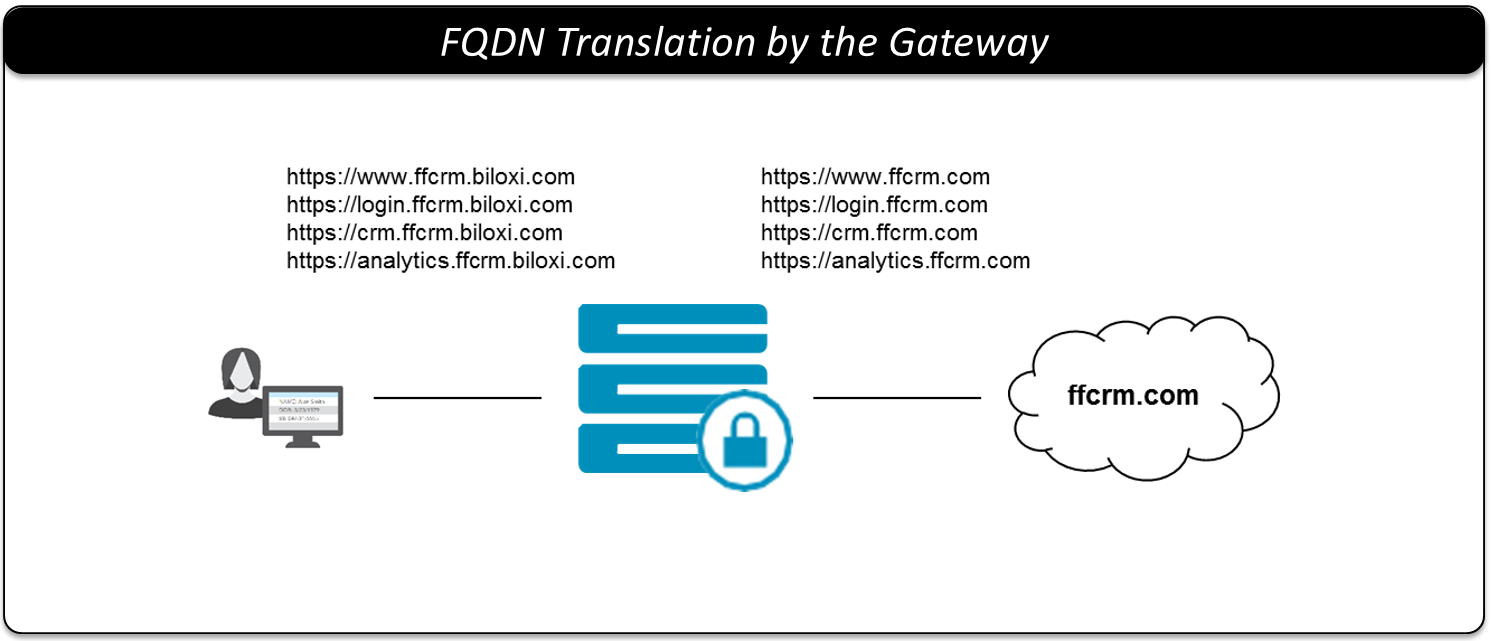

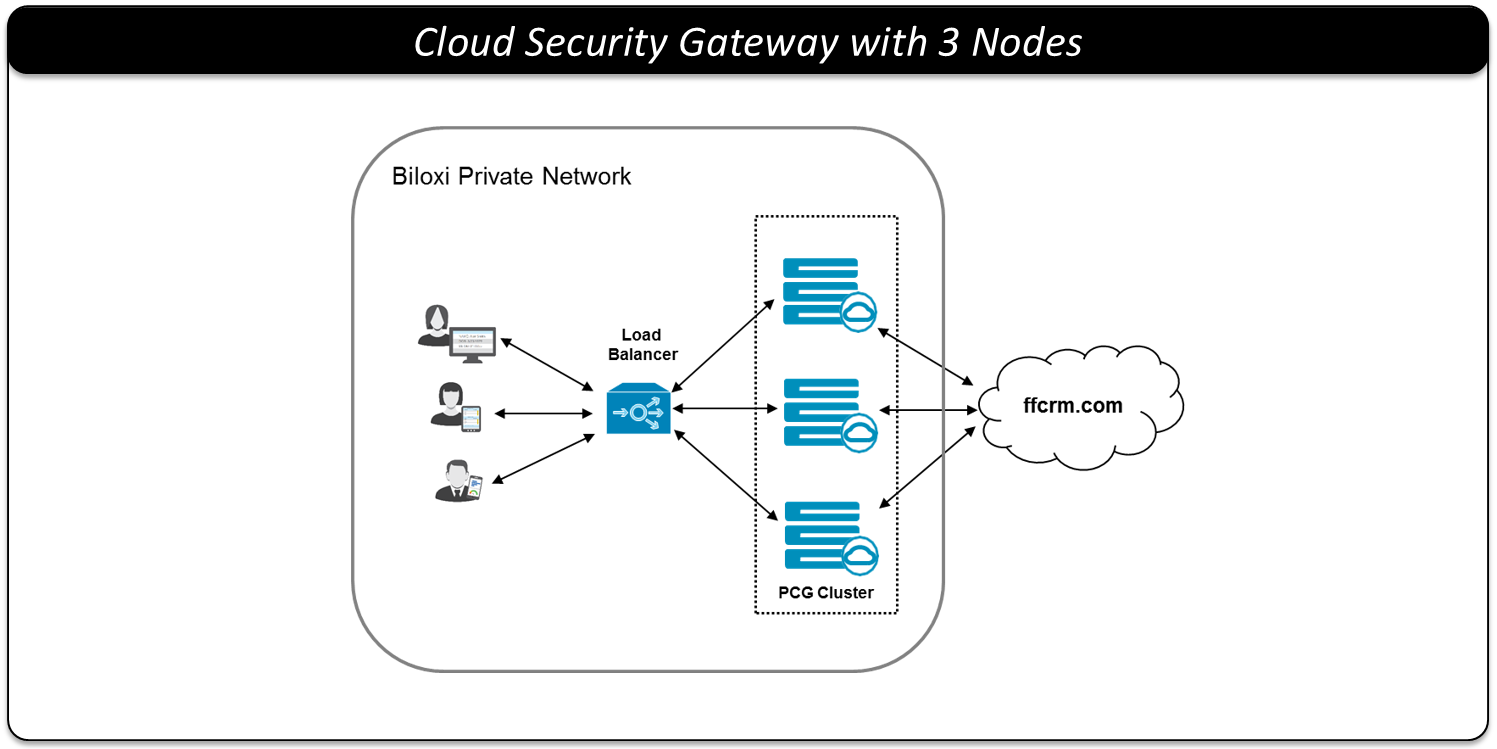

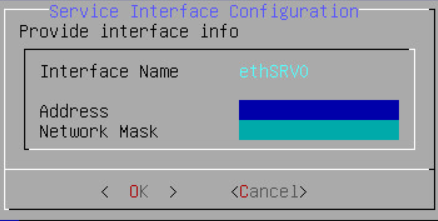

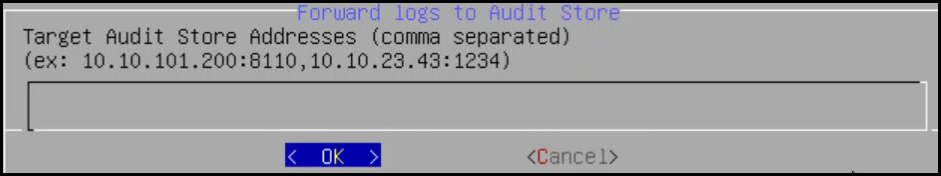

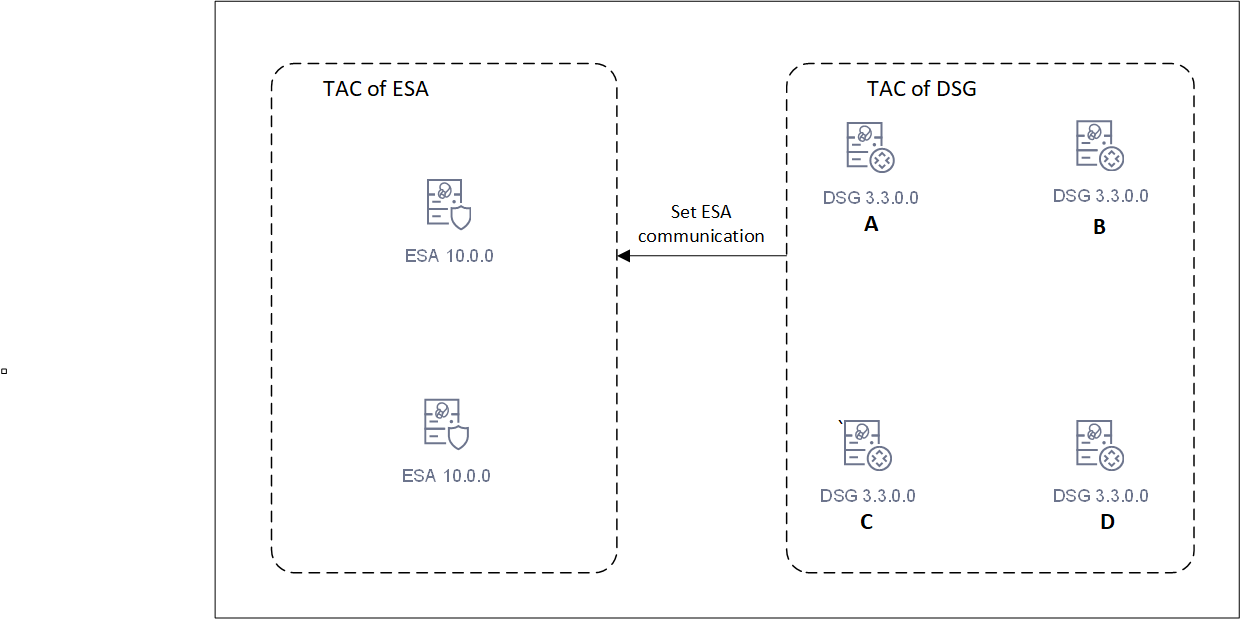

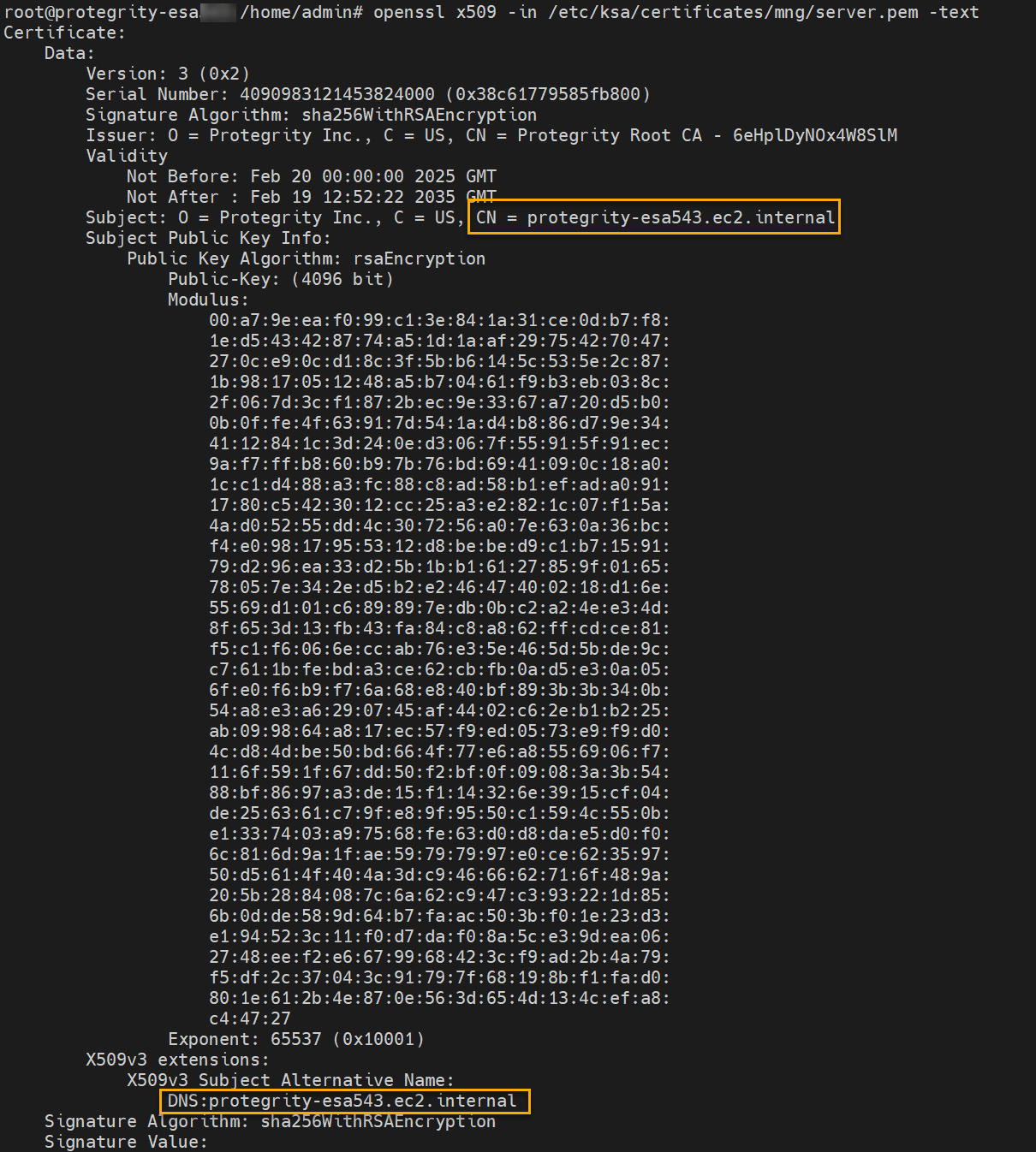

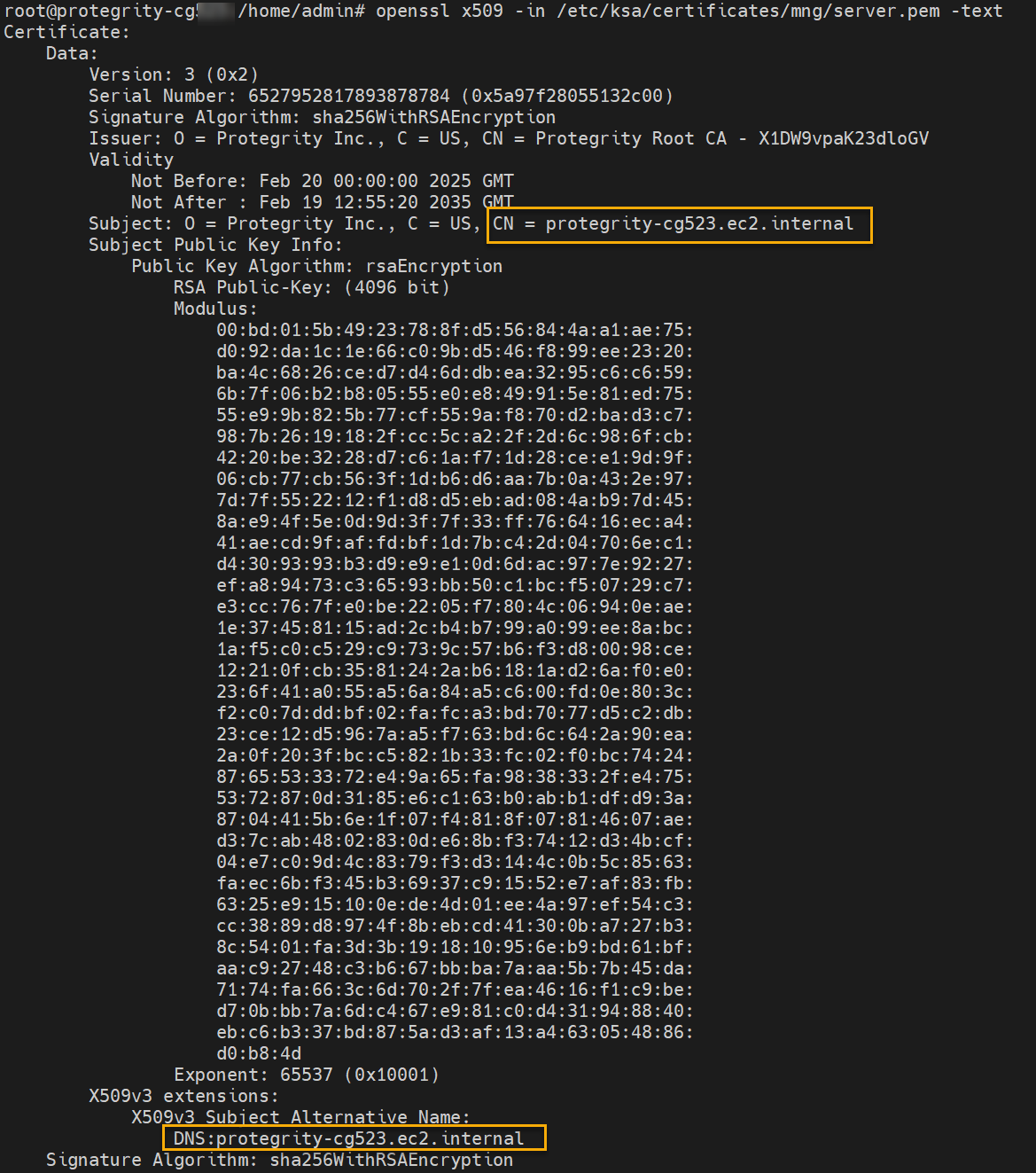

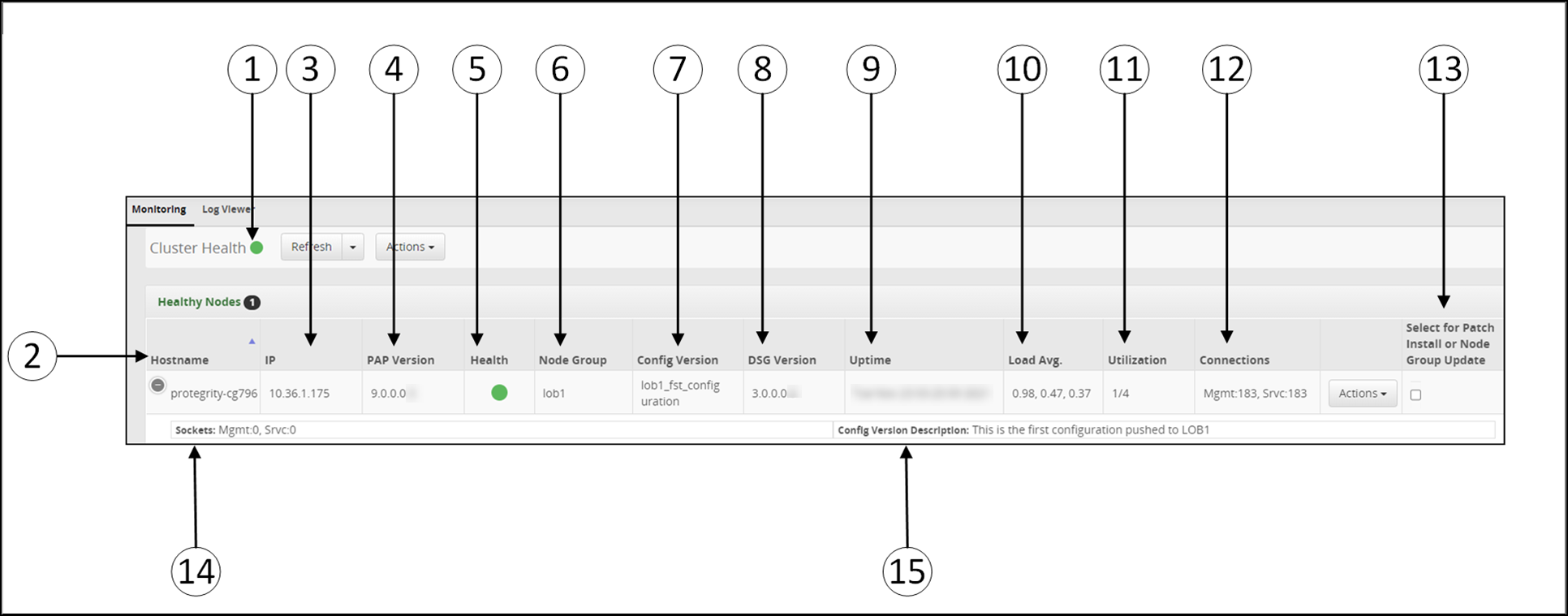

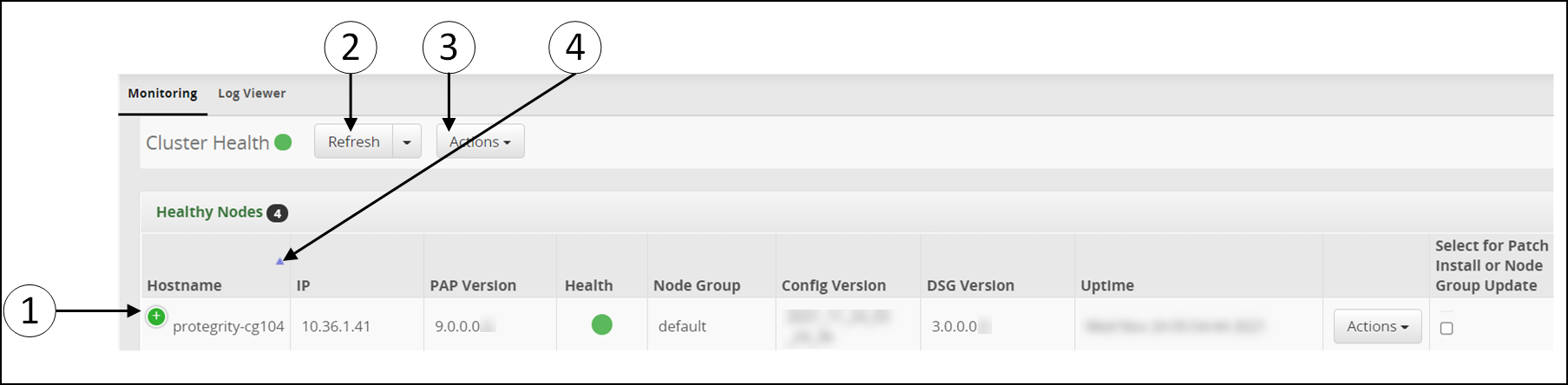

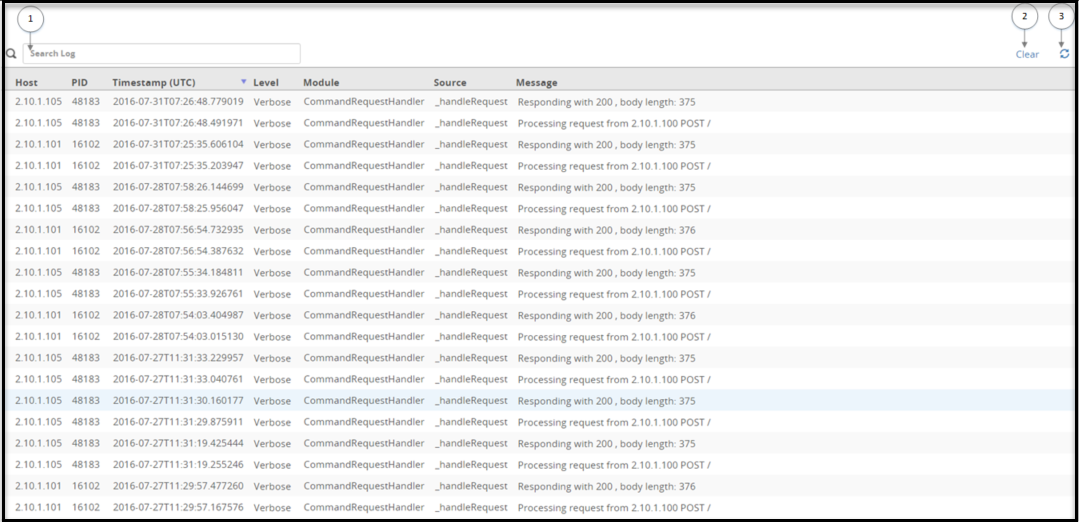

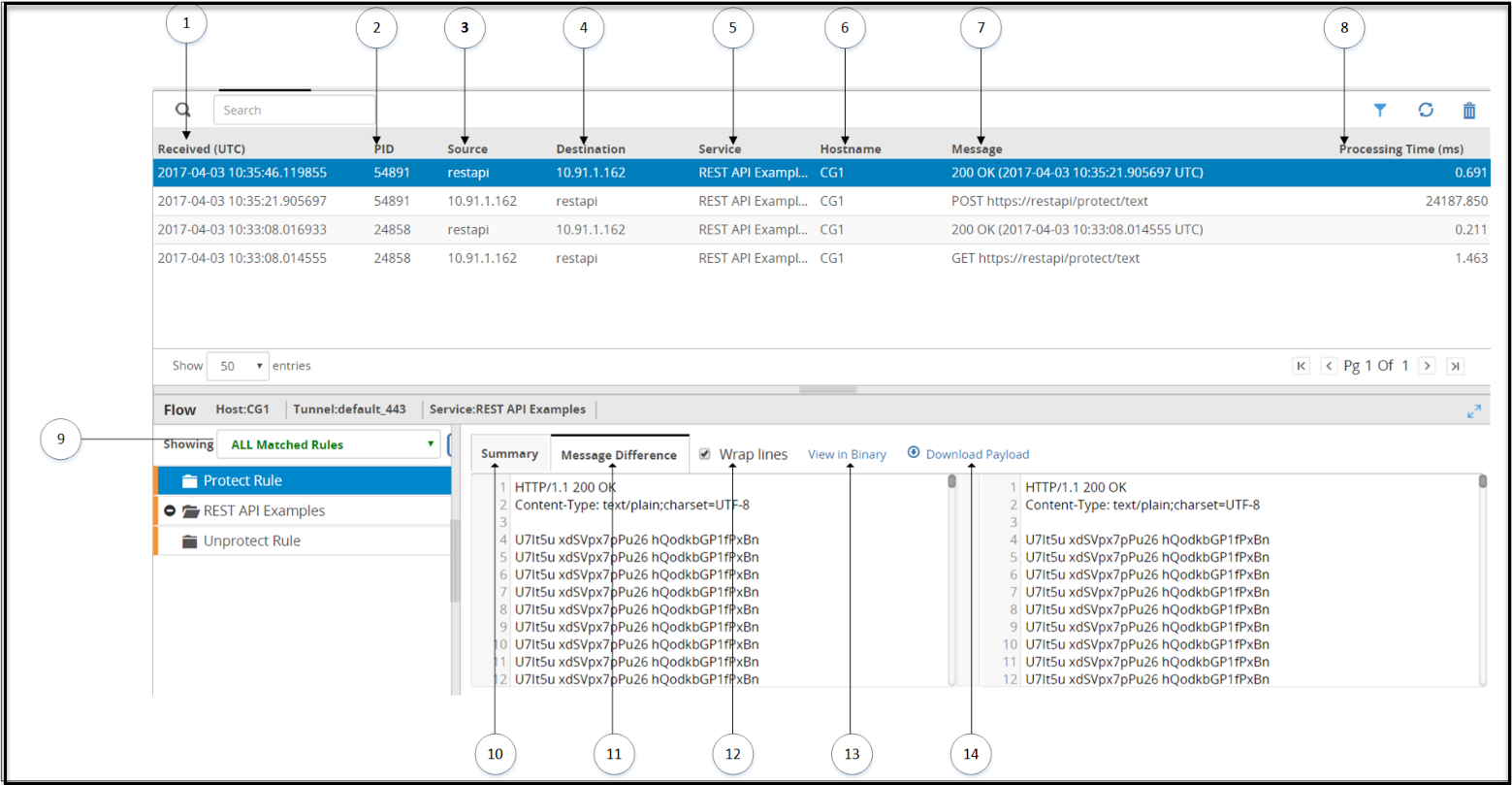

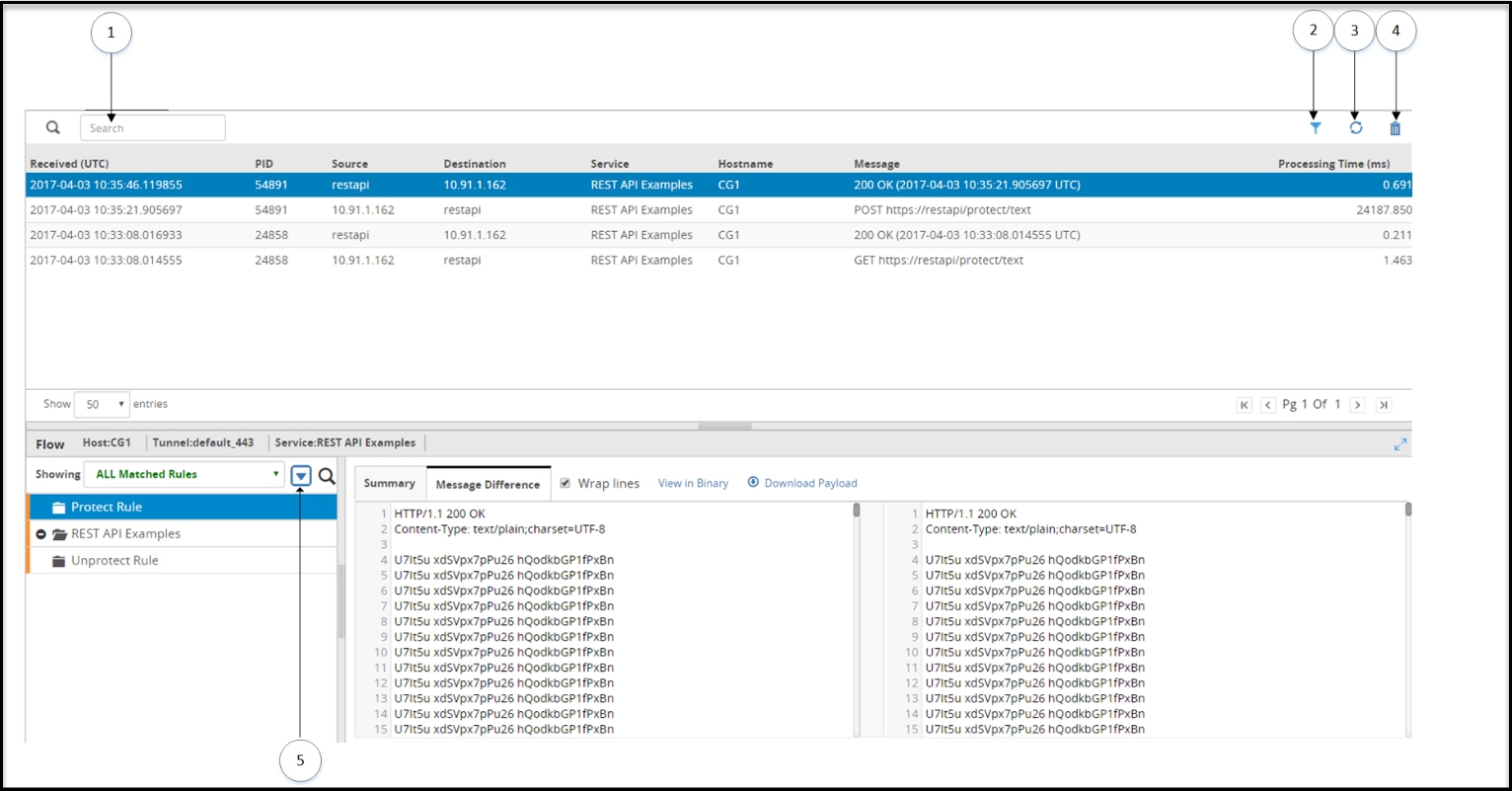

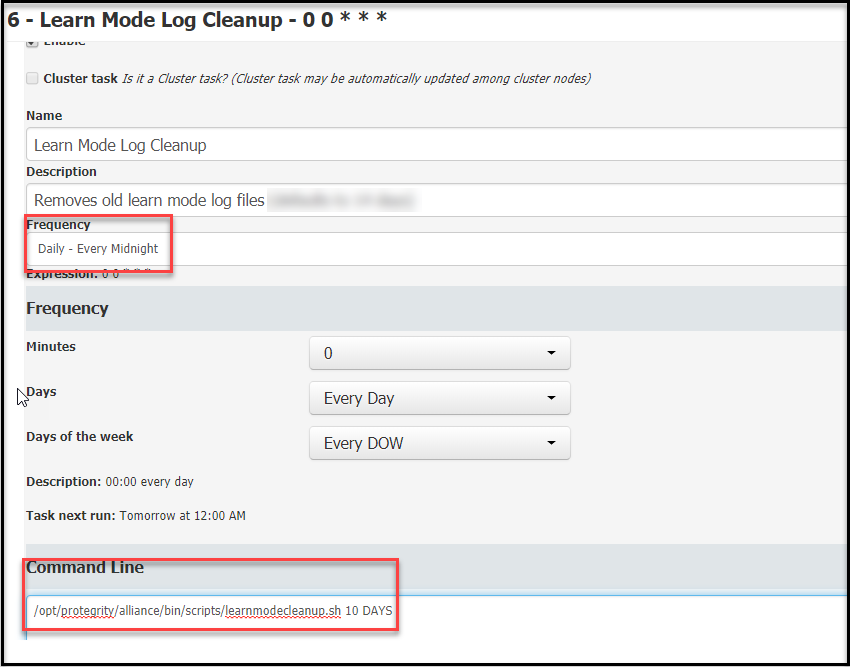

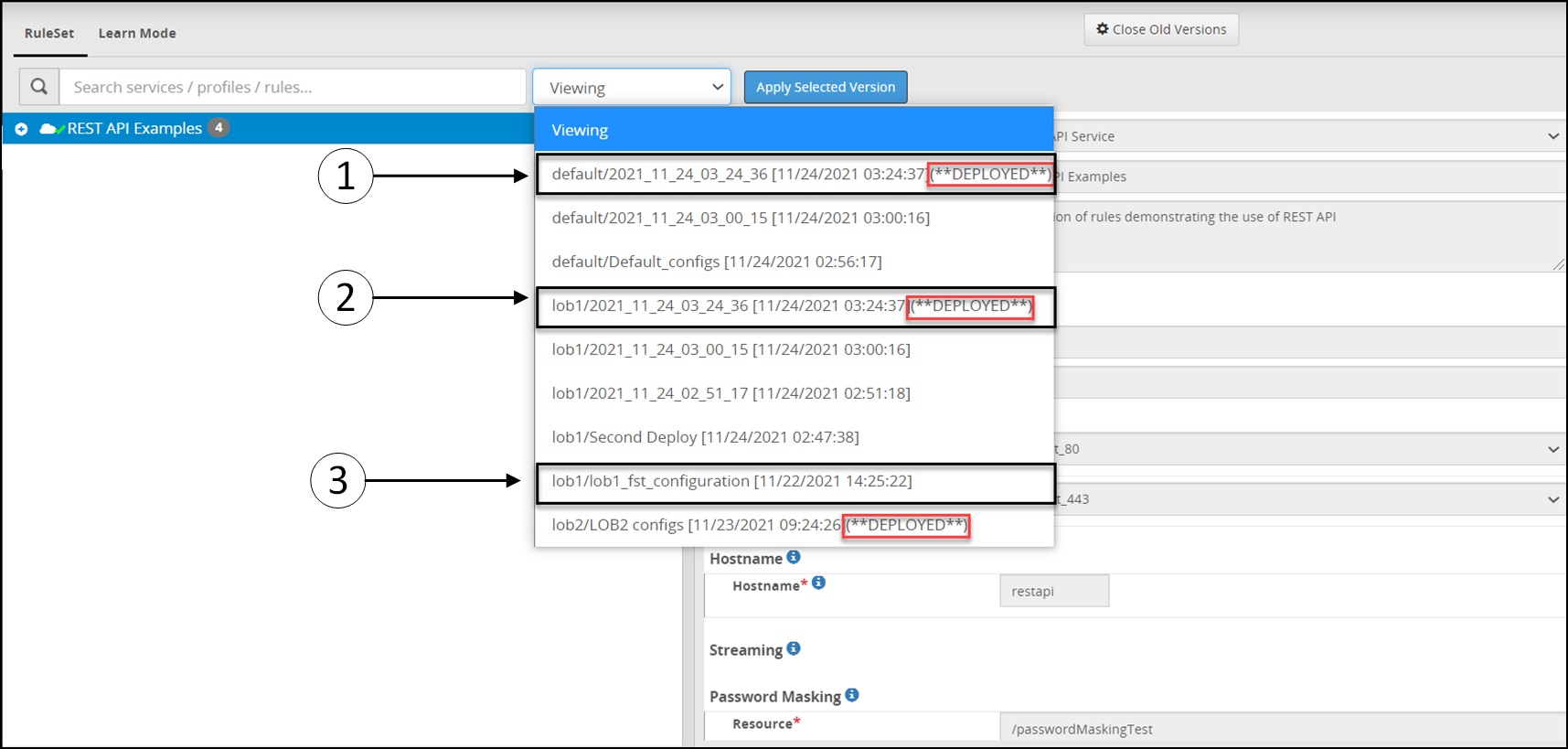

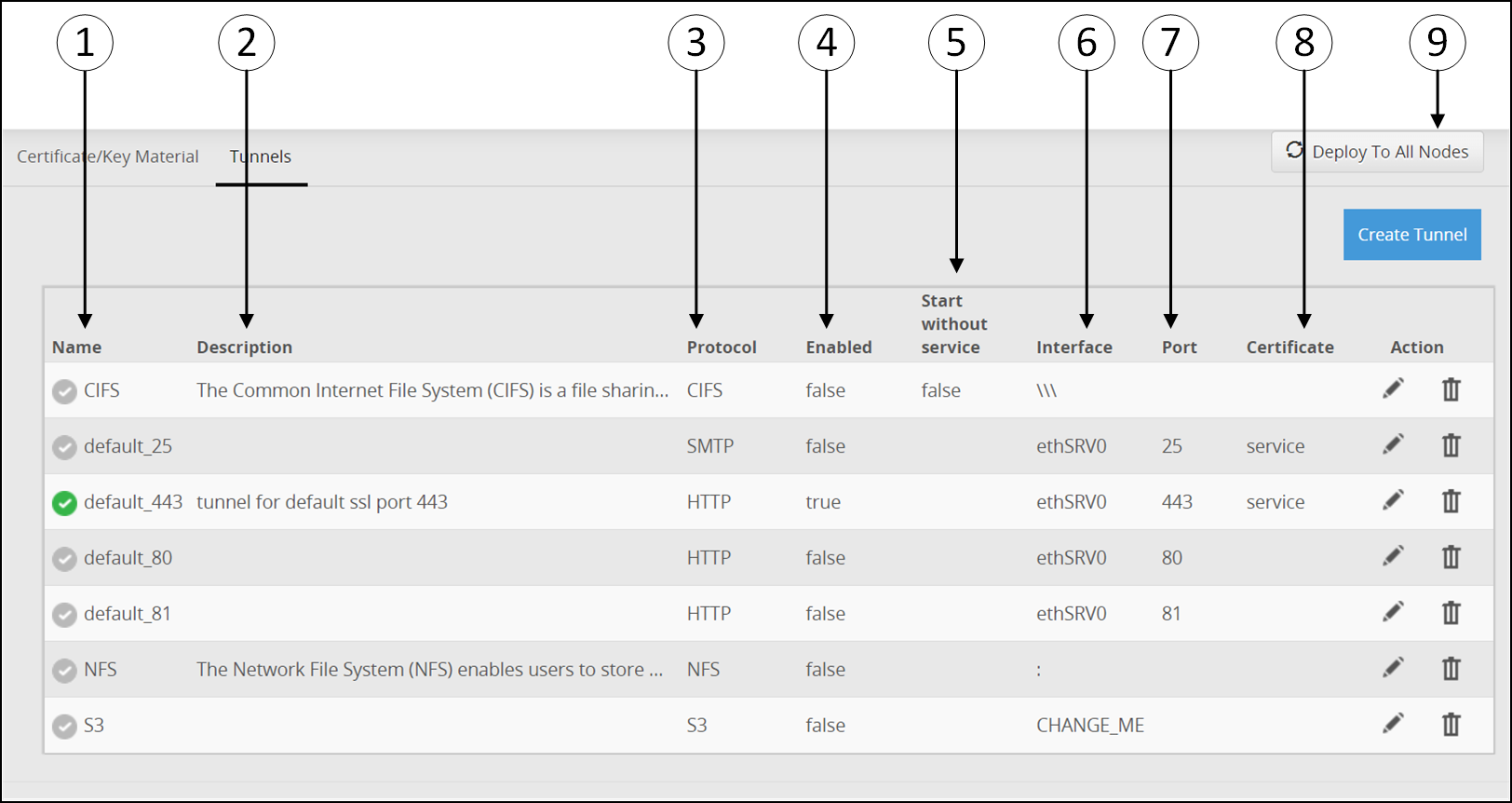

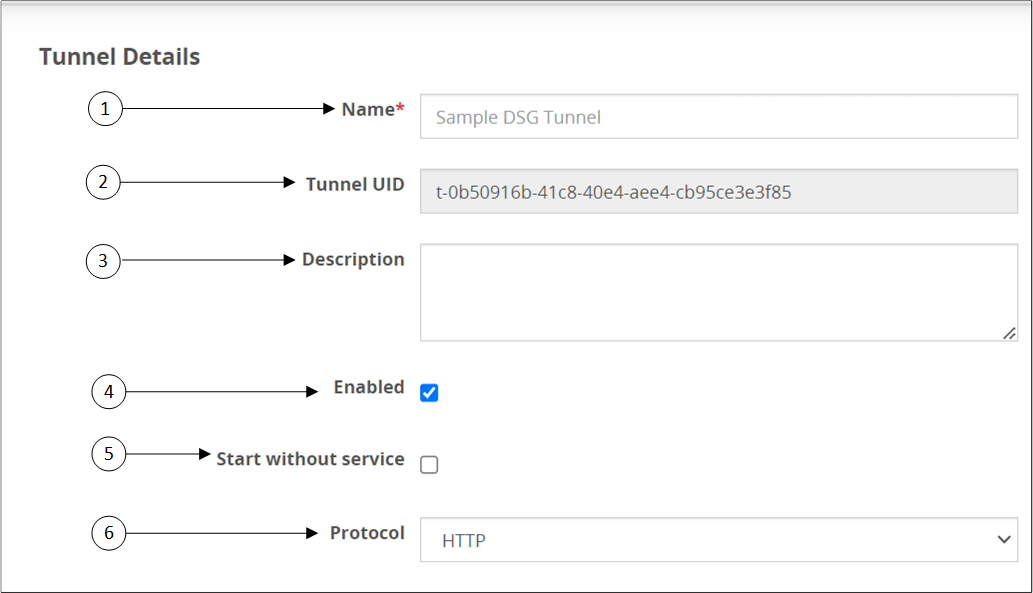

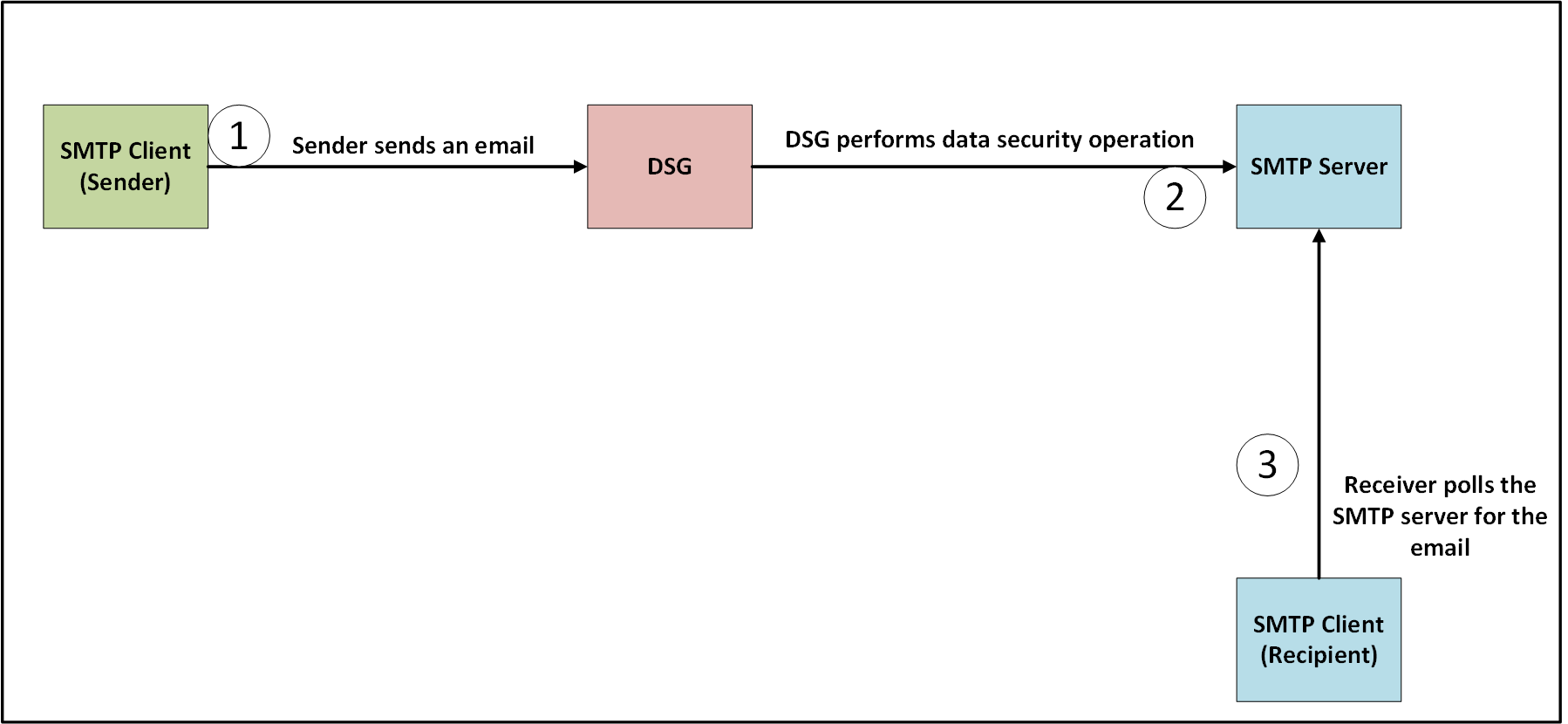

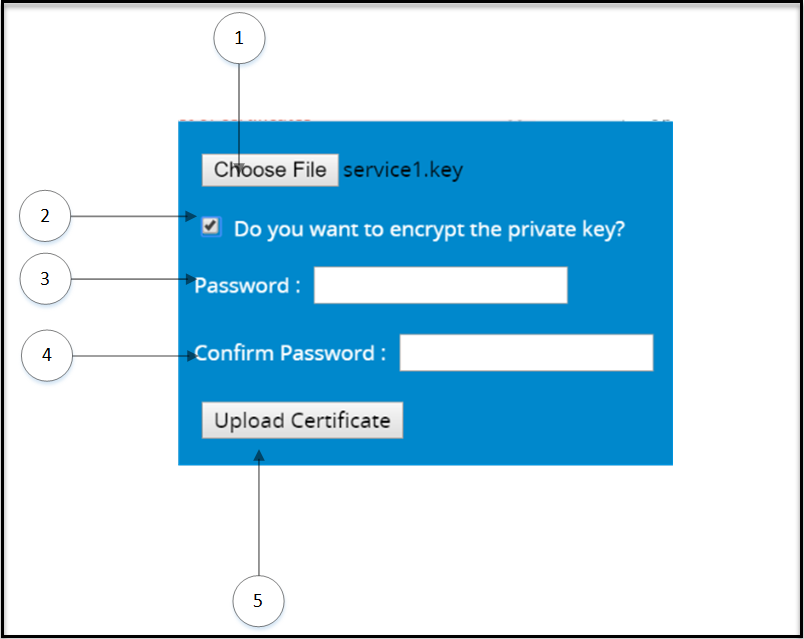

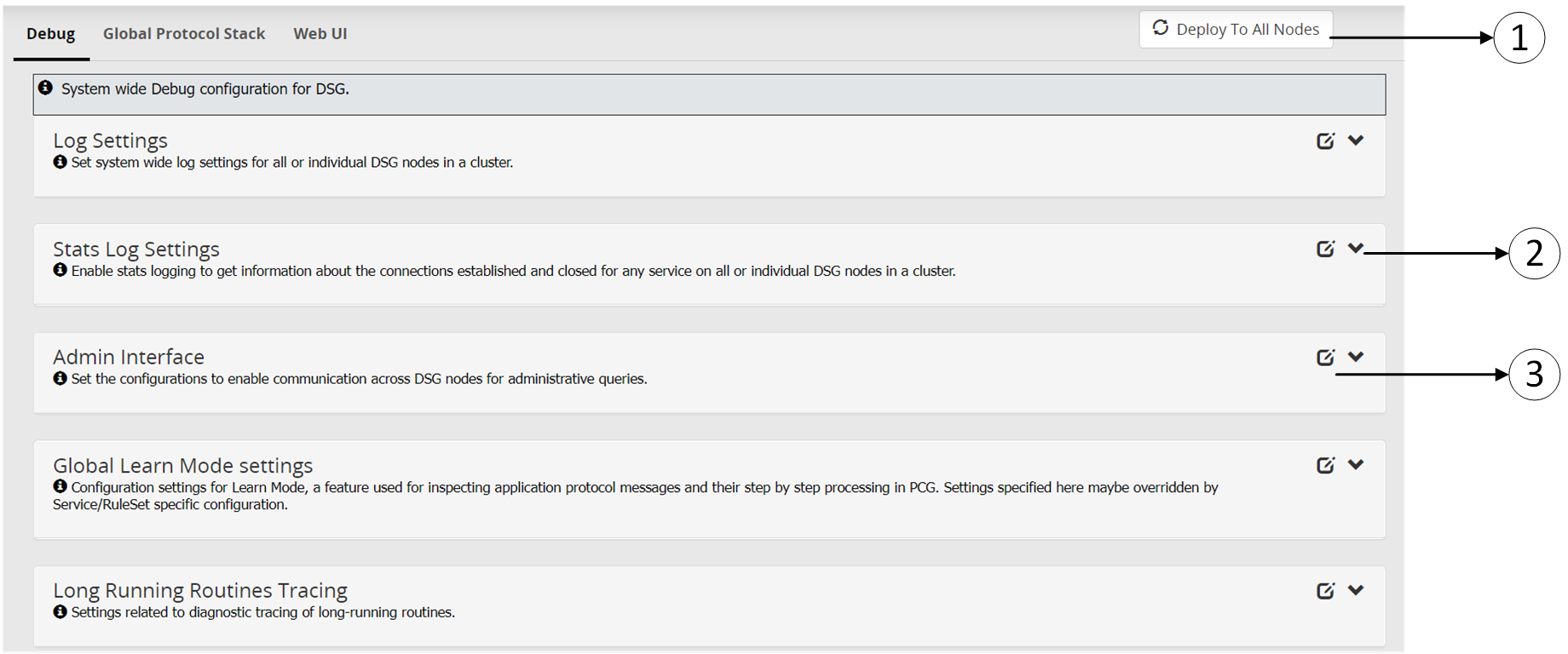

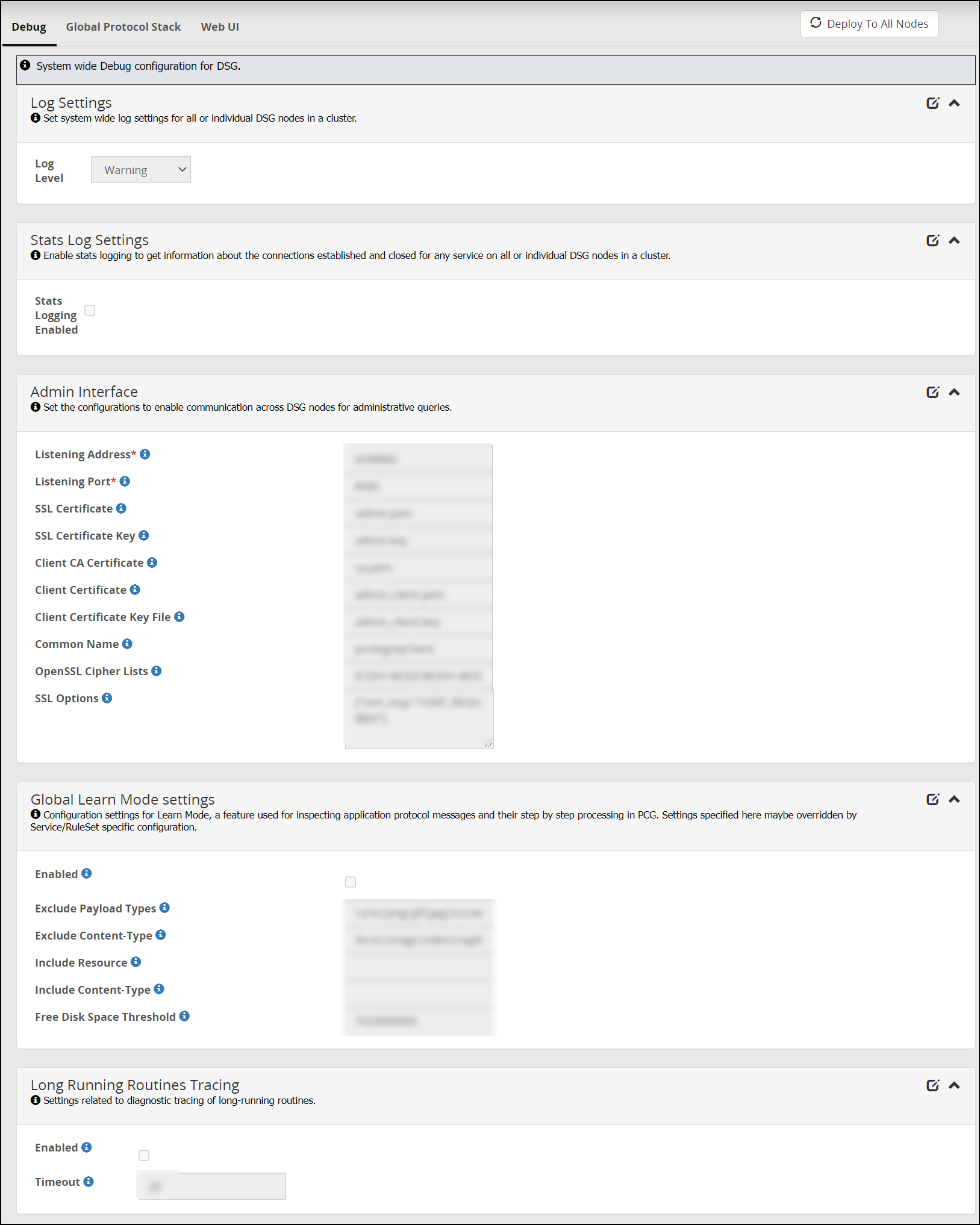

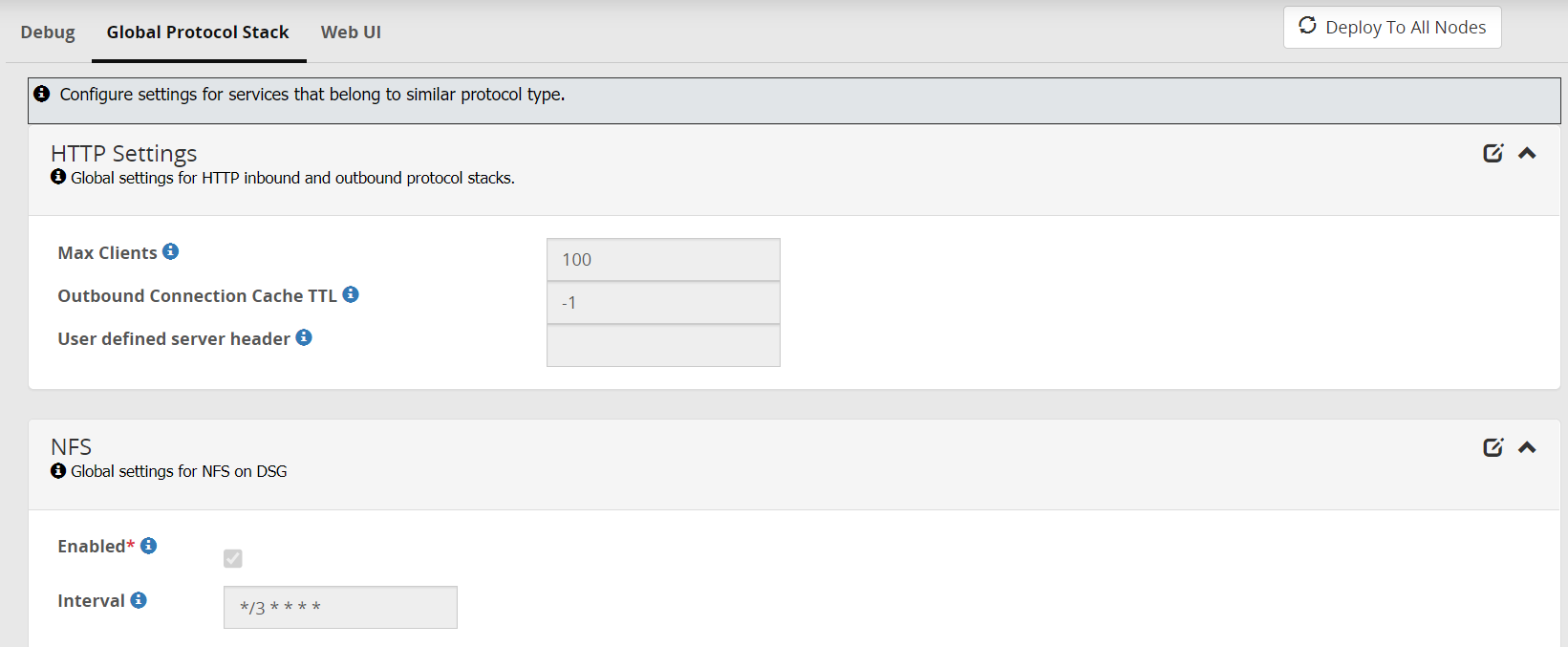

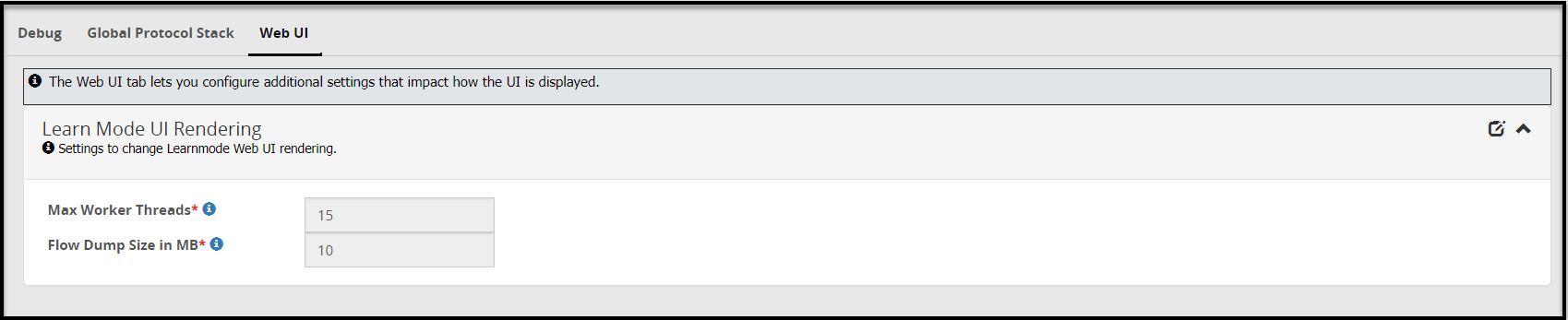

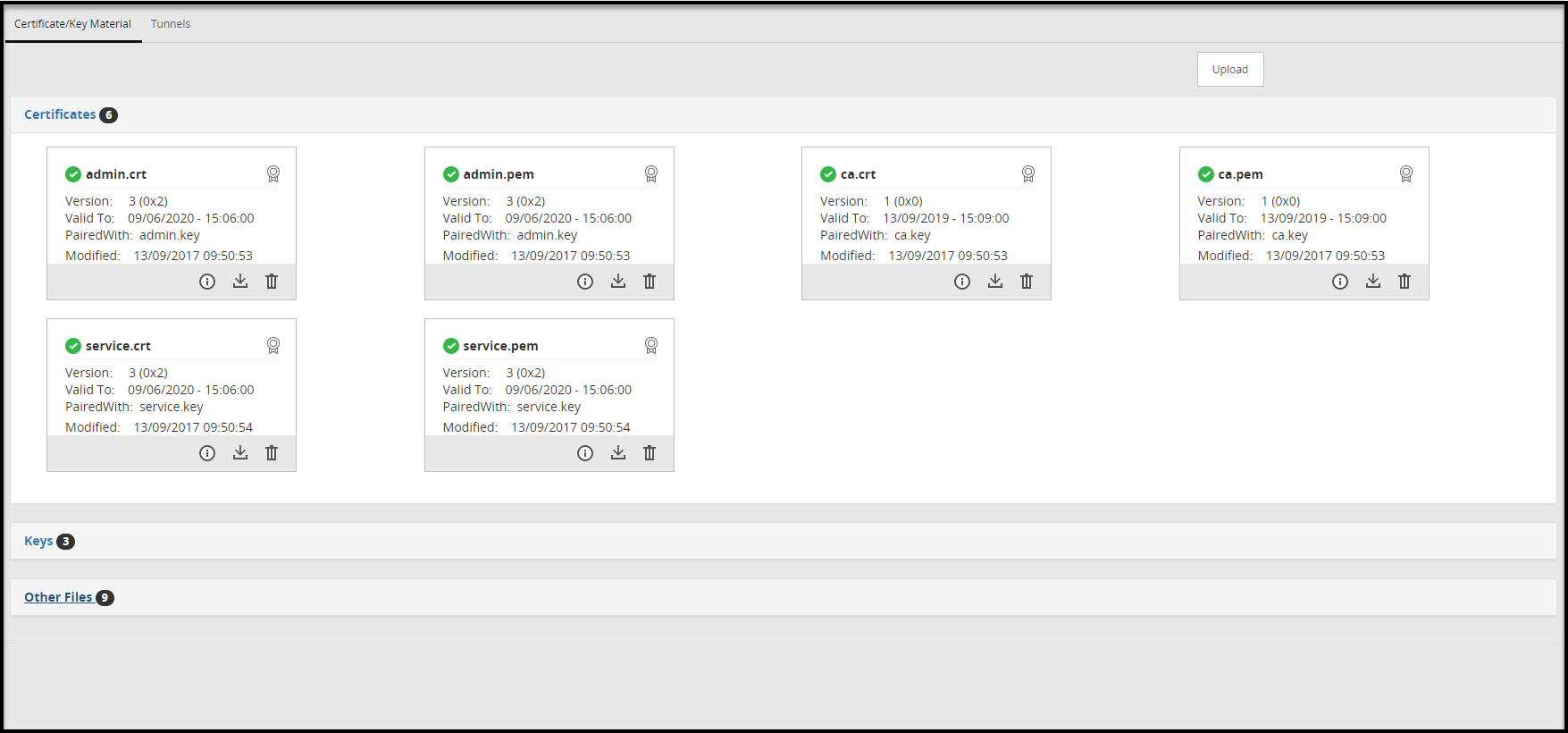

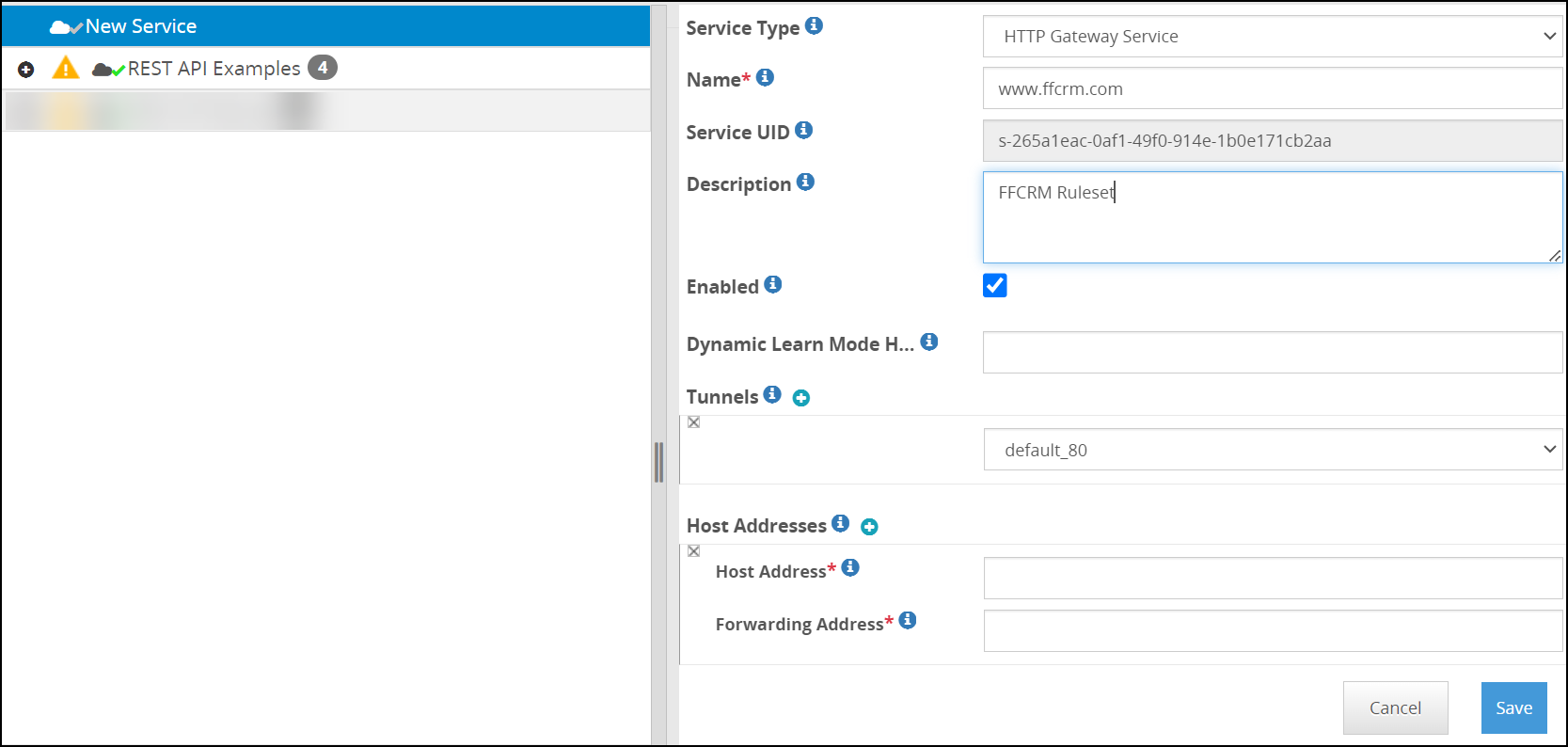

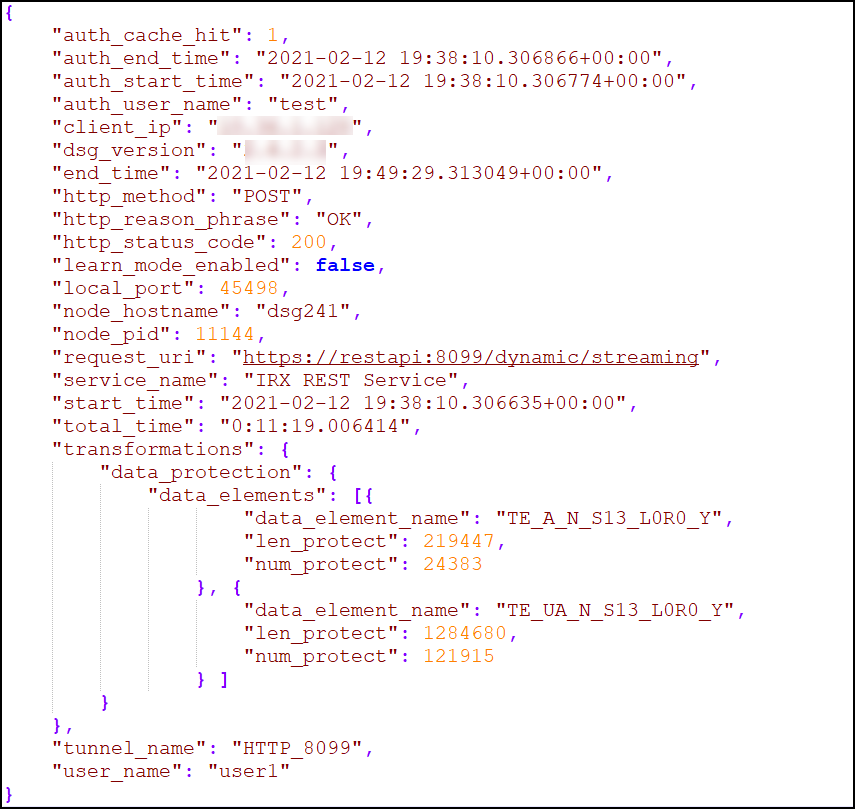

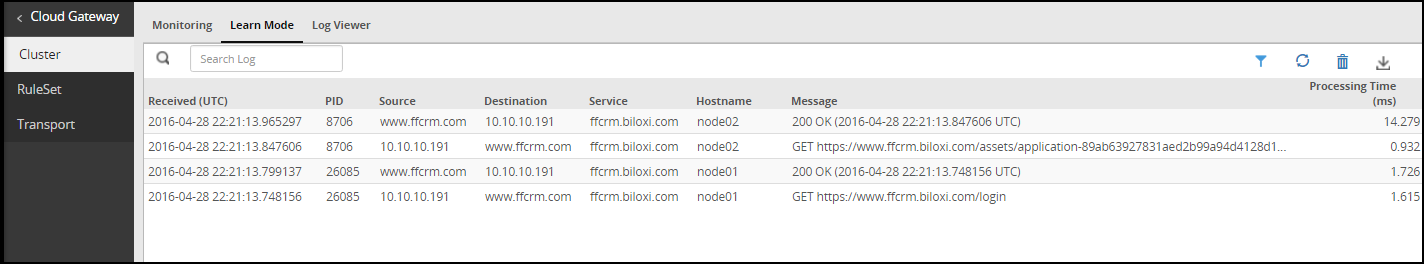

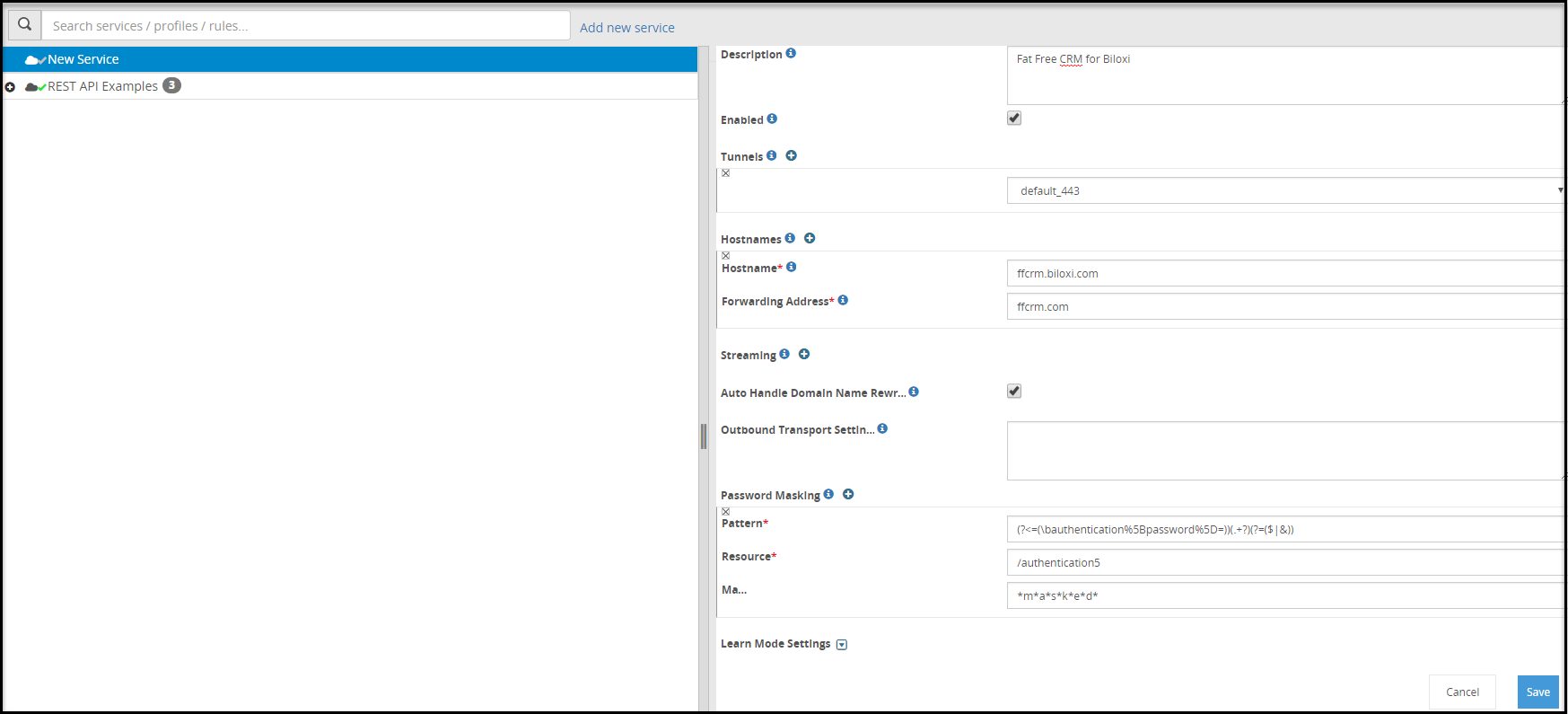

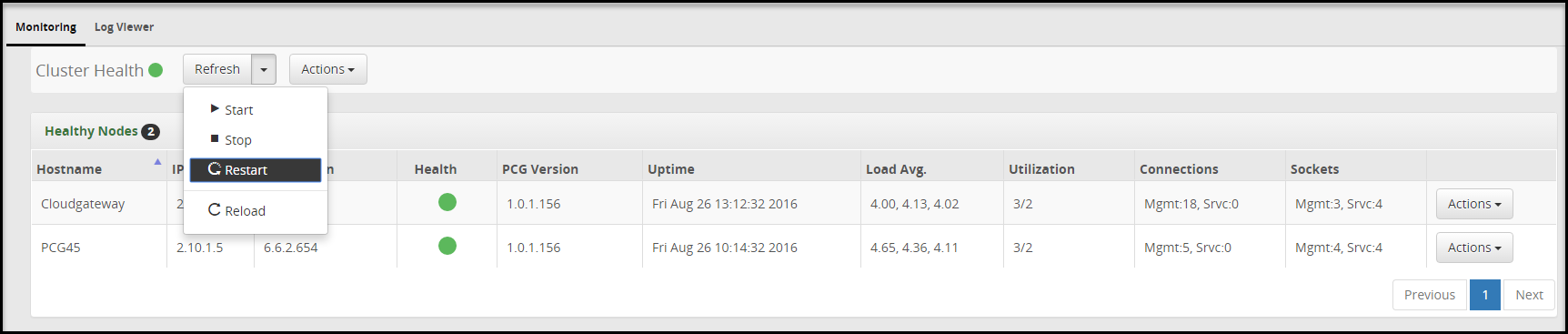

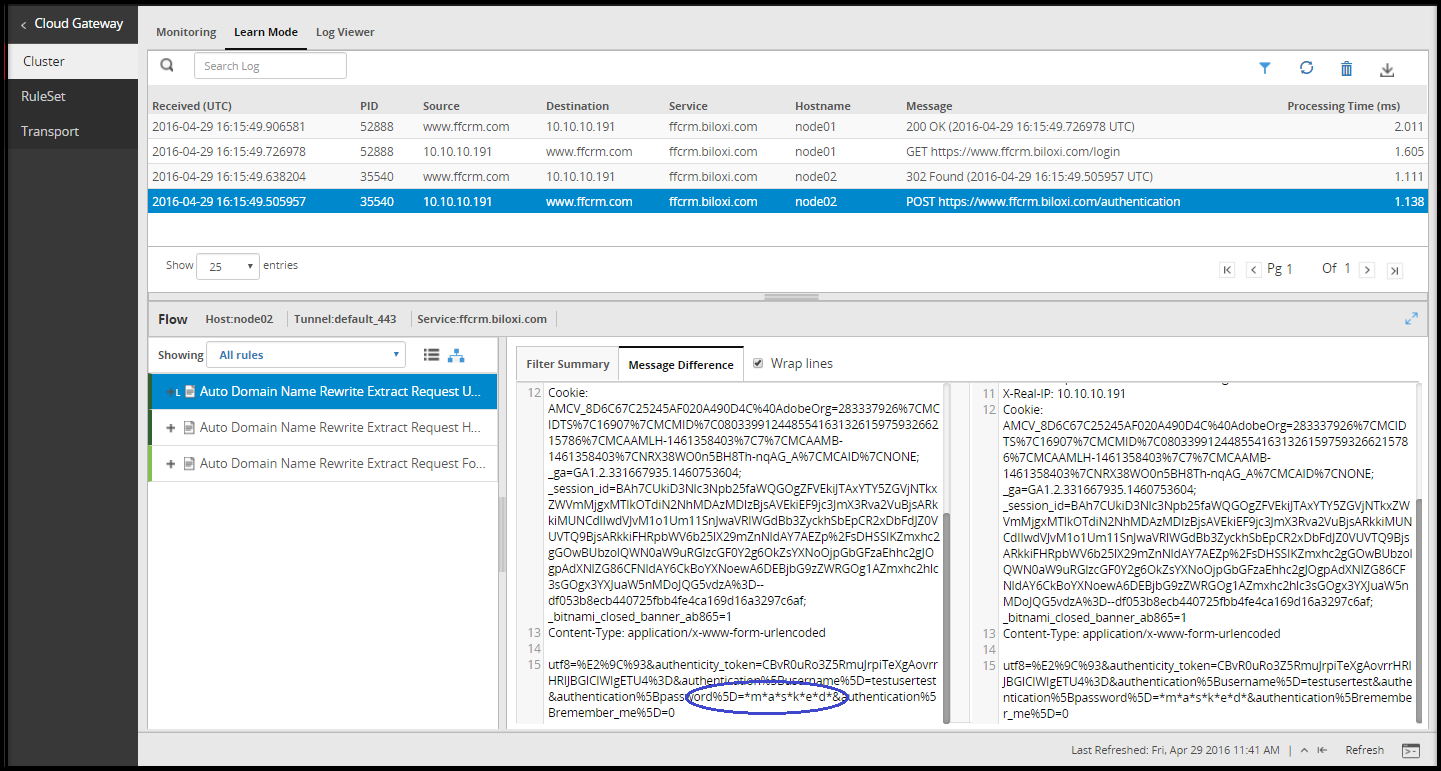

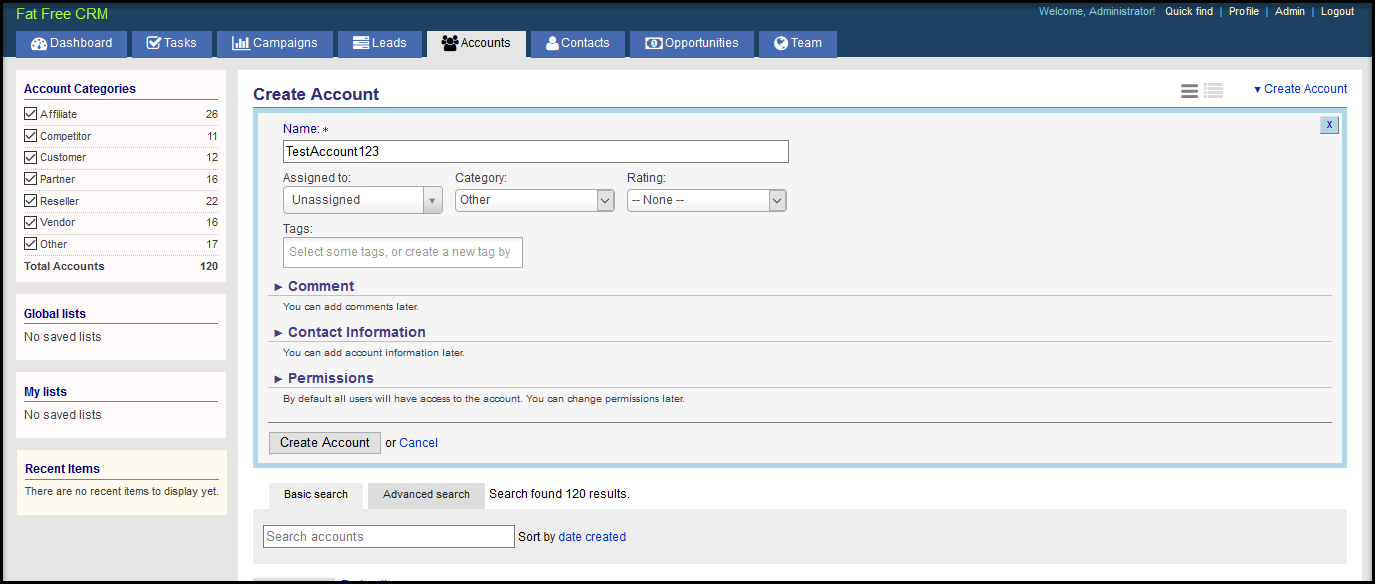

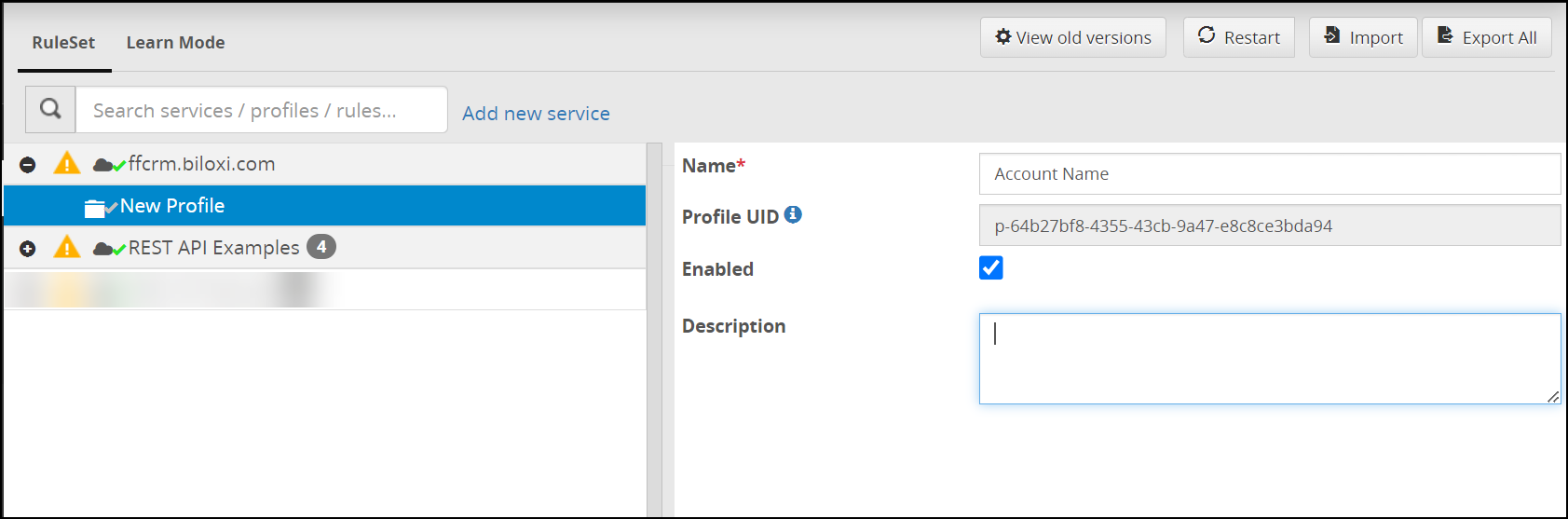

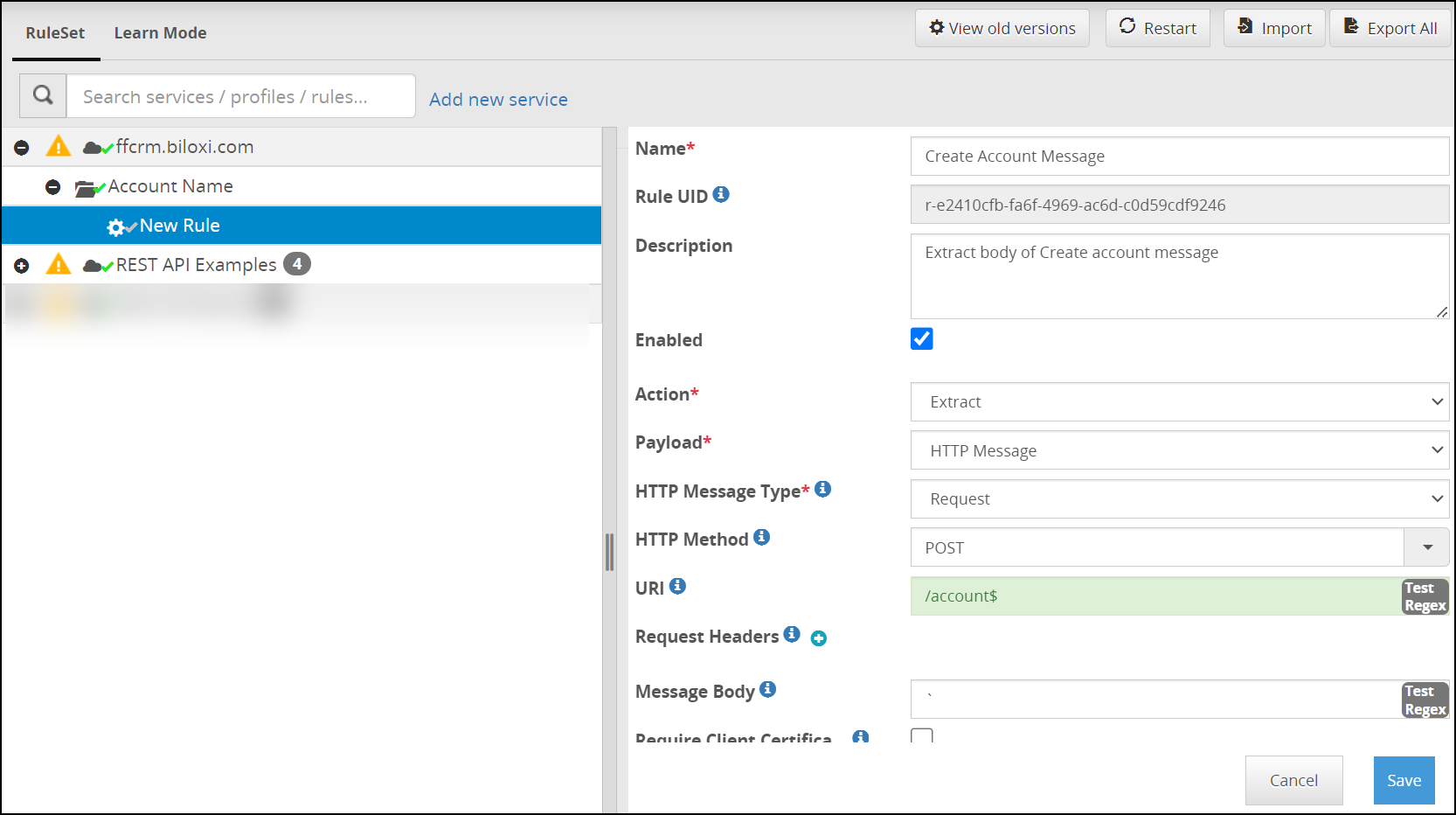

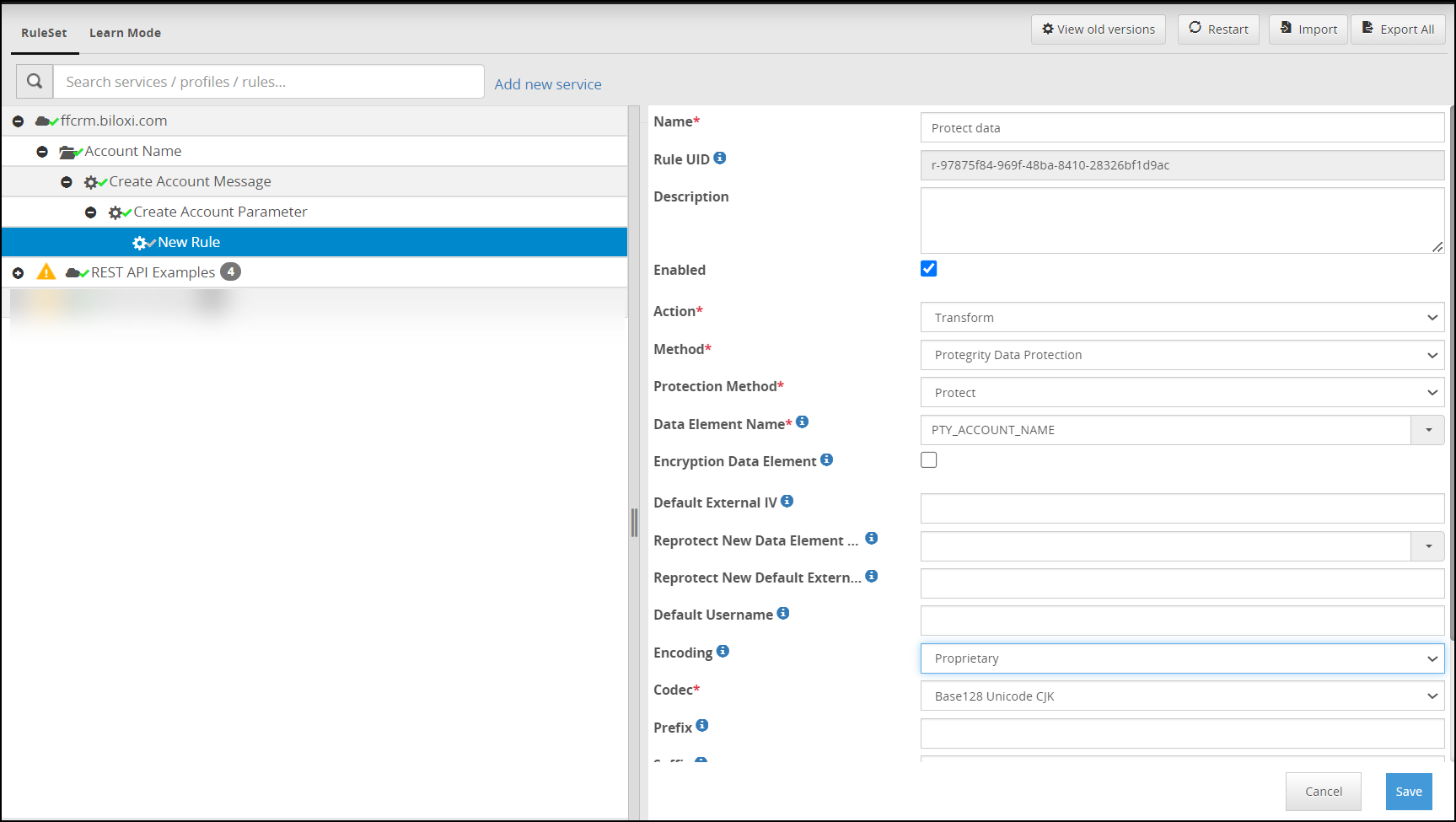

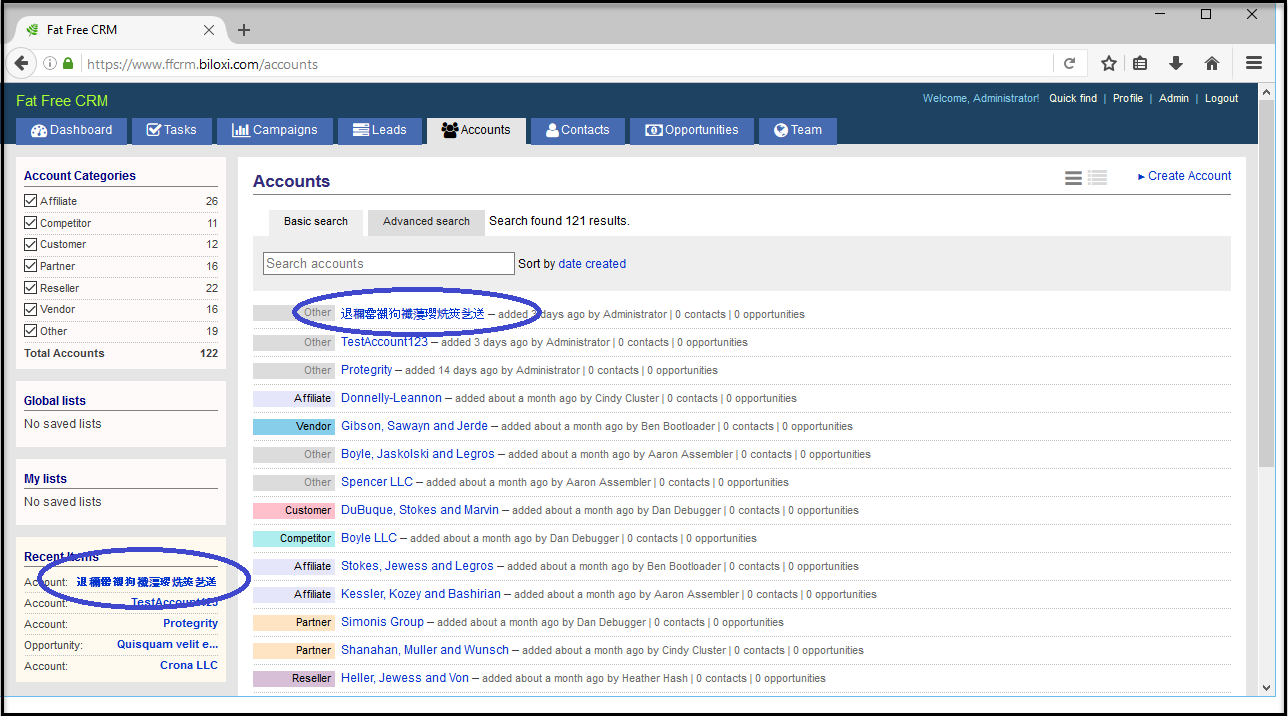

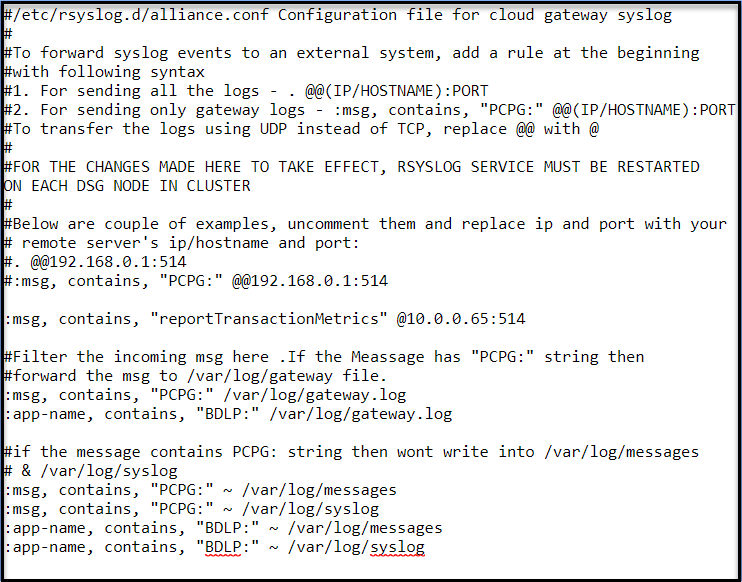

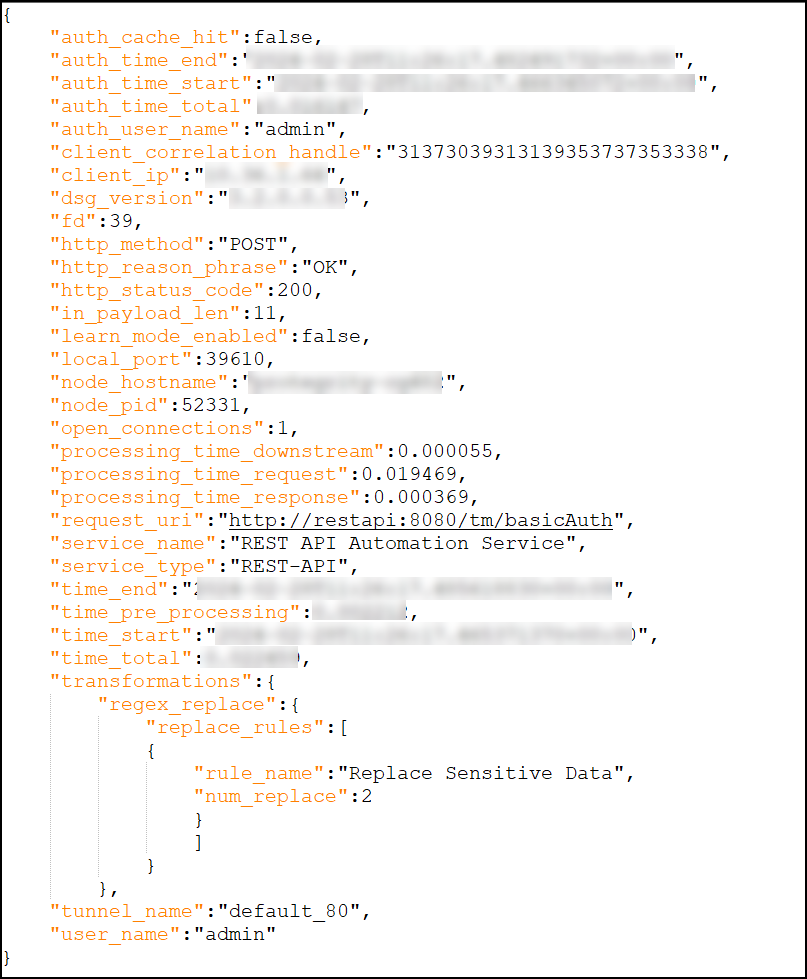

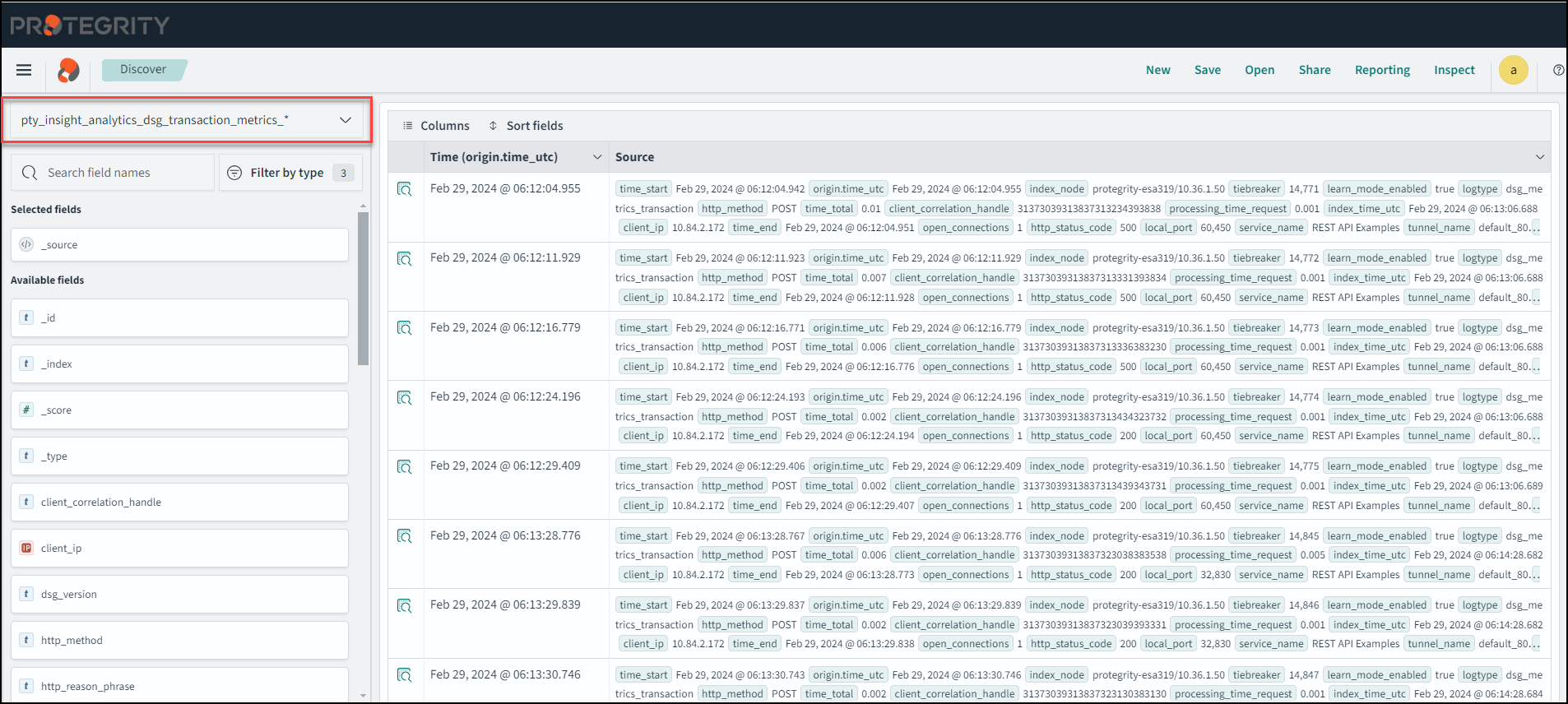

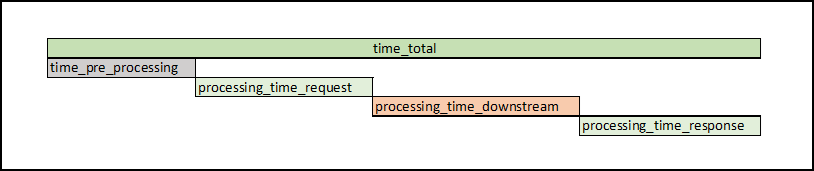

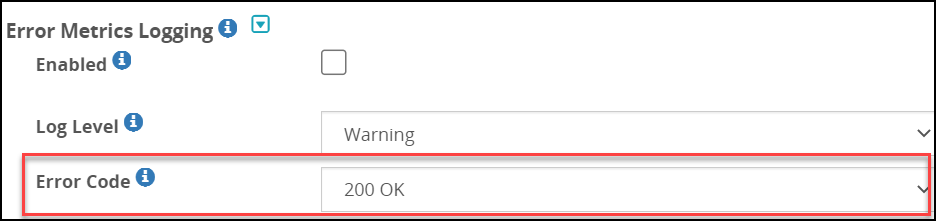

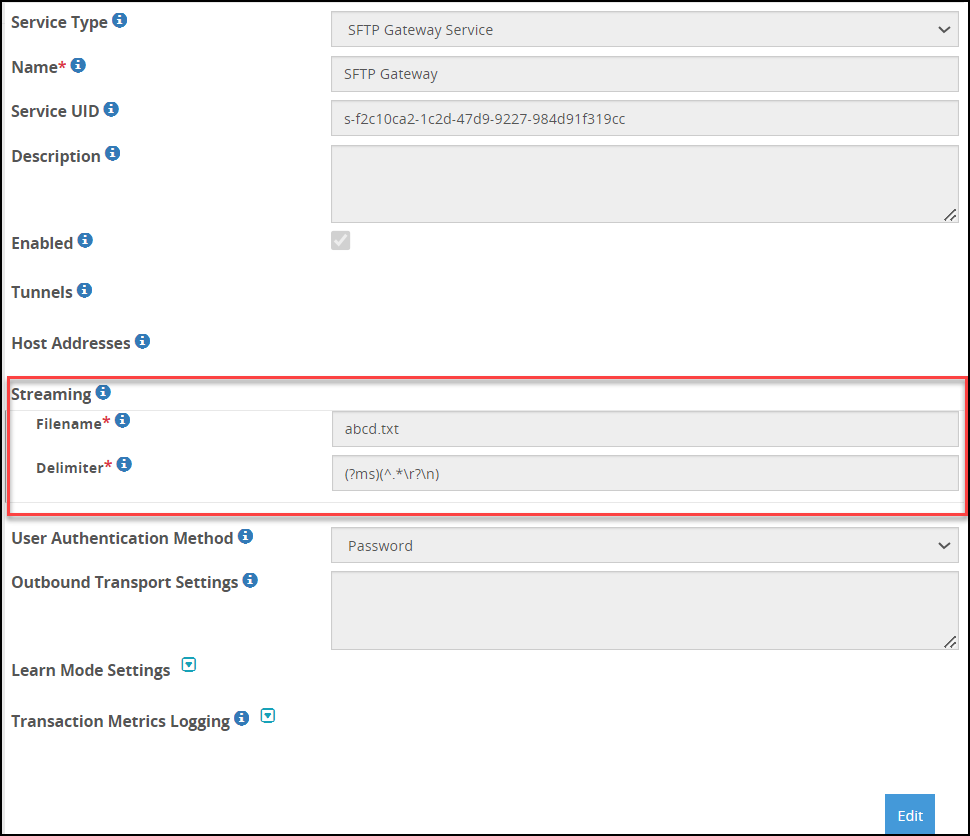

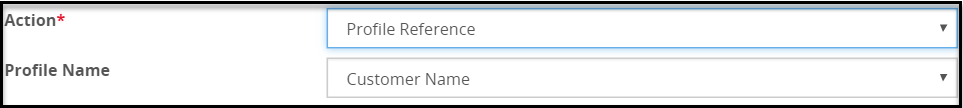

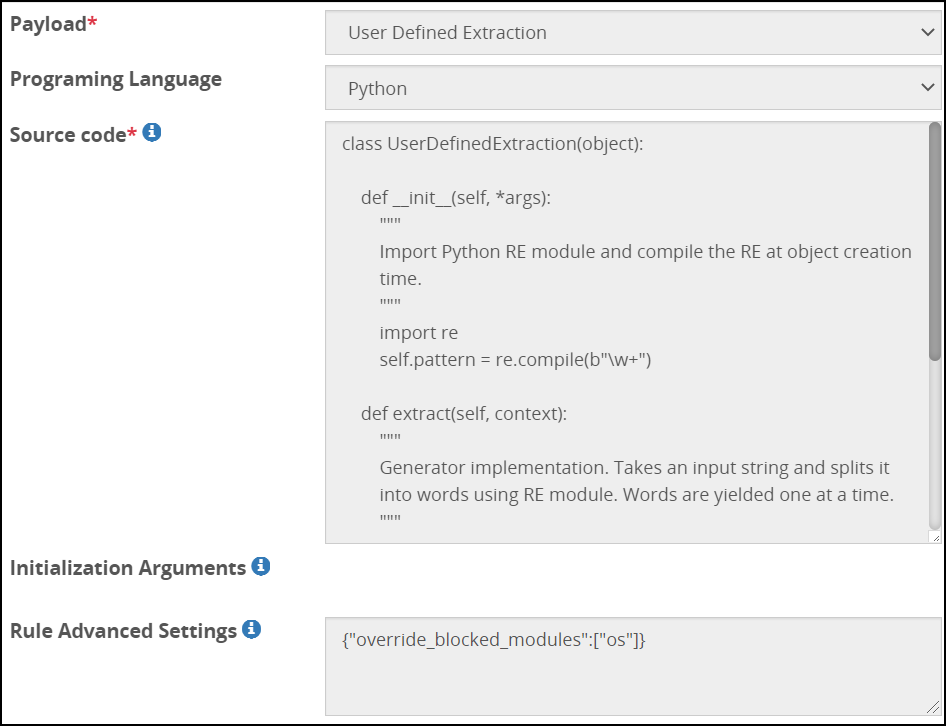

The final OUTPUT.conf file with the updated content is shown here: